Performance Tuning DAX - Part 1

Introduction

OK, let's get a few things out of the way right off the bat.

First and foremost, I do not consider myself a DAX performance optimization expert nor do I play one on TV. Truth be told, the vast majority of solutions that I post to the forums are with utmost certainty not optimized DAX code. Believe it or not, that doesn't bother me...at all. The reality is that DAX optimization is difficult and time consuming and, quite frankly, the vast majority of the time the optimization does not matter and is simply a waste of time. Or, the optimization makes the code less readable. We all remember the bad old days of Perl code. For the vast majority of use cases, if the DAX runs in 600 milliseconds or 300 milliseconds, the average user is not going to be all that upset. It's still sub-second response times. What matters most is a solution. Once you have a solution it can always be optimized if necessary.

So, with that out of the way, on to the next useless pre-emptive clarification. This post is about DAX optimization. Not data model optimization. Not Power Query optimization. Not, pick a topic, optimization. DAX optimization. If you want to leave comments about other optimizations that are not DAX, write your own blog article on them. That's not the purpose of this post.

Third clarification, I make no claims that this is the "most" optimized DAX code. In fact, I would LOVE to see feedback/comments on even more efficient DAX code. I have attached the PBIX file I used to this post.

So, what is this post about? Simple. A community member presented a problem where Power BI was taking 30 minutes to render a visualization. Let that sink in, 30 minutes. That's a lot of minutes folks. This post simply documents my efforts to optimize the DAX as much as possible. Now, that 30 minutes was more like 10 minutes on my machine. And I have a rickety old Surface Pro 4 with a mere 16 GB of memory so I feel bad for that guy... Anyway, I was able to get this calculation down to about 20 seconds, which is a reasonable amount of improvement. This post is about presenting to the community some lessons learned in this journey. Your mileage may vary because DAX optimization is a complex, tricky and relatively unexplored area. And, better yet, it is going to vary by circumstance. But, I felt that there were enough lessons learned here to open the discussion up to a wider audience. Maybe, maybe not.

The Original Problem

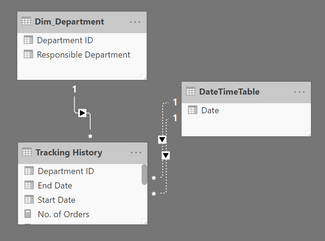

So the original problem involved three tables within a larger data model that looked like the following:

The fact table involved, Tracking History, only had about 60,000 rows in it, which made this problem even more intriguing. DAX performance issues are relatively rare and to have one occur on such a small dataset is somewhat surprising.

There were two measures involved:

No. of Orders =

VAR StartDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[Start Date]) )

VAR EndDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[End Date] ) )

VAR MinDateInContext = VALUE ( MIN ( 'DateTimeTable'[Date] ) )

VAR MaxDateInContext = VALUE ( MAX ( DateTimeTable[Date] ) )

RETURN

IF (

AND ( StartDate > MinDateInContext, EndDate < MaxDateInContext ),

1,

IF (

and(AND (StartDate > MinDateInContext, EndDate>MaxDateInContext),MaxDateInContext>StartDate),

1,

IF (

and( AND ( StartDate < MinDateInContext, EndDate < MaxDateInContext ),EndDate>MinDateInContext),

1,

IF (

AND ( StartDate < MinDateInContext, EndDate > MaxDateInContext ),

1,

BLANK ()

)

)

)

)

Total Orders = SUMX('Tracking History',[No. of Orders])

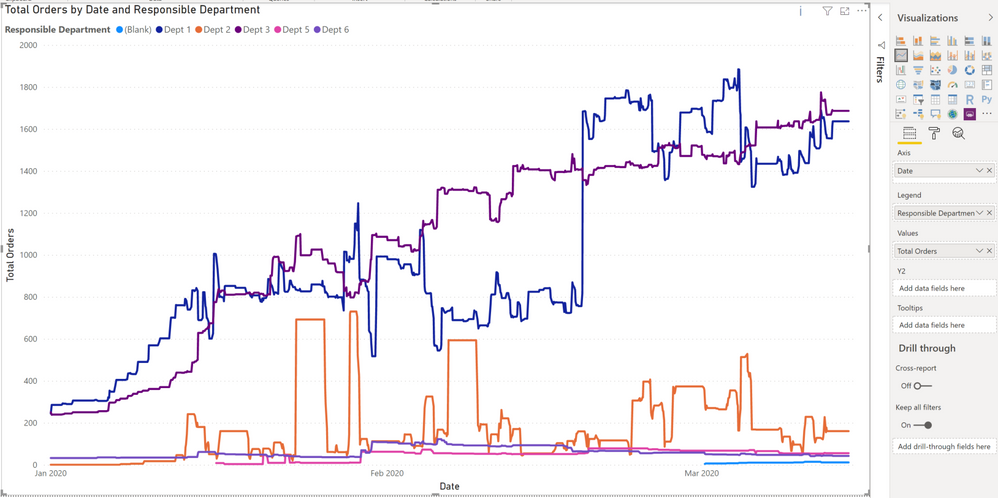

This creates the following visualization:

In Performance Analyzer, this visual took 595,908 milliseconds to render, or about 9 minutes, 56 seconds.
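Read as a whole, the four branches of No. of Orders describe the four ways an order's start/end interval can overlap the date window in context. The sketch below is a plain Python model of that logic, not DAX and not a claim about how the engine evaluates it. The function names, the integer grid standing in for dates and the window bounds are all my own assumptions. It checks that, as long as no start lands exactly on the window minimum and no end lands exactly on the window maximum, the four branches agree with a single overlap test.

```python
from itertools import product

def no_of_orders(start, end, lo, hi):
    """Model of the original nested IFs; returns 1 or None (BLANK)."""
    if start > lo and end < hi:                  # entirely inside the window
        return 1
    if start > lo and end > hi and hi > start:   # starts inside, ends after
        return 1
    if start < lo and end < hi and end > lo:     # starts before, ends inside
        return 1
    if start < lo and end > hi:                  # spans the whole window
        return 1
    return None

def overlaps(start, end, lo, hi):
    """Single strict overlap test; returns 1 or None (BLANK)."""
    return 1 if (start < hi and end > lo) else None

LO, HI = 10, 20  # hypothetical window bounds (integers standing in for dates)

# Well-formed rows only (start <= end), skipping exact boundary ties.
rows = [(s, e) for s, e in product(range(0, 31), repeat=2)
        if s <= e and s != LO and e != HI]

mismatches = [(s, e) for s, e in rows
              if no_of_orders(s, e, LO, HI) != overlaps(s, e, LO, HI)]
print(len(rows), "rows checked,", len(mismatches), "mismatches")
```

The boundary ties are skipped on purpose: every branch uses strict comparisons, so in this model a row that starts exactly on the first date in context, or ends exactly on the last, falls through to BLANK.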

Step 1

OK, step one, let's clean up the code. Nested IF's. Ack! Let's change those to a SWITCH statement.

No. of Orders 2a =

VAR StartDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[Start Date]) )

VAR EndDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[End Date] ) )

VAR MinDateInContext = VALUE ( MIN ( 'DateTimeTable'[Date] ) )

VAR MaxDateInContext = VALUE ( MAX ( DateTimeTable[Date] ) )

RETURN

SWITCH(TRUE(),

AND ( StartDate > MinDateInContext, EndDate < MaxDateInContext ),1,

AND( AND (StartDate > MinDateInContext, EndDate > MaxDateInContext),MaxDateInContext > StartDate),1,

AND( AND ( StartDate < MinDateInContext, EndDate < MaxDateInContext ),EndDate > MinDateInContext),1,

AND ( StartDate < MinDateInContext, EndDate > MaxDateInContext ),1,

BLANK()

)

I also tried this using && instead of AND, like this:

No. of Orders 2 =

VAR StartDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[Start Date]) )

VAR EndDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[End Date] ) )

VAR MinDateInContext = VALUE ( MIN ( 'DateTimeTable'[Date] ) )

VAR MaxDateInContext = VALUE ( MAX ( DateTimeTable[Date] ) )

RETURN

SWITCH(TRUE(),

StartDate > MinDateInContext && EndDate < MaxDateInContext,1,

(MaxDateInContext > StartDate && StartDate > MinDateInContext) && EndDate > MaxDateInContext,1,

(StartDate < MinDateInContext && EndDate < MaxDateInContext) && EndDate > MinDateInContext,1,

StartDate < MinDateInContext && EndDate > MaxDateInContext,1,

BLANK()

)

Performance analyzer results were as follows:

- No. of Orders 2a, Total Orders 2a, 606,392 milliseconds or 10 minutes, 6 seconds

- No. of Orders 2, Total Orders 2, 613,939 milliseconds or 10 minutes, 14 seconds

So, first couple of lessons: no real impact on performance from using SWITCH instead of nested IF statements. Also, no impact from using AND functions versus inline &&. These times are slightly longer, but a 2-3% difference is within normal run-to-run variation, as the same query will run slightly longer or shorter depending on a range of factors. But, the code is definitely more readable!

Step 2

Now that we can better see the logic involved, it is evident that the logic is filtering out certain rows and assigning a value of 1 while the remainder that do not fit the criteria are given a value of BLANK. However, in the visual, no more than 2,000 is displayed as a sum of all of the 1's and there are 60,000 rows in the fact table. This means that the vast majority of our rows have to be tested for a bunch of conditions before they "fall through" to be assigned BLANK. Thus, it follows that if we reverse the logic we will eliminate rows faster and thus there will be less testing involved and hence less processing and calculation.

No. of Orders 3 =

VAR StartDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[Start Date]) )

VAR EndDate = VALUE ( SELECTEDVALUE ( 'Tracking History'[End Date] ) )

VAR MinDateInContext = VALUE ( MIN ( 'DateTimeTable'[Date] ) )

VAR MaxDateInContext = VALUE ( MAX ( DateTimeTable[Date] ) )

RETURN

SWITCH(TRUE(),

AND ( StartDate > MinDateInContext, EndDate > MaxDateInContext ),BLANK(),

AND( AND (StartDate > MinDateInContext, EndDate > MaxDateInContext),MaxDateInContext < StartDate),BLANK(),

AND( AND ( StartDate < MinDateInContext, EndDate < MaxDateInContext ),EndDate < MinDateInContext),BLANK(),

AND ( StartDate < MinDateInContext, EndDate < MaxDateInContext ),BLANK(),

1

)

Performance analyzer results were as follows:

- No. of Orders 3, Total Orders 3, 285,969 milliseconds or 4 minutes, 46 seconds

Aha! We've knocked the calculation time down by half! So, lesson learned, when performing conditional logic tests, structure your code such that you eliminate the most rows as early as possible.
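Reversing the logic only counts as an optimization if the reversed measure still returns the same answer, so it is worth checking that before trusting the new timing. The sketch below models No. of Orders 2a and No. of Orders 3 as Python functions. The translation is my own, with an integer grid standing in for dates and hypothetical window bounds. It groups any disagreements by how the row sits relative to the window. Under this model the two are not equivalent: rows that start inside the window and end after it, and rows that start before it and end inside it, get 1 from the original but BLANK from the reversed version. Compare the totals in the visual before keeping a change like this.

```python
from collections import Counter
from itertools import product

def original(s, e, lo, hi):
    """First-match model of No. of Orders 2a; returns 1 or None (BLANK)."""
    tests = [
        s > lo and e < hi,
        s > lo and e > hi and hi > s,
        s < lo and e < hi and e > lo,
        s < lo and e > hi,
    ]
    return 1 if any(tests) else None

def reversed_logic(s, e, lo, hi):
    """First-match model of No. of Orders 3; returns 1 or None (BLANK)."""
    blanks = [
        s > lo and e > hi,
        s > lo and e > hi and hi < s,
        s < lo and e < hi and e < lo,
        s < lo and e < hi,
    ]
    return None if any(blanks) else 1

def shape(s, e, lo, hi):
    """Label how a row sits relative to the window."""
    if lo < s < hi < e:
        return "starts inside, ends after"
    if s < lo < e < hi:
        return "starts before, ends inside"
    return "other"

LO, HI = 10, 20  # hypothetical window bounds
rows = [(s, e) for s, e in product(range(31), repeat=2)
        if s <= e and s != LO and e != HI]

diff = Counter(shape(s, e, LO, HI) for s, e in rows
               if original(s, e, LO, HI) != reversed_logic(s, e, LO, HI))
print(dict(diff))
```

A brute-force comparison like this is cheap to run for every rewrite, and it catches cases that are easy to miss when reading nested AND calls.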

Step 3

Thus far I have been creating two measures for each step, creating a new Total Orders measure along with my new No. of Orders measure. This is a pain and I'm lazy so let's combine this into a single measure:

Total Orders 4 =

VAR MinDateInContext = VALUE ( MIN ( 'DateTimeTable'[Date] ) )

VAR MaxDateInContext = VALUE ( MAX ( DateTimeTable[Date] ) )

VAR __Table =

ADDCOLUMNS(

'Tracking History',

"__No of Orders",

SWITCH(TRUE(),

AND (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

),BLANK(),

AND(

AND (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

),

MaxDateInContext < 'Tracking History'[Start Date]

),BLANK(),

AND(

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),

'Tracking History'[End Date] < MinDateInContext

),BLANK(),

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),BLANK(),

1

)

)

RETURN

SUMX(__Table,[__No of Orders])

Performance analyzer results were as follows:

- Total Orders 4, 57,010 milliseconds, 57 seconds

Wow! Perhaps unexpectedly this really improved performance!! The lesson learned here? Being lazy is a good thing? Difficult to say but likely has something to do with internal DAX optimization. Having all of the code in a single measure helps DAX optimize the query, for example.
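One possible reading of this result, and only an analogy, is that the single measure reads the window bounds once into variables, whereas a per-row measure may end up working them out again for every row it is called on. The Python sketch below illustrates that difference with a call counter. All names are hypothetical, and nothing here shows how the DAX engine actually evaluates either version.

```python
calls = {"bounds": 0}

def window_bounds(dates):
    """Stand-in for MIN/MAX over the date table in context."""
    calls["bounds"] += 1
    return min(dates), max(dates)

def flag(s, e, lo, hi):
    """Hypothetical per-row test: 1 if the row overlaps the window."""
    return 1 if (s < hi and e > lo) else 0

def total_two_measures(rows, dates):
    """Per-row 'measure' that looks up the bounds again on every row."""
    total = 0
    for s, e in rows:
        lo, hi = window_bounds(dates)
        total += flag(s, e, lo, hi)
    return total

def total_single_measure(rows, dates):
    """Bounds read once into variables, then reused for every row."""
    lo, hi = window_bounds(dates)
    return sum(flag(s, e, lo, hi) for s, e in rows)

dates = list(range(10, 21))                # hypothetical date window
rows = [(s, s + 3) for s in range(0, 30)]  # hypothetical fact rows

calls["bounds"] = 0
a = total_two_measures(rows, dates)
per_row_calls = calls["bounds"]

calls["bounds"] = 0
b = total_single_measure(rows, dates)
hoisted_calls = calls["bounds"]

print(a == b, per_row_calls, hoisted_calls)
```

Both versions return the same total. Only the number of bound lookups changes, from one per row to one overall.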

Step 4

Now that we have reversed the logic and have this all in one measure, we can clearly see that we could move the first logic test into a FILTER of the table. So pre-filter the table so we do not have to do the first logic test. Let's see what that does:

Total Orders 5 =

VAR MinDateInContext = VALUE ( MIN ( 'DateTimeTable'[Date] ) )

VAR MaxDateInContext = VALUE ( MAX ( DateTimeTable[Date] ) )

VAR __Table =

ADDCOLUMNS(

FILTER(

'Tracking History',

OR (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

)

),

"__No of Orders",

SWITCH(TRUE(),

AND(

AND (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

),

MaxDateInContext < 'Tracking History'[Start Date]

),BLANK(),

AND(

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),

'Tracking History'[End Date] < MinDateInContext

),BLANK(),

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),BLANK(),

1

)

)

RETURN

SUMX(__Table,[__No of Orders])

Performance analyzer results were as follows:

- Total Orders 5, 44,158 milliseconds, 44 seconds

OK, not as dramatic of an improvement, but still knocked about 25% off of the calculation time. Lesson learned, filter early!
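Moving a BLANK branch into FILTER means keeping only the rows that the branch would not have blanked, so the filter condition has to be the negation of that branch. The sketch below is a plain Python model of my own, with an integer grid standing in for dates and hypothetical bounds. It compares two candidate filters against the rows that survive the first branch of Total Orders 4. In this model the De Morgan form (start <= min OR end <= max) keeps exactly those rows. A filter that reuses the same > comparisons joined with OR keeps a different set, so check that the totals still match after a change like this.

```python
from itertools import product

LO, HI = 10, 20  # hypothetical window bounds
rows = [(s, e) for s, e in product(range(31), repeat=2) if s <= e]

def first_branch(s, e):
    """Model of the branch that returned BLANK first in Total Orders 4."""
    return s > LO and e > HI

# Rows the first branch lets through to the remaining tests.
survivors = {(s, e) for s, e in rows if not first_branch(s, e)}

# Candidate pre-filters.
de_morgan = {(s, e) for s, e in rows if s <= LO or e <= HI}
same_ops = {(s, e) for s, e in rows if s > LO or e > HI}

print(de_morgan == survivors, same_ops == survivors)
```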

Step 5

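Looking closely at our logic, the last two tests overlap: any row caught by the second-to-last test (which adds an extra End Date < MinDateInContext condition) is also caught by the last test. We can get rid of the redundant test.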

Total Orders 6 =

VAR MinDateInContext = VALUE ( MIN ( 'DateTimeTable'[Date] ) )

VAR MaxDateInContext = VALUE ( MAX ( DateTimeTable[Date] ) )

VAR __Table =

ADDCOLUMNS(

FILTER(

'Tracking History',

OR (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

)

),

"__No of Orders",

SWITCH(TRUE(),

AND(

AND (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

),

MaxDateInContext < 'Tracking History'[Start Date]

),BLANK(),

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),BLANK(),

1

)

)

RETURN

SUMX(__Table,[__No of Orders])

Performance analyzer results were as follows:

- Total Orders 6, 43,844 milliseconds, 44 seconds

Nope, no real improvement (likely because DAX already optimized out this redundancy). But, the code is shorter and cleaner so that's a win!

Step 6

Those VALUE statements seem unnecessary, let's get rid of those.

Total Orders 7 =

VAR MinDateInContext = MIN ( 'DateTimeTable'[Date] )

VAR MaxDateInContext = MAX ( DateTimeTable[Date] )

VAR __Table =

ADDCOLUMNS(

FILTER(

'Tracking History',

OR (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

)

),

"__No of Orders",

SWITCH(TRUE(),

AND(

AND (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

),

MaxDateInContext < 'Tracking History'[Start Date]

),BLANK(),

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),BLANK(),

1

)

)

RETURN

SUMX(__Table,[__No of Orders])

Performance analyzer results were as follows:

- Total Orders 7, 32,109 milliseconds, 32 seconds

Wow, another 25% reduction. Lesson learned, stop using VALUE and VALUES unnecessarily! I see a lot of it in the forums. It is costing you performance! Only use those functions if you really need to.

Step 7

In looking at the logic once again and considering our pre-filtering, that first logic test is overly complicated. Let's simplify it.

Total Orders 8 =

VAR MinDateInContext = MIN ( 'DateTimeTable'[Date] )

VAR MaxDateInContext = MAX ( DateTimeTable[Date] )

VAR __Table =

ADDCOLUMNS(

FILTER(

'Tracking History',

OR (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

)

),

"__No of Orders",

SWITCH(TRUE(),

MaxDateInContext < 'Tracking History'[Start Date],

BLANK(),

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),BLANK(),

1

)

)

RETURN

SUMX(__Table,[__No of Orders])

Performance analyzer results were as follows:

- Total Orders 8, 24,208 milliseconds, 24 seconds

Hey! Another 25% or so off the calculation time. Lesson learned, simpler logic is better!
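A simplification like this is safe only if the shorter test fires on exactly the same rows as the longer one. The sketch below is a Python model of my own, with an integer grid standing in for dates and hypothetical bounds. It checks the two tests against each other. In this model they agree for every well-formed row, because a start after the window maximum already implies the other two comparisons when the end is not before the start. A malformed row whose end precedes its start is where they could diverge.

```python
from itertools import product

LO, HI = 10, 20  # hypothetical window bounds, with LO <= HI
rows = [(s, e) for s, e in product(range(31), repeat=2) if s <= e]

def long_test(s, e):
    """Model of the first SWITCH test in Total Orders 7."""
    return (s > LO and e > HI) and HI < s

def short_test(s, e):
    """Model of the simplified test in Total Orders 8."""
    return HI < s

same = all(long_test(s, e) == short_test(s, e) for s, e in rows)

# A malformed row (end before start) is outside the assumption above.
bad_row = (25, 15)
print(same, long_test(*bad_row), short_test(*bad_row))
```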

Step 8

It worked before, what if we move the logic test to the filter clause?

Total Orders 9 =

VAR MinDateInContext = MIN ( 'DateTimeTable'[Date] )

VAR MaxDateInContext = MAX ( DateTimeTable[Date] )

VAR __Table =

ADDCOLUMNS(

FILTER(

'Tracking History',

AND (

OR (

'Tracking History'[Start Date] > MinDateInContext,

'Tracking History'[End Date] > MaxDateInContext

),

MaxDateInContext > 'Tracking History'[Start Date]

)

),

"__No of Orders",

SWITCH(TRUE(),

AND (

'Tracking History'[Start Date] < MinDateInContext,

'Tracking History'[End Date] < MaxDateInContext

),BLANK(),

1

)

)

RETURN

SUMX(__Table,[__No of Orders])

Performance analyzer results were as follows:

- Total Orders 9, 22,467 milliseconds, 22 seconds

OK, maybe a minor performance improvement. It didn't seem to hurt anything, and it's our fastest time yet!
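Since even more efficient versions are welcome, here is one more thing to check: whether the one remaining BLANK branch can ever fire on a row that has passed the filter. The sketch below is a Python model of my own, with an integer grid standing in for dates and hypothetical bounds. In this model the branch is unreachable, because the filter requires start > min or end > max while the branch requires start < min and end < max. That suggests the ADDCOLUMNS/SWITCH/SUMX combination could collapse into a plain row count of the filtered table, for example with COUNTROWS. Whether that is actually faster would need to be measured.

```python
from itertools import product

LO, HI = 10, 20  # hypothetical window bounds
rows = list(product(range(31), repeat=2))  # includes malformed rows too

def keep(s, e):
    """Model of the FILTER condition in Total Orders 9."""
    return (s > LO or e > HI) and HI > s

def blank_branch(s, e):
    """Model of the remaining BLANK branch in the SWITCH."""
    return s < LO and e < HI

kept = [(s, e) for s, e in rows if keep(s, e)]
reachable = [(s, e) for s, e in kept if blank_branch(s, e)]

# Sum of the per-row flags versus a plain count of the filtered rows.
total_via_switch = sum(0 if blank_branch(s, e) else 1 for s, e in kept)
print(len(reachable), total_via_switch == len(kept))
```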

Conclusion

Performance tuning DAX can have dramatic results. In this case, code that runs roughly 26 times faster than the original (595,908 milliseconds down to 22,467). To achieve these kinds of improvements, pay attention to the following:

- Clean up your code, it may not improve performance directly but will help you better understand what you are doing and more easily find optimizations

- Consider structuring your conditional logic so that you eliminate the most rows as early as possible. Sometimes this means reversing your logic.

- Put all of your code in one measure. This one I am not 100% on but in this case consolidating the code had the greatest impact perhaps due to internal DAX optimization. It is also possible that getting rid of the calculation of the two variables or some other optimization in using ADDCOLUMNS helped out here.

- Filter your data as much as possible before you start performing logic tests

- Simplify your logic

- Stop using VALUE and VALUES if you do not have to use them
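To put the steps side by side, the short Python sketch below takes the Performance Analyzer timings reported in this post and works out each version's speedup over the original measure. The dictionary labels are my own shorthand for the measures.

```python
# Timings in milliseconds, as reported by Performance Analyzer in this post.
timings_ms = {
    "Total Orders (original)": 595_908,
    "Total Orders 2a": 606_392,
    "Total Orders 2": 613_939,
    "Total Orders 3": 285_969,
    "Total Orders 4": 57_010,
    "Total Orders 5": 44_158,
    "Total Orders 6": 43_844,
    "Total Orders 7": 32_109,
    "Total Orders 8": 24_208,
    "Total Orders 9": 22_467,
}

baseline = timings_ms["Total Orders (original)"]

# Print each version with its speedup relative to the original.
for name, ms in timings_ms.items():
    print(f"{name:<26}{ms:>9,} ms  {baseline / ms:5.1f}x")

overall = baseline / timings_ms["Total Orders 9"]
```

These are single runs on one machine, so small differences between adjacent steps are best read as noise rather than as real gains or losses.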

Stay tuned for Part 2 where I will cover more optimizations and discuss the dangers of over-optimization!
