Refreshing one dataflow for multiple workspaces

For the record, we're in a Premium capacity environment.

To help with the large number of reports we need to make, we've set up an app/workspace for each department we distribute to, in large part because this makes permissions easy to handle: they are set at the app level, not per report.

For example, we have an Audit workspace/app and a Security workspace/app.

Report 1 is unique to Audit; Report 2, however, is used by both Audit and Security.

What I'm trying to accomplish is setting up one dataflow with everything needed for Report 2 and publishing it to a dedicated Flow workspace (to keep things consolidated). I'd like to have only ONE refresh per day on this data and then share it out, and I need that refresh to be incremental.

As a next step, I created a report, pulled the data from the dataflow, and published it to both the Security and Audit workspaces.

From what I've seen, this does not accomplish what I want: both Audit and Security now have their own dataset that connects to the dataflow. The dataflow will refresh, but the Audit and Security datasets will not. Further, I'm not sure whether a dataset refresh that I trigger will run incrementally (pulling just the recent data) or refresh the ENTIRE thing.

Does anyone know if this is possible? If not, how is this sort of distribution/shared-data problem supposed to be approached? I really don't want to have to clone my data everywhere and refresh it multiple times.

Almost!

1. Create Data flow, set up my incremental refresh (archive everything older than 2 weeks, refresh previous 2 weeks). Good!

2. Open powerbi desktop, import from the dataflow everything I need, create the report. Publish it to my "Flow" workspace. Good!

3. This will create a report and dataset in the workspace. We don't care about the report, we care about the dataset. Good!

4. Copy the report i just made, this time importing from the dataset in the flow workspace (shoudl be trivial). Publish this to the Security and Audit workspaces.

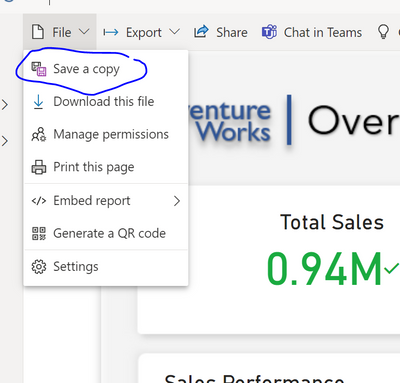

4. Open you report in flow workspace, and save a copy to the workspaces Security and Audit workspace.

This will also create a lineage from different workspaces to flow workspace.

5. Set schedule refresh on your dataset in flow workspace to refresh after your dataflow.

The dataset will not refresh incrementally; it will do a full load. If you want the dataset to refresh incrementally, that has to be set up in the query of the dataset itself.
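For readers unfamiliar with how a dataset-level incremental refresh decides what to reload: Power BI uses two datetime parameters, `RangeStart` and `RangeEnd`, and a filter on a date column in the dataset's query. The Python below is only a rough model of that filter, not Power Query code. The `modified` column, the 14-day window, and the function names are assumptions chosen to match the "previous 2 weeks" policy mentioned in this thread, and the real service aligns windows to whole periods rather than to an arbitrary timestamp.

```python
from datetime import datetime, timedelta

def refresh_window(now: datetime, refresh_days: int = 14):
    """Return (range_start, range_end) for the rows that should be reloaded."""
    return now - timedelta(days=refresh_days), now

def rows_to_reload(rows, range_start, range_end, date_key="modified"):
    """Keep rows inside the half-open window [range_start, range_end).

    The window is half-open so that a row sitting exactly on a boundary
    is loaded by one window only, never by two adjacent ones.
    """
    return [r for r in rows if range_start <= r[date_key] < range_end]

if __name__ == "__main__":
    now = datetime(2024, 4, 15)
    rows = [
        {"id": 1, "modified": datetime(2024, 3, 1)},   # older than the window
        {"id": 2, "modified": datetime(2024, 4, 10)},  # inside the window
    ]
    start, end = refresh_window(now)
    print([r["id"] for r in rows_to_reload(rows, start, end)])
```

Rows outside the window are left as previously loaded, which is what keeps the refresh from becoming a full load.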

OK, to make sure I understand this, could you confirm this workflow is correct?

1. Create the dataflow, set up my incremental refresh (archive everything older than 2 weeks, refresh the previous 2 weeks).

2. Open Power BI Desktop, import everything I need from the dataflow, create the report. Publish it to my "Flow" workspace.

3. This will create a report and a dataset in the workspace. We don't care about the report; we care about the dataset.

4. Copy the report I just made, this time importing from the dataset in the Flow workspace (should be trivial). Publish this to the Security and Audit workspaces.

Doing this seems to give me the lineage I would expect. However, it leaves me with one question: will the dataSET refresh incrementally like the dataFLOW, or will it pull from the entire dataflow each time?

Hi Dungar,

A report is driven by a dataset, not a dataflow. A dataset can pull data from dataflows, so if you use both a dataflow and a dataset, you need to configure refresh for both.

Refreshes have to be scheduled in a specific sequence: the dataflow should refresh first. Once that has completed, the dataset can refresh by pulling data from the dataflow.
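If staggered schedule times are not reliable enough, the same ordering can be driven through the Power BI REST API. The following is a minimal sketch, assuming the `api.powerbi.com/v1.0/myorg` refresh endpoints for dataflows and datasets. The `post` and `wait_for_dataflow` callables, the IDs, and the function names are hypothetical placeholders, and authentication and status polling are deliberately left to the caller.

```python
from typing import Callable, Dict, List

API = "https://api.powerbi.com/v1.0/myorg"

def dataflow_refresh_url(group_id: str, dataflow_id: str) -> str:
    return f"{API}/groups/{group_id}/dataflows/{dataflow_id}/refreshes"

def dataset_refresh_url(group_id: str, dataset_id: str) -> str:
    return f"{API}/groups/{group_id}/datasets/{dataset_id}/refreshes"

def refresh_in_order(
    post: Callable[[str, Dict[str, str]], None],
    wait_for_dataflow: Callable[[], bool],
    group_id: str,
    dataflow_id: str,
    dataset_id: str,
) -> List[str]:
    """Trigger the dataflow refresh, wait for it, then trigger the dataset refresh.

    Returns the URLs that were called, in order. `post` is whatever
    authenticated HTTP POST helper the caller supplies (hypothetical here);
    `wait_for_dataflow` should return True only once the dataflow refresh
    has finished successfully.
    """
    body = {"notifyOption": "NoNotification"}
    called: List[str] = []

    url = dataflow_refresh_url(group_id, dataflow_id)
    post(url, body)
    called.append(url)

    # Do not refresh the dataset from stale data if the dataflow failed.
    if not wait_for_dataflow():
        return called

    url = dataset_refresh_url(group_id, dataset_id)
    post(url, body)
    called.append(url)
    return called
```

Passing the HTTP helper in as an argument keeps the ordering logic testable without network access or credentials.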

For your case:

1 dataflow > 2 datasets > 2 reports

You can simplify this to:

1 dataflow > 1 dataset > 2 reports
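To make the difference between the two layouts concrete, the lineage can be modelled as a small dependency graph and the refresh order read off it. This is an illustrative sketch only: the node names are made up for this thread's scenario, and it uses Python's standard `graphlib` module (Python 3.9+) rather than anything Power BI provides.

```python
from graphlib import TopologicalSorter

def refresh_order(lineage, refreshable):
    """List the items that need a scheduled refresh, upstream first.

    `lineage` maps each item to the set of items it reads from.
    Reports are not in `refreshable`: they simply show whatever
    their dataset currently holds.
    """
    order = TopologicalSorter(lineage).static_order()
    return [node for node in order if node in refreshable]

# Current layout: each workspace has its own copy of the dataset.
duplicated = {
    "audit_dataset": {"dataflow"},
    "security_dataset": {"dataflow"},
    "audit_report": {"audit_dataset"},
    "security_report": {"security_dataset"},
}

# Simplified layout: one shared dataset in the Flow workspace.
shared = {
    "dataset": {"dataflow"},
    "audit_report": {"dataset"},
    "security_report": {"dataset"},
}

if __name__ == "__main__":
    print(refresh_order(duplicated, {"dataflow", "audit_dataset", "security_dataset"}))
    print(refresh_order(shared, {"dataflow", "dataset"}))
```

The duplicated layout needs three scheduled refreshes per day, the shared one only two, and in both cases the dataflow comes first.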

Check out this link on how to use one dataset for different reports in different workspaces:

https://docs.microsoft.com/en-us/power-bi/connect-data/service-datasets-across-workspaces
