- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Incremental Refresh on Compute Entities

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Incremental Refresh on Compute Entities

Hi all,

we are building a complex ETL model using dataflows and I'd like to clarify how to use incremental refreshes on a P1 instance.

Our Requirements:

- A large dataset from a SQL server (~2 million records per day)

- Need history for the last 12 months minimum

- Need to join the data at each refresh to another dataflow ( ~12.5 million records)

- Daily updates

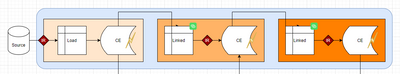

A partial diagram of what we are building is below, but the summary is:

- ingesting data into a staging dataflow with an incremental refresh

- loading it into a compute entity

- ingesting data into a transformation dataflow with an incremental refresh, rinse and repeat.

My question is should we use an incremental refresh in subsequent dataflows between linked objects and compute entities?

The reason why we want to propagate incremental refreshed between dataflows is to reduce the load on the P1 entity, particularly with joins: the dataset is currently running at ~360 million rows and joining with another 12 million records takes about 13 hours to run.

I am hoping to run the first data load to join the whole thing and save it and then only run the joins on one-two days of incrementally refreshed data, i.e. 2-4 million records, not the whole 360 million +.

However, this totally non-descriptive article from MS documentation tells me not to use incremental refresh between dataflows.

Am I going to screw the whole thing up by trying to improve it or am I reading this document wrong?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This has been a while since I posted the original question. We have implemented the incremental refresh between dataflows (for example, staging and processing dataflows) and it's been working excellent.

The confusion is between linked and compute entities, as mentioned above. The linked entities cannot be changed from downstream dataflows and so the question does not apply to them. But if you load your original data into a compute entity, then it is possible to implement IR between them.

In our case, it reduced the downstream processing of a large dataflow table (~500 million rows) from 4 hours to about 20 minutes.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This has been a while since I posted the original question. We have implemented the incremental refresh between dataflows (for example, staging and processing dataflows) and it's been working excellent.

The confusion is between linked and compute entities, as mentioned above. The linked entities cannot be changed from downstream dataflows and so the question does not apply to them. But if you load your original data into a compute entity, then it is possible to implement IR between them.

In our case, it reduced the downstream processing of a large dataflow table (~500 million rows) from 4 hours to about 20 minutes.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm still confused about this, as I asked what I think is a similar question here: https://community.fabric.microsoft.com/t5/Service/Incremental-Refresh-with-Linked-Referenced-Tables/...

Are you saying you implemented two incremental refreshes? One on the source staging table and another one on a linked referenced/computed entity?

I put an incremental refresh on the staging table when first bringing it in from an on-prem SQL server and it takes 30 seconds. But my linked referenced/computed entity to that staging table takes 3 minutes (I haven't done any transformations to it yet).

So I'm trying to figure out if I need to create a second incremental refresh on the downstream linked tables.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@MightyMicrobe, one more question if I may ask: when you enabled IR on your computed entity, did you have your Enhanced Configuration Engine for this dataflow set to Optimized or On?

I am asking this because it *seems* to work ONLY if ECE is not switched off. In my case, when ECE is switched off, I have error messages in the refresh log about invalid PBI credentials.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @fred31330, we're dealing with pretty large tables (100-600 million rows) in our dataflows, and most of them are cascaded, so by default, we always set the Enhanced Engine to ON. I'm not sure if this is the best practice or, in some cases, enhanced compute should not be turned on, but this seems to work well.

We're on a P1 Premium if that helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your feedback! Exactly what I needed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for posting. According to arvindsingh802 answer it wasn't necessary to explicitely set-up incfremental refresh between dataflows, so glad to hear that this in fact can and should be done. Appreciated.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are reading the Microsoft Article right, Incremental refresh between dataflows is not required if the source dataflow has incremental refresh implemented.

If this post helps, then please consider Accept it as the solution, Appreciate your Kudos!

If this post helps, then please consider Accept it as the solution, Appreciate your Kudos!!

Proud to be a Super User!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply. Does it mean that incremental refresh logic implemented at the source is propagated throughout all linked entities? Can you post a link to where I can read more?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, linked entities are reference entity that does not create a replica itself that is why linked entities should not use incremental refresh if they reference a dataflow.

Premium features of dataflows - Power BI | Microsoft Docs

If this post helps, then please consider Accept it as the solution, Appreciate your Kudos!!

Proud to be a Super User!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think there's been a misunderstanding. We're not referring to incremental refresh on linked entities, but for computed entities. See the original post. Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would also like to read the specifics on this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

I am wondering how you finally solved this challenge as I am in the same situation. Could you kindly share your feedback?

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I was wondering the exact same thing myself - definitely interested to hear the answer.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 58 | |

| 20 | |

| 18 | |

| 18 | |

| 9 |