- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Subtract Values in consecutive rows

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Subtract Values in consecutive rows

Hello All,

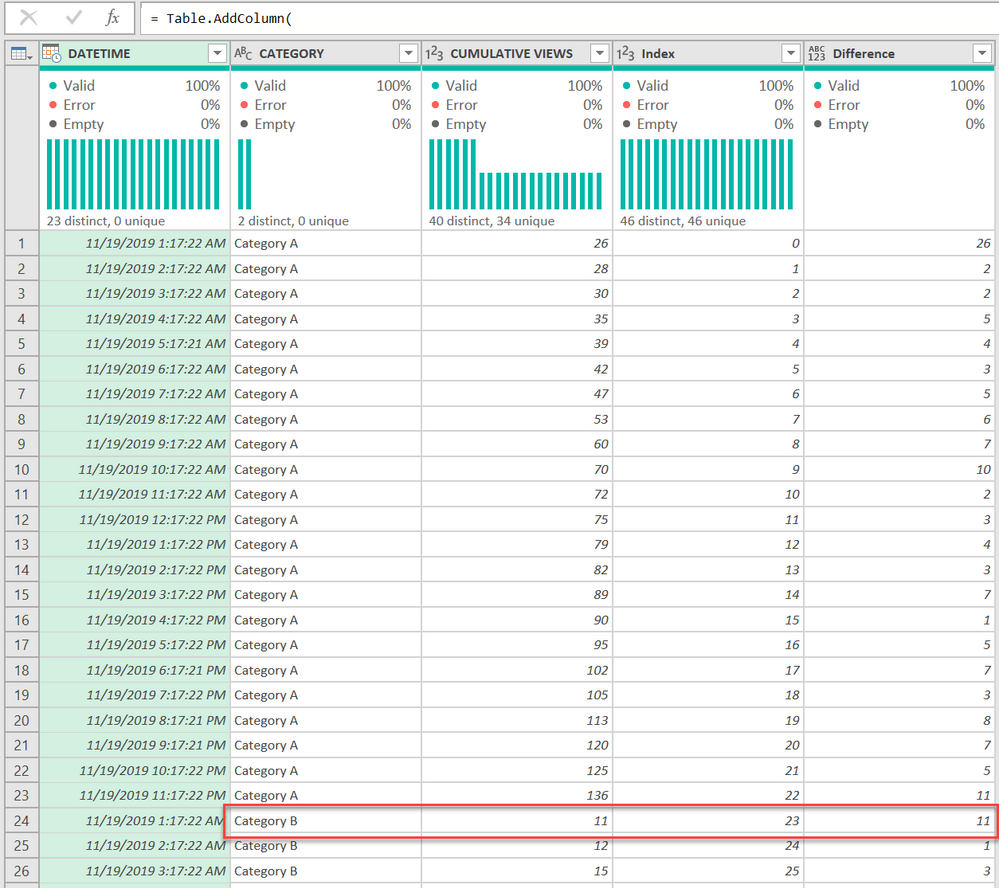

Im working on a task where I need to subtract values from consecutive rows.

More specifically i have a spreadsheet (see attached) where Culumn C contains cumulative values for category A and Category B. I want to convert the cumulative values to record (row) values, per category. In the dataset every record (row) respresents a different hour.

I would like to replicate the calculation in Column D using Power Query. I tried the methodology of using 2 index columns and at the end merging the same dataset but it takes ages (lots of processing Power and RAM memory) and it never returns any results.

Merge is very expensive process in PowerBI and I would also like to mention that i want to do this calculation over 1.7 million rows.

If you think that there is any other alternative using Power Query that would be great.

Thanks

Themis

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@themistoklis - one more solution:

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}}),

#"Sorted Rows" = Table.Buffer(Table.Sort(#"Changed Type",{{"CATEGORY", Order.Ascending}, {"DATETIME", Order.Ascending}})),

listCategory = List.Buffer(#"Sorted Rows"[CATEGORY]),

listCumulative = List.Buffer(#"Sorted Rows"[CUMULATIVE VIEWS]),

listCount = List.Count(listCategory),

listCumulativeDifference =

List.Skip(

List.Generate(

()=> [varCategory = listCategory{0}, varDiff = 0, Counter = 0],

each [Counter] <= listCount,

each

try

if [Counter] = 0

then [varDiff = listCumulative{[Counter]}, Counter = [Counter]+ 1]

else if listCategory{[Counter]} = listCategory{[Counter] - 1}

then [varDiff = listCumulative{[Counter]} - listCumulative{[Counter] -1}, Counter = [Counter]+ 1]

else [varDiff = listCumulative{[Counter]}, Counter = [Counter]+ 1]

otherwise [Counter = [Counter] + 1],

each [varDiff]

),

1

),

CombinedColumns =

Table.ToColumns(#"Sorted Rows") & {listCumulativeDifference},

BackToTable =

Table.FromColumns(

CombinedColumns,

Table.ColumnNames(#"Sorted Rows") & {"Difference"}

)

in

BackToTableThis will do 100,000 rows as fast as Excel can think about loading the .NET framework to process it. I suspect 1M rows would be equally fast.

this is in the original file link above so you can get the solution there. it has 100K rows for testing now.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

decumul:

let

decum=(tab)=>

let

listCumulative = List.Buffer(tab[CUMULATIVE VIEWS]),

listCount=List.Count(listCumulative),

names=Table.ColumnNames(tab)&{"shift"},

shift= List.Generate(

()=> [varDiff = listCumulative{0}, Counter = 0],

each [Counter] < listCount,

each [varDiff=listCumulative{Counter}-listCumulative{[Counter]},Counter=[Counter]+1],

each [varDiff]

),

ttc=Table.ToColumns(tab)

in

Table.FromColumns(ttc&{shift},names)

in decum

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZRBagMxDEWvEmYdiKWxR3Z2cY8RssgidFko3fT2HWtwodUXaGNIeBjP/9K73xdqF6ILJ2qnRFeSK/NyXt6eX6/3j8/v023/wdtxPM5/cXbwOg5Dr5heE6SzQ5f9KIYuSpOh235kQ2/47jz+WQ0tDi3wJRXTZd0Pm2DD9DYykf80JUzLoCkZ3GlTGAVOTplSUCjklCkwcHLKrDBwKg7dYChOmU1DMbRTZoNjRRWPFSWGT3HapAQz5OTcTmNWqsHJwRkOCzt9EsNPZadQWjd9kplcMFz9ICEOXqM4o5aQKpSGOSJVDFqjsisHVKF0hneD6epTWvZuMF1Kw41DqlC6RlXRp4ZaRBWDLgWNFjKF0hI1RZ/SCplC6Rw1hdISNcWgJUdN0afhQqYYdE1RUygtUVH06ayQJ5SuUU30XwmFNHHsMfxOpIljj+feP34A", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [DATETIME = _t, CATEGORY = _t, #"CUMULATIVE VIEWS" = _t, #"HOURLY VIEWS (POWER QUERY CALCULATION)" = _t]),

#"Modificato tipo" = Table.TransformColumnTypes(Origine,{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}, {"HOURLY VIEWS (POWER QUERY CALCULATION)", Int64.Type}}),

#"Raggruppate righe" = Table.Group(#"Modificato tipo", {"CATEGORY"}, {"all", each decumul(_)}),

#"Tabella all espansa" = Table.ExpandTableColumn(#"Raggruppate righe", "all", {"DATETIME", "CUMULATIVE VIEWS", "HOURLY VIEWS (POWER QUERY CALCULATION)", "shift"}, {"DATETIME", "CUMULATIVE VIEWS", "HOURLY VIEWS (POWER QUERY CALCULATION)", "shift"})

in

#"Tabella all espansa"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @themistoklis - whenever I deal with any kind of list now, I almost always buffer the results through Table.Buffer or List.Buffer.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@themistoklis - one more solution:

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}}),

#"Sorted Rows" = Table.Buffer(Table.Sort(#"Changed Type",{{"CATEGORY", Order.Ascending}, {"DATETIME", Order.Ascending}})),

listCategory = List.Buffer(#"Sorted Rows"[CATEGORY]),

listCumulative = List.Buffer(#"Sorted Rows"[CUMULATIVE VIEWS]),

listCount = List.Count(listCategory),

listCumulativeDifference =

List.Skip(

List.Generate(

()=> [varCategory = listCategory{0}, varDiff = 0, Counter = 0],

each [Counter] <= listCount,

each

try

if [Counter] = 0

then [varDiff = listCumulative{[Counter]}, Counter = [Counter]+ 1]

else if listCategory{[Counter]} = listCategory{[Counter] - 1}

then [varDiff = listCumulative{[Counter]} - listCumulative{[Counter] -1}, Counter = [Counter]+ 1]

else [varDiff = listCumulative{[Counter]}, Counter = [Counter]+ 1]

otherwise [Counter = [Counter] + 1],

each [varDiff]

),

1

),

CombinedColumns =

Table.ToColumns(#"Sorted Rows") & {listCumulativeDifference},

BackToTable =

Table.FromColumns(

CombinedColumns,

Table.ColumnNames(#"Sorted Rows") & {"Difference"}

)

in

BackToTableThis will do 100,000 rows as fast as Excel can think about loading the .NET framework to process it. I suspect 1M rows would be equally fast.

this is in the original file link above so you can get the solution there. it has 100K rows for testing now.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@edhans's query run faster and i noticed that the table.buffer function was the one that made a huge impact on the performance

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

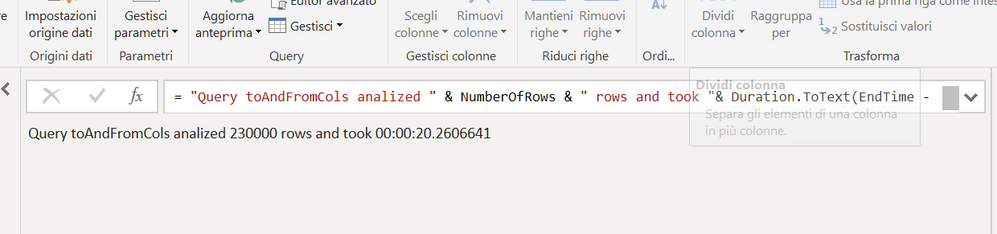

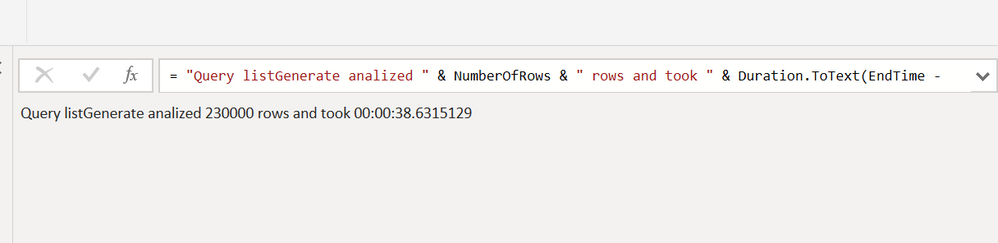

let me have some doubts about this statement. In any case, for those wishing to try the different times of the two different solutions, you can use this script that I got from Ben Gribaudo's blog.

Obviously the results can vary a lot depending on many things. But you can test the performance of these scripts for different dimensions of the dataset

let

//Get the current time, which will be the query start time

StartTime = DateTime.LocalNow(),

//Make sure StartTime is evaluated just before we request data from Facebook

trig = if StartTime<>null

then

#"toAndFromCols LDS"

else

null,

//Find the number of rows returned

NumberOfRows = Number.ToText(Table.RowCount(trig)),

//Get the current time, which will be the query end time

EndTime = DateTime.LocalNow(),

//Make sure PQ evvaluates all expressions in the right order:

//first, get the Number of rows, which ensure that

//the Source expression is evaluated, and in turn StartTime is evaluated

//second, the EndTime is evaluated as part of the duration calculation

Output = "Query analized " & NumberOfRows & " rows and took "& Duration.ToText(EndTime - StartTime)

in

Output

let

//Get the current time, which will be the query start time

StartTime = DateTime.LocalNow(),

//Make sure StartTime is evaluated just before we request data from Facebook

trig = if StartTime<>null

then

#"listgenerate LDS"

else

null,

//Find the number of rows returned

NumberOfRows = Number.ToText(Table.RowCount(trig)),

//Get the current time, which will be the query end time

EndTime = DateTime.LocalNow(),

//Make sure PQ evvaluates all expressions in the right order:

//first, get the Number of rows, which ensure that

//the Source expression is evaluated, and in turn StartTime is evaluated

//second, the EndTime is evaluated as part of the duration calculation

Output = "Query analized " & NumberOfRows & " rows and took " & Duration.ToText(EndTime - StartTime)

in

Output

listGenerate LDS:

let

Source = LDS,// Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}}),

#"Sorted Rows" = Table.Buffer(Table.Sort(#"Changed Type",{{"CATEGORY", Order.Ascending}, {"DATETIME", Order.Ascending}})),

listCategory = List.Buffer(#"Sorted Rows"[CATEGORY]),

listCumulative = List.Buffer(#"Sorted Rows"[CUMULATIVE VIEWS]),

listCount = List.Count(listCategory),

listCumulativeDifference =

List.Skip(

List.Generate(

()=> [varCategory = listCategory{0}, varDiff = 0, Counter = 0],

each [Counter] <= listCount,

each

try

if [Counter] = 0

then [varDiff = listCumulative{[Counter]}, Counter = [Counter]+ 1]

else if listCategory{[Counter]} = listCategory{[Counter] - 1}

then [varDiff = listCumulative{[Counter]} - listCumulative{[Counter] -1}, Counter = [Counter]+ 1]

else [varDiff = listCumulative{[Counter]}, Counter = [Counter]+ 1]

otherwise [Counter = [Counter] + 1],

each [varDiff]

),

1

),

CombinedColumns =

Table.ToColumns(#"Sorted Rows") & {listCumulativeDifference},

BackToTable =

Table.FromColumns(

CombinedColumns,

Table.ColumnNames(#"Sorted Rows") & {"Difference"}

)

in

BackToTable

toAndFromCols LDS:

let

Origine = LDS,

decumul =(cumulist)=>

let

ttc=Table.ToColumns(cumulist),

names=Table.ColumnNames(cumulist),

tfc = Table.FromColumns(ttc&{{0}&cumulist[CUMULATIVE VIEWS]},names&{"shifted"}),

ac = Table.AddColumn(tfc, "dec", each [CUMULATIVE VIEWS]-[shifted]),

rl = Table.RemoveLastN(ac,1)

in

rl,

#"Raggruppate righe" = Table.Group(Origine, {"CATEGORY"}, {"all", each decumul(_)}),

#"Tabella all espansa" = Table.ExpandTableColumn(#"Raggruppate righe", "all", {"DATETIME", "CUMULATIVE VIEWS", "HOURLY VIEWS (POWER QUERY CALCULATION)", "dec"}, {"DATETIME", "CUMULATIVE VIEWS", "HOURLY VIEWS (POWER QUERY CALCULATION)", "dec"})

in

#"Tabella all espansa"

LDS:

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZRBagMxDEWvEmYdiKWxR3Z2cY8RssgidFko3fT2HWtwodUXaGNIeBjP/9K73xdqF6ILJ2qnRFeSK/NyXt6eX6/3j8/v023/wdtxPM5/cXbwOg5Dr5heE6SzQ5f9KIYuSpOh235kQ2/47jz+WQ0tDi3wJRXTZd0Pm2DD9DYykf80JUzLoCkZ3GlTGAVOTplSUCjklCkwcHLKrDBwKg7dYChOmU1DMbRTZoNjRRWPFSWGT3HapAQz5OTcTmNWqsHJwRkOCzt9EsNPZadQWjd9kplcMFz9ICEOXqM4o5aQKpSGOSJVDFqjsisHVKF0hneD6epTWvZuMF1Kw41DqlC6RlXRp4ZaRBWDLgWNFjKF0hI1RZ/SCplC6Rw1hdISNcWgJUdN0afhQqYYdE1RUygtUVH06ayQJ5SuUU30XwmFNHHsMfxOpIljj+feP34A", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [DATETIME = _t, CATEGORY = _t, #"CUMULATIVE VIEWS" = _t, #"HOURLY VIEWS (POWER QUERY CALCULATION)" = _t]),

mt= Table.TransformColumnTypes(Origine,{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}, {"HOURLY VIEWS (POWER QUERY CALCULATION)", Int64.Type}}),

ttc=Table.ToColumns(mt),

names=Table.ColumnNames(mt),

largeDS= List.Transform(ttc, each List.Repeat(_,5000)),

tfc=Table.FromColumns(largeDS,names)

in

tfc

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

Just to mention that all scripts ran successfully (a few amendments where necessary). So users can use/test any of the scripts posted on this thread, based on their needs.

I agree that perfornance on Power Query running times is dependent on various things.

In my case i tried various things to improve performance. I also consulted the information from this site, which is actually good.

https://www.thebiccountant.com/speedperformance-aspects/

I would expect a pretty straight forward methodology to process the data (it is just a sorting and subtraction of consecutive rows), and without trial and error noone will ever find the best approach.

Although the query ran successfully I think I will also try python scripting

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

un esempio:

this is not always the case, the results are very variable.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @themistoklis

i tried a similar approach that @Anonymous ... also because I didn't check out all answers allready given 🙂

I used the group-function and then added a new column with a shifted version of your cumulative column (all the code of the Table.TransformColumns could also be integrated into the group-function). Then subtracted both of them. Here a standalone-code 🙂

I would be currious how this code is performing

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("hZQxbsMwEAS/Yqg2DO5JFEl3UZ5huHBhpAwQpPHvbbELZwOXBAZ3PM4tL5dJ7SSdIqkdks4q03H6vP3ev75/HoeP1yHW6Xr8i4XDKrDZYHMCtjgsA8sOa8BWgy0BrDisAKsGyzOwZrAVkyoZrBBzFgpGkLNQ8G5yFgreTc5CZVNnobKas9A4qbPQOIKzoMTLOQ1KqBfOgwSt4UQoMEY4Ewr2dSo0M19j363f720MO8ZVH7t2jPkaF2DHgk3HBejY8jaGm8/+uAAd4wjjAnSMH87of7OhRgx3LHPrnIXMas6Cyb6zsOLdEMOOsamzUFjNWTBfhLNQOYKzUHk3Z4HZRwg7BqfI4PZPpm0YxLY2DfueX58=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [DATETIME = _t, CATEGORY = _t, #"CUMULATIVE VIEWS" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"DATETIME", Order.Ascending}}),

#"Grouped Rows" = Table.Group(#"Sorted Rows", {"CATEGORY"}, {{"AllRows", each _, type table [DATETIME=datetime, CATEGORY=text, CUMULATIVE VIEWS=number]}}),

AddHourlyViews = Table.TransformColumns

(

#"Grouped Rows",

{

{

"AllRows",

(tbl)=> Table.AddColumn

(

Table.FromColumns

(

Table.ToColumns(tbl) & {{0}&(List.RemoveLastN(tbl[#"CUMULATIVE VIEWS"]))},

Table.ColumnNames(tbl)&{"Shifted"}

),

"Hourly Views",

(add)=> add[#"CUMULATIVE VIEWS"]-add[Shifted]

)

}

}

),

#"Expanded AllRows" = Table.ExpandTableColumn(AddHourlyViews, "AllRows", {"DATETIME", "CUMULATIVE VIEWS", "Hourly Views"}, {"DATETIME", "CUMULATIVE VIEWS", "Hourly Views"})

in

#"Expanded AllRows"Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

try also this (100k rows less than 3')

let

Origine = Excel.Workbook(File.Contents("C:\Users\xyz\OneDrive - TIM\MyD2020\BI\Cumulative_to_row_calculation.xlsx"), null, true),

Sheet1_Sheet = Origine{[Item="Sheet1",Kind="Sheet"]}[Data],

decumul =(cumulist)=>

let

shift={0}&List.RemoveLastN(cumulist,1)

in List.Transform({0..List.Count(cumulist)-1}, each cumulist{_}-shift{_}),

#"Intestazioni alzate di livello" = Table.PromoteHeaders(Sheet1_Sheet, [PromoteAllScalars=true]),

#"Modificato tipo" = Table.TransformColumnTypes(#"Intestazioni alzate di livello",{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}, {"HOURLY VIEWS (POWER QUERY CALCULATION)", Int64.Type}}),

#"Raggruppate righe" = Table.Group(#"Modificato tipo", {"CATEGORY"}, {"all", each Table.FromColumns({[DATETIME],[CUMULATIVE VIEWS],decumul([CUMULATIVE VIEWS])},{"DATETIME","CUMULATIVE VIEWS","HV"})})

in

#"Raggruppate righe"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this scheme takes less than 60 "to process 2000000 (2M) of rows

let

Origine = List.Buffer(List.Transform({1..2000000}, each Number.Round(Number.RandomBetween(1,100)))),

#"Conversione in tabella" = Table.FromColumns({Origine,{0}&Origine}),

#"Aggiunta colonna personalizzata" = Table.AddColumn(#"Conversione in tabella", "dec", each [Column1]-[Column2])

in

#"Aggiunta colonna personalizzata"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

See if this works for you @themistoklis

I did 2 things:

- sorted the data by category and time first (ensuring the right sort - the used Table.Buffer to prevent a change)

- If the category changes, it resets the count.

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"DATETIME", type datetime}, {"CATEGORY", type text}, {"CUMULATIVE VIEWS", Int64.Type}}),

#"Sorted Rows" = Table.Buffer(Table.Sort(#"Changed Type",{{"CATEGORY", Order.Ascending}, {"DATETIME", Order.Ascending}})),

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "Index", 0, 1, Int64.Type),

PreviousRow =

Table.AddColumn(

#"Added Index",

"Difference",

each

let

varCurrentRow = [Index],

varCurrentCategory = [CATEGORY]

in

if varCurrentRow = 0 then [CUMULATIVE VIEWS]

else if varCurrentCategory <> #"Added Index"[CATEGORY]{varCurrentRow - 1} then [CUMULATIVE VIEWS]

else [CUMULATIVE VIEWS] - #"Added Index"[CUMULATIVE VIEWS]{varCurrentRow - 1},

Int64.Type

)

in

PreviousRow

Here is your file back. You can of course delete the index column if you don't want it at this point.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI ReportingHelpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.