- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Earn a 50% discount on the DP-600 certification exam by completing the Fabric 30 Days to Learn It challenge.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Reset count of ID once they've all been seen, coun...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Reset count of ID once they've all been seen, count how many days it took then reset until next time

Hi,

I have 1500 unique IDs, everyday many are seen and some multiple times a day.

I would like to do a cumulative count and know how many days does it takes to see them all 1500, once it reaches that amount, the cumulative sum needs to reset for another count until the next time the goal is met.

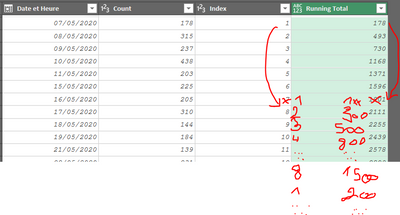

I've done a running total after a grouped count by date, but my issue aside from the needed reset is that the duplicates needs to be removed based on the time period needed to attain the goal. If i'm grouping my IDs by date then i'm losing the ability to sort out duplicates...

So i'm guessing I need to first find how much time is needed, then remove the duplicates from a custom column spanning over its own run tower the goal, and then, calculate the running...

Here's an ugly paint mockup of what i'd like my final result to look like (I realize I need my date format to be date.time otherwise i'll never get a correct goal and it'll be tricky)

I've found this solution here from Lind25 but the syntax is wrong, maybe from a previous PQ version:

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

AddedIndex = Table.AddIndexColumn(Source, "Index", 1, 1),

#"Added Custom" = Table.AddColumn(AddedIndex, "Running total reset", each List.Accumulate(List.FirstN(AddedIndex[Difference],[Index]),0,(state,current)=>if state+current > 60 then 0 else state+current))

in

#"Added Custom"

Any takers ? I'm completely lost on that one...

Thanks

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

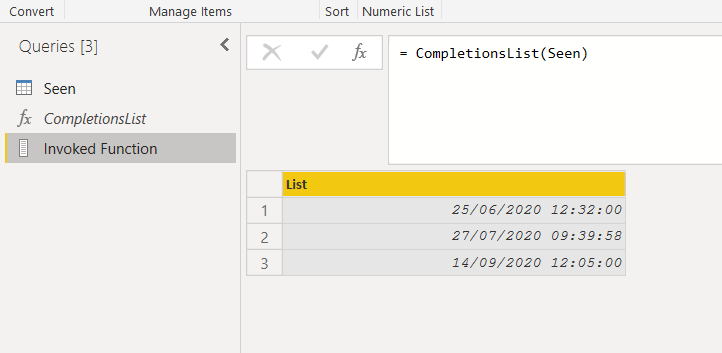

I've simulated a scenario with only 10 IDs using some of the data you provided, see if I'm on the right track? If I'm right, I'll think of the code to implement the end result.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I did a test performing the core operation in Python and it seems to finish in less than 20 secs for the data you posted. See it in the attached file. You'll have to update the path to the excel file you shared. Here is the M code for the main query:

Note it should be cleaned up a bit, as it is doing a lot of stuff that might not be necessary

let

Source = Excel.Workbook(File.Contents("d:\Downloads\Sample PQ Help.xlsx"), null, true),

Maintenance_Itinérante___CSV_Table = Source{[Item="Maintenance_Itinérante___CSV",Kind="Table"]}[Data],

#"Changed Type" = Table.TransformColumnTypes(Maintenance_Itinérante___CSV_Table,{{"Date&Time Seen", type datetime}, {"ID", Int64.Type}}),

#"Removed Duplicates" = Table.Distinct(#"Changed Type", {"Date&Time Seen", "ID"}),

#"Sorted Rows" = Table.Sort(#"Removed Duplicates",{{"Date&Time Seen", Order.Ascending}}),

#"Added Custom" = Table.AddColumn(#"Sorted Rows", "Date", each Date.From([#"Date&Time Seen"])),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"Date&Time Seen"}),

#"Reordered Columns" = Table.ReorderColumns(#"Removed Columns",{"Date", "ID"}),

#"Removed Duplicates1" = Table.Distinct(#"Reordered Columns", {"Date", "ID"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Removed Duplicates1",{{"Date", Int64.Type}}),

#"Run Python script" = Python.Execute("# 'dataset' holds the input data for this script#(lf)groupeddataset = dataset.groupby(['Date'])['ID'].apply(lambda x: list(x)).to_frame().reset_index()#(lf)#test.groupby(['Pos'])['Pos2'].apply(lambda x: list(x)).to_frame().reset_index()#(lf)a = list(groupeddataset['ID']) #(lf)acc=list(initial['ID']); res=[]#(lf)for i in range(len(a)):#(lf) acc=set(acc)-set(a[i])#(lf) #acc=set(acc)-set([a[i]])#(lf) if acc == set(): #(lf) acc=initial#(lf) res=res+[i]#(lf)#(lf)output=pandas.DataFrame(res,columns=['Positions'])",[dataset=#"Changed Type1", initial=Table.SelectRows(All_IDsT,each [ID]<> 15133)]),

groupeddataset = #"Run Python script"{[Name="groupeddataset"]}[Value],

groupeddataset2 = Table.TransformColumnTypes(groupeddataset,{{"Date", Int64.Type}}),

#"Changed Type3" = Table.TransformColumnTypes(groupeddataset2,{{"Date", type date}}),

#"Sorted Rows1" = Table.Sort(#"Changed Type3",{{"Date", Order.Ascending}}),

CompletionPositionsT = #"Run Python script"{[Name="output"]}[Value],

CompletionPositionsT2 = Table.TransformColumnTypes(CompletionPositionsT,{{"Positions", Int64.Type}}),

result = List.Select(groupeddataset2[Date], each List.Contains(CompletionPositionsT2[Positions],_ - List.Min(groupeddataset2[Date]))),

#"Converted to Table" = Table.FromList(result, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Changed Type2" = Table.TransformColumnTypes(#"Converted to Table",{{"Column1", type date}}),

#"Renamed Columns" = Table.RenameColumns(#"Changed Type2",{{"Column1", "Completion dates"}})

in

#"Renamed Columns"

The main step is #"Run Python script", with the following Python code:

groupeddataset = dataset.groupby(['Date'])['ID'].apply(lambda x: list(x)).to_frame().reset_index()

a = list(groupeddataset['ID'])

acc=list(initial['ID']); res=[]

for i in range(len(a)):

acc=set(acc)-set(a[i])

if acc == set():

acc=initial

res=res+[i]

output=pandas.DataFrame(res,columns=['Positions'])

It groups the IDs by Dates (day level) and then operates on that to extract a list of the positions where each section with all IDs seen ends. Note I have filtered out ID 15133 from the list of IDs so that there is at least one section that has all IDs

Please mark the question solved when done and consider giving kudos if posts are helpful.

Contact me privately for support with any larger-scale BI needs, tutoring, etc.

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

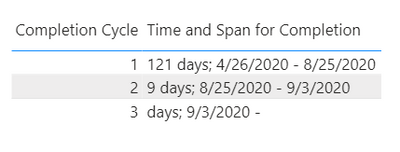

As much a I love M, I will put another plug in for the DAX approach for this one. I don't know if your IDs have category columns associated with them and you will want to also have slicers (which would require a DAX approach). Attached is a pbix with your example data. It uses the below DAX expression to generate the shown table (I also added a small table with the "Cycle" values of 1,2,3). You could do them separate but it is calculation intensive (so since I already had the virtual table in the measure, I generated both outputs and concatenated them together).

Time and Span for Completion =

VAR thiscycle =

SELECTEDVALUE ( Cycle[Completion Cycle] )

VAR ids =

ALLSELECTED ( Seen[ID] )

VAR idcount =

COUNTROWS ( ids )

VAR summarylastcycle =

ADDCOLUMNS (

VALUES ( Seen[Date&Time Seen] ),

"IDsSoFar",

VAR thistime = Seen[Date&Time Seen]

RETURN

COUNTROWS (

FILTER (

ids,

CALCULATE (

COUNT ( Seen[ID] ),

Seen[Date&Time Seen] <= thistime

) >= thiscycle - 1

)

)

)

VAR completiontimelastcycle =

IF (

thiscycle = 1,

MIN ( Seen[Date&Time Seen] ),

MINX (

FILTER (

summarylastcycle,

[IDsSoFar] >= idcount

),

Seen[Date&Time Seen]

)

)

VAR summarythiscycle =

ADDCOLUMNS (

FILTER (

VALUES ( Seen[Date&Time Seen] ),

Seen[Date&Time Seen] >= completiontimelastcycle

),

"IDsSoFar",

VAR thistime = Seen[Date&Time Seen]

RETURN

COUNTROWS (

FILTER (

ids,

CALCULATE (

COUNT ( Seen[ID] ),

Seen[Date&Time Seen] <= thistime

) >= thiscycle

)

)

)

VAR completiontimethiscycle =

MINX (

FILTER (

summarythiscycle,

[IDsSoFar] >= idcount

),

Seen[Date&Time Seen]

)

VAR span =

DATEDIFF (

completiontimelastcycle,

completiontimethiscycle,

DAY

)

VAR range = completiontimelastcycle & " - " & completiontimethiscycle

RETURN

span & " days" & "; " & range

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the code that implements the algorithm I described in the previous message

let

completion = (tab)=>

let

grpID=Table.Group(tab, {"ID"}, {"grp", each _}),

nids=Table.RowCount(grpID),

currLastDate=List.Min(List.Last(grpID[grp])[Date]),

rest= Table.SelectRows(tab, each _[Date] > currLastDate ),

result= if Table.RowCount(Table.Distinct(rest,"ID")) < nids then {currLastDate} else {currLastDate} & @ completion(rest)

in

result

in

completion

This function receives as input a table with Date and ID columns and provides a list of dates where the saturation cycle of all the distinct ids of the table is completed.

It seems very fast, respect previou solution based on list.generate and list.difference and so on ...

to obtain a more pleasant output, I grafted a copy of the vector of ids in the original table in three different random points of the [ID] column 😊

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

= let

// {1..6} is the set of IDs

Source = List.Accumulate(Tabl[Index],

[dcompl={[when=Tabl[Date]{0},atPos=Tabl[Index]{0}]},ck={1..6}],

(s,c)=> if List.IsEmpty(s[ck]) then [dcompl=s[dcompl]&{[when=Tabl[Date]{c},atPos=c]},ck={1..6}] else [dcompl=s[dcompl],ck=List.Difference(s[ck],{Tabl[id]{c}})])

in

Table.FromRecords(Source[dcompl])

This can be overcome by using the concept of union of sets, with the List.Union function.

It can be done in many ways,

If you place some tables that can be copied easily I can try it.

basically with the same logic, but this implementation is much more efficient, as it avoids continuous list sorting and comparisons are simpler (IsEmpty I think is much faster than list1 = list2)

let

Source = List.Accumulate(Tabl[Index],

[dcompl={[when=Tabl[Date]{0},atPos=Tabl[Index]{0}]},ck={1..6}],

(s,c)=> if List.IsEmpty(s[ck]) then [dcompl=s[dcompl]&{[when=Tabl[Date]{c},atPos=c]},ck={1..6}] else [dcompl=s[dcompl],ck=List.Difference(s[ck],{Tabl[id]{c}})])

in

Table.FromRecords(Source[dcompl])

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Kiwizqt

I don't quite understand.

Can you show

1. the original data you are working on (an excerpt that shows all the relevant fields)?

2. the result you expect from the data on 1 and how you get there

Please mark the question solved when done and consider giving kudos if posts are helpful.

Contact me privately for support with any larger-scale BI needs, tutoring, etc.

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlB thank you for your time and sorry not to have explained it better.

My answer got removed for suspission of spam 😞 here's another go at it.

Here's what i'm working with:

And here's what I'd like to know about it:

Where Completion Cycles refer to the moment I choose to start counting ID toward the 1500 IDs needed to be seen,

Where Days to Achieve refer to the time it took to reach those 1500 unique IDs,

And ideally, a Time Span column telling me the time period those completion cycles took place in.

The trick is that I'm registering duplicates IDs on that road to 1500 uniques and that the next completion cycle needs to start as soon as the previous one is done toward a new 1500 unique IDs goal.

I do have another table where my 1500 total IDs are stored if a join is needed.

Note: I don't have much knowledge in DAX/PBI but if you think it's easier or you don't think it's doable in PQ, I could also upload my source data and make it work there.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is easier done in DAX in my opinion. Here is a measure expression you can try in a card visual to give the first (min) datetime where all the IDs have been seen. To see the 2nd and 3rd time, just change the 1 to 2 or 3. I made some sample data and called that table Seen, so change that throughout to your actual table name. Note there is a lot of calculation going on here, so it may not be performant at large scale. If you try it, please let me know how it performs.

First All IDs Seen =

VAR ids =

ALLSELECTED ( Seen[ID] )

VAR idcount =

COUNTROWS ( ids )

VAR summary =

ADDCOLUMNS (

VALUES ( Seen[Date & Time Seen] ),

"IDsSoFar",

VAR thistime = Seen[Date & Time Seen]

RETURN

COUNTROWS (

FILTER (

ids,

CALCULATE (

COUNT ( Seen[ID] ),

Seen[Date & Time Seen] <= thistime

) >= 1

)

)

)

RETURN

MINX (

FILTER (

summary,

[IDsSoFar] >= idcount

),

Seen[Date & Time Seen]

)

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mahoneypatthanks for the try but the card only returns the date of completion (correctly I might add, I checked it), but it doesn't count the numbers of days needed.

Ideally, I would have liked to plot it in on a line graph with the numbers of IDs on (y) and raw days on (x) so that I could superimpose them throughout various periods.

Hi @Anonymous ! Thank you for your time, i'll be honest I can't decypher much of your code but i'm eager to learn from it, i've hosted an excel on googledrive below, is that enough/available for you ?

https://drive.google.com/file/d/1gWHYl3FxX3t2Dt6jQ8MCBWV1WF4d80V0/view?usp=sharing

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Kiwizqt

Given the size of your data, the List.Accumulate function is not good.

You could try List.Generate (but try incrementally (*) ) or try the path indicated by @mahoneypat .

try list.generate starting from small lists and gradually increasing the size to evaluate the times.

I propose you a draft, which calculates only the essential. Returns a vector with all nulls except the values of the position where the breaks occur.

You can use this list (filtered by null values) to find the affected rows.

let

//idsV is a partial list of all ids

// Seen is a partial list (20k-items, f.i.) of overall ids into Seen table

ids=List.Buffer(idsV),

s=List.Buffer(Seen),

nID=List.Count(Seen),

Source = List.Generate(

()=>[atPos = null,ck=ids,idx=0],

each [idx] < nID,

each if List.IsEmpty([ck]) then [atPos= idx,ck=ids,idx=[idx]+1] else [atPos=null,ck=List.Difference([ck],{s{[idx]}}),idx=[idx]+1],

each [atPos])

in

Source

(*)

at worst, you have 1500 ids and 100K rows, then for each of the 100k ids in the table it is necessary to "traverse" the whole vector of distinct ids 1,5M check.

If trying a list of 20k and 100 distinct ids it takes 1 ', then for your table it takes 100k / 20k * 1500/100 = about 75' (1H, as an order of magnitude of course)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I thought the finish time was one of your requests, but you can easily adapt the expression I suggested to get the time it took as follows. You can choose another time dimension instead of MINUTE (DAY, HOUR, etc.). Also, for cycles 2 and higher you would need to set the starttime variable to the same expression but with cycle minus 1.

Time for First Seen =

VAR ids =

ALLSELECTED ( Seen[ID] )

VAR idcount =

COUNTROWS ( ids )

VAR summary =

ADDCOLUMNS (

VALUES ( Seen[Date & Time Seen] ),

"IDsSoFar",

VAR thistime = Seen[Date & Time Seen]

RETURN

COUNTROWS (

FILTER (

ids,

CALCULATE (

COUNT ( Seen[ID] ),

Seen[Date & Time Seen] <= thistime

) >= 1

)

)

)

VAR completiontime =

MINX (

FILTER (

summary,

[IDsSoFar] >= idcount

),

Seen[Date & Time Seen]

)

VAR startime =

MIN ( Seen[Date & Time Seen] )

RETURN

DATEDIFF (

startime,

completiontime,

MINUTE

)

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube