- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Earn a 50% discount on the DP-600 certification exam by completing the Fabric 30 Days to Learn It challenge.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Evaluation taking huge amount of time

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Evaluation taking huge amount of time

Hello all,

I seem to be struggling with an issue that several of you have already mentioned, and I have read through several posts already trying all the suggestions but I keep stuck at the same situation.

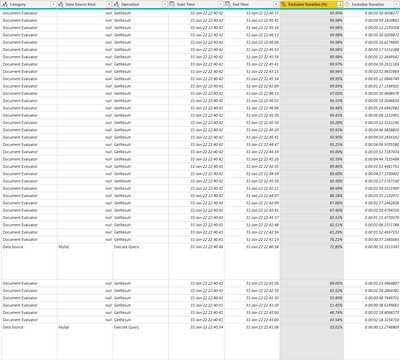

My refresh action is taking a long time, and more specifically, the Evaluation step. I performed a query diagnostics analysis and in the screenshot below, it seems that my query fetching is basically done in a matter of seconds, but then the Document Evaluator takes a huge amount of time for no apparent reason.

When scanning through the other posts on the platform, I already tried the following:

- Cleaning cache

- Cleaning cache & refresh all in preview

- Disable privacy check for the report

- Check path locations for broken paths

For your information, I am access one MySQL database with several tables. The tables are rather small (on average about 100 rows, several larger ones up to several thousands of line). I do have a lot of relationships between the tables, but that should not make a different I would assume.

Any pointers on how to reduce this time-consuming Refresh step are highly welcome.

Thanks in advance

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

It is unclear to me what happened, but the problem seems to have resolved itself. The frustrating part is, I don't know what triggered it. So now my refresh action runs within 25 seconds instead of 5+ minutes.

At first, I thought it was because I disabled the "grouped rows" query mentioned below, but even now, when I enable it again, the performance stays identical.

Also, the version I am using did not update automatically by the Windows Store (Version: 2.100.1401.0 64-bit (December 2021)), so nothing changed from Power BI side, neither from the database side.

So I am sorry not to provide any tangible pointers for users with similar issues, or to be able to acknowledge the users here for their suggestions, but it remains unclear. Apologies.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

It is unclear to me what happened, but the problem seems to have resolved itself. The frustrating part is, I don't know what triggered it. So now my refresh action runs within 25 seconds instead of 5+ minutes.

At first, I thought it was because I disabled the "grouped rows" query mentioned below, but even now, when I enable it again, the performance stays identical.

Also, the version I am using did not update automatically by the Windows Store (Version: 2.100.1401.0 64-bit (December 2021)), so nothing changed from Power BI side, neither from the database side.

So I am sorry not to provide any tangible pointers for users with similar issues, or to be able to acknowledge the users here for their suggestions, but it remains unclear. Apologies.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Glad your issue has been resolved.

Please mark your own reply as the solution, more people will see it.

Slow refresh will involve many aspects, you could refer to below documents and blogs that may help you:

Optimization guide for Power BI - Power BI | Microsoft Docs

Power BI Performance Tips and Techniques

Best Regards,

Stephen Tao

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Unfortunately, I cannot mark my reply as the solution. I will mark yours, which in itself is a response to my reply,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just one more thing. I'm sure the using the two "not Text.Contains" isn't folding to a native query, and after, the grouping step is a "full table" operation. If you are working with 70 tables, you are importing 70 tables, and then your local machine is processing all of these full table operations. At MINIMUM, do the grouping step before the filtering step. I bet that makes a difference--you'll see what I mean!

--Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's the each not Text.Contains that is likely the issue. Your query has to check each full column to see if it does not contain the text. If it does contain the text, then I has to check if the other whole column ALSO doesn't contain the other text. I bet if you break those into separate steps, it'll save a ton of time.

--Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @watkinnc ,

Thanks for the suggestion. I disabled the query and it did not improve the speed (unfortunately).

Is there a way to get more insights into which actions are undertaken in the "Evaluating" step?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there a reason that you have to bring in and aggregate the whole unfiltered tables from the database? I mean, canmy you filter first, so that the db only gives you what you need, as far as columns and rows? Why would you try to aggregate 70 unfiltered tables with a of the columns? Can't you just bring in the columns and filtered rows, and then aggregate? You might be aggregating/filtering on 70 million rows, instead of, say, 700,000 rows of you filter and choose your columns first. It makes a difference.

--Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there a reason that you have to bring in and aggregate the whole unfiltered tables from the database? I mean, canmy you filter first, so that the db only gives you what you need, as far as columns and rows? Why would you try to aggregate 70 unfiltered tables with a of the columns? Can't you just bring in the columns and filtered rows, and then aggregate? You might be aggregating/filtering on 70 million rows, instead of, say, 700,000 rows of you filter and choose your columns first. It makes a difference.

--Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @watkinnc ,

Placing an intermediate component in the pipeline to aggregate part of the data would make the entire architecture much more complex than it needs to be. As mentioned, the total amount of rows is about 200'000. So the amount of data is already rather limited.

For some reason in my older files, the data was loading faster. So my main question remains, what is happening during the Evaluation step and how can I reduce this time. If I look at the filter and sort step of one of my tables, it takes a couple of seconds, not more. So there is something that is taking such a huge time, but the Query Diagnostics step does not really provide insights into this Evaluation step.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

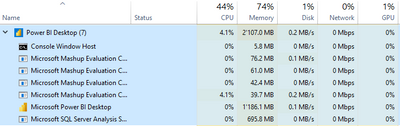

I'd be willing to bet that there is some early transformation that does not fold back to the server, and that a sort or other table operation is causing you to import all of these full tables into memory in order to perform these operations, and is paging to disk after your RAM is used up. If on the bottom right of the window, you see something like "Importing x,xxx,xxx rows from MySQL", then you know that this is what is happening.

Another thing to consider: if were able to see the related tables, can you actually expand those tables, instead of calling them via separate queries?

If neither thing is helpful, then I would talk to your IT/DBA folks; I would refuse to perform actions on 70+ tables.

--Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No, that does not happen.

My RAM usage is also very low, so it is not a matter of the amount of rows that are creating this problem.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

By the looks of your diagnostics I'm guessing the delay is in the transformations, so can you share the M code from the query or queries that are causing you problems please?

You can remove or anonymise the Source step and just replace with 'mySQL query', or 'Excel workbook on SharePoint' or similar.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete ,

First of all, thanks for the swift reply.

There are very little transformations being performed on the tables. Typically only removal of some (irrelevant) columns. For example, the majority of the (~70) tables is looking as follows:

let

Source = MySQL.Database(DB_Full_URL, "platform", [ReturnSingleDatabase=true]),

platform_platform_users = Source{[Schema="platform",Item="platform_users"]}[Data]

in

platform_platform_users

There is only duplicated table which I use to create an aggregated view where a filtering and sorting actions is involved.

let

Source = MySQL.Database(DB_Full_URL, "platform", [ReturnSingleDatabase=true]),

platform_session_tracks = Source{[Schema="platform",Item="session_tracks"]}[Data],

#"Filtered Rows" = Table.SelectRows(platform_session_tracks, each not Text.Contains([meta_data], "invalid_csrf_token: true") and not Text.Contains([meta_data], "was_unautorized: true")),

#"Grouped Rows" = Table.Group(#"Filtered Rows", {"session_id"}, {{"StartTime", each List.Min([start_time]), type datetime}, {"EndTime", each List.Max([end_time]), type datetime}})

in

#"Grouped Rows"

One element that also triggers my attention is the fact that besides the existing columns, PowerBI also included the relationship tables as extra columns, meaning that columns are added which are basically other tables (and each row contains the word "Table" with a reference to that table).

I did turn off the import relationships automatically (perhaps not from the very beginning - not sure anymore). Even deleting all of these additional columns doesn't save any time though.

Not sure if this has anything to do with it.

Thanks for the assistance!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

70 tables? And it's only taking 5 minutes? I think you're doing ok here.

Technicalities aside, I'd start by looking at why you need 70 tables in a single report, and how you can reduce that number drastically.

Obviously I don't know what your exact reporting scenario is, but I'd be thinking about:

1) Appending common data tables together (at source as far as possible).

2) Breaking the report into smaller, more focused reports (you can use dashboards for a broader view).

3) Marking a dedicated calendar table as the date table and turning off auto-time-intelligence.

4) Checking that every query is folding back to the server.

5) Checking that the server itself has resource capacity for so many requests at once.

6) Removing any merges between queries and moving to model relationships.

7) Removing (where feasible) any pivots/unpivots that are not being folded to the server.

Don't forget that your relationships also have a 'size' within the model, so there may be some effect here, but I don't know for certain.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete ,

The thing is, loading the queries in my MySQL viewer takes milliseconds because the tables are so small. In addition, I have the feeling that the PowerBI files from a year ago don't have this delay. They always were in the order of 1-2 min max. Half a year ago, I wanted to start from a clean version again and re-created the queries, and now I have this problem. So I know it can be faster as nothing basically changed to our database.

So either it has something to do with the way the queries have been created in my recent file (but comparing the M code, they look identical), or a change in the PowerBI software that is resulting in this rather large increase.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.