- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Cannot exclude broken connections from refresh

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Cannot exclude broken connections from refresh

Hi all,

I have a Power BI dashboard that draws on datasets from several sources. Our team is having trouble accessing a number of these datasets due to trouble with CRM access, so our solution has been to house data elsewhere when it requires continuous updating, but for data that does not require updating, I have just been using the historical pre-loaded data held in the PBI file from when the connection was working.

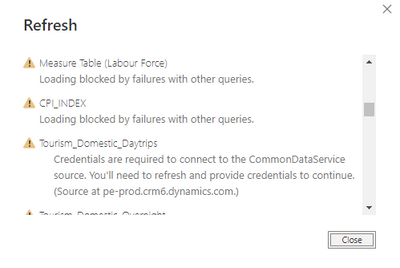

I now want to set up the queries so that clicking Refresh will only refresh the 15-20 data tables housed elsewhere while ignoring those archived files with the broken connection -- since including these will cause the whole refresh fail like so:

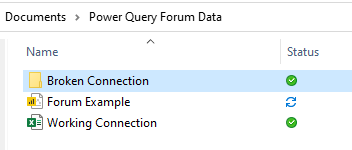

To replicate this, I have attached an example file which calls on two datasets:

- Working Connection -> local csv which connects correctly

- Broken Connection -> local csv which initially connects correctly, but breaks due to a change in the subfolder name

LINK: https://drive.google.com/drive/folders/1TFelWa3OmuLTtI4cAnUoMe9Vvxuxw4KX?usp=sharing

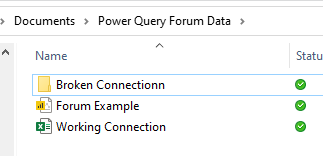

NOTE: you will need to add these two datasets to the PBI file on your local device, then close the PBI file and change the subfolder name like so:

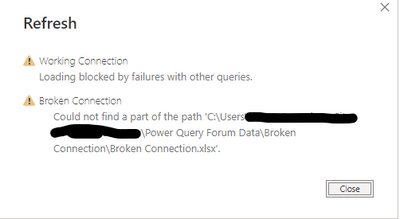

Refreshing now produces an error:

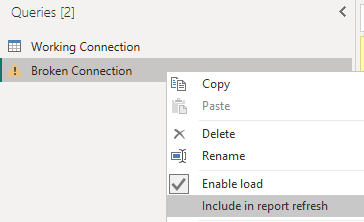

In Power Query, I had hoped that a solution to this would be to uncheck 'Include in refresh report' (as below), however I am unable to apply this change without having a working connection to begin with, leaving me unable to refresh it and unable to exclude it from refresh. Is there a workaround to resolve this?

So far the solutions I see are:

- Turn off 'Enable load' - This will remove the whole data source from the model, breaking model connections and any visuals calling on this data (Not a solution)

- Manually refresh each of the 15-20 functioning data tables with each update (time consuming and hard to explain to other users who are not familiar with Power BI)

- Extract each archived data table into a csv housed in the new working location, then change the initial 'Source' step of each of these to pull from this new location. (Could work, though there are approx. 40 of these tables and I will lose the ability to revert back to the CRM connection if that connection is ever repaired)

Is there a better way here that I am not seeing? Really appreciate any help with this!

Many thanks,

Taylor

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Taylor,

Ok, so there's two parts to this: 1) holding a static version of your data to use until the link is fixed, then 2) dynamically switching to the 'correct' data should the link become unbroken.

1) For this part, I would recommend loading your archive data into a Dataflow from whichever link currently works. You only need to connect and refresh once, then don't schedule any further refreshes on this Dataflow so it remains in a static state but also easily available to all reports.

2) In each of your queries, you should reference both sources (archive Dataflow and maybe-unbroken-link-source) and dynamically switch between them based on an "if desired link still broken then use Dataflow" evaluation.

Chris Webb covers this really well in the following link. Just make sure to also read the comments on the post as Ken Puls adds a key update to the original code presented by Chris:

https://blog.crossjoin.co.uk/2014/09/18/handling-data-source-errors-in-power-query/

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Taylor,

Ok, so there's two parts to this: 1) holding a static version of your data to use until the link is fixed, then 2) dynamically switching to the 'correct' data should the link become unbroken.

1) For this part, I would recommend loading your archive data into a Dataflow from whichever link currently works. You only need to connect and refresh once, then don't schedule any further refreshes on this Dataflow so it remains in a static state but also easily available to all reports.

2) In each of your queries, you should reference both sources (archive Dataflow and maybe-unbroken-link-source) and dynamically switch between them based on an "if desired link still broken then use Dataflow" evaluation.

Chris Webb covers this really well in the following link. Just make sure to also read the comments on the post as Ken Puls adds a key update to the original code presented by Chris:

https://blog.crossjoin.co.uk/2014/09/18/handling-data-source-errors-in-power-query/

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete,

Thanks for taking the time to look into this, I think this will probably be the smoothest way to address the issue and future proof the current system.

Thanks again!

Taylor

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.