- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Optimizing search for simultaneous logins - Slow m...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Optimizing search for simultaneous logins - Slow measure

Hi all,

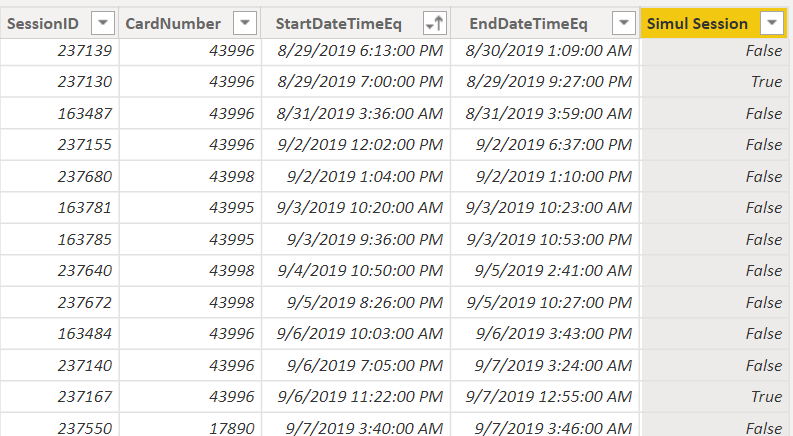

We have a Sessions table that registers logs into a system:

| SessionID | CardNumber | StartDateTimeEq | EndDateTimeEq |

| 1 | 100 | 01/09/20 09:35 | 01/09/20 10:18 |

| 2 | 102 | 01/09/20 07:10 | 01/09/20 08:15 |

| 3 | 134 | 01/09/20 10:56 | 01/09/20 11:01 |

| … | … | … | … |

Mock data is attached

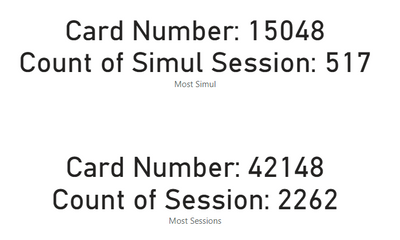

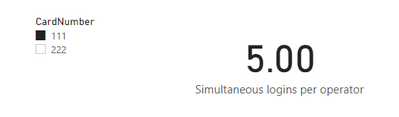

Card number uniquely identifies a user. Each login is uniquely identified by a SessionID (no SessionID dupes in the table). A user can log into the system thru multiple access points and can therefore have simultaneous logins. We are trying to measure the average number of simultaneous logins per user. This is an intensive operation (nested SUMX, FILTER) and the Session table has 1.5 million rows. Below is the measure that we currently have. It is way too slow unless calculated over small chunks of the table. Any ideas on how to make it faster?

Many thanks

Simultaneous logins per operator =

VAR auxT_ =

ADDCOLUMNS (

DISTINCT ( Sessions[CardNumber] ),

"@SimultaneousLogs",

VAR sessionsList_ =

CALCULATETABLE (

SUMMARIZE (

Sessions,

Sessions[SessionID],

Sessions[StartDateTimeEq],

Sessions[EndDateTimeEq]

)

)

RETURN

0

+ SUMX (

sessionsList_,

COUNTROWS (

FILTER (

sessionsList_,

[StartDateTimeEq] > EARLIER ( [StartDateTimeEq] )

&& [StartDateTimeEq] < EARLIER ( [EndDateTimeEq] )

&& [SessionID] <> EARLIER ( [SessionID] )

)

)

)

)

RETURN

AVERAGEX ( auxT_, [@SimultaneousLogs] )

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes.

I tried calculated column with 520k+ rows, it takes 1min or so to load and calculate; I think it's worthwhile. On one hand, the attribute of a specific session is static; on the other hand, measures to slice and dice the data model on the fly takes a blink of an eye only.

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's not exactly what I needed but you've given some pretty good and useful ideas

Thanks very much

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @CNENFRNL

So what would be the result of simultaneous logins in the table above? the sum of sessions that are non-primary?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes.

I tried calculated column with 520k+ rows, it takes 1min or so to load and calculate; I think it's worthwhile. On one hand, the attribute of a specific session is static; on the other hand, measures to slice and dice the data model on the fly takes a blink of an eye only.

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

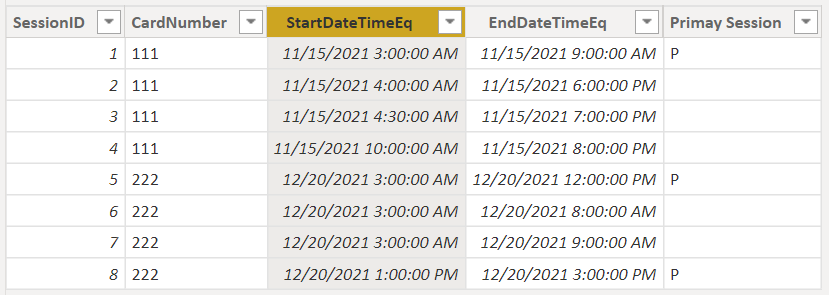

I'd spot "Primary Sessions" defined this way,

- [StartDateTimeEq] doesn't fall in between any other sessions (like session 1 and 8 below)

- longest session timespan among all sessions with the same [StartDateTimeEq] (like 5 below, there're also such cases in your mock dataset)

a coarse calculated column measure does the trick,

Primay Session =

VAR st = SESSIONS[StartDateTimeEq]

VAR e = SESSIONS[EndDateTimeEq]

VAR span = e - st

VAR sessionList = FILTER( SESSIONS, SESSIONS[CardNumber] = EARLIER( SESSIONS[CardNumber] ) )

RETURN

IF(

ISEMPTY(

FILTER(

sessionList,

SESSIONS[StartDateTimeEq] < st

&& st < SESSIONS[EndDateTimeEq]

)

)

&& ISEMPTY(

FILTER(

sessionList,

SESSIONS[StartDateTimeEq] = st

&& SESSIONS[EndDateTimeEq] - SESSIONS[StartDateTimeEq] > span

)

),

"P"

)

As you see, it's already complex enough even in calculated column; I've got no time for the moment to author an equal measure for viz now.

In fact, it's a self-join calculation with sessionList table for each CardNumber; I'm expecting much better performance on DB end. I'll give it shot later.

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, the mockup dataset is too small, hard to author an elegant measure based on it, but

ADDCOLUMNS (

DISTINCT ( Sessions[CardNumber] ),

"@SimultaneousLogs",

VAR sessionsList_ =

CALCULATETABLE (

SUMMARIZE (

Sessions,

Sessions[SessionID],

Sessions[StartDateTimeEq],

Sessions[EndDateTimeEq]

)

)

...

this part seems weird to me when interpreted in plain English,

for each unique Sessions[CardNumber], Sessions table is grouped by [SessionID]+[StartDateTimeEq]+[EndDateTimeEq].

As you mentioned that no SessionID dupliate in the table, why bother to Summarize it? Even more, impossible that there are some records with the same combination of [SessionID]+[StartDateTimeEq]+[EndDateTimeEq].

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @CNENFRNL

Good points.

1. I've added some mock data

2. The actual table has many more than those 4 more columns. That's why I'm using the SUMMARIZE, as a way to grab only the columns I need. I guess we could use something like SELECTCOLUMNS, but don't think that will have a significant performance impact

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

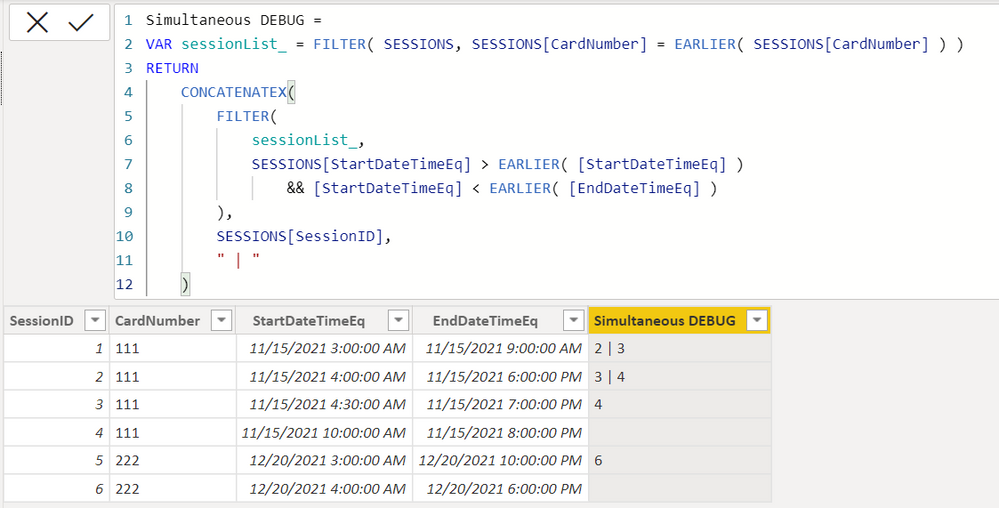

Frankly speaking, I didn't catch the underlying logic of your measure because your measure evaluates like this, which seems unlogical to me,

I replicate the logic in calculated column for debugging,

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 105 | |

| 105 | |

| 87 | |

| 73 | |

| 66 |

| User | Count |

|---|---|

| 122 | |

| 112 | |

| 98 | |

| 79 | |

| 72 |