- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Incremental and Max Memory usage for a P1

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Incremental and Max Memory usage for a P1

Hi,

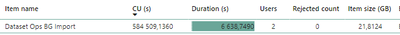

I am currently in the process of migrating our Analysis Services (AAS) cube to Power BI. I have successfully transferred the full model into a PBIX file and set up incremental refresh for it. Our system is under a P1 license, and the dataset size does not exceed 7.2 GB according to the administrative report.

I can load the entire dataset into the report without any issues after the initial publication. However, I encounter a problem with the incremental refresh (during the second refresh), where it fails:

| Resource Governing: This operation was canceled because there wasn't enough memory to finish running it. Either reduce the memory footprint of your dataset by doing things such as limiting the amount of imported data, or if using Power BI Premium, increase the memory of the Premium capacity where this dataset is hosted. More details: consumed memory 4561 MB, memory limit 3264 MB, database size before command execution 22335 MB. See https://go.microsoft.com/fwlink/?linkid=2159753 to learn more. |

Firstly, I am puzzled by the 22 GB size mentioned, which I also see in the Fabric Metrics app, but this contradicts what is shown in the admin settings :

Secondly, I don't understand why the failure occurs during the incremental refresh and not during the full refresh.

Could you provide any insights or suggestions on this matter? Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JohnnyDax ,

I have faced this issue many times. I can help if you can give me some details.

Is your dataset configured to large dataset format in setting. If not, please do that and see the actual size of the dataset.

The moment you set up incremental refresh and deploy, the first step that you must be doing is a one time full refresh. Can you tell the size of the dataset after full refresh.

Second thing, do you have any calculated columns in the report which might be consuming lot of memory.

Please let me know the response, I will try my best to resolve as I myself have faced this multiple times.

Thanks,

Pallavi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @pallavi_r ,

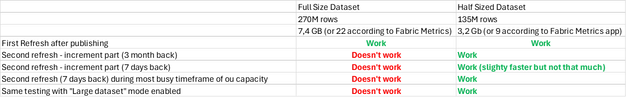

Thank you for your answer, i configured into "large dataset" setting with no luck. It still say 7,2 GB from the admin center and 22 GB from the Fabric Metrics App. The one time full refresh work fine, it's the second one, in increment that fail (only for full dataset size, for half ot if no issue).

I have no calculated columns, the heaviest table is directly loaded from a azure synapse pool with no PowerQuery step except the filter on RangeStart/RangeEnd date.

I guess my last option is through XMLA endpoint but i don't know how to automatize the refresh process through SMSS or Tabular Editor.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JohnnyDax

There won't be any difference in scheduling the refresh of dataset. it is irrespective of full refresh, incremental refresh, partition based refresh. XMLA end point Partitioned based resfresh is one time deployment activity. and after that we just need to trigger/schedule the refresh. it will do the incremental refresh.

You must have already done the desktop setting for incremental. What is the incremental range - is it weekly, monthly, quarterly.

1. Go to workspace setting. And copy the workspace connection and login to SSMS with this connection.

2.better to cleanse all the data by writing a view that we can modify outside of pbi to take one day range.

3.Click on full refresh from power bi service

4.It will create partitions as per your incremental configurations

5.Go to ssms->go to the dataset-> right click on partitions

6.individually run the partitions by clicking on the partion and select process data

7.After all partions are done, Right click on the entire dataset->process database->select process recalc

8.Now this is done, you can trigger or schedule the refresh is usual way. it would work incrementally

Few things to take note. I) Query folding should be taken care of. II) does not work well with git integration (this is what i had faced in the past, not sure if any new update is there).

I followed this video from guy in a cube - https://www.youtube.com/watch?v=5AWt6ijJG94

Please try this out and let me know if you have face any challenges.

Thanks,

Pallavi

Thanks,

Pallavi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'll try this and keep you inform, i can't do it for the next 2 weeks so i can be long to answer sorry for that. Thank you for the method

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's fine @JohnnyDax . Happy to help you anytime you start this. Thanks, Pallavi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JohnnyDax ,

I get it now. The exact same issue I was also facing for the first time. I went for xmla end point partitioned based refresh from SSMS. Its not difficult to automate. But answer me if you have CI/CD devops configured. It doesnt work that well with incremental setting. Let me share if there is any already available blog. If not there I will give you the full steps in next comment.

Thanks,

Pallavi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We do have CI/CD Devops but only for the ADF/Synapse part.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi , @JohnnyDax

That's a strange result from your test, the full refresh and the first incremental refresh both contain the largest amount of data and they both work. Perhaps you could adjust its refresh frequency to control the amount of data in one incremental refresh to try.

Based on the above information, if you are a Power BI Pro licensee, you can create a support ticket for free and a dedicated Microsoft engineer will come to solve the problem for you.

It would be great if you continue to share in this issue to help others with similar problems after you know the root cause or solution.

The link of Power BI Support: Support | Microsoft Power BI

For how to create a support ticket, please refer to How to create a support ticket in Power BI - Microsoft Power BI Community

Best regards.

Community Support Team_Scott Chang

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @v-tianyich-msft ,

I've experimented with several scenarios, and all seem to lead to the same outcome: the heaviest version fails during incremental refresh.

We're using P1 Capacity, which I believe has a maximum of 25 GB of RAM. According to the Fabric Metrics App, our full dataset is nearing this limit (22GB), which is puzzling since the Capacity Admin Center shows only 7.2 GB. It's possible the system accounts for the maximum dataset size, hence the larger figure during refreshes. I also suspect that the entire dataset might be cached during the incremental refresh, which is the only explanation I can think of for these issues. With half the dataset, it works fine as it stays well below the 25 GB limit.

This behavior seems unusual since I would expect the service to load only the incremental part, not the entire dataset, into RAM. From what I've read in several blog posts, large datasets might not be well-suited for the built-in incremental refresh feature of the Power BI Service. It seems more feasible to use the XMLA endpoint. I tried to manually refresh only the necessary partitions, which has worked for me. However, I'm unsure how to automate this process at specific times.

Could you provide any insights or suggestions on how to better manage or automate this process? We're using Tabular Editor 2 or SSMS.

Thank you for your help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JohnnyDax

Can you please tell me what is your dataset size after full refresh when large dataset format is enabled. Please check the dataset size in Power BI Service -> Workspace->Manage group storage. Please check the individual dataset size.

Thanks,

Pallavi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @pallavi_r ,

Short answer : The full dataset is probably 22 GB.

Long answer :

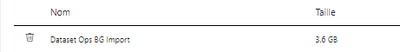

Power BI Service -> Workspace->Manage group storage doesn't seem very reliable at first sight.

Yesterday when i uploaded the full dataset it said 7,2 GB, and the half-dataset 3,6 GB.

But on the fabric metric app i had 22 GB for the full one and 9 GB for the half-one.

I left the half dataset the whole night on the workspace to test an increment refresh in "normal" condition, and it worked well like usual. However this time in Power BI Service -> Workspace->Manage group storage it does say "9 GB" like in the Fabric Metrics App. I suppose there is some sort of delay in this page depending on which state of refresh is the dataset. (Or maybe it's just my navigator cache which induce me in error).

Do you think the 22 GB is too much for a P1? Even tho i try to refresh only a small part of it ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JohnnyDax ,

Few points and my learning from this issue in the past:

Point 1-

Resource governing error occurs if refresh is taking lot more memory and cpu usage, so at that time the refresh fails and stops. I have noticed that this time memory cache is not clear. And on top of this error, if again and again we try to re-refresh, then it shows memory increase continually with each refresh. So better to republish and refresh, rather then re-refreshed memory failed dataset refresh. That's why we will see the 9 to 14 to 25 weird and bloated memory size.

Point 2:

XMLA endpoint partitioned based refresh is th optimum way to deal with this. Normal incremental refresh at times does not work for large dataset format. Probably for a large dataset 7 gb+, incremental is not working with normal setup. Trial and tested by me too. The same issue exactly I had got. XMLA refresh saved me 🙂

Point 3:

For P1 capacity (irrespective of full or incremental), dataset size should be below 14 gb. It has cap of 25 GB, which is like when we refresh the size gets almost doubled. 25/2 = 12.5. Till 12.5-13 it works perfectly fine, the moment it reaches 13-14 gb, the dataset even if we are doing incremental refresh it sometime fails giving memory issue.

Thanks,

Pallavi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm making more test and the error message doesn't seem consistent, now it speak about a 25Gb max memory usage when it was >5 GB before :

Resource Governing: This operation was canceled because there wasn't enough memory to finish running it. Either reduce the memory footprint of your dataset by doing things such as limiting the amount of imported data, or if using Power BI Premium, increase the memory of the Premium capacity where this dataset is hosted. More details: consumed memory 25480 MB, memory limit 25348 MB, database size before command execution 251 MB. See https://go.microsoft.com/fwlink/?linkid=2159753 to learn more.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I followed up on the incremental refresh issue by testing with half of the dataset, and it appears to work successfully. Although this solution is manageable, ideally, I would prefer to utilize the full dataset for our analyses.

It seems that the full data volume might be overwhelming the system, but if there are any additional insights or recommendations that could help us accommodate the entire dataset without compromising performance, I would greatly appreciate your advice.

I have already minimized the cardinality for our main table, which contains approximately 270 million rows and accounts for 95% of the dataset size. This table has been optimized with the fewest columns necessary and an efficient primary key setup.

Despite these optimizations, I'm still facing challenges with managing the full dataset during the incremental refresh process. If there are further optimization strategies or adjustments within Power BI that could help manage such a large dataset more effectively, I would be keen to learn about them.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 56 | |

| 19 | |

| 18 | |

| 18 | |

| 9 |