- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Incremental Refresh for Dataflow calling Source AP...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Incremental Refresh for Dataflow calling Source API Multiple Times

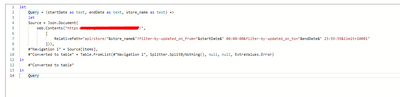

We have Dataflows that utilise API Sources that we would like to refresh incrementally and the incremental refresh is not working as expected.

The issue we are seeing is that each time the incremental refresh runs, Power BI makes multiple calls to the source API. While this doesn't present a problem for most of our sources, we have a couple that provides larger result sets and is memory intensive, making multiple calls to the source multiple times based on the number of the past days before the last day. It uses too much memory and ultimately brings our server to overcapacity.

A couple of things to note:

The Dataflow has one Entity (The API Source)

There are multiple transformation steps (e.g. taking the JSON Doc, converting it to a table, applying data types, etc.)

Situation: We are receiving many power BI requests through API. We need to review the performance of some dataflows.

We have some dataflows doing a call to an internal company API. These dataflows were developed to enable the Incremental refresh option with the date/time column.

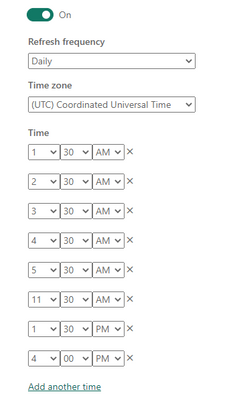

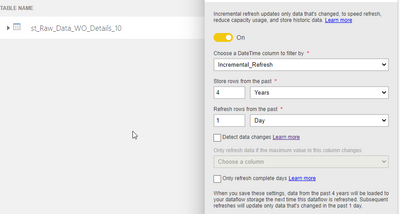

In the incremental set-up configuration, it is defined as keeping the data for four years and performing the incremental update for the last day. These dataflows were configured to refresh hourly.

For knowledge:

Updates were scheduled for early morning to facilitate the identification of incoming requests and performance evaluation

The original dataflows are set up to be refreshed from 9am to 5pm - 8x/day

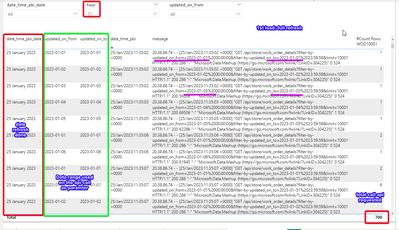

Incremental_refresh column start date = 01/01/2023

We are just limiting the year 2023 data to understand how many API calls power Bi is making. Around 25 dataflows are running and collecting data from different tables/parts of the system.

We collect data from a mobile app database and do not use a direct query to the database or gateway access.

The database is MongoDB.

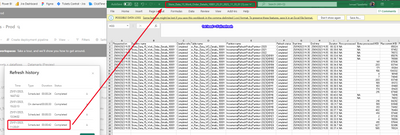

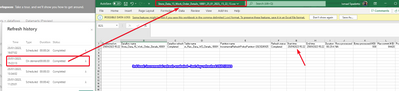

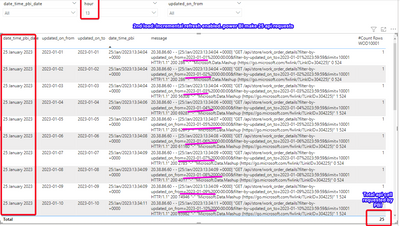

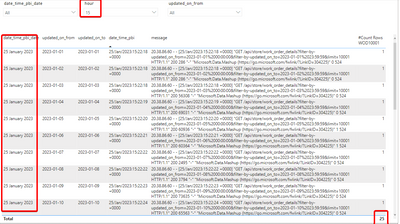

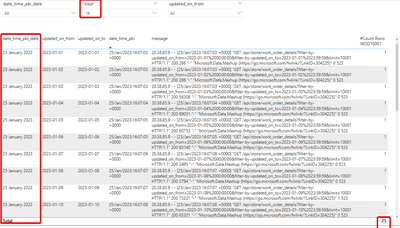

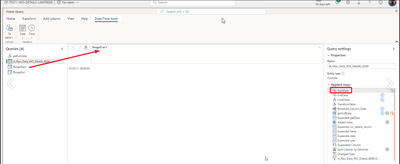

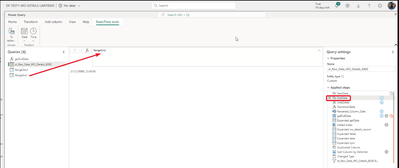

When we look at the Power BI update histories, it appears to be performing its function correctly. (Images below)

1st update = full update

2nd update = 1 day

Here is the point now.

When we are analyzing the number of MS Power BI requests using the API for our server, it seems every time, it is going through/passing the initial dates (01/01 to 24/01) before effectively making the last date in case 25/ 01

Below is a print of the 1.30pm, 3.22pm & 4.07pm

The same number of API call has been observed in the 3rd and 4th refreshes

Does anyone know why PBI would be redoing the API call every day and informing that only the last day (01/24 in this case) was executed in the refresh history?

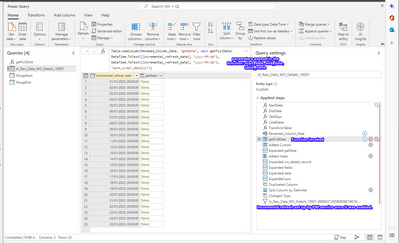

Here are some screenshots that may help to give more details of the steps.

I would really appreciate it if someone could give an idea or advise how to limit the number of API sent by Power BI/MS

Kind regards,

Ismael

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi people,

My issue has been fixed using the following instructions.

We use the RangeStart and RangeEnd parameters created automatically by configuring the incremental update in Dataflow to create the list of dates used in the API.

First, we create a list of dates and after we have a function for each date row passing the range period as a parameter to this function.

The list of dates will be managed/increased by Power BI background

@OllieSvT Hope this can help you.

kind regards,

Ismael

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

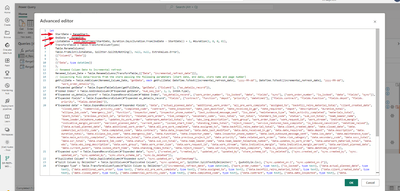

Can you post the M code for your Power Query queries? The issue above turned out to be caused by inefficient code.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

let

Source = Json.Document(Web.Contents(Url)),

Navigation = Source[items],

#"Converted to table" = Table.FromList(Navigation, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Columns" = Table.ExpandRecordColumn(#"Converted to table", "Column1", {"dateTime", "date", "value", "valid", "invalid", "missing", "completeness", "quality"}, {"DateTime", "Date", "Value", "valid", "invalid", "missing", "completeness", "quality"}),

#"Changed column type" = Table.TransformColumnTypes(#"Expanded Columns", {{"DateTime", type datetime}, {"Date", type date}, {"Value", type number}, {"valid", Int64.Type}, {"invalid", Int64.Type}, {"missing", Int64.Type}, {"completeness", type text}, {"quality", type text}}),

#"Inserted time" = Table.AddColumn(#"Changed column type", "Time", each DateTime.Time([DateTime]), type nullable time),

#"Added TID" =

Table.AddColumn(

#"Inserted time",

"Time_ID",

each

Text.PadStart(

Text.From((

Number.Round(

Number.From(

Time.From(Number.Round(Number.From(Time.From([DateTime]))*(24*60/15))/(24*60/15)) //Create rounded 15 min time interval (96 divisions for TimeslotID)

)

*96)

+1))

,2,"0")),

#"Added TSID" = Table.AddColumn(#"Added TID", "Timeslot_ID", each Text.Combine({

Text.From(Date.Year([DateTime])),

Text.PadStart(Text.From(Date.Month([DateTime])),2,"0"),

Text.PadStart(Text.From(Date.Day([DateTime])),2,"0"),

Text.From([Time_ID])

})),

#"Change TimeID type" = Table.TransformColumnTypes(#"Added TSID", {{"Timeslot_ID", Int64.Type}, {"Time_ID", Int64.Type}}),

#"Removed columns" = Table.RemoveColumns(#"Change TimeID type", {"Time_ID"}),

#"Reordered columns" = Table.ReorderColumns(#"Removed columns", {"DateTime", "Date", "Time", "Timeslot_ID", "Value", "valid", "invalid", "missing", "completeness", "quality"}),

#"RF_Parkend-incremental_refresh" = Table.SelectRows(#"Reordered columns", each DateTime.From([DateTime]) >= RangeStart and DateTime.From([DateTime]) < RangeEnd),

#"RF_Parkend-4461746554696D65-autogenerated_for_incremental_refresh" = Table.SelectRows(#"RF_Parkend-incremental_refresh", each DateTime.From([DateTime]) >= RangeStart and DateTime.From([DateTime]) < RangeEnd)

in

#"RF_Parkend-4461746554696D65-autogenerated_for_incremental_refresh"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm fairly sure I see the problem here: incremental refresh will only make your refresh faster if the date/time filter is passed back to the data source, otherwise you're going to be loading all the data from the source and filtering it in the Power BI Service. I can see that the filter is not being passed back to the source here: the URL in your code is asking for all the data between 1920 and 2050:

https://environment.data.gov.uk/hydrology/id/measures/5adcd239-4420-40b5-abe2-69082f9e24ff-rainfall-...?mineq-date=1920-01-01&max-date=2050-01-01&_limit=2000000

You need to rewrite your code so the date filters specified in RangeStart and RangeEnd are passed back to the mineq-date and max-date parameters in the URL.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I did think this may be the case, I had originally tried to include the parameters as drynamic ranges in the url but get caught in a loop whereby I can't save the dataflow as I have dynamic ranges. I am confused where to go from here! Thanks

let Url = "http://environment.data.gov.uk/hydrology/id/measures/5adcd239-4420-40b5-abe2-69082f9e24ff-rainfall-t..."&RangeStart&"&max-date="&RangeEnd&"&_limit=2000000", Source = Json.Document(Web.Contents(Url)), Navigation = Source[items], #"Converted to table" = Table.FromList(Navigation, Splitter.SplitByNothing(), null, null, ExtraValues.Error), #"Expanded Columns" = Table.ExpandRecordColumn(#"Converted to table", "Column1", {"dateTime", "date", "value", "completeness", "quality"}, {"DateTime", "Date", "Value", "completeness", "quality"}), #"Changed column type" = Table.TransformColumnTypes(#"Expanded Columns", {{"DateTime", type datetime}, {"Date", type date}, {"Value", type number}, {"completeness", type text}, {"quality", type text}}), #"Inserted time" = Table.AddColumn(#"Changed column type", "Time", each DateTime.Time([DateTime]), type nullable time), #"Added TID" = Table.AddColumn( #"Inserted time", "Time_ID", each Text.PadStart( Text.From(( Number.Round( Number.From( Time.From(Number.Round(Number.From(Time.From([DateTime]))*(24*60/15))/(24*60/15)) //Create rounded 15 min time interval (96 divisions for TimeslotID) ) *96) +1)) ,2,"0")), #"Added TSID" = Table.AddColumn(#"Added TID", "Timeslot_ID", each Text.Combine({ Text.From(Date.Year([DateTime])), Text.PadStart(Text.From(Date.Month([DateTime])),2,"0"), Text.PadStart(Text.From(Date.Day([DateTime])),2,"0"), Text.From([Time_ID]) })), #"Change TimeID type" = Table.TransformColumnTypes(#"Added TSID", {{"Timeslot_ID", Int64.Type}, {"Time_ID", Int64.Type}}), #"Removed columns" = Table.RemoveColumns(#"Change TimeID type", {"Time_ID"}), #"Reordered columns" = Table.ReorderColumns(#"Removed columns", {"DateTime", "Date", "Time", "Timeslot_ID", "Value", "completeness", "quality"}), #"Renamed columns" = Table.RenameColumns(#"Reordered columns", {{"Value", "Value (mm)"}, {"completeness", "Completeness"}, {"quality", "Quality"}}), #"Sorted rows" = Table.Sort(#"Renamed columns", {{"DateTime", Order.Descending}}), #"RF_Parkend_API-for_incremental_refresh" = Table.SelectRows( #"Sorted rows", each DateTime.From([DateTime]) >= DateTime.From(RangeStart) and DateTime.From([DateTime]) < DateTime.From(RangeEnd)) in #"RF_Parkend_API-for_incremental_refresh"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you tried using the RelativePath option of Web.Contents to handle the parameterisation? https://blog.crossjoin.co.uk/2016/08/23/web-contents-m-functions-and-dataset-refresh-errors-in-power...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Potentially getting closer but also feel this may not be the best method.. I now have an issue where I need three batches of additional text to add on to create a full URL but I can only define the 'RelativePath' field name once in the query:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry, my mistake - I should have said to use the Query option for RangeStart and RangeEnd, and not the RelativePath option.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No worries at all, all guidance is super appreciated 🙂 I am still trying to make sense of this and found the relevant guidance pages on the Microsoft pages but won't lie that I am struggling with the correct syntax. Would you be able to demonstrate the structure of the query for the two Range sections and how to wrap the other parts of the url to this? Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here's a version of the Power Query query which I put together and which works for me:

let

RangeStartText = DateTime.ToText(RangeStart, [Format="yyyy-MM-dd"]),

RangeEndText = DateTime.ToText(RangeEnd, [Format="yyyy-MM-dd"]),

CallWebService = Json.Document(Web.Contents("http://environment.data.gov.uk/hydrology/id/measures/5adcd239-4420-40b5-abe2-69082f9e24ff-rainfall-t-900-mm-qualified/readings.json?mineq-date=1920-01-01&max-date=2050-01-01&_limit=2000000", [Query=[#"mineq-date"=RangeStartText, #"max-date"=RangeEndText, #"_limit"="2000000"]])),

items = CallWebService[items],

#"Converted to Table" = Table.FromList(items, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"measure", "date", "dateTime", "value", "valid", "invalid", "missing", "completeness", "quality"}, {"measure", "date", "dateTime", "value", "valid", "invalid", "missing", "completeness", "quality"}),

#"Expanded measure" = Table.ExpandRecordColumn(#"Expanded Column1", "measure", {"@id"}, {"@id"}),

#"Changed Type" = Table.TransformColumnTypes(#"Expanded measure",{{"@id", type text}, {"date", type date}, {"dateTime", type datetime}, {"value", type number}, {"valid", Int64.Type}, {"invalid", Int64.Type}, {"missing", Int64.Type}, {"completeness", type text}, {"quality", type text}}),

HandleError = try #"Changed Type" otherwise #table(type table [#"@id" = Text.Type, date = Date.Type, dateTime = DateTime.Type, value = Number.Type, valid = Int64.Type, invalid = Int64.Type, missing = Int64.Type, completeness = Text.Type, quality = Text.Type], {}),

#"Added Custom" = Table.AddColumn(HandleError, "Time_ID", each Text.PadStart(

Text.From((

Number.Round(

Number.From(

Time.From(Number.Round(Number.From(Time.From([dateTime]))*(24*60/15))/(24*60/15))

)

*96)

+1))

,2,"0")),

#"Changed Type1" = Table.TransformColumnTypes(#"Added Custom",{{"Time_ID", Int64.Type}}),

#"Added Custom1" = Table.AddColumn(#"Changed Type1", "Timeslot_ID", each Text.Combine({

Text.From(Date.Year([dateTime])),

Text.PadStart(Text.From(Date.Month([dateTime])),2,"0"),

Text.PadStart(Text.From(Date.Day([dateTime])),2,"0"),

Text.From([Time_ID])

})),

#"Changed Type2" = Table.TransformColumnTypes(#"Added Custom1",{{"Timeslot_ID", Int64.Type}})

in

#"Changed Type2"

Apart from the code at the beginning for passing the RangeStart and RangeEnd parameters back to the API, the other challenge was handling the situation where the API doesn't have data for the requested date and returns no data at all (rather than an empty table) - I'm handling this by using a try...otherwise statement and returning my own empty table when that happens. Can you let me know if this works for you?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I can see that in the screenshots showing the API activity there is a parameter of &limit=10001 attached to the API requests. This suggests Power Query is calling the API to try to get the schema of the response. Can you try turning off all data privacy settings for the dataflow? You can do this by going to the Options dialog in Power Query Online, going to Project/Privacy and selecting "Allow combining data from multiple sources" - this may help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi cpwebb, I have been having the exact same issue as described by ismael.

I checked my PQ online and can confirm the same thing happens even with the 'allow combioning...' option selected. I am connecting into a freely available database with an API. This has a fair usage limit on it which I am currently breaching due to the way PBI is pulling the data when using an incremental refresh. I have a temporary solution in place that works outside of PBI but I wish to develop an all encompassing dataflow for the long term and this process will be key in the long term stability of this.

Any thoughts on how to improve the efficiency of the API/Incremental Refresh process is much appreciated.

Thanks

Ollie

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.