Data Refresh Times and Duplicate/Referenced Tables

Hello,

I have a question about referencing vs. duplicating data tables. I am working with large data tables (>20 million rows), and to make the reports more efficient I break the master data table down into dimension tables (for dates, locations, etc.). To create these dimension tables I have two options: either duplicate the master data table or reference it, then remove all inapplicable columns, remove duplicates, and use the result as the dimension table.
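For illustration, the dimension-building steps described above (keep only the relevant columns, then remove duplicates) can be sketched in Power Query M. This is a minimal sketch, not my actual query: the inline `Master` table and its column names (`Date`, `Location`, `Sales`) are hypothetical stand-ins for the real master data.

```powerquery
let
    // Hypothetical stand-in for the master data query (the real one has >20 million rows)
    Master = #table(
        type table [Date = date, Location = text, Sales = number],
        {
            {#date(2024, 1, 1), "North", 10},
            {#date(2024, 1, 1), "South", 20},
            {#date(2024, 1, 2), "North", 15}
        }
    ),
    // Keep only the column(s) that belong to the dimension
    LocationOnly = Table.SelectColumns(Master, {"Location"}),
    // Remove duplicates so each location appears exactly once
    DimLocation = Table.Distinct(LocationOnly)
in
    DimLocation
```

In a referenced query, the first step would simply point at the master query by name instead of defining the table inline; in a duplicated query, the master query's own source steps would be copied in before these two steps.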

When I duplicated the table and tried the data refresh on the report in Power BI Service, I got time-out errors because the refresh was taking too long. I then changed my queries to create the dimension tables by referencing the master data table instead.

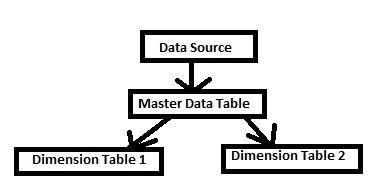

Although the refresh time has gone down considerably now that I reference the master data table to create the dimension tables, it appears that Power BI doesn't hit the main data source just once and then refresh the dimension tables. Instead, it hits the main data source, gets the master table, and updates dimension table 1, then hits the source again, updates the master data table, and updates dimension table 2.

What I'm struggling to understand is why the refresh time in the Service is considerably lower (it went down from over 5 hours to 45 minutes) when the data is being loaded from the main data source the same number of times as it would have been if I had duplicated the table. Also, which approach should we use for creating dimension tables?

Any help or insight would be greatly appreciated.

Thank you!

20M rows is not considered large (unless you have gazillions of columns).

Consider using Table.Buffer if you keep re-using a source table within a single query. (It doesn't help across queries.)

Use Query Diagnostics to find out for sure where your mashup is spending its time, or use the SQL Profiler option.
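As a rough sketch of the Table.Buffer suggestion, the query below buffers a table once and then derives two dimension tables from the buffered copy inside the same query. The inline `Master` table and its column names are hypothetical placeholders for a real source, and whether buffering actually helps depends on the source and table size, so check with Query Diagnostics before relying on it.

```powerquery
let
    // Hypothetical stand-in for the source table; replace with your own source step
    Master = #table(
        type table [Date = date, Location = text, Sales = number],
        {
            {#date(2024, 1, 1), "North", 10},
            {#date(2024, 1, 1), "South", 20},
            {#date(2024, 1, 2), "North", 15}
        }
    ),
    // Load the table into memory once; later steps in THIS query read the buffered copy
    Buffered = Table.Buffer(Master),
    // Two dimension tables derived from the same buffered table
    DimLocation = Table.Distinct(Table.SelectColumns(Buffered, {"Location"})),
    DimDate = Table.Distinct(Table.SelectColumns(Buffered, {"Date"})),
    // Return both as a record, since a single query yields a single value
    Result = [DimLocation = DimLocation, DimDate = DimDate]
in
    Result
```

The buffer lives only for the evaluation of this one query, which is why it does not help when the dimension tables are separate queries that each reference the master query. Buffering also holds the whole table in memory, so it is a trade-off for very wide or very long tables.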
