Question on data limitation

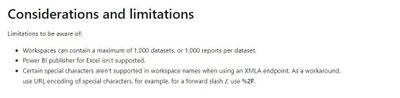

I am in the early stages of developing a data governance process at work. Does it make sense to require everyone who publishes a report to go through the Power BI deployment pipeline process? I am skeptical because we already have an ecosystem of 140 workspaces, and only about 20% of them currently use deployment pipelines. If we create a governance policy that requires everyone to go through the deployment pipeline process, this would result in 300+ workspaces. My concerns are: does this become too monstrous to manage? What is the best practice? And will I run into data storage issues? I know Premium capacity allows us to have up to 100 TB of data. The 1000 dataset limitation also scares me. What does this mean for pipeline deployment?
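As a rough check on the 300+ figure, here is a minimal sketch of the arithmetic. It assumes each pipeline maps to three stage workspaces (development, test, production) and that the roughly 20% of workspaces already on pipelines stay counted as they are; both are assumptions for illustration, and the function name is hypothetical.

```python
def projected_workspaces(total, share_in_pipelines, stages_per_pipeline=3):
    """Estimate the workspace count if every remaining workspace adopts a pipeline.

    Assumption: workspaces already in pipelines are left unchanged, and each
    remaining workspace becomes `stages_per_pipeline` stage workspaces.
    """
    already_in_pipelines = round(total * share_in_pipelines)
    remaining = total - already_in_pipelines
    return already_in_pipelines + remaining * stages_per_pipeline


# 140 workspaces, about 20% already using pipelines
print(projected_workspaces(140, 0.20))  # 364
```

Under these assumptions the estimate lands above 300, in line with the figure quoted. Using fewer stages per pipeline, or sharing stage workspaces across reports, would lower it.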

Hi @andrewmvu41 ,

The following article covers best practices for deployment pipelines; you could refer to it:

https://learn.microsoft.com/en-us/power-bi/create-reports/deployment-pipelines-best-practices

Best Regards

Lucien
