- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Unpack .blob files in PowerBI

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Unpack .blob files in PowerBI

Hi!

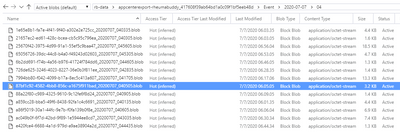

I have created a Blob Storage, where daily data from Azure App Insights are placed in .blob format.

I can easily connect to the storage, but I have a hard time unpacking the files further. The screenshot below is an example of a line with an event. I can use Split by Delimiter in an array of different ways and rename columns, but the issue then is that not all lines are similar in the sequence and content, e.g. not all lines have a UserID (if the event happened before an user was created, such as onboarding-events). It's clear that there is a logical structure to the way data is created and I would love if there was a way for it to split into columns based on that structure.

Can anyone help?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should be able to apply Json.Document to the Contents column you are showing. Also, it looks like there are only a few types of files/data structures. You could disable load on the query you show, and then reference it in 2-3 other queries where you first filter using an identifying keyword before you apply the right Json.Document and navigation steps. You could then have these as separate tables in your model, or further transform them to match before you append them back together if appropriate.

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hiya. Thanks for your help in solving this.

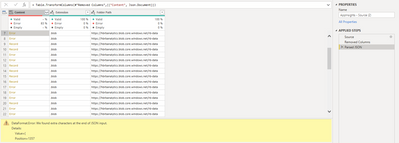

Apparently a large portion of the data rows are not in JSON-format, and it's independent of data-structure (I have found there are two basic ones). Weird since the attributes are similar for those it recognizes as JSON and those it does not. I guess it does not make sense to have half of the rows automatically divided in a separate table, if I still have to do it manually on the other half, and then stich it together. Any other suggestions?

(Another idea would be to ask the people providing the .blob data if they can nummerate the columns or change the structure to something more similar to regular .csv).

But I have made the Source-query, used Parse to Text (before I used Combine on the binaries), disabled load, referenced it from two separate queries based on the two different data structures and then manually used 6 - 10 x SplitColumns, then finally appended them together. The data is correctly presented in columns, but my concern is the speed. It's faster than before, where I used Combine rather than Parse to Text, but it still takes 5 hours to load 1.2GB data. Any suggestions on how to make it faster? Would it make it faster if I found a way to avoid all the SplitColumns and appending?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous

as I already suggested you can try some "try otherwise" to try several data transformation in your TransformColumnns-function

BR

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You could try connecting to that folder and then adding a custom column that uses Json.Document to parse each line so you can pull out the data.

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mahoneypat

Hi - thank you for the suggestion. It returns an error - perhaps because it recognizes the files as .blob and not JSON-format.

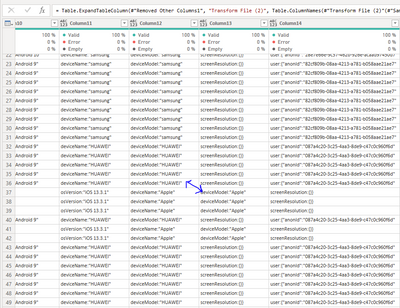

This screenshot pretty well shows the general issue with lines that have different attributes and values, when using Comma to separate the columns, where 'DeviceModel' turns up in different columns.

If only there was a way to have Power Query go through each line and detect attributes, such as DeviceModel, and put it together in same column. No matter how many attributes were before or after in the line. A solution could be to keep the original column intact and manually create an array of customized columns that extracts values, e.g. with Text.Select to find DeviceModel-attribut and the value. Although not sure how to create the code in this instance.

I am solving it manually right now by having a separate query (linked to data from same Source-query) for the different kind of blob-files, where the attributes and values are in the same order. It's extremely slow though and takes up to 10 hours to load the 1.2 GB of data into Desktop, so I have filtered out and only play with the top 5000 rows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous

to me it seems as if some of your data is json some other not. So you could try to integrate in your TransformColumns a try otherwise like TransformColumn(Previousste, {{"Content", each try Json.Document(_) otherwise OtherDataTransformation(_)}})

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Jimmy!

Thank you! I gave that a shot. It worked on the JSON and the only other data transform I knew was the XML-version, which gives a record. I guess I can save this query, disable load and make two tables, one with filtering on Record (unpacking that) and one with keeping the JSONs.

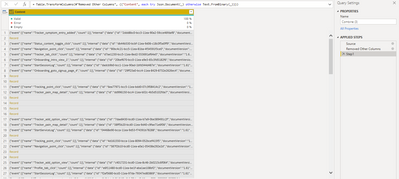

Still, this unpacks each file correctly, but it still have the following issues, so would be grateful if you (or anyone else with magic M abilities) could help with amending the solution:

1) It does not appropriately extract and divide the Content attributes and variables into correctly sequenced columns, unless I am missing something? I would still have to use SplitColumns to achieve that (and would need several tables for each structure).

2) It requires several tables and appending them together, as I am doing right now.

3) It's still very slow, takes a few hours to load the total data. I suppose that is just PowerBI query and .blob in general, maybe I need to load it in batches and then set up incremental load. Using BLOB for higher speed was a solution recommended by a consultant, because AppInsights put a maximum of 50,000 rows.

Thank you 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

These blobs seem to contain JSON structures. Do you already know which parts of these structures you need, or do you need to get all the contents without fully knowing its structure?

JSON is hierarchical, whereas Power BI relies on flat tables. At some point you will have to make a compromise.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@lbendlinHi! I'm afraid I would need all of the content without fully knowing the structure, until we are more familiar with the data and possibilities.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.