- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- RAM Problems -Excel Crashes even with a very small...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

RAM Problems -Excel Crashes even with a very small DataModel

Hi to all!

I'm working with Win 64bit, Office/Excel 365 Desktop with 32bit. Using PQ, PPivot, DaxMeasures an Excel-Tables.

I developed a small Data Model with 6-8 tables, some relationsships and the fact table is less than 10.000 rows and the DaxStudio show me the size of DataModel ist about 1,5 MB (not GB!). When am opening Excel and working a while with some query, transformations, refreshs, dax measureas etc. the RAM is growing and it comes to the point Excel doesn't work stable, sometimes crashs, messages like "not enough RAM - switch to 64bit versions etc. are appearing.

Am developing a tool for my customers, 99% of them use Excel 32bit. So I really have to avoid 64bit version...

Because of the small DataModel I can't imagine the problem causes of to much data... Filesize 2,5 MB...

Are there any other possibitlies which can cause this problem? Any tools to analyse? I have about 50 measures, could this be a problem?

Any help appreciated! I would even pay a professional who could help me to solve the problem. Am a very advanced Excel and PQ user, but beginner with Power Pivot and Dax Measures... Thanks!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete,

as I told before it's difficult to change to 64bit, because my customers have 32bit and the solution is for my customers... I will first try to find other ways.

I tried a workaround now: I'm loading the main fact table from SQL in an Excel workbook separetly and in the Main application I'm loading from this Excel-Workbook. Now its a bit more stable and the Workbook mostly uses betwenn 800 MB and 1,2 GB RAM.

But am interested to learn more about efficient transformation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete,

I have the FactTable and all the Dimension Tables with relationships in my model, I know this concept.

Indeed it is the second example - I did 4 joins/merge with other information and expand and did filtering with that...

Just now I'm trying to get rid of the concept and I lost already the merges with this concept:

I did some "lookups" with this Add.Column ... each Filialen[VertriebsID]{List.PositionOf(Filialen[FilialID], [Filiale])} and some Filtering with list concept like ... each List.Contains(FEDAS[FEDAS],[FEDAS])

After loosing all my nestedJoin/merge I will test again what happend with Ram 🙂

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I rebuild the query without any merge/join, only with Filtering with lists and "lookup"... as mentionend before, unfortunately I was not able to refresh the table... this way needs even more ressources as before...

Any other ideas beside of avoiding merge/joins to find a solution?

I understand that this refreshing & merging process needs RAM, but when the Data is loaded the Model is very small (1,5 MB), the fact tables has about 8500 Rows... Why do I have RAM problems AFTER, I mean beside of refreshing this....

I have to look for other solutions... 😞

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gehe_muc ,

My recommendation would be to work in Excel 64bit and increase your RAM to 16GB plus.

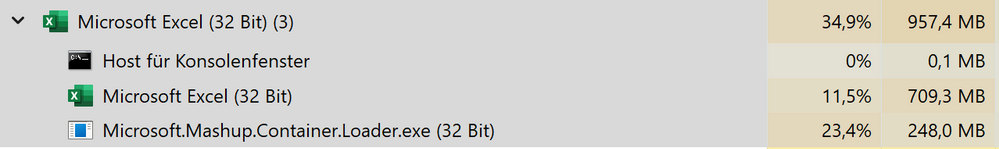

Regarding the RAM vs data size issue, this is almost completely irrelevant. It's not just the data size that potentially goes into RAM, it's running Excel, Power Query, Mashup Evaluation Containers, CefSharp processes, SAS processes etc. etc.

As I said before, I think you're really going to struggle to use Power Query in any anger in a 32bit Excel file.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yep, this was exactly the kind of thing I was talking about.

I assume that Power Query in Excel also supports query folding so, as long as your fact and reference tables both fully fold back to the server, you should see significant performance gains using these List methods.

If you want to speed it up even further, you can use List.Buffer to buffer your reference lists which will, in the sort term, increase RAM usage, but will release it far quicker, therefore reducing RAM requirement overall.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete,

thanks for your response!

I'm using 8 GB RAM on a Virtual Windows machine (I Know I can use only 2GB for Excel 32Bit). I'm loading about 150-200K rows from SQL, after that I transfer and merge 3-4 times to some Dimension Tables, after I aggregate everything and there are remaining less then 10K rows and about 15 columns. The DataModel Table shows 8.500 rows loaded. All the other dimension tables are very small and have only a few columns.

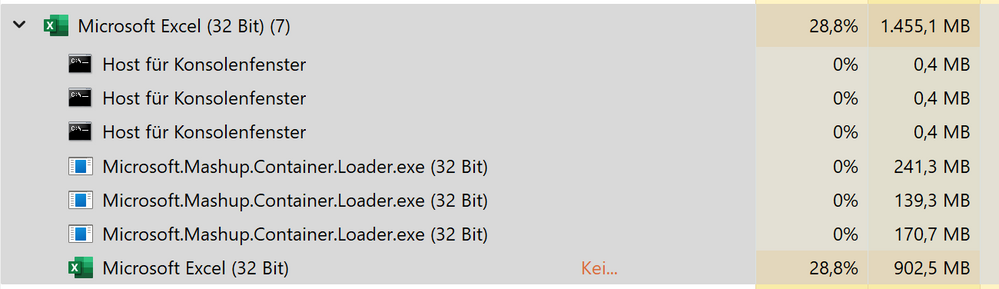

I noticed the following behavior: Even when I refresh only one small table in the DataModel (refreshing from an Excel table inside a file, 1 column, 1 row - single value, takes 1-2 sec) the RAM goes up from 143 MB to nearly 1 GB?? Even though I don't refresh the data from the SQL-Server....

What happens at this time? There ist no PQ action, no transformation at this time....

Any further ideas?

Could it be a solution to load the Data to Excel in the workbook, not storage the Data in the PowerPivot Kompressed Ram? If the file is going up to some MB doesn't mind if the solution is stable after...

Thanks!

After some minutes doing nothing at the DataModel or PQ Excel stops working:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gehe_muc ,

You're getting into the programming architecture of how Power Query technically works, which is way beyond my skillset I'm afraid.

However, I notice that you mentioned that you "transfer and merge 3-4 times". As I said before, merges are very expensive and should be avoided, especially if you're limited to 32bit RAM limitations. There are a number of alternatives/mitigations to merge costs which I'm happy to explore with you if required.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete,

thanks for trying to solve my issue! I'm german so I'm not sure what you mean bei "merge". In the Main-Query I do 4 "Joins" and adding columns and filtering after. Maybe I have to try another kind of transformation...

I'm curious about alternatives to solve my problems, thank you for sharing!

Steve

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gehe_muc ,

'Merges' ar what 'joins' are called in Power Query, and they're very intensive on system resources. Four merges/joins on one table could quite easily cause you major issues.

The primary way to avoid merges is to recreate them in the data model using relationships instead. Where you might do a left outer merge on your fact table to bring in item description from a dimension table, you would recreate this using a relationship like this:

factTable[itemID] MANY : ONE dimensionTable[itemID]. You would then use the dimensionTable[itemDescription] in any visuals where you want to display it.

If you're using merges to perform table filtering operations, there are other ways around this using list references and buffering, but I suspect it's my first example that you're actually using the merges for.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gehe_muc ,

The biggest RAM draw usually comes from SQL SAS and Mashup Evaluations. Therefore, it's possible that your M queries are not efficient in their transformations.

It's difficult to be sure on any of this, but be mindful of using 'whole table' operations, such as pivot/unpivot etc. Also, PQ merges are very expensive, so should be passed off to the data model where possible, or should utilise smart table buffering to avoid multiple calls to a reference table.

You didn't specify how much RAM your machine actually has but, as far as I'm aware, a 32bit app will only use up to 4GB RAM maximum, so you're really going to struggle to use Power Query in any anger in a 32bit Excel file.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.