- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- M Code to remove blanks and nulls from rows in a s...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

M Code to remove blanks and nulls from rows in a single sweep

I have multiple data sets with 10+ million each rows from our ERP that are subject to name and format changes by our IT team. CSV dumps are provided daily starting at 3am running until 6am or so, then I pull the extracts in the morning at 8am using PowerQuery in Excel and the data manipulation takes a couple of hours across the various files. Changes to the fields may happen during the day but I don't want to edit the new formats directly into PQ during the day because it runs the query again when I save but the changes won't be reflected in the data until tomorrow's extract.

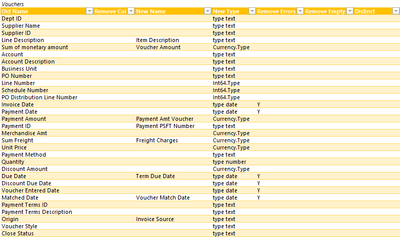

So I have created tables in Excel with metadata for the various extracts. Below is a sample subset for our vouchers with the table named tblVoucherMeta. I can remove columns my queries don't need, change the name of the column, identify the type, etc.

I then have a function that looks at the table metadata and performs the desired changes as needed. The function will do everything to the table except remove the empty rows. I need a logical OR instead of AND... in other words if any of the columns that are marked for removal are blank or null then the entire row should be deleted. I don't want to have to sweep the recordset 3 times, one for each condition, as that would vastly increase the time it takes to finish the query.

Power Query function code

/* --- fnSetMetaDataFromTable ---

MetaTable columns:

0 "Old Name" - Name from source Text

1 "Remove Col" - Delete the column? Y/N

2 "New Name" - New name of column Text

3 "New Type" - New column type Text - standard type names

4 "Remove Errors" - Remove errors from column e.g. dates Y/N

5 "Remove Empty" - Remove blanks and nulls Y/N

Not used... have to cycle for logical OR

6 "Distinct" - Eliminate duplicates Y/N

Always a difficult decision to know if column names or column positions are more likely to change.

This function is written to use column numbers as above

*/

(Source as table, MetaTable as table) as table =>

let

// MySchema = Table.Schema(Source),

/* ========================================================================

GET THE LISTS FOR ALL THE CHANGES NEEDED.

DO NOT CHANGE THE SOURCE TABLE

ALL ACTIONS ARE AGAINST THE META TABLE

*/

// Identify the unwanted columns first and then the remainder

WhatIsColumn = Table.ColumnNames(MetaTable){1},

FilterColsToDelete = Table.SelectRows(MetaTable,

each List.Contains(

{"Y", "Yes"},

Record.Field(_, Table.ColumnNames(MetaTable){1}))),

ListZapVals = Table.Column(FilterColsToDelete, Table.ColumnNames(MetaTable){0} ),

FilterColsToSave = Table.SelectRows(MetaTable,

each List.Contains(

{null, "", "N", "No"},

Record.Field(_, Table.ColumnNames(MetaTable){1}))),

// Identify the columns to rename and create the lists of old and new names

FilterColsToRename = Table.SelectRows(FilterColsToSave,

each not List.Contains(

{null},

Record.Field(_, Table.ColumnNames(MetaTable){2}))),

ListOldNameVals = Table.Column(FilterColsToRename, Table.ColumnNames(MetaTable){0} ),

ListNewNameVals = Table.Column(FilterColsToRename, Table.ColumnNames(MetaTable){2} ),

// Identify the columns to change the type. We will do this based on the old names and first filter where there is a type listed

FilterColsToChangeType = Table.SelectRows(FilterColsToSave,

each not List.Contains(

{null},

Record.Field(_, Table.ColumnNames(MetaTable){3}))),

ListChangeTypeNameVals = Table.Column(FilterColsToChangeType, Table.ColumnNames(MetaTable){0} ),

RawTypeList = Table.Column(FilterColsToChangeType, Table.ColumnNames(MetaTable){3} ),

ConvertedRawList = List.Transform(RawTypeList, each Expression.Evaluate( _, #shared) ),

// Identify the columns for distinct values

FilterColsToZapDupes = Table.SelectRows(FilterColsToSave,

each not List.Contains(

{null, "", "N", "No"},

Record.Field(_, Table.ColumnNames(MetaTable){6}))),

ListDistinctNameVals = Table.Column(FilterColsToZapDupes, Table.ColumnNames(MetaTable){0} ),

/* NOT USED but kept for reference

// With the basic Expression.Evaluate it doesn't recognize types that aren't primitive.

// This code will change any fancy terms to the primitive term

FixedTypeList = List.ReplaceMatchingItems(RawTypeList, {{ "Int64.Type", "type number"}, {"Currency.Type", "type number"}}),

// Convert the primitive (text) values to a type

ConvertedFixedList = List.Transform(FixedTypeList, Expression.Evaluate ),

*/

/* =======================================================

IMPLEMENT CHANGES FOR THE DESIGNATED COLUMNS

*/

// -- Implement actions for the columns --

// Delete the unwanted columns

RemoveCol = if not List.IsEmpty(ListZapVals) then

Table.RemoveColumns(Source,ListZapVals)

else Source,

// Change the column types to the types

ChangeColTypes = if not List.IsEmpty(ListChangeTypeNameVals) then

Table.TransformColumnTypes(RemoveCol, List.Zip({ListChangeTypeNameVals, ConvertedRawList}))

else RemoveCol,

/* ------------------------------------------------------------------

If we have to use the primitives, this search-and-replace text from biccountant.com will do it with hard-coded values

Now we have to filter yet again to find types that were not primitives and change them manually

Use the old column name before we rename the columns since renamed columns may not need a new name

This seems to work OK if no fields match the criteria, but could put in a List.Count

// Change type back to Int64

FilterColsToInt64 = Table.SelectRows(FilterColsToSave, each ([New Type] = "Int64.Type")),

ListInt64Fields = Table.Column(FilterColsToInt64, Table.ColumnNames(Rename){0} ),

ConvertFieldsToInt64 = Table.TransformColumnTypes(ChangeColTypes,

List.Transform(ListInt64Fields,

each {_, Int64.Type}

)

),

// Change type back to Currency

FilterColsToCurrency = Table.SelectRows(FilterColsToSave, each ([New Type] = "Currency.Type")),

ListCurrencyFields = Table.Column(FilterColsToCurrency, Table.ColumnNames(Rename){0} ),

ConvertFieldsToCurrency = Table.TransformColumnTypes(ConvertFieldsToInt64,

List.Transform(ListCurrencyFields,

each {_, Currency.Type}

)

),

------------------------------------------------------------------

*/

// Remove rows with errors

FilterColsToRemoveErrors = Table.SelectRows(MetaTable,

each List.Contains(

{"Y", "Yes"},

Record.Field(_, Table.ColumnNames(MetaTable){4}))),

ListErrorNameVals = Table.Column(FilterColsToRemoveErrors, Table.ColumnNames(MetaTable){0} ),

RemoveErrorsFromTable = if not List.IsEmpty(ListErrorNameVals) then

Table.RemoveRowsWithErrors(ChangeColTypes, ListErrorNameVals)

else ChangeColTypes,

/* -----------------------------------------------------------------

Remove empty rows

// Will have to cycle through each column for this since we want an OR

// for each column rather than AND. If all columns A,B,C checked at once

// all 3 would have to be empty or null to filter out the row.

FilterColsToRemoveEmpty = Table.SelectRows(MetaTable,

each List.Contains(

{"Y", "Yes"},

Record.Field(_, Table.ColumnNames(MetaTable){5}))),

ListEmptyNameVals = Table.Column(FilterColsToRemoveEmpty, Table.ColumnNames(MetaTable){5} ),

// FilterEmptyRowsFromTable = Table.SelectRows(NewTable, each [MSR History] <> null and [MSR History] <> ""),

------------------------------------------------------------------

*/

// Eliminate duplicates

RemoveDuplicateRows = if not List.IsEmpty(ListDistinctNameVals) then

Table.Distinct(RemoveErrorsFromTable, ListDistinctNameVals)

else RemoveErrorsFromTable,

// Finally, rename the columns with the new name

RenameCols = if not List.IsEmpty(ListNewNameVals) then

Table.RenameColumns(RemoveDuplicateRows, List.Zip({ListOldNameVals, ListNewNameVals}))

else RemoveDuplicateRows

in

RenameCols

Function called in other query

PromoteHeaders = Table.PromoteHeaders(Source),

MetaDataSource = Excel.CurrentWorkbook() { [Name = "tblVoucherMeta"]}[Content],

TableSetMetaData = fnSetMetaDataFromTable(PromoteHeaders, MetaDataSource),

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here are all the transforms, including the changed types and column names. This replaces the entirety of your function (minus the removal of duplicates, but that's easy to add).

h/t to Mr. von Neumann

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm still failing to understand.

In your code I see this line (truncated):

let

Source = Table.FromRows(

Json.Document(

Binary.Decompress(

Binary.FromText(

...

let

_t = ((type nullable text) meta [Serialized.Text = true])

in

type table [

#"Dept ID" = _t,

#"Supplier Name" = _t,

#"Supplier ID" = _t,

#"Line Description" = _t,

#"Sum of monetary amount" = _t,

Account = _t,

#"Account Description" = _t,

#"Business Unit" = _t,

#"PO Number" = _t,

#"Line Number" = _t,

#"Schedule Number" = _t,

...It appears the column names are still hardcoded?

If IT notifies me at 2:30pm that Finance requested to change the column name "Payment Date" to "Accrual Date", and by the way they've added a column "Accrued Amount" that I might be interested in, I can't modify the code that day to kick off a rerun since those fields aren't going to be in my raw data dump until 6am the following morning. I need my report available at 9am. If I get in at 8am and make the changes before kicking off the job it won't be available until 11am. Currently If I just make the changes to my Excel table by the time I leave the office then the job will run with the correct fields in the morning.

Every other activity in my function is able to run off the column name listing from the Excel table without hardcoding column names. Can M do the same for this requirement?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That first Source line is just the sample data that you gave me (Power Query adds some compression and (wrong) meta data). In your real world you would replace that step with the pointer to the actual source table.

You can see that by examining the "applied steps" one by one.

The M code I provided is totally flexible, and running off the reference table instructions. But beware - Power Query does not take kindly to dynamic structural changes in the output (adding/removing/renaming columns) - You will make the acquaintance of the (very unpleasant) "Evaluating..." monster.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you. Our organization is not a big Power BI user so most our PQ work is in Excel. An advantage for example is that I can take the data, find (say) users who created requisitions without ever making a corresponding purchase order and send targeted emails using VBA to get Outlook info and see if they want to cancel the req. MSFT will catch up with that at some point I suppose.

I'm unfamiliar with the "duplicate" construction you used - basically defining the action in the xp_ lines and then using Expression.Evaluate. Since I began working in PQ around 8 years ago and rarely go back to modify my code I'm sure the syntax and performance around your construction is superior, but it's new to me.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm unfamiliar with the "duplicate" construction you used - basically defining the action in the xp_ lines and then using Expression.Evaluate.

That is mainly for code readability and easier debugging. You can do that in a single step but it will be really hard to remember what it does. For example:

#"Removed Empty" = Expression.Evaluate("Table.SelectRows(Source, each not List.Contains({"""",null},[" & Text.Combine(Table.SelectRows(TransformReference, each ([Remove Empty] = "Y"))[Old Name],"]) and not List.Contains({"""",null},[") & "]))",[Table.SelectRows=Table.SelectRows,Source=Source,List.Contains=List.Contains]),

I wouldn't wish that on my enemy, and certainly not on the developer after me. There's PowerQueryFormatter.com which makes it a bit more readable:

Expression.Evaluate(

"Table.SelectRows(Source, each not List.Contains({"""",null},["

& Text.Combine(

Table.SelectRows(TransformReference, each ([Remove Empty] = "Y"))[Old Name],

"]) and not List.Contains({"""",null},["

)

& "]))",

[Table.SelectRows = Table.SelectRows, Source = Source, List.Contains = List.Contains]

)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there a good M book to have, like Rob Collie's DAX book, to get a handle on M? I find it a very dense language... between treating something between a list and a record, square vs curly brackets, the "_" operator, a lot of power is tucked into small space.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The only "book" you will ever need on M is Ben Gribaudo's Primer. It is absolutely fantastic.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My original question.

Here is standard code to remove blanks for a single column, in this case "Item Description".

#"Filtered Rows" = Table.SelectRows(#ReplaceNull, each [Item Description] <> null and [Item Description] <> "")

I can copy that statement for each column I want to remove nulls, but that's (say) 3 passes through the large data set. Is there a way I can get a list for all the columns I want to test for blanks and have them removed on a single pass? Any solution I've tried, passing a list of the columns to the Table.SelectRows or similar function and/or using the "_" operator, creates an AND function which means all the columns have to be blank in order for the row to be removed. I want the row to be removed if any column meets that condition, and to do so in a single pass.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think that should be possible. Please provide a small sample that covers the issue, and indicate the expected outcome based on that sample.

Some things to ponder: Table.ColumnNames() and List.Contains()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have created a small sample file, but I'm failing the IQ test on how to upload a spreadsheet to this forum. Try this link to pull a sample data file and the spreadsheet to run the query functions.

Based on the settings in the "Remove Empty" column I want fnSetMetaDataFromTable to remove both rows 4 and 6.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Nearly there - can you please make the OneDrive link not ask for authentication?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Give it another shot...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("pZXbTuMwEIZfJeJqV4JkPD5fllIOWqAIKrTAchHaqHS3Tao0ef/1OKmbVGwXCSlNx649h/+zpy8vR2zAANnR8dHgdOjeUrrX3XtarlJn6NiPkQGE6WlWV4tputy4mdOrC/dmzEoOgGR2PjxhkCAw7WyRyK0pY8XJJ4IAsLsJ2Jmj80nrA/xeu93rvWLw2rVp9W1WRRxCLffZvF6mZfRY1NP3rHQz43WWUyHFpspmNM6XizxzhmUKGAdF1d6klVtOPw/HN0B+h5fDH5Qds5Z7Qdhe8PC0IUma4s29fz49k3fJDZXMjdaGxaiOXo//ozxDLmLFPiO+lmAs3xOfYUIJMtnasB2glFvHbgIY9Ob6oz4HliA5UU2lzqnpBNjZX+KglQUjrdCHOAiJVii1qzQE/4DDeeldNCAk83UxhcJJFmvWgHBaS3I3PBv5HHzwzR9/SmMkxxI8hHHl8o9ushkxiH7V7tCr6KEu59FVla12UBA4NxbNHhRsFNTeNOHkAotFQ9o4ef01Uk1Yiskbs2GxncSEYefwtbB13/SSEAsPeXR29SkWzToNSqBCqfEQCcUtShGq4wfuw6CuisDBWM9BoQIbw78pPKyLfE7ngtm4xUD5nC0262KTvi0zYpFcp2/RXVnM6mlFIUc348mogSCNkf5mdK+HEz40I5uI0JeMjYVHrpzpixLxVm9HaAfBywn93Y3fwLRrfw2DRiu1VeogBg0Wm7uwF/sDEAECcw3P+k3IUMVa7zDQ1vOLy4ChStdLnyXXGBtPwjUdyjKfFats+p7mdCP21LfW+EXbtuA/rhExys7fDdamSgMlZOMahVDMW2EKuoO9rgQJDw7bANgNoMPgSxwsWA3SHmxM2t16zjuVYiexfQ5Dpyi1mOengT/g7qz6QCBM70rssZjUb4t8TqrGBJsLpAXD1HWmyvWmtieFVT0ekovtZe3yUJ0sofk6abz7gx5LDKqfhLPvpJWdfbr5k7YthJ7U49tragCz3/WmWmV5tfHVFxsvYRD6PqvqMo8mRfSYuVNVRt8e6vV6ucjK7xQS0LVgJjSPTiIAzYUCcT95pDhFFQ1o5ZQ6wkdUaG1bbz/Pw0zoeX39Cw==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"Dept ID" = _t, #"Supplier Name" = _t, #"Supplier ID" = _t, #"Line Description" = _t, #"Sum of monetary amount" = _t, Account = _t, #"Account Description" = _t, #"Business Unit" = _t, #"PO Number" = _t, #"Line Number" = _t, #"Schedule Number" = _t, #"PO Distribution Line Number" = _t, #"Invoice Date" = _t, #"Payment Date" = _t, #"Payment Amount" = _t, #"Payment ID" = _t, #"Merchandise Amt" = _t, #"Sum Freight" = _t, #"Unit Price" = _t, #"Payment Method" = _t, Quantity = _t, #"Discount Amount" = _t, #"Due Date" = _t, #"Discount Due Date" = _t, #"Voucher Entered Date" = _t, #"Matched Date" = _t, #"Payment Terms ID" = _t, #"Payment Terms Description" = _t, Origin = _t, #"Voucher Style" = _t, #"Close Status" = _t, #"Post Status" = _t, #"Voucher Source" = _t, #"Invoice Number" = _t, #"Match Status" = _t, #"Bank Code" = _t, #"Bank Account" = _t, #"Voucher ID" = _t, #"Voucher Line Number" = _t, #"Accounting Date" = _t, #"Match Line Status" = _t, #"Voucher Approval Status" = _t, #"Voucher Approval Date" = _t, #"Supplier Persistence" = _t, #"Entered By" = _t, #"Last User to Update" = _t, #"Check Number" = _t, #"Check Amount" = _t]),

#"Filtered Rows" = Table.SelectRows(Source, each not List.Contains({"",null},[Entered By]) and not List.Contains({"",null},[Check Number]))

in

#"Filtered Rows"

Do you need help with the type changes and column renames too?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I guess I do need help understanding your approach.

It appears you have hard-coded the column names and the logic. I don't control the extract - it goes to multiple departments and IT makes changes based on inputs from those departments as well as Finance. As stated in my initial request I don't want to hard-code fields or edit the query itself because Power Query will run the multi-hour query as soon as I finish editing today, and the changes won't be in the file until tomorrow morning. The point of the function is to be able to enter field changes without having to edit the query itself, have it run at 6am the next morning, and correctly manipulate the fields while I'm driving into work. You'll see that the fnSetMetaDataFromTable function has no hard-coded fields or logic. I want the process parameterized via the function.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

understood. Let me work on that. Looks like we need Expression.Evaluate for that.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Got it:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("pZXbTuMwEIZfJeJqV4JkPD5fllIOWqAIKrTAchHaqHS3Tao0ef/1OKmbVGwXCSlNx649h/+zpy8vR2zAANnR8dHgdOjeUrrX3XtarlJn6NiPkQGE6WlWV4tputy4mdOrC/dmzEoOgGR2PjxhkCAw7WyRyK0pY8XJJ4IAsLsJ2Jmj80nrA/xeu93rvWLw2rVp9W1WRRxCLffZvF6mZfRY1NP3rHQz43WWUyHFpspmNM6XizxzhmUKGAdF1d6klVtOPw/HN0B+h5fDH5Qds5Z7Qdhe8PC0IUma4s29fz49k3fJDZXMjdaGxaiOXo//ozxDLmLFPiO+lmAs3xOfYUIJMtnasB2glFvHbgIY9Ob6oz4HliA5UU2lzqnpBNjZX+KglQUjrdCHOAiJVii1qzQE/4DDeeldNCAk83UxhcJJFmvWgHBaS3I3PBv5HHzwzR9/SmMkxxI8hHHl8o9ushkxiH7V7tCr6KEu59FVla12UBA4NxbNHhRsFNTeNOHkAotFQ9o4ef01Uk1Yiskbs2GxncSEYefwtbB13/SSEAsPeXR29SkWzToNSqBCqfEQCcUtShGq4wfuw6CuisDBWM9BoQIbw78pPKyLfE7ngtm4xUD5nC0262KTvi0zYpFcp2/RXVnM6mlFIUc348mogSCNkf5mdK+HEz40I5uI0JeMjYVHrpzpixLxVm9HaAfBywn93Y3fwLRrfw2DRiu1VeogBg0Wm7uwF/sDEAECcw3P+k3IUMVa7zDQ1vOLy4ChStdLnyXXGBtPwjUdyjKfFats+p7mdCP21LfW+EXbtuA/rhExys7fDdamSgMlZOMahVDMW2EKuoO9rgQJDw7bANgNoMPgSxwsWA3SHmxM2t16zjuVYiexfQ5Dpyi1mOengT/g7qz6QCBM70rssZjUb4t8TqrGBJsLpAXD1HWmyvWmtieFVT0ekovtZe3yUJ0sofk6abz7gx5LDKqfhLPvpJWdfbr5k7YthJ7U49tragCz3/WmWmV5tfHVFxsvYRD6PqvqMo8mRfSYuVNVRt8e6vV6ucjK7xQS0LVgJjSPTiIAzYUCcT95pDhFFQ1o5ZQ6wkdUaG1bbz/Pw0zoeX39Cw==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"Dept ID" = _t, #"Supplier Name" = _t, #"Supplier ID" = _t, #"Line Description" = _t, #"Sum of monetary amount" = _t, Account = _t, #"Account Description" = _t, #"Business Unit" = _t, #"PO Number" = _t, #"Line Number" = _t, #"Schedule Number" = _t, #"PO Distribution Line Number" = _t, #"Invoice Date" = _t, #"Payment Date" = _t, #"Payment Amount" = _t, #"Payment ID" = _t, #"Merchandise Amt" = _t, #"Sum Freight" = _t, #"Unit Price" = _t, #"Payment Method" = _t, Quantity = _t, #"Discount Amount" = _t, #"Due Date" = _t, #"Discount Due Date" = _t, #"Voucher Entered Date" = _t, #"Matched Date" = _t, #"Payment Terms ID" = _t, #"Payment Terms Description" = _t, Origin = _t, #"Voucher Style" = _t, #"Close Status" = _t, #"Post Status" = _t, #"Voucher Source" = _t, #"Invoice Number" = _t, #"Match Status" = _t, #"Bank Code" = _t, #"Bank Account" = _t, #"Voucher ID" = _t, #"Voucher Line Number" = _t, #"Accounting Date" = _t, #"Match Line Status" = _t, #"Voucher Approval Status" = _t, #"Voucher Approval Date" = _t, #"Supplier Persistence" = _t, #"Entered By" = _t, #"Last User to Update" = _t, #"Check Number" = _t, #"Check Amount" = _t]),

xp = "Table.SelectRows(Source, each not List.Contains({"""",null},[" & Text.Combine(Table.SelectRows(TransformReference, each ([Remove Empty] = "Y"))[Old Name],"]) and not List.Contains({"""",null},[") & "]))",

#"Filtered Rows" = Expression.Evaluate(xp,[Table.SelectRows=Table.SelectRows,Source=Source,List.Contains=List.Contains])

in

#"Filtered Rows"

"TransformReference" is your instruction table.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("nVZNb6MwEP0rFudqT1XvCbRSpKbNimSlKurBNbPBCtjIHlfLv68JmEBasFmJi8dvZt58muMxSqBCskmiu6j9sK6AIPzDXhK93x2j1FRVwUGRF1pCMNhv95kLIAlopniFXIr2aoNQ3kgnXZVE/iWlFIBU1YSW0ogO80callsWKyeLjVIgWP1rb42N7awYuypOu+tg3xlPq6yNtjFqTQ6Ce+3vXsmLKT9ABeXtBroR+HD/Q2ypzUJmimC8JZFwjYp/mCZAssTXRnxKzmxJKd60SdZK3oaOaF1Ck80l4NWgwlcZkq7a82V2Cq4x3XmXPu2vIU7kfAuK5VRkXEPjsb+c8de055MCfso7eHcgcU7VCfS8dtMxZKdsPkN8uVi2gLnMfA3021CBHOsxToyrfEHaXmibfpj5eSqJGXbAHpSd5qtoqsK9o7H6jIab8EeBoCALU9pSbAZiAHZmLjc+li7NTVQ6YMON8QsWx6viJ+4WYjdWqTSKwbSOiyTFuvCu6biQtpNTpGi0NwqpMRDac3BcZ9EusrC111YojMeaijOJZealcAEGPgAuOH/dHXLJ9uxIcHFa0Mqth2W1WVWVkp+0+F+1IHb9b8AOlLYPil0W3lq4SV53i8me7aseF6DO35XenNYztd150NYVSnKosp5de3ERTBnppyEHdh4VavRYTLlu1X5+lDy/He9f", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"Old Name" = _t, #"Remove Col" = _t, #"New Name" = _t, #"New Type" = _t, #"Remove Errors" = _t, #"Remove Empty" = _t, Distinct = _t])

in

Source

You can do the other steps the same way.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

like so:

...

xp_remove_empty = "Table.SelectRows(Source, each not List.Contains({"""",null},[" & Text.Combine(Table.SelectRows(TransformReference, each ([Remove Empty] = "Y"))[Old Name],"]) and not List.Contains({"""",null},[") & "]))",

xp_remove_errors = "Table.RemoveRowsWithErrors(#""Removed Empty"", {""" & Text.Combine(Table.SelectRows(TransformReference, each ([Remove Errors] = "Y"))[Old Name],""", """) & """})",

#"Removed Empty" = Expression.Evaluate(xp_remove_empty,[Table.SelectRows=Table.SelectRows,Source=Source,List.Contains=List.Contains]),

#"Removed Errors" = Expression.Evaluate(xp_remove_errors,[Table.RemoveRowsWithErrors=Table.RemoveRowsWithErrors,#"Removed Empty"=#"Removed Empty"])

in

#"Removed Errors"- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry to hear about your situation

I need a logical OR instead of AND

Maybe you don't. Instead of removing rows, switch your approach to keeping rows ... where column A is not null and columnB is not null and column C is not null etc.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have 56 columns of data. Most of them are regularly populated; some can be blank (e.g. payment date) if the voucher hasn't been processed and matched. So I can accept blanks in some fields and testing for permissible blanks under various circumstances would be a spaghetti plate of if-then logic. In some cases we only know the voucher hasn't paid yet because the payment field is blank. It's only a few fields that if they're blank the record is no good to me.

As an example, PeopleSoft (our ERP) has a known bug that when exporting to .csv if it encounters special characters such as copyright or trademark symbols it interprets that as a line feed/new record indicator. We do our best to clean the data before the extract (and keep our operators from copying product descriptions including those characters into the respective fields), but there are some mandatory fields that if they're blank we know the PeopleSoft extract encountered a problem and we have to throw away the row. (We go in afterward to the source data and figure out what record had the character so we can correct the source.)

In the main body of the voucher query I have the lines to check for those missing fields. But again, if I have to make a change to which columns I want to test I have to edit the query itself and then it runs for hours.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Use Table.Buffer and Table.AddKey to speed things up.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.