- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Daily Incremental Refresh with Delta Capture and R...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Daily Incremental Refresh with Delta Capture and Reporting

Hey folks,

I need to design a process that stages and appends a daily CSV file that is a snapshot of a table in a database (no direct connection allowed). This process needs to identify if any records that changed between each day, and then make that information available to the team.

For example, if on Monday, record 1 changed valueA from "Low" to "High" then that record needs to be flagged for review in a sub-process that uses the output of this report as an input. I.e., if a record shows up on this report, then it gets manually entered into a tracking tool to investigate.

I was thinking of staging this data in a folder in SharePoint, having the dba automate an e-mail delivery of the CSV daily an then having PowerAutomate save the file into the target folder with a file name DD-MM-YYYY.CSV. And then using the PowerBI server to do an incremental refresh.

I think I can figure out the incremental refresh, but I'm not sure how I would identify and flag records that changed from day to day. Do you have any suggestions?

Day 1 Snapshot - Export table example

| ID | Monitored_Field |

| 1 | Low |

| 2 | Moderate |

| 3 | High |

The change happens in real life and ID1 escalates to "Moderate" and ID2 escalates to "High" or even "High to "Low". Items moving to High or from High are the ones I'd want to have further investigated.

Day 2 Snapshot - Export table example

| ID | Monitored_Field |

| 1 | Moderate |

| 2 | High |

| 3 | Low |

So the output report would show Record 2 and Record 3 and if it's possible to show what the original value was and what it changed to that would be awesome. I just cannot figure out how to do it.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is only an incremental refresh in terms of the file date. What you are doing in fact is storing a bunch of snapshots of (nearly) the same data.

You need to identify a column in your CSV files that can serve as unique transaction key (maybe your ID column), and then you can use that in combination with the file date to run your change tracking for inserts/updates/deletes.

Depending on how big the CSVs are and how many you keep for your comparison you may not even need the incremental refresh.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good morning! I do have unique IDs in each of the table snapshots for every record. When you say use unique key and file date, are you referring to the actual file name "mm-dd-yyyy.csv"? I also add a 'snapshot_date' field to the CSVs in the data model for snapshot selections by the user. I.e., the user can pick their 'version of history' they want to look at.

The CSVs range in file size from 500KiB to 200MiB. The daily files would be 3MiB to 8MiB.

Do you have a method for actually identifying "changed", "new" or "deleted" records over time? I cannot seem to find a good tutorial.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That seems like a manageable amount of data. I would just ingest the CSVs as is. If you have the file datetime inside the data then that is ok too, but the filename datetime is usually enough.

For the delta processing you can use the standard period over period patterns but also have a look at EXCEPT and INTERSECT.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does it matter if my data does not have an audit date time field? Unfortuantely, it doesn't look like any of the target tables have a "lastUpdated" or "LastModified" field exposed to the report layer export. So the only thing I have are exact snapshots each day of the same fields with a file name of the previous day's export and an appended 'snapshot date' field into each record of the target snapshot.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the file date is sufficient.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you. I am still kind of lost. I've read the EXCEPT, INTERSECT and a bunch of tutorials about combining two tables. But my problem is that I don't have tables or distinct queries, I have one query with appended data already in it. I.e., my single query has 03-01-2024 through 03-31-2024 data all essentially stacked on top of itself with a 'snapshot date' field.

It's escaping me how on a rolling daily basis I compare today's records to yesterday's records to identify any records that have changed since I don't have an audit field (lastModified).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

give me some sample data to work with and I can show you the mechanics. "Table" can be a physical thing, but it can also be your filter context, or a table variable.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You rock!

Day 1 Data

| ID | Name | Target_Chg_Field | Snapshot_Dt | Product |

| 1 | John | High | 03-01-2024 | Shoes |

| 2 | Mary | Moderate | 03-01-2024 | Fabric |

| 3 | Sue | Low | 03-01-2024 | Leather |

Day 2 Data

| ID | Name | Target_Chg_Field | Snapshot_Dt | Product | Value Changed |

| 1 | John | Low | 03-02-2024 | Shoes | No change |

| 2 | Mary | Moderate | 03-02-2024 | Fabric | No change |

| 3 | Sue | High | 03-02-2024 | Leather | No change |

| 4 | Zach | Low | 03-02-2024 | Guitars | No change |

Output table comparing Today to Yesterday (would be awesome if this was dynamic to any two given periods)

| ID | Name | Target_Chg_Field | Snapshot_Dt | Product | Value Changed |

| 1 | John | Low | 03-02-2024 | Shoes | High to Low |

| 3 | Sue | High | 03-02-2024 | Leather | Low to High |

| 4 | Zach | Low | 03-02-2024 | Guitars | New Record |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Really appreciate your effort on that! For some reason mine is throwing an error that it cannot find my columns that hold data that might change. I'm trying to figure out how to upload the PBIX but sanitizing the data connection is a trick. Should I just create a copy and point it to a CSV on my laptop? I'm not sure how to send the data along.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Please provide sample data that covers your issue or question completely, in a usable format (not as a screenshot).

Do not include sensitive information or anything not related to the issue or question.

If you are unsure how to upload data please refer to https://community.fabric.microsoft.com/t5/Community-Blog/How-to-provide-sample-data-in-the-Power-BI-...

Please show the expected outcome based on the sample data you provided.

Want faster answers? https://community.fabric.microsoft.com/t5/Desktop/How-to-Get-Your-Question-Answered-Quickly/m-p/1447...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Crud we're not allowed to access any kind of file sharing sites from work. I could give you some sample data with the actual header names maybe?

| ID | Overall Inherent Risk | Overall Control Effectiveness | snapshot_dt |

| 1809498 | Moderate | Satisfactory | 3/1/2024 |

| 1809499 | Low | Satisfactory | 3/1/2024 |

| 1809500 | Low | Satisfactory | 3/1/2024 |

| 1809501 | Moderate | Satisfactory | 3/1/2024 |

| 1809502 | Moderate | Satisfactory | 3/1/2024 |

| 1809503 | High | Satisfactory | 3/1/2024 |

| ID | Overall Inherent Risk | Overall Control Effectiveness | snapshot_dt |

| 1809498 | High | Satisfactory | 3/2/2024 |

| 1809499 | Low | Satisfactory | 3/2/2024 |

| 1809500 | Low | Satisfactory | 3/2/2024 |

| 1809501 | Low | Satisfactory | 3/2/2024 |

| 1809502 | Moderate | Satisfactory | 3/2/2024 |

| ID | Overall Inherent Risk | Overall Control Effectiveness | snapshot_dt |

| 1809498 | Low | Satisfactory | 3/3/2024 |

| 1809499 | Low | Satisfactory | 3/3/2024 |

| 1809500 | High | Satisfactory | 3/3/2024 |

| 1809501 | Low | Satisfactory | 3/3/2024 |

| 1809502 | Moderate | Satisfactory | 3/3/2024 |

| 1809503 | High | Satisfactory | 3/3/2024 |

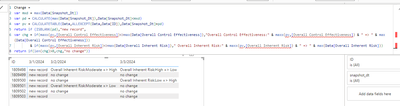

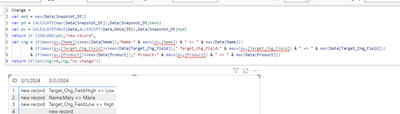

And my measure in my table:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

need more snapshot dates.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I refined the post with two morte snap shot dates of changed data. thank you so much for your help so far 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

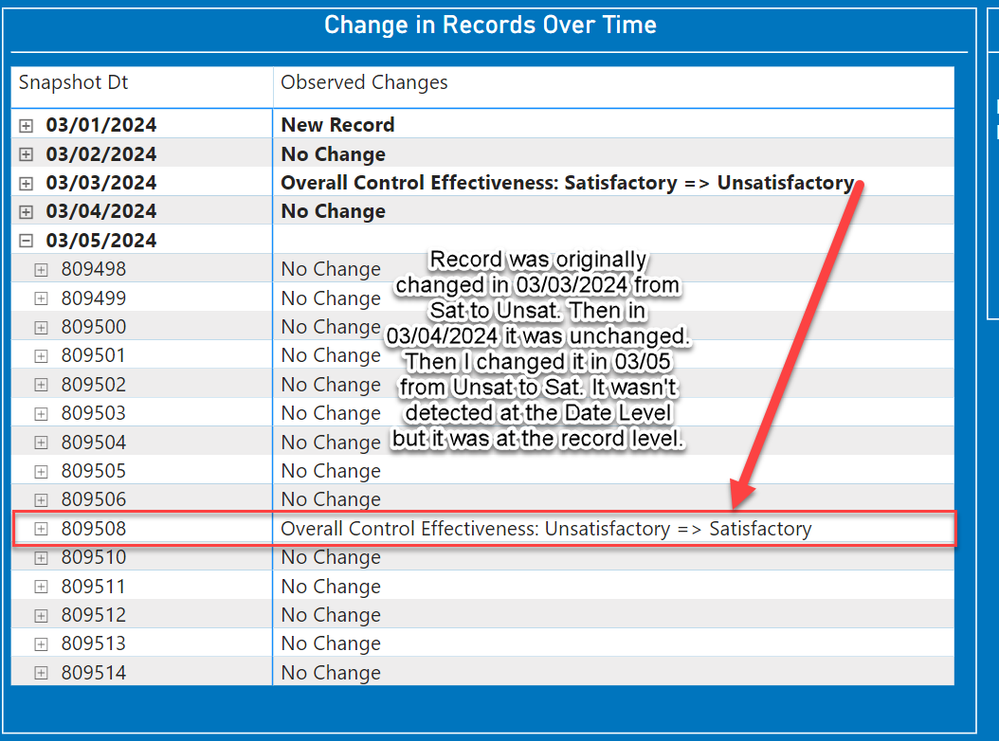

Hey man, for some reason the code is producing some interesting issues. If a record changes more than once it stops recognizing the change. It's almost like it's remembering that the original value from the date the record was loaded is now the same, and not showing it at the 'Date Level'. But it is detecting it at the 'Record Level'. Any thoughts on how to tweak that?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

you originally asked to compare between two selected snapshot dates. Now it looks more like a list of dates, Please clarify,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I apologize I wasn't more clear. I should have better described the business process. Each day a new file will be added to the SharePoint directory. So each day when the service refreshes, I need to compare the current day's file with the previous day's file to see if there were changes.

I.e., tomorrow at 12:01 AM ET on 17 Apr 2024 I will receive a file for today 16 Apr 2024. The file will be stamped 04-16-2024.CSV and will need to compare itself against 04-15-2024.CSV. Or any two date values that are passed to the filter. I.e., if I say show me 04-01-2024 and 04-02-2024, what changs, if any, occurred there?

So at the end of the year I"m going to have 366 files (Leap year this year).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you select exactly two dates then my code will compare between them. If you select more dates then the code will compare between the smallest and largest selected dates.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, it does that very well! But I noticed an interesting bug that if a record changes and then in the future that record changes again, the code doesn't detect at the "Date Level" that a change was detected. But if I expand the Date to show all of the records, it shows that the record was indeed changed.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 106 | |

| 105 | |

| 79 | |

| 69 | |

| 61 |

| User | Count |

|---|---|

| 143 | |

| 104 | |

| 103 | |

| 82 | |

| 70 |