
Error HTTP code 404 when using PySpark / Openai from Synapse Notebook

Hi,

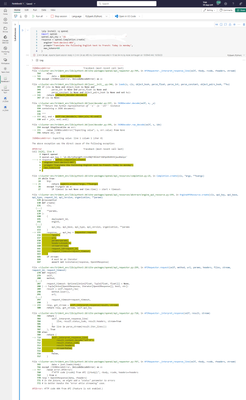

I'm trying to use Openai in a notebook with some simple PySparc code:

#Returns ok with: "Successfully installed openai-0.28.1"

I do not understand what is wrong with the first code cell!

Aslak Jonhaugen


Following up to see whether your issue got resolved.

If you have found a resolution, please share it with the community, as it can be helpful to others.

Otherwise, reply with more details and we will try to help.


Hi, I have stopped investigating the case. We got access to Azure OpenAI, and now it works as it should. So I guess we can close the case.

Thanks for the help anyway!

Regards,

Aslak


Version v3.3.1.5.2-105098986

```
Package Version
----------------------------- ----------------
absl-py 1.4.0
adal 1.2.7
adlfs 2023.1.0
aiohttp 3.8.4
aiosignal 1.3.1
anyio 3.6.2
argcomplete 2.1.2
argon2-cffi 21.3.0
argon2-cffi-bindings 21.2.0
asttokens 2.2.1
astunparse 1.6.3
async-timeout 4.0.2
attrs 22.2.0
azure-common 1.1.28
azure-core 1.26.4
azure-datalake-store 0.0.51
azure-graphrbac 0.61.1
azure-identity 1.12.0
azure-mgmt-authorization 3.0.0
azure-mgmt-containerregistry 10.1.0
azure-mgmt-core 1.4.0
azure-mgmt-keyvault 10.2.1
azure-mgmt-resource 21.2.1
azure-mgmt-storage 20.1.0
azure-storage-blob 12.15.0
azure-storage-file-datalake 12.9.1
azure-synapse-ml-predict 1.0.0
azureml-core 1.49.0
azureml-synapse 0.0.1
backcall 0.2.0
backports.functools-lru-cache 1.6.4
backports.tempfile 1.0
backports.weakref 1.0.post1
bcrypt 3.2.2
beautifulsoup4 4.11.2
bleach 6.0.0
blinker 1.6.1
Brotli 1.0.9
brotlipy 0.7.0
cached-property 1.5.2
cachetools 5.3.0
certifi 2022.12.7
cffi 1.15.1
charset-normalizer 2.1.1
click 8.1.3
cloudpickle 2.2.1
colorama 0.4.6
comm 0.1.3
conda-package-handling 2.0.2
conda_package_streaming 0.7.0
configparser 5.3.0
contextlib2 21.6.0
contourpy 1.0.7
cryptography 40.0.1
cycler 0.11.0
dash 2.9.2
dash-core-components 2.0.0
dash-cytoscape 0.2.0
dash-html-components 2.0.0
dash-table 5.0.0
databricks-cli 0.17.6
debugpy 1.6.7
decorator 5.1.1
defusedxml 0.7.1
dill 0.3.6
distlib 0.3.6
docker 6.0.0
entrypoints 0.4
et-xmlfile 1.1.0
executing 1.2.0
fastjsonschema 2.16.3
filelock 3.11.0
Flask 2.2.3
Flask-Compress 1.13
flatbuffers 23.1.21
flit_core 3.8.0
fluent-logger 0.10.0
fonttools 4.39.3
frozenlist 1.3.3
fsspec 2023.4.0
fsspec-wrapper 0.1.9
gast 0.4.0
geographiclib 1.52
geopy 2.3.0
gevent 22.10.2
gitdb 4.0.10
GitPython 3.1.31
google-auth 2.17.2
google-auth-oauthlib 0.4.6
google-pasta 0.2.0
graphviz 0.20.1
greenlet 2.0.2
grpcio 1.51.1
gson 0.0.3
h5py 3.8.0
html5lib 1.1
humanfriendly 10.0
idna 3.4
imageio 2.25.0
importlib-metadata 5.2.0
importlib-resources 5.12.0
impulse-python-handler 1.0.15.1.0.0
interpret 0.3.1
interpret-core 0.3.1
ipykernel 6.22.0
ipython 8.9.0
ipywidgets 8.0.4
isodate 0.6.1
itsdangerous 2.1.2
jedi 0.18.2
jeepney 0.8.0
Jinja2 3.1.2
jmespath 1.0.1
joblib 1.2.0
jsonpickle 2.2.0
jsonschema 4.17.3
jupyter_client 8.1.0
jupyter_core 5.3.0
jupyter-events 0.6.3
jupyter_server 2.2.1
jupyter_server_terminals 0.4.4
jupyterlab-pygments 0.2.2
jupyterlab-widgets 3.0.7
keras 2.11.0
Keras-Preprocessing 1.1.2
kiwisolver 1.4.4
knack 0.10.1
liac-arff 2.5.0
library-metadata-cooker 0.0.7
lightgbm 3.3.3
lime 0.2.0.1
llvmlite 0.39.1
lxml 4.9.2
Markdown 3.4.1
MarkupSafe 2.1.2
matplotlib 3.6.3
matplotlib-inline 0.1.6
mistune 2.0.5
mlflow-skinny 2.1.1
msal 1.21.0
msal-extensions 1.0.0
msgpack 1.0.5
msrest 0.7.1
msrestazure 0.6.4
multidict 6.0.4
multiprocess 0.70.14
munkres 1.1.4
mypy 0.780
mypy-extensions 0.4.4
nbclient 0.7.3
nbconvert 7.3.0
nbformat 5.8.0
ndg-httpsclient 0.5.1
nest-asyncio 1.5.6
notebookutils 3.3.0-20230926.8
numba 0.56.4
numpy 1.23.5
oauthlib 3.2.2
openpyxl 3.1.0
opt-einsum 3.3.0
packaging 22.0
pandas 1.5.3
pandasql 0.7.3
pandocfilters 1.5.0
paramiko 3.3.1
parso 0.8.3
pathos 0.3.0
pathspec 0.11.1
patsy 0.5.3
pexpect 4.8.0
pickleshare 0.7.5
Pillow 9.4.0
pip 23.0.1
pkginfo 1.9.6
pkgutil_resolve_name 1.3.10
platformdirs 3.2.0
plotly 5.13.0
ply 3.11
pooch 1.7.0
portalocker 2.7.0
pox 0.3.2
ppft 1.7.6.6
prettytable 3.6.0
prometheus-client 0.16.0
prompt-toolkit 3.0.38
protobuf 4.21.12
psutil 5.9.4
ptyprocess 0.7.0
pure-eval 0.2.2
py4j 0.10.9.5
pyarrow 11.0.0
pyasn1 0.4.8
pyasn1-modules 0.2.7
pycosat 0.6.4
pycparser 2.21
Pygments 2.14.0
PyJWT 2.6.0
PyNaCl 1.5.0
pyodbc 4.0.35
pyOpenSSL 23.1.1
pyparsing 3.0.9
pyperclip 1.8.2
PyQt5 5.15.7
PyQt5-sip 12.11.0
pyrsistent 0.19.3
PySocks 1.7.1
pyspark 3.3.1
python-dateutil 2.8.2
python-json-logger 2.0.7
pytz 2022.7.1
pyu2f 0.1.5
PyYAML 6.0
pyzmq 25.0.2
regex 2022.10.31
requests 2.28.2
requests-oauthlib 1.3.1
rfc3339-validator 0.1.4
rfc3986-validator 0.1.1
rsa 4.9
ruamel.yaml 0.17.21
ruamel.yaml.clib 0.2.7
ruamel-yaml-conda 0.15.80
SALib 1.4.7
scikit-learn 1.2.0
scipy 1.10.1
seaborn 0.12.2
SecretStorage 3.3.3
Send2Trash 1.8.0
setuptools 67.6.1
shap 0.41.0
sip 6.7.7
six 1.16.0
slicer 0.0.7
smmap 3.0.5
sniffio 1.3.0
soupsieve 2.3.2.post1
SQLAlchemy 2.0.9
sqlanalyticsconnectorpy 1.0.1
sqlparse 0.4.3
stack-data 0.6.2
statsmodels 0.13.5
synapseml-cognitive 0.11.2.dev1
synapseml-core 0.11.2.dev1
synapseml-deep-learning 0.11.2.dev1
synapseml-internal 0.13.0
synapseml-lightgbm 0.11.2.dev1
synapseml-mlflow 1.0.22.post1
synapseml-opencv 0.11.2.dev1
synapseml-utils 1.0.17
synapseml-vw 0.11.2.dev1
tabulate 0.9.0
tenacity 8.2.2
tensorboard 2.11.2
tensorboard-data-server 0.6.1
tensorboard-plugin-wit 1.8.1
tensorflow 2.11.0
tensorflow-estimator 2.11.0
termcolor 2.2.0
terminado 0.17.1
threadpoolctl 3.1.0
tinycss2 1.2.1
toml 0.10.2
toolz 0.12.0
torch 1.13.1
tornado 6.2
tqdm 4.65.0
traitlets 5.9.0
treeinterpreter 0.2.2
typed-ast 1.4.3
typing_extensions 4.5.0
unicodedata2 15.0.0
urllib3 1.26.14
virtualenv 20.19.0
wcwidth 0.2.6
webencodings 0.5.1
websocket-client 1.5.1
Werkzeug 2.2.3
wheel 0.40.0
widgetsnbextension 4.0.7
wrapt 1.15.0
xgboost 1.7.1
yarl 1.8.2
zipp 3.15.0
zope.event 4.6
zope.interface 6.0
zstandard 0.19.0
```


Can someone help me get this simple PySpark code to work in a Fabric Notebook?

Or tell me what's wrong with it?

Thanks in advance! 🙂


Thank you for the information you provided, and apologies for the delay in responding.

I have reproduced the scenario and am able to translate from English to French.

Please see the screenshot.

For more details, please refer to: OpenAI API from a Microsoft Fabric Notebook

I hope this information helps. Please let us know if you have any further queries.


Thanks for the quick reply! But when I run the same code, I get this error. What could be wrong?
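When the same code works in one notebook and fails in another, one thing worth checking is which endpoint the `openai` module is actually configured for. The sketch below assumes openai 0.28.x (the version installed earlier in this thread), where connection settings live in module-level attributes such as `openai.api_type` and `openai.api_base`; the helper names `describe_openai_config` and `looks_like_azure` are hypothetical.

```python
# Module-level settings that openai 0.28.x falls back to when a call does not
# pass its own api_type / api_base / api_version / organization.
CONFIG_ATTRS = ("api_type", "api_base", "api_version", "organization")


def describe_openai_config(module) -> dict:
    """Return the openai module's global connection settings as a dict.

    getattr with a default keeps this working even if an attribute is missing,
    for example on a different openai major version.
    """
    return {name: getattr(module, name, None) for name in CONFIG_ATTRS}


def looks_like_azure(config: dict) -> bool:
    """Heuristic: the 'azure' and 'azure_ad' api_type values mean an Azure setup."""
    api_type = (config.get("api_type") or "").lower()
    return api_type in ("azure", "azure_ad", "azuread")


if __name__ == "__main__":
    try:
        import openai  # assumption: openai 0.28.x, as installed in this thread
    except ImportError:
        print("openai is not installed")
    else:
        cfg = describe_openai_config(openai)
        print(cfg)
        if looks_like_azure(cfg):
            print("openai is configured for an Azure endpoint, not api.openai.com")
```

If `api_type` or `api_base` shows something other than the public defaults (`open_ai` and `https://api.openai.com/v1`) before your own code has set them, something in the environment may have changed them.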


Try using the command below to install the OpenAI library in Python:

`!pip install -q openai`

instead of

`!pip install openai`

This might help resolve your query. Please let us know if you have any further queries.


Thanks, but I'm sorry to say it didn't help.


Could you please generate a new API key and try running the code in a new notebook? If you are still facing the issue, please let me know.


Hi again. Same problem with the new API key.

This "low-level" call to OpenAI works, so I don't think the key is the problem.
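The low-level call itself was not included in the post. As an illustration only, a direct call to the public OpenAI chat-completions endpoint using just the Python standard library might look like the sketch below; it bypasses the `openai` package and its module-level configuration entirely. The model name `gpt-3.5-turbo`, the `OPENAI_API_KEY` environment variable, and the helper names are assumptions.

```python
import json
import os
import urllib.request

# Public OpenAI endpoint (not an Azure OpenAI resource).
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build, but do not send, a raw chat-completions POST request."""
    body = {
        "model": model,  # assumption: any chat model your key has access to
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")  # assumption: key kept in an env var
    if key:
        reply = send(build_chat_request(key, "Translate 'Good morning' into French."))
        print(reply["choices"][0]["message"]["content"])
```

If a call like this succeeds while the `openai` package call fails with the same key, the difference is more likely in how the package is configured than in the key itself.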


Sorry for the inconvenience you are facing.

Could you please provide the Spark runtime version you are using, along with the installed packages?

This will help me understand the issue better.

Thanks
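A quick way to gather part of this information from inside the notebook is sketched below, using only the standard library. The package list is an assumption chosen for this thread (the `openai` version matters in particular, because the 0.28.x and 1.x clients have different APIs); `installed_version` and `environment_report` are hypothetical helper names.

```python
import platform
from importlib import metadata
from typing import Optional

# Packages most relevant to this thread; this list is an assumption, extend as needed.
PACKAGES = ("openai", "pyspark", "requests", "urllib3")


def installed_version(name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def environment_report(packages=PACKAGES) -> dict:
    """Collect the Python version plus the versions of selected packages."""
    report = {"python": platform.python_version()}
    for name in packages:
        report[name] = installed_version(name)
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key:10} {value or 'not installed'}")
```

In a Fabric or Synapse notebook, `spark.version` on the preconfigured `spark` session also reports the Spark version, and `!pip list` prints the full package list.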


Hi, I am still struggling to get data from OpenAI using Python in a Fabric Notebook.

This extremely simple code:

```
File ~/cluster-env/trident_env/lib/python3.10/site-packages/openai/api_resources/abstract/engine_api_resource.py:85, in EngineAPIResource.__prepare_create_request(cls, api_key, api_base, api_type, api_version, organization, **params)
     83 if typed_api_type in (util.ApiType.AZURE, util.ApiType.AZURE_AD):
     84     if deployment_id is None and engine is None:
---> 85         raise error.InvalidRequestError(
     86             "Must provide an 'engine' or 'deployment_id' parameter to create a %s"
     87             % cls,
     88             "engine",
     89         )
     90 else:
     91     if model is None and engine is None:

APIError: HTTP code 404 from API (Feature is not enabled.)
```
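One possible explanation, not confirmed in this thread, is that the notebook environment pre-sets the `openai` module's global configuration to an Azure-style endpoint: the traceback excerpt points into the `util.ApiType.AZURE` branch of `__prepare_create_request`, even though the goal was the public OpenAI API. Under that assumption, the sketch below passes `api_type`, `api_base`, and `api_key` explicitly on each call (openai 0.28.x accepts these keyword arguments on `ChatCompletion.create`, as the `__prepare_create_request` signature above suggests), so module-level overrides are not used for those settings. The model name, the translation prompt, the `OPENAI_API_KEY` variable, and the helper names are illustrative assumptions.

```python
import os

# The public OpenAI API settings for openai 0.28.x. These match the library's
# own defaults, restated so that any module-level overrides are not used.
PUBLIC_OPENAI_SETTINGS = {
    "api_type": "open_ai",
    "api_base": "https://api.openai.com/v1",
}


def build_chat_kwargs(api_key: str, text: str, target_language: str = "French",
                      model: str = "gpt-3.5-turbo") -> dict:
    """Build keyword arguments for openai.ChatCompletion.create (openai 0.28.x)."""
    return {
        **PUBLIC_OPENAI_SETTINGS,
        "api_key": api_key,
        "model": model,  # assumption: any chat model your key can use
        "messages": [
            {"role": "system",
             "content": f"Translate the user's text into {target_language}."},
            {"role": "user", "content": text},
        ],
    }


def translate(api_key: str, text: str, target_language: str = "French") -> str:
    """Call the public API with explicit per-call settings."""
    import openai  # imported lazily; assumes openai==0.28.x, not the 1.x client

    response = openai.ChatCompletion.create(
        **build_chat_kwargs(api_key, text, target_language)
    )
    return response["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")  # assumption: key kept in an env var
    if key:
        print(translate(key, "Hello, how are you?"))
```

This hypothesis would also be consistent with how the thread ended, where the code worked once Azure OpenAI access was in place. When targeting Azure OpenAI instead, openai 0.28.x expects a `deployment_id` (or `engine`) rather than `model`, which is exactly the check visible in the traceback.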


Hi,

Semantic Kernel is much easier than the direct call you are trying to make. Semantic Kernel skills act as the system prompt, and you can link them to your data.

https://blog.fabric.microsoft.com/en-us/blog/chat-your-data-in-microsoft-fabric-with-semantic-kernel...

There is a thread here about this as well, and I will be publishing an article soon.

Kind Regards,

Dennes


Hi,

This looks very interesting, but as far as I can see, you need access to Azure OpenAI. Is that correct?

We don't have access to Azure OpenAI, so do I have to do it the hard way?


Hi,

You are right, it uses Azure OpenAI. I didn't notice you were using the public OpenAI API, not the Azure one.

In this case, you can easily run this notebook locally in VS Code. You can use tools such as Fiddler to capture the communication and Postman to reproduce it until you locate the communication problem.
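A lighter first step before reaching for Fiddler is to turn on Python's own debug logging, which makes the HTTP layer print the host, path, and status code of each request (for example, whether the call actually went to `api.openai.com` or to some other endpoint). The sketch assumes HTTP traffic goes through `requests`/`urllib3`, as it does in openai 0.28.x, and that the package logs under the `openai` logger name; `enable_http_debug_logging` is a hypothetical helper.

```python
import logging

# Assumed logger names: "urllib3" (used by requests, which openai 0.28.x uses
# for HTTP) and "openai" (the package's own logger in 0.28.x).
DEBUG_LOGGERS = ("urllib3", "openai")


def enable_http_debug_logging(names=DEBUG_LOGGERS, stream=None) -> logging.Handler:
    """Send DEBUG records from the given loggers to `stream` (stderr if None).

    Child loggers such as "urllib3.connectionpool" inherit the DEBUG level
    and propagate their records to the handler attached here.
    """
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(name)s %(levelname)s %(message)s"))
    for name in names:
        logger = logging.getLogger(name)
        logger.setLevel(logging.DEBUG)
        logger.addHandler(handler)
    return handler
```

Calling `enable_http_debug_logging()` once at the top of the notebook and then re-running the failing cell should show lines such as urllib3's `Starting new HTTPS connection` message naming the host. The handler is returned so it can be detached afterwards with `logging.getLogger(name).removeHandler(handler)`.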

Kind Regards,

Dennes


Following up to see if the above suggestion was helpful. If you have any further queries, do let us know.
