- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Organising ETL Best Practice

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Organising ETL Best Practice

Hi all

I'm building a new data model from 3 dataflows. The dataflows ingest Snowflake data and all pull in the same table structure from 3 different entities.

I removed all the unnecessary tables for my purposes from the dataflow inputs on load, and my first step was to create a single table for each of the source tables by appending all 3 of the same table from each entity for each table. I de-select "Enable Load" on each of the original source tables so they won't show up for me end users.

From here is where I'm not sure if I'm doing it "right". My initial thought was to do all the transformative steps on these appended tables (remove columns / add custom columns / replace values / additional merge and append steps / etc etc) and present them to the users to build reports from them. However, having got a decent chunk of the way through building like this I've realised that if anyone has to go back in and fix / change anything in this model it's going to be a lot for them to unpack and figure out to make a change without breaking anything.

Is there a cleaner way of doing this that makes the data model easier to understand for someone coming in cold? Should I leave my raw appended tables untouched, and then either reference or duplicate them into the various other tables I want to build for the users? For example, one of my currently built transformations has 40-50 different basic steps (remove columns/replace values, etc), then it merges with another table (also with a bunch of basic transforms already done) to pull a value from that, then merges with yet another table (with more basic steps) to pull a value from that, then is duplicated and another raft of change values is done.

I get the feeling I'm overcomplicating it, but I come from a low code/no code background not a data science one so I don't know what I don't know about what I might be doing wrong...

PS. For the record I don't have access to the data structure in the data source, that's a third party vendor that uses the same product across hundreds of customers and has flat out refused to change the source as it will impact other people and their processes (understandable I think), so everything I want to do has to be done through pwoer query / M.

Any general advice, tips or tricks, or links to how to make the ETL process easily documentable / understandable are very much appreciated!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

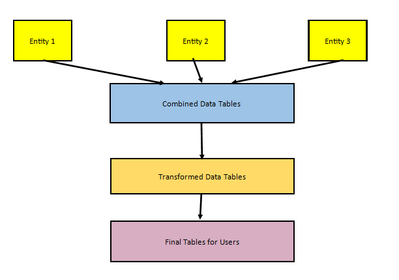

To try and explain a little more clearly, here's a picture:

Right now I'm confident in the Yellow to Blue step. But it feels like I'm combining the Blue to Orange and the Orange to Pink steps in a way that's going to cause trouble later on by using the blue tables throughout the process. Should I leave the blue tables untouched (and just deselect "Enable Load" on them), then duplicate out new tables for the orange steps with the pink step just being their final form, or is it OK to just build it all into the tables created in the blue step and trust that future me (or future colleague) will be able to unpick it all?

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.