Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Synapse forums

- Forums

- Get Help with Synapse

- General Discussion

- Re: Spark Job Definition: Spark_Ambiguous_NonJvmUs...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

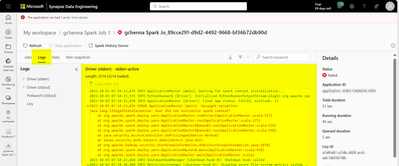

Spark Job Definition: Spark_Ambiguous_NonJvmUserApp_FailedContainerLaunch

Hi,

I created a notebook that runs successfully against my lakehouse.

After that, I created a Spark job definition, downloaded the notebook in .py format, uploaded it to the job definition, and tested it. Of course, I linked the same lakehouse to the job definition.

I'm getting the error Spark_Ambiguous_NonJvmUserApp_FailedContainerLaunch.

I couldn't find any reference to this error anywhere. What does it mean? It seems like I missed a step related to authentication, but I couldn't identify which one.

Thank you in advance!

Kind Regards,

Dennes

Hi,

The initial message made me think the execution had not started, but after digging into the logs, I discovered that it did start and then failed. The catch is that in a notebook, "spark" is already available as the current Spark session, but in a Spark job, it is not.

As a result, the Spark job code needs an extra step, in this case a statement that creates the session and assigns it to a variable called "spark":

Hi @DennesTorres - Thanks for using Fabric Community,

As I understand it, you are trying to run/schedule notebook code using a Spark Job Definition in MS Fabric and are facing an error.

You might be facing this error Spark_Ambiguous_NonJvmUserApp_FailedContainerLaunch because your Spark job definition is missing a Spark session.

When you run code in a notebook, Fabric starts a Spark session automatically and exposes it as `spark`. However, when you run the same code in a Spark job definition, you need to create the Spark session explicitly.

In addition, avoid using the display() function in your Spark job definition, because it is not supported there either.
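One way to keep a single .py file usable in both places is to fall back from display() to `DataFrame.show()`, which prints rows as plain text. This is a sketch that assumes `display` is simply undefined in the job's Python namespace (so looking it up raises `NameError`); `show_df` is a hypothetical helper name.

```python
def show_df(df, n=20):
    """Show a DataFrame in a notebook or in a Spark job definition.

    Assumption: in a Spark job definition the notebook-only display()
    helper is not defined, so looking it up raises NameError.
    """
    try:
        notebook_display = display  # noqa: F821 (defined only in notebooks)
    except NameError:
        # DataFrame.show(n) prints the first n rows as text to stdout,
        # which typically ends up in the driver's stdout log.
        df.show(n)
    else:
        notebook_display(df)
```

With this helper, `show_df(df)` would render the rich table in a notebook and print the first 20 rows in a job run.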

To fix the error, you need to add the following code to the beginning of your Spark job definition:

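```python
from pyspark.sql import SparkSession

# Create the Spark session (or reuse the active one) and bind it to `spark`,
# the name notebook code expects.
spark = SparkSession.builder.getOrCreate()
```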

Once you have added this code, your Spark job definition should run successfully.

You can also find more about Spark Job Definition issues here:

Hope this helps. Please let us know if you have further queries.
