Earn a 50% discount on the DP-600 certification exam by completing the Fabric 30 Days to Learn It challenge.

- Data Factory forums

- Forums

- Get Help with Data Factory

- Dataflows

- Gen2 Dataflow to Warehouse - really slow performan...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Gen2 Dataflow to Warehouse - really slow performance

Hi, I'm hitting an issue with loading data from on-prem SQL server to a Fabric Warehouse using dataflows gen2. I'm only moving 34k rows (about 30 columns), and it's taking almost 13 minutes to do this (see image at bottom). Staging is disabled for this (these jobs literally just copy the data as is, with no transformations).

- If I enable staging, I can see the staging objects load in < 30 seconds, and then it takes 12+ minutes to write from the staging warehouse to the actual real warehouse.

- If I write the records to a Lakehouse instead of a Warehouse, the whole thing always completes in 30 - 45 seconds.

Is this type of performance typical for loading a Warehouse??? Can't believe it is, but the jobs are too simple to have anything messed up I think. Any ideas?

Thanks,

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Any update on this issue? Im trying to load data with Knime ETL tool to the Fabric warehouse but it is incredebly slow..

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I basically gave up on dataflows gen2 because there was a speed requirement it couldn't satisfy. However doing the ETL in Pyspark and storing the data to the lake works really good and is fast enough. But you need to include 1-2minutes for the spark pool to find you a session. Besides you have an extreme library of python packages allowing you to basically do anything. BTW. I'm not a true Python coder, but Bing Copilot and chatgpt were enough to get me started.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What kind of on-prem datasource are thinking of? I haven't tried to access any onprem sql databases or other sources. I just used API calls to several endpoints. But maybe you could try using odbc/jdbc from spark if you want to connect to sql?

spark = SparkSession.builder \

.appName("YourAppName") \

.config("spark.jars", "/path/to/your/sqljdbc42.jar") \ # Path to your SQL Server JDBC driver

.getOrCreate()

df = spark.read.jdbc(url=jdbc_url, table="your_table", properties=connection_properties)

Or:

from pyspark import SparkContext, SparkConf, SQLContext

appName = "PySpark SQL Server Example - via JDBC"

master = "local"

conf = SparkConf() \

.setAppName(appName) \

.setMaster(master) \

.set("spark.driver.extraClassPath", "sqljdbc_7.2/enu/mssql-jdbc-7.2.1.jre8.jar")

sc = SparkContext(conf=conf)

sqlContext = SQLContext(sc)

spark = sqlContext.sparkSession

database = "test"

table = "dbo.Employees"

user = "username"

password = "password"

jdbcDF = spark.read.format("jdbc") \

.option("url", f"jdbc:sqlserver://localhost:1433;databaseName={database}") \

.option("dbtable", table) \

.option("user", user) \

.option("password", password) \

.option("driver", "com.microsoft.sqlserver.jdbc.SQLServerDriver") \

.load()

jdbcDF.show()

Or:

from pyspark import SparkContext, SparkConf, SQLContext

import pyodbc

import pandas as pd

appName = "PySpark SQL Server Example - via ODBC"

master = "local"

conf = SparkConf() \

.setAppName(appName) \

.setMaster(master)

sc = SparkContext(conf=conf)

sqlContext = SQLContext(sc)

spark = sqlContext.sparkSession

database = "test"

table = "dbo.Employees"

user = "username"

password = "password"

conn = pyodbc.connect(f'DRIVER={{ODBC Driver 13 for SQL Server}};SERVER=localhost,1433;DATABASE={database};UID={user};PWD={password}')

query = f"SELECT * FROM {table}"

pdf = pd.read_sql(query, conn)

sparkDF = spark.createDataFrame(pdf)

sparkDF.show()

Maybe you need to install the pyodbc: !pip install pyodbc

Please let me know if it works.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm experiencing the same performance issues with Dataflow Gen 2 using an On-prem data gateway:

Pulling the data from the underlying SQL database is quick. I can see that in the refresh details (about 10 tables each taking less than 1 min to pull the data).

However, the second Dataflow Gen 2 step where it's writing the data (WriteToDataDestination) to the warehouse takes ages and eventually times out. It succeeds in writing about 3-4 tables but then fails.

"104100 Couldn't refresh the entity because of an issue with the mashup document MashupException.Error: Microsoft SQL: A transport-level error has occurred when receiving results from the server. (provider: TCP Provider, error: 0 - An existing connection was forcibly closed by the remote host.)"

Is there a way to see what jobs/requests are running on the warehouse? It seems that running too many requests (e.g., 1 Dataflow Gen 2 with many tables or running multiple Dataflows Gen 2 at the same time against the same Warehouse) results in locking up the Warehouse.

Is there even a way to stop all current jobs?

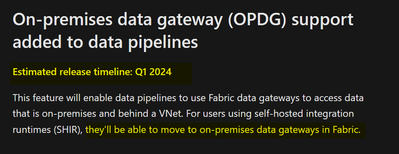

I'm inclined to look for other solutions, but notebooks and fast copy are still not supported for on-premise sources as of Jan 2024. Many new features will be coming for Dataflow Gen 2 in Q1 of 2024, though. As a next step, I will be trying Dataflow Gen 2 to store data into the lakehouse to get a properly robust ETL process set up and let you know if that resolved it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

UPDATE (to the above comment): Writing & storing data from on-prem SQL server directly to Lakehouse with Staging turned off (using a data gateway) has been able to deliver on my requirements and limitations of warehouse.

Even though I don't know any Python or Scala, I was able to do all the required tranformations in notebooks using Spark SQL.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm having the exact same expirience. But I fetch my data from an api endpoint. The timeout is set to a few seconds. If the endpoint fails. Well, then the next dataflow is executed. My pipeline consists of 21 dataflows. Each dataflow fetches minimal amounts of data from an endpoint, we are talking 2-3kb of json each run. There is just something utterly wrong, if the monitor shows some dataflows run 1minute. But some run 4hours... and they seem to eat all my F2 capacity. As a consequence every other dataflow executed after that one flow that executes 4h, fails.... I mean, Dataflow Gen2 are really powerful and I see them as the main selling point for companies looking for great visual ETL. So, Microsoft, please put some serious attention to these dataflow issues.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Same thing on our end. I can't even get tables with a few thousand rows to write to the warehouse. I've had a support ticket open for 2 months now and they have been stringing me along.

I wish MSFT would acknowledge these issues. I have high hopes for DFG2 and Fabric as a whole, but it seems like things just don't work now.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

After the christmas/new year holidays I have plan to try something else. Currently the data is stored to a Datawarehouse for practical "SQL" reasons. The json is basically structured as a table row and easy to insert into a table. But from what I'm hearing and reading the Lakehouse seems to work better with Gen2. It's a minor modification to get the dataflows write the fetched endpoint data to a Lakehouse instead of the current warehouse. Also, I changed the scheduling for the main pipeline to 1.5hours from 50minutes. I counted approx 2-3minutes seems to go on "startup" of the dataflow, the rest is the execution of the dataflow, approx 2minutes. So any dataflow should execute approx within 4-5min. Now my pipeline with it's 21 dataflows is stable. But 90minutes to get a few kb of data is way too slow.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apologise for the delay in response and for the inconvenience that you are facing here.

Just as a work around, instead of loading on prem data to Dataflow Gen2 and then to warehouse (On-Prem -> DFg2 -> Warehouse) can you please try to test by loading the data from on-prem to Dataflow Gen2 and then to Lakehouse.(On-Prem -> DFg2 -> Lakehouse) (files or tables, would test both)

Then create a Data Pipeline to move data from Lakehouse to Warehouse.

Just like the medallion architecture we see often, we want to do a quick load of the source into a Raw layer, then work our way to the Warehouse which would be Silver/Gold.

The issue which you are facing while loading the data into warehouse is just temporary. This won't be a thing once Data Pipelines can use On Prem Data Gateway which is in development.

Hope this helps. Please let me know if you have any further questions.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi @v-cboorla-msft , I'm actually in the process of getting this ready to test right now. I was able to successfully load about 1 billion rows of transactions into a lakehouse using Gen2 dataflows (running multiple dataflows in parallel across several gateway clusters). Now I'll try copying those across to a DWH using the copy utility. I'll update this post as soon as I have results to report (this is a side project so might be a couple of days).

One question / idea - if really feels to my like the underlying Dataflow Gen2 "engine" should be able to tap into the spark compute just like notebooks do. Similar to how it "pushes down" SQL queries to a database. Wish it could "push down" some of this type of work to spark - seems like it would be much faster.

Thanks!

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the feedback.

If you want to enable spark compute please upvote this idea: Microsoft Idea

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all and especially @miguel , just a quick update. First, having a really hard time getting support to actually pay any attention to this - even though I submitted a ticket over a month ago. Bit frustrated as I don't think they've actually done anything at all on the ticket.

Having said that, I think I've discovered at least one of the causes of really poor performance. I was trying yesterday to load about 11 million rows into a Warehouse. The load job takes 5 minutes writing to a Lakehouse, but timed out after hours on the warehouse.

Looking in to the new "query insights" schema available in the Warehouse, I could see the records were being written in batches of only 1,000 records each. And each commit seems to have been taking 3 or 4 seconds. With 11 million records, that would be 11,000 commits * 4 seconds each = 12+ hours of time.

If this is indeed the issue - MS you've GOT to change the commit size. Maybe make it 1 million, or 10 million, but not 1000 rows.

p.s. still not sure this is "all" of the issue - on my original issue above it was only writing 34,000 records which would have been 34 commits at 4 seconds each = several minutes of excess time. I was seeing way more than that. I'll try to go back and reproduce this tomorrow, looking at the query logs to see what's going on in the database.

p.p.s. Microsoft if it helps, the ticket I opened was 2310180040012820

Thanks,

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am in the same boat as you. I think this all comes down to the write speed to the warehouse. Things just seem to lock up and time out. I can't even get 100k rows to write...

I watched the query against our on-prem sql server and noticed that it had a ASYNC_NETWORK_IO wait the whole time. According to MSFT docs, these are due to the client application (the warehouse) not accepting results fast enough.

I also noticed that the warehouse becomes unresponsive while the dataflow is running.

I can't imagine we are the only ones who are experiencing these issues. There must be some solution or acknoledgment if Fabric is now GA. I'm trying to sell Fabric to my org, but it's a bit hard when we can't get our data into it.

Here's my support ticket if it helps: 2311060040014961

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Same boat here.

Cannot get the dataflows to write into the warehouse for the life of me.

Support ticket is this: 2311150050004676

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm seeing the same thing as well. I'd really love to start using warehouses but can't seem to get anything but really slow performance or errors.

I have a DFG2 writing 2 tables 300k and 400k records each.

With no destination: 50 seconds

With warehouse destination, staging enabled: 50 minutes

With warehouse destination, staging disabled, times out after 3.5 hour and locks up the warehouse from being accessed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Scott,

It's difficult to tell what could be the reason for this performance. It could be the gateway or the computer running the gateway. The data source having some bottlenecks or perhaps something on the Fabric service end (perhaps your capacity or something different).

The best way to troubleshoot this would be to raise a support ticket and have an engineer take a closer look at this performance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey Miguel,

I'm experiencing the same issues as Scott - expect that 9/10 times our dataflows are failing. Support has not been able to help. The only suggestion they had so far was to downgrade to a gateway from August..

We are looking to purchase a fabric capacity, but most of our data is on-prem. We've been trying for months to get things up and running with DFG2, but are running into error after error.

Any thoughts on what our next steps should be?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Will do - thanks @miguel . Just so odd that this takes seconds (which is what I expect) in a Lakehouse but then drags out so long in a warehouse. I'll get an easily reproducible case ready, will update this thread if I'm able to narrow down the issue with support.

Thanks!

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm experiencing the exact same thing as you. My DFG2 takes a long time to write to the warehouse, but only takes a fraction of the time to write to the lakehouse. Hopefully support has a solution for you, but I modified my process to use a Pipeline instead:

- Refresh the dataflow (destination: lakehouse)

- SQL Script to delete the data from the warehouse (beneficial for incremental data loads)

- Copy data from the lakehouse to the warehouse.

This process is much quicker than when I was trying to write directly to the warehouse.