- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Power BI Spanish forums

- Translated Spanish Desktop

- Buscar si se producen varios valores antes que otr...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Buscar si se producen varios valores antes que otras columnas

¡Hola de nuevo!

Tengo este conjunto de datos con una serie de intervenciones y una fecha para cada intervención:

| INDX | INT 1 | FECHA INT 1 | INT 2 | FECHA INT 2 | INT 3 | FECHA INT 3 | INT 4 | FECHA INT 4 | INT 5 | FECHA INT 5 | INT 6 | FECHA INT 6 |

| 1 | R | 06/01/2022 | T | 08/04/2022 | U | 04/08/2022 | O | 01/09/2022 | P | 06/04/2022 | Xx | 05/01/2022 |

| 2 | Q | 17/01/2022 | T | 06/01/2022 | B | 01/01/2022 | ||||||

| 3 | Ll | 05/06/2022 | Xx | 02/01/2022 | Jj | 04/01/2022 | Yy | 02/01/2022 | ||||

| 4 | Xx | 04/01/2022 | U S | 04/01/2022 | Q | 05/01/2022 | Tt | 10/01/2022 | ||||

| 5 | Hh | 04/01/2022 | Ss | 09/04/2022 | AA | 17/01/2022 | Vv | 05/09/2022 | ||||

| 6 | Pp | 09/01/2022 | Ll | 06/01/2022 | Yy | 03/01/2022 | Hh | 04/05/2022 | Xx | 04/01/2022 | Xx | 07/01/2022 |

| 7 | Ss | 03/01/2022 | Oo | 04/01/2022 | Xx | 05/01/2022 | ||||||

| 8 | Yy | 02/01/2022 | Xx | 01/01/2022 | AA | 03/01/2022 | ||||||

| 9 | Yy | 05/01/2022 | Jj | 01/01/2022 | Yy | 06/01/2022 | Ll | 04/09/2022 |

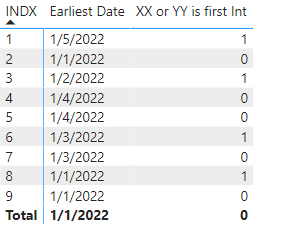

Quiero obtener 1 si XX O YY ocurren antes de cualquier otra intervención. Así, por ejemplo, en la primera fila devolveré un 1 y en la fila 7 no devolverá nada.

Si XX y YY ocurren juntos antes que cualquier otra cosa (como en la fila 3) está bien obtener un 1; si XX o YY ocurren el primer día pero togeter con algo (como en la fila 4) de lo contrario me gustaría no obtener nada.

¡Gracias de antemano por la ayuda!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Esos datos de origen están en bastante mal estado. El primer paso es despivoarlo en algo utilizable

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZLLCoMwEEV/pWQt5GESzdIuRERQSxVF/P/faKbGIQ8tXQTiwZzJ3Mm2EU4y8rKLaco4FUwI+/EGUFImTzABkNQyB3oAnDJzgsE58MiyAFFo3bONAB/t4kVczK/+dG4Ej8sFwtzuus4V0mFp4Rna1jWAZF3jf1K7RJd/cppiMoad2pagJ85+ypXdNU2sqmsgxsuxquLA5tnVM/d2DSMZnAtPHlHpJIbcI3gnFcbp3/IgRTDbAm/v2/r++qz6a7zl5aAOg/9AvhkFde+dBp0qeSA8SUYn6cn73PcP", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [INDX = _t, #"INT 1" = _t, #"DATE INT 1" = _t, #"INT 2" = _t, #"DATE INT 2" = _t, #"INT 3" = _t, #"DATE INT 3" = _t, #"INT 4" = _t, #"DATE INT 4" = _t, #"INT 5" = _t, #"DATE INT 5" = _t, #"INT 6" = _t, #"DATE INT 6" = _t]),

CT = (List.Count(Table.ColumnNames(Source))-1)/2,

#"Merged Columns1" = List.Accumulate({1..CT},Source,(state,current)=>Table.CombineColumns(state,{"INT " & Text.From(current), "DATE INT " & Text.From(current)},Combiner.CombineTextByDelimiter(",", QuoteStyle.None),"Merged " & Text.From(current))),

#"Unpivoted Other Columns" = Table.UnpivotOtherColumns(#"Merged Columns1", {"INDX"}, "Attribute", "Value"),

#"Filtered Rows" = Table.SelectRows(#"Unpivoted Other Columns", each ([Value] <> " , ")),

#"Split Column by Delimiter" = Table.SplitColumn(#"Filtered Rows", "Value", Splitter.SplitTextByDelimiter(",", QuoteStyle.Csv), {"INT", "Date"}),

#"Changed Type with Locale" = Table.TransformColumnTypes(#"Split Column by Delimiter", {{"Date", type date}}, "en-GB"),

#"Changed Type" = Table.TransformColumnTypes(#"Changed Type with Locale",{{"INDX", Int64.Type}}),

#"Removed Other Columns" = Table.SelectColumns(#"Changed Type",{"INDX", "INT", "Date"})

in

#"Removed Other Columns"Después de eso, puede crear una medida que implemente la lógica deseada.

XX or YY is first Int =

var a = min('Table'[Date])

var i = CALCULATE(min('Table'[INT]),'Table'[Date]=a)

return if (i in {"XX","YY"},1,0)

ver adjunto.

Gracias por el desafío: la parte que no se dividía era complicada, ya que necesitaba pares de columnas.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 1 | |

| 1 | |

| 1 | |

| 1 | |

| 1 |

| User | Count |

|---|---|

| 2 | |

| 2 | |

| 1 | |

| 1 | |

| 1 |