gateway client has exceeded the limit of 10 GB... follow up

Hello All,

I posted a question on the forums in January, found here: https://community.powerbi.com/t5/Desktop/The-amount-of-uncompressed-data-on-the-gateway-client-has/m...

My problem was that I would get the error "gateway client has exceeded the limit of 10 GB..." whenever I tried to refresh. The solution provided in the post above was to use DAX Studio to determine which fields were the largest and remove them if they were not needed. This worked for a while, but I soon ran out of fields to remove and I am still getting the error.

I am not sure this was the problem in the first place, though. The total size reported when I ran the query in DAX Studio was 25,000 KB, which converts to 0.025 GB. That is WAY below the 10 GB limit.
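For anyone checking the arithmetic, here is a minimal Python sketch of the conversion. It assumes decimal units (1 GB = 1,000,000 KB), which is what the 0.025 GB figure implies; the variable names are just for illustration. Keep in mind that the refresh limit is described as applying to uncompressed data, so the size reported by DAX Studio and the 10 GB limit may not be directly comparable.

```python
KB_PER_GB = 1_000_000  # assumption: decimal units, 1 GB = 1,000,000 KB


def kb_to_gb(kilobytes: float) -> float:
    """Convert kilobytes to gigabytes using decimal units."""
    return kilobytes / KB_PER_GB


reported_kb = 25_000   # total size reported by DAX Studio in the post
refresh_limit_gb = 10  # limit quoted in the error message

reported_gb = kb_to_gb(reported_kb)
share_of_limit = reported_gb / refresh_limit_gb

print(f"{reported_gb} GB, {share_of_limit:.2%} of the limit")
```

On these numbers the reported size is about a quarter of one percent of the limit, which suggests that the DAX Studio figure and the figure the gateway is counting are measuring different things.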

A member of our IT team found that calculated columns can be inefficient. I had 8 calculated columns in the workbook and cut this number down to 4, but I still have not been able to refresh the data source without getting the "gateway client has exceeded the limit of 10 GB..." error.

I am looking for a way to resolve this for good. Is there any way that I can resolve this for the long term?

Hi @tstackhouse ,

The maximum size for datasets imported into the Power BI service is 1 GB. These datasets are heavily compressed to ensure high performance.

In addition, in shared capacity, the service places a limit on the amount of uncompressed data that is processed during refresh to 10 GB. This limit accounts for the compression, and therefore is much higher than 1 GB.

Datasets in Power BI Premium are not subject to this limit.

If refresh in the Power BI service fails for this reason, please reduce the amount of data being imported to Power BI and try again.

The exact limitation is 10 GB of uncompressed data per table.

If you're hitting this issue, there are good options to optimize and avoid it. In particular, reduce the use of highly constant, long string values and use a normalized key instead. Removing a column that is not in use also helps.

Solution:

1. Use Power BI Premium

2. Reduce the amount of data being imported to Power BI.

3. Reduce the use of highly constant, long string values and use a normalized key instead. Removing a column that is not in use also helps.

4. Use DirectQuery or Live Connection mode. (Step Beyond the 10GB Limitation of Power BI)

5. Use incremental refresh. (Pro licenses can now use incremental refresh as well.)
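To illustrate option 3, here is a rough Python sketch of what "use a normalized key" means. It is not Power BI code, and the sample descriptions, the function names, and the 8-bytes-per-key figure are assumptions made for the example. The idea is to store each long string once in a lookup table and keep only a small integer key in the large table.

```python
def normalize_column(values):
    """Replace each string with an integer key and build a lookup table.

    Returns (keys, lookup), where lookup[key] gives back the original string.
    """
    key_of = {}
    keys = []
    for value in values:
        if value not in key_of:
            key_of[value] = len(key_of)  # next unused integer key
        keys.append(key_of[value])
    lookup = list(key_of)  # dicts keep insertion order, so index == key
    return keys, lookup


def raw_text_bytes(values):
    """Rough uncompressed size: UTF-8 bytes of every string in the column."""
    return sum(len(v.encode("utf-8")) for v in values)


# Hypothetical fact-table column: a few long strings repeated many times.
descriptions = [
    "Customer requested expedited shipping to the regional warehouse",
    "Order placed through the partner portal with standard terms",
] * 50_000

keys, lookup = normalize_column(descriptions)

before = raw_text_bytes(descriptions)
# Assumption: 8 bytes per integer key, plus the lookup strings stored once.
after = len(keys) * 8 + raw_text_bytes(lookup)

print(f"before: {before:,} bytes, after: {after:,} bytes")
```

In a real model the equivalent step would probably be done at the source or in Power Query, with the lookup table loaded as a small dimension table related to the fact table by the key.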

Best regards,

Lionel Chen

If this post helps, please consider accepting it as the solution to help other members find it more quickly.

Do you perhaps have a very large date table? These can blow up a data model through the automatic hierarchy creation, especially if you have lots of unique values.

You could check the Issues forum here:

https://community.powerbi.com/t5/Issues/idb-p/Issues

And if it is not there, then you could post it.

If you have a Pro account, you could try opening a support ticket, which is free for Pro accounts. Go to https://support.powerbi.com, scroll down, and click "CREATE SUPPORT TICKET".

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

Thank you for the response @Greg_Deckler !

I do have a table that I would consider to be "fairly large" but I do not know exactly how big or how to find how big it is. It has been mostly trial and error up to this point but I am looking for something long term.

Is there a way to find out how big the table is?

Can you give me more information on the "automatic hierarchy creation" in the data model? I am not familiar with this. I do have hierarchies built in some visuals, if that is what you are referring to.

Thanks Again

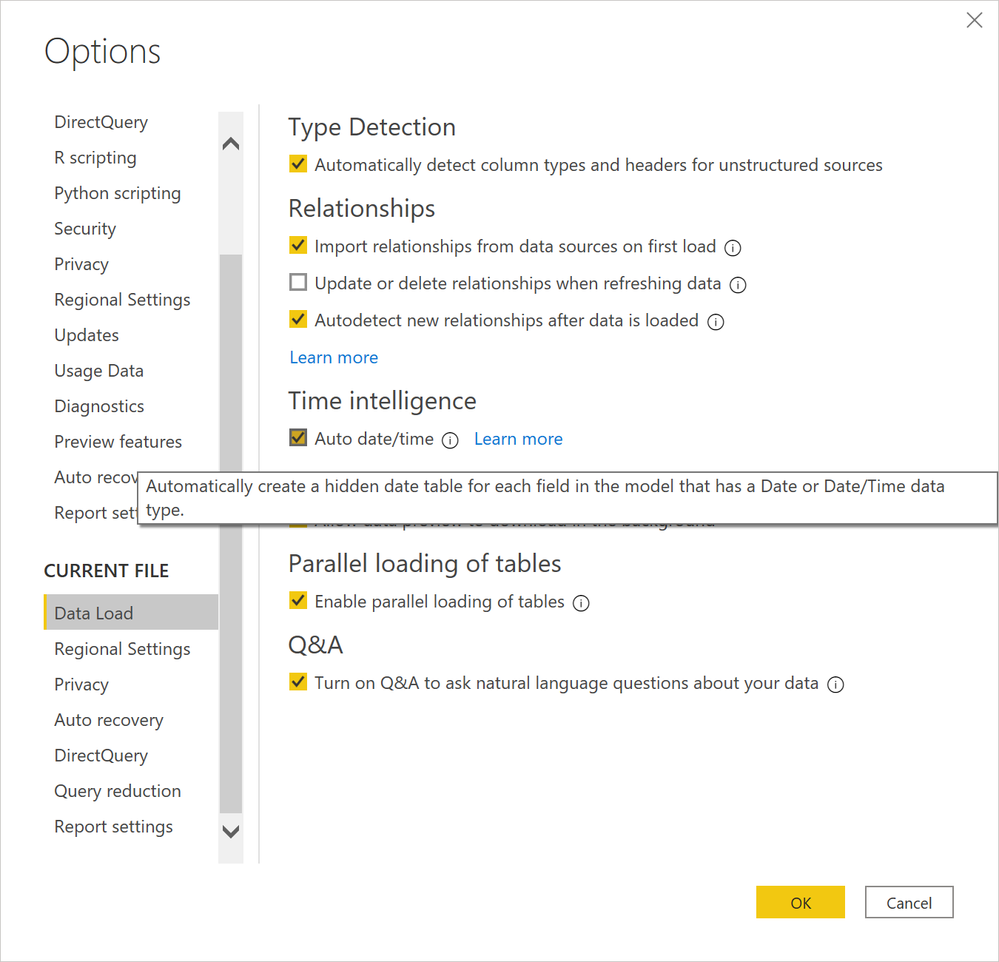

This is what I am talking about: File | Options and settings | Options (see the image above). I've run into this with customers whose date tables ran from roughly the beginning of time to 300 years in the future, which blew the data model up by something like 700 MB. I also ran into this with my book, where it pushed one of the PBIX files past GitHub's allowed file size, so I had to turn the option off and note it in the book chapter.
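To get a feel for why a wide date range hurts, here is a small Python sketch. It assumes the setting in question is the Auto date/time option, and that the hidden date table built for a date column covers full calendar years from the earliest to the latest date in that column. The function name and the date ranges are made up for the example.

```python
from datetime import date


def auto_date_table_rows(min_date: date, max_date: date) -> int:
    """Rows in a calendar covering full years from min_date's year to max_date's year."""
    first_day = date(min_date.year, 1, 1)
    last_day = date(max_date.year, 12, 31)
    return (last_day - first_day).days + 1


# Hypothetical date column spanning a few recent years.
typical = auto_date_table_rows(date(2019, 3, 15), date(2024, 4, 30))

# Hypothetical date column containing a placeholder date far in the future.
extreme = auto_date_table_rows(date(1900, 1, 1), date(2324, 12, 31))

print(f"typical: {typical:,} rows, extreme: {extreme:,} rows")
```

If a hidden table like this is created for every date column in the model, the row counts above are multiplied by the number of date columns, so a single stray placeholder date can add a lot of rows that nobody asked for.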
