What is the Dataset refresh Limit of Power BI Premium Gen2 P3?

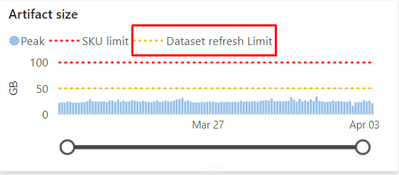

The refresh failed even though it was only refreshing one table via the API. The table is about 6 GB in memory, as measured with DAX.

The capacity is a P3, so there should be 100 GB available for refresh. Even if a refresh needs 10 times the data size, that should be enough, so why does it fail with a memory limit error from time to time?
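The back-of-the-envelope reasoning here can be written out as a quick check. This is only a sketch of the arithmetic in the post: the 6 GB table size, the 100 GB refresh budget, and the 10x overhead factor are the figures quoted above, not measured or documented values.

```python
# Rough headroom check using the figures quoted in the post (all assumptions).
GB = 1024 ** 3

table_size = 6 * GB          # in-memory size of the table, as measured with DAX
refresh_budget = 100 * GB    # memory the poster expects a P3 to allow for refresh
overhead_factor = 10         # pessimistic multiplier assumed in the post

peak_estimate = table_size * overhead_factor
headroom = refresh_budget - peak_estimate

print(f"estimated peak: {peak_estimate // GB} GB")   # estimated peak: 60 GB
print(f"headroom:       {headroom // GB} GB")        # headroom:       40 GB
```

Note that this only compares the model-side estimate with the capacity budget. It says nothing about per-process limits in the Power Query (mashup) engine.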

I noticed there is a dataset refresh limit in the utilization app. What is it, and how can I change it?

Thanks,

Simon

Hi @simoncui ,

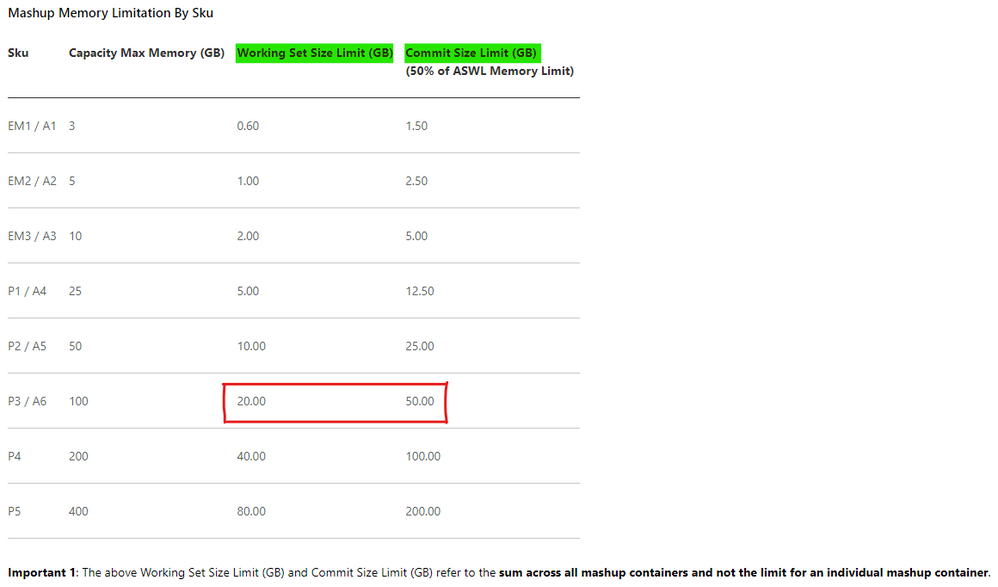

Working set is the physical memory (RAM) used by the mashup processes, while commit size is the amount of space reserved in the paging file for the process. In modern operating systems such as Windows, processes can go beyond the limits of physical memory through paging, where memory referenced by the process is temporarily held on disk instead of in physical memory. The commit size limit governs how much of a process's virtual memory can be backed by the paging file. As a result, the mashup process can run into out-of-memory errors (typically indicated by the 0x0000DEAD error code) if the commit size limit is exceeded, even when there is significant unused physical memory on the capacity.

You can use Kusto to check if these refreshes exceed the commit size limit for that time period.

If the dataset fails to refresh regardless of when it runs (that is, even when no other dataset refreshes are taking up resources), please try upgrading the capacity or optimizing the model.

If the problem is still not resolved, please provide the detailed error information or describe the result you expect. Looking forward to your reply.

Best Regards,

Winniz

If this post helps, please consider accepting it as the solution to help other members find it more quickly.

Hi @simoncui ,

Unfortunately, this is an internal Microsoft document that is not available to the public. Also, since I don't have access to Kusto, I can't help you determine whether your dataset exceeds the commit size limit.

If you still have not resolved this issue, please submit a support ticket and the team will contact you.

How to create a support ticket in Power BI

Best Regards,

Winniz

Hi @GilbertQ ,

It was refreshing a single table's partitions in parallel. Do you know what the memory limit is for such an operation, and where I can check usage details or change the limit?
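For context, a partition-level refresh like this might be requested roughly as follows. This is a minimal sketch that assumes the enhanced refresh REST API is the API in question (the thread does not say which one was used). The group ID, dataset ID, table name, and partition names are hypothetical placeholders, and the code only builds the request without sending it.

```python
import json

def build_refresh_request(group_id, dataset_id, table, partitions, max_parallelism=2):
    """Build the URL and JSON body for an enhanced refresh of selected partitions."""
    url = (
        "https://api.powerbi.com/v1.0/myorg/"
        f"groups/{group_id}/datasets/{dataset_id}/refreshes"
    )
    body = {
        "type": "full",
        "commitMode": "transactional",
        # Upper bound on how many objects are processed at the same time.
        "maxParallelism": max_parallelism,
        "retryCount": 0,
        "objects": [{"table": table, "partition": p} for p in partitions],
    }
    return url, body

# Hypothetical identifiers, for illustration only.
url, body = build_refresh_request(
    "<group-id>", "<dataset-id>", "Sales", ["Sales2021", "Sales2022"],
    max_parallelism=1,
)
print(url)
print(json.dumps(body, indent=2))
```

Lowering `maxParallelism` limits how many partitions are processed concurrently. Whether that keeps a given refresh under the memory limit is an assumption that would need to be tested.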

Thanks,

Simon

Hi @simoncui

Have a look at these settings, which you might need to tweak:

How to configure workloads in Power BI Premium - Power BI | Microsoft Docs

I would also suggest trying incremental refresh, which could solve this issue.

The workload settings don't help.

Yes, incremental refresh is working, but we sometimes need to do a full refresh.

Thanks,

Simon

Hi @simoncui

Judging from your error, this has nothing to do with the actual dataset memory; the Power Query refresh limit is being hit while the data is loading. It looks like you are using Redshift, so make sure to use the batching process for Redshift rather than streaming the entire dataset at once.

If you stream it, it will try to load the entire query result at once, which could be VERY big.
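To illustrate the general idea of batching (not the actual behavior of the Redshift connector), here is a small sketch in which an in-memory SQLite table stands in for the source. The table name, row count, and batch size are made up for the example.

```python
import sqlite3

def iter_batches(cursor, batch_size):
    """Yield lists of at most batch_size rows until the cursor is exhausted."""
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        yield rows

# Stand-in source: an in-memory SQLite table with 10,000 rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [(i, i * 1.5) for i in range(10_000)],
)

cur = conn.execute("SELECT id, amount FROM sales")
total_rows = 0
largest_batch = 0
for batch in iter_batches(cur, batch_size=1_000):
    total_rows += len(batch)                        # process the batch, then let it go
    largest_batch = max(largest_batch, len(batch))

print(total_rows, largest_batch)  # 10000 1000
conn.close()
```

Only one batch of rows is held by the loop at a time, so the peak number of rows in memory is bounded by the batch size rather than by the size of the full result.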
