
Incremental refresh problem

Hello,

I am having a problem with the refresh; the error is shown in the picture below.

It says that the database size before execution is 14.2 GB.

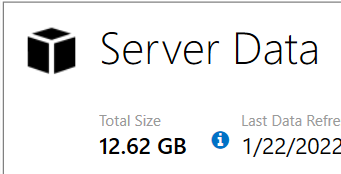

When I open the dataset in DAX Studio, it shows a size of 12.6 GB (a couple of Excel sheets are also connected, but they don't account for much of that size).

I have incremental refresh set up to refresh only a small partition of the data.

My question is: why is the database size before execution roughly the same as the full dataset size? It looks as if incremental refresh is not limiting the refresh to part of the data but is processing the whole dataset instead.

Appreciate any assistance.

Thanks in advance.


Hi @fhdalsafi ,

First, please make sure incremental refresh can actually work for your source. Incremental refresh is designed for data sources that support query folding: most relational sources, such as SQL databases, support it, while flat files typically do not. Could you tell me which data source you are using? Please refer to the following document to check whether you have configured it correctly.

Configure incremental refresh and real-time data

Typically, the effective memory limit for a command is calculated from the memory the capacity allows for the dataset (25 GB, 50 GB, or 100 GB) minus the memory the dataset is already consuming when the command starts executing. For example, a dataset using 12 GB on a P1 capacity (25 GB) leaves an effective memory limit of 13 GB for a new command. However, the effective memory limit can be further constrained by the DbPropMsmdRequestMemoryLimit XMLA property when an application optionally specifies it. Using the previous example, if 10 GB is specified in the DbPropMsmdRequestMemoryLimit property, the command's effective limit is further reduced to 10 GB.
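The arithmetic in the paragraph above can be sketched in a few lines of Python. This is only an illustration, not a Power BI API: `effective_memory_limit` and the SKU table are hypothetical helpers, and the mapping of the quoted limits (25, 50, 100 GB) to P1, P2 and P3 is an assumption to check against the current Microsoft documentation for your SKU.

```python
# Hypothetical per-dataset memory limits in GB (assumed: P1 = 25, P2 = 50, P3 = 100).
CAPACITY_LIMIT_GB = {"P1": 25, "P2": 50, "P3": 100}


def effective_memory_limit(sku, dataset_in_use_gb, request_limit_gb=None):
    """Sketch of the effective memory (GB) available to a new command.

    Start from the capacity's per-dataset allowance, subtract what the dataset
    already consumes when the command starts, and, if a
    DbPropMsmdRequestMemoryLimit value is given, take the smaller of the two.
    """
    limit = CAPACITY_LIMIT_GB[sku] - dataset_in_use_gb
    if request_limit_gb is not None:
        limit = min(limit, request_limit_gb)
    return limit


print(effective_memory_limit("P1", 12))      # 13
print(effective_memory_limit("P1", 12, 10))  # 10
```

With the figures from this thread, and assuming a P1 capacity (the original post does not say which SKU is in use), a dataset already at 14.2 GB would leave roughly 10.8 GB for the refresh command.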

To reduce the chance of exceeding the effective memory limit:

- Upgrade to a larger Premium capacity (SKU) size for the dataset.

- Reduce the memory footprint of your dataset by limiting the amount of data loaded with each refresh.

- For refresh operations through the XMLA endpoint, reduce the number of partitions being processed in parallel. Too many partitions being processed in parallel with a single command can exceed the effective memory limit.

More details: Resource governing command memory limit in Premium Gen 2

Best Regards

Community Support Team _ Polly

If this post helps, please consider accepting it as the solution to help other members find it more quickly.


Hi @fhdalsafi,

The message says it all. Do you have permission to manage the Premium capacity? Otherwise, you need to reduce the size of your dataset. As for your question: did you set up incremental refresh correctly, and did you apply it to the fact table?

Regards

Amine Jerbi

If I answered your question, please mark this thread as accepted, and you can follow me on My Website, LinkedIn and Facebook.
