- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Incremental Refresh takes almost same time as Full...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Incremental Refresh takes almost same time as Full refresh in PBI Service

Hi,

I have set up incremental refresh on 2 fact tables that are part of my dataset. I have given an active range of 12 months with an archive of 2 years and use LastModified to detect changes. I know the incremental refresh works as intended when I look at the partitions and last refreshed timestamps using the xmla endpoints. Only the needed partitions (1 or 2 out of the active 12) is getting refreshed.

What I observe though is that despite all this, the time it takes for a full refresh is apprx same as for an incremental refresh. From the capacity Planning metrics report, I observed that the ratio of cpu(s) to Duration howers around 250% for a full refresh and drops to apprx 150% for an incremental refresh. This drop is because the cpu(s) drop by almost 60% ( I assume that is because of less data in the incremental refresh) but the duration remains apprx the same. What causes this.

Any suggestions/advice would be welcome!

Thanks,

Anand

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Prachi,

1. Incremental refresh tends to have some overhead of its own since it manages partitions at the back end. (create new, merge existing ones etc). But overall it gets more effective as loads increase.

2. Also I think, the point about using an unmutable date is key for both performance and accuracy. Not really sure if this applies to your scenario specifically but typically, "last updated" is used with "detect data changes" setting to further speed up the refresh process. The refresh partitions should be based on an unmutable date (such as "Order Date" if its present in the sales table. I believe @lbendlin mentioned creation date as when the record was originally created in the source database and not when it was first loaded into the model ) so that 2022 records are its own partition in this example.

3. There are also model specific characteristics (no of calculated columns, presense of multiple text feilds in the fact data ) that can make the process slow down.

Thanks,

Anand

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @AnandGNR and @lbendlin

I have tried Incremental Refresh (Import Mode) with the TransactionDate where its in DateTime format having the different time stamps and Data detect change propery in Incremental Refresh is set to UpdateDate. But this process is also taking almost 40 mins (previously time taken was around 55-60 mins). Another observation is, data issue. For year 2023 data for 1st Jan is more (more than 2 M) compared to the actual data. This Incremental Refresh I am trying with the XMLA end-point method.

Has anyone faced such type of issue. And what are the solutions which you all have tried.

Thanks & Regards

Prachi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Keep in mind that "Detect Change" will create a canary table, doubling your space requirements.

Duplicate data means your filters are set incorrectly. Only one of the ranges can be inclusive, usually RangeStart.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@lbendlin Thank you for the quick revert.

Below are the parameters I am using for it,

= Table.SelectRows(#"Changed Type", each [TransactionDate] >= RangeStart and [TransactionDate] <= RangeEnd)

TransactionDate is in DateTime Format and I am using just date part from this column (named as TransactionsDate) to connect to the Calendar dimension table.

My queries:

1. Does the mentioned part above makes any difference in the data.

2. Detect data change I am seting on the UpdateDate - this the date (in our datawarehouse table) reflecting when the source data is actually updated. As TransactionDate is the combination 2-3 date columns combination from the source. So we are maintaining InsertDate (data loaded date) and UpdateDate (to reflect the updation in source data).

So, still you have the same concern about the data detect change using UpdateDate ?

Kindly suggest a solution for Import data.

Thanks and Regards,

Prachi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

= Table.SelectRows(#"Changed Type", each [TransactionDate] >= RangeStart and [TransactionDate] < RangeEnd)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey thanks, it worked. Can you help me with the Detect Data change option, because the refresh is still taking 40 mins. And I need to use UpdateDate for detecting the data chnges, its the requirement.

Thanks and Regards

Prachi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Disable that, it is useless.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

But then how can we keep a control of the data which is updated, as the UpdateDate is keeping track of such records at our DataWarehouse end.

As the transactions which are updated at a later date for whatever reason, UpdateDate is keeping track of such records.

For eg. My transaction is on date 10th Feb 2024 and this data is loaded in table. Then later this transaction data is updted on 14th Feb 2024, so I need to make the changes in my data in the warehouse. So in such scenario, is UpdateDate will be needed to keep track of updated records. So its needs to be in the Detect Data Change option?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is a fundamental misconception of how incremental refresh works its magic. Namely - there is no magic.

Incremental refresh is designed for immutable data, like IOT sensor data that will never change again.

What you are after is Differential Refresh - inserts AND updates AND deletions. That is not something Incremental Refresh can do. To mitigate that it is your responsibility to determine if and when to refresh older partitions, or to perform a full refresh across all partitions.

"Detect Data Change" attempts to do that for you but does so in a desastrous way. It creates canary partitions to be able to compare with the "live" data for that period. In doing so it doubles the amount of space required for your table, including partitions that won't ever change again (because there's no magical way to know that).

Let me repeat: it is your responsibility to determine if and when to refresh older partitions, or to perform a full refresh across all partitions.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello all,

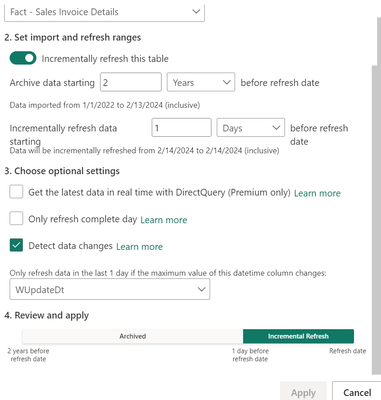

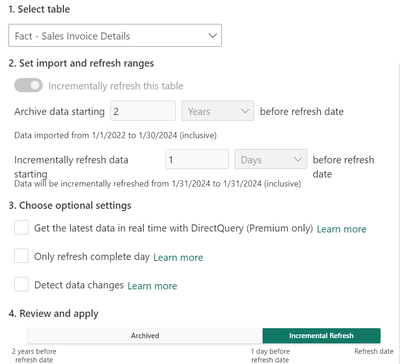

I have almost 4 GB dataset and I have Incremental Refresh on the Sales fact table as below:

Incremental refresh is taking almost 50-55 minutes and the normal refresh was taking 60-65 minutes. Can someone help me in knowing how to tackle the issue?

Data Connectivity is Import and database is Azure Synapse. And Power BI dataset is used.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Use SSMS or DAX Studio to check how many partitions that table has

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

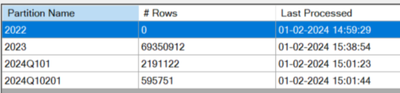

I am trying the Incremental refresh now with the XMLA end-point and the partitions I can see are as below for now

As the incremental is on UpdateDate, is the reason all 2022 records count is in 2023.

Lets us see how much time this XMLA method takes. But need to understand has anyone used this method for Incremental Refresh and how you find it working?

Is it helpful in resolving the long time refresh with the Incrementsl refresh?

Regards,

Prachi

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You cannot use UpdateDate for incremental refresh, that will lead to data duplicates. Use something immutable like CreatedDate.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Update date is the date when data is loaded from source to the destination so I think its same a the created date, and we have Incremental Refresh set just for a day.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello! Thanks for responding.

1. Data source for PBI are Synapse Serverless Views based on Gen2 Azure Data Lake (Delta - parquet files).

2. With regards to concurrency and time etc: defenitely are valids concerns but I did try to run tests (full and incremental) at different times of the day including some late hours post business. Results were always consistent so far in that full load refresh time is pretty much the same as the incremental refresh time with the same kind of range ratios that I had mentioned above when I look at the capacity metrics.

Partition sizes : roughly around 700,000 rows per active range monthly partition. I have added a snip of the distribution after an incremental refresh below.

One additional aspect that i found was that a model with a bigger footprint(archive with 10 years and active range of 12 months with the exact same row counts in the partitions) takes more time on an incremental refresh as compared to a smaller footprint model such as the one below ( 2 years archive and active range of 12 months) which also seems strange if incremental refresh hits only the needed partitions. In a bigger model too, i found the same behaviour of the full refresh just maybe a 1-2 mins longer than the incremental refresh.

Thanks,

Anand

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My guess would be that your data source takes a long time to provision each time you run any type of query, which then negates the supposed advantages of the incremental refresh. You can do real time monitoring of the dataset refresh to see the gap between connection start and data spooling start.

Visualise your Power BI refresh - in real-time - Phil Seamark on DAX

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I did try a trace using SQL profiler mentioned here. Also added Command Event in addition to Progress Events. The command is consistant with the TMSL for an incremental refresh.

{

"sequence": {

"maxParallelism": 6,

"operations": [

{

"refresh": {

"type": "full",

"applyRefreshPolicy": true,

"effectiveDate": "07/15/2022",

"objects": [

{

"database": "XXXXX"

}

]

}

}

]

}

}

Trace also shows that it only hits the needed partitions with a ExecuteSQL command post the "Analyze refresh policy" phase

If Synapse serverless views were slow, I would think that a full refresh would much slower since its hitting all partitions againt the same views based on looking at the Trace. Really drawing a blank..

Thanks,

Anand

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You have not specified what your data source is and what its performance is. There can be many other factors like time of day, concurrency settings at the data source, network conditions etc.

How many rows per partition?

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.