- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Incremental Refresh fails at around 2 AM

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Incremental Refresh fails at around 2 AM

Hello,

I have set up incremental refresh on my Power BI report for 3 tables with the correct set up of my parameters RangeStart and RangeEnd. I'm working with the Flow Power Automate to refresh my dataset every 10 minutes and my workspace is on a premium capacity.

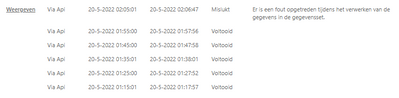

I have been testing my incremental refresh 2 days and during the day it works perfectly but I've seen that at around 02 AM at night it crashes... stating that there's a duplicate value in my table (the typical error that everybody has with incremental refresh)

When I do the full refresh of my tables in SSMS via de XMLA-endpoints it works and then I can reuse my incremental refresh again...

Someone who had the same problem like me and can help me with this?

Kind regards

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is the file date meaningful? Can it be used as part of the PK ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @lbendlin

Thank you for your answer but the RangeStart and RangeEnd (see code underneath) parameters are set correctly because my incremental refresh runs during the whole day and the right partitions are created with the correct amount of rows for each partition. I've tested it for 3 days straight and each time past midnight it fails. If I want to run it again, I need to run a full load via the XMLA endpoints and then the incremental refresh works again till past midnight...

The incremental refresh fails on the table with the code underneath. Could it be possible that the problem is caused by the second line 'ReplaceNullByDate'? I have some fields that don't have a datetime but they need to be included anyway so I'm using the current datetime.localnow value so the incremental refresh includes it during the refresh.

let

Source = Table.Combine({ Exercises, Stations, Areas, VPEs_RWYs }),

ReplaceNullByDate = Table.ReplaceValue(Source, null, DateTime.From(Date.From(DateTime.LocalNow())), Replacer.ReplaceValue, { "Date" }),

SelectRowsIncrementalRefresh = Table.SelectRows(ReplaceNullByDate, each [Date] >= RangeStart and [Date] < RangeEnd)

in

SelectRowsIncrementalRefresh

I'm indeed in CEST time zone so I presume that the refresh fails at 00:05 UTC time.

Kind regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oh, you are skating on thin ice here. Why do you have null date values in your source tables? Are they consistently null, or randomly? Will they be replaced by other values later or are they immutable?

Let's say on monday you have null values. You use daily partitions. Then on tuesday you are running the same refresh again. Now your tuesday partition carries the rows, but worst case your monday partition does not get updated and now you ended up with duplicate data...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @lbendlin

Well the tables I combine into one dataset for the incremental refresh have some empty datetime values. That's why I use the 'ReplaceNullByDate' to fill in those null values by the 'DateTime.LocalNow()' before using the incremental refresh. So during each refresh the null values are replaced by local datetime and then the incremental refresh does its work by putting those values into the right partition. During day, those values are stored into the correct partition as I have the same amount of rows.

let

Source = Table.Combine({ Exercises, Stations, Areas, VPEs_RWYs }),

ReplaceNullByDate = Table.ReplaceValue(Source, null, DateTime.From(Date.From(DateTime.LocalNow())), Replacer.ReplaceValue, { "Date" }),

SelectRowsIncrementalRefresh = Table.SelectRows(ReplaceNullByDate, each [Date] >= RangeStart and [Date] < RangeEnd)

in

SelectRowsIncrementalRefreshThose rows that had null values are consistent, like the stations, areas and VPEs_RWYS stay the same each time the refresh happens.

I don't really understand your last sentence? How can my values be null if I fill them with 'DateTime.LocalNow()'?

Thanks for your time to figure this out with me but it's really difficult to understand what's happening if the refresh works the whole day but fails when jumping on a new day.

Kind regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

" then the incremental refresh does its work by putting those values into the right partition."

I would challenge that. I think your process leads to lots of duplicate rows because every day

DateTime.LocalNow()will point to a different partition. This is likely also the reason for your refresh to fail, as your partition pointer will change dynamically, in the middle of a refresh.

Find a way to NOT use

DateTime.LocalNow()in your process.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @lbendlin

I've did some tests and apparently it's not because of the datetime.localnow() or rows without a date.

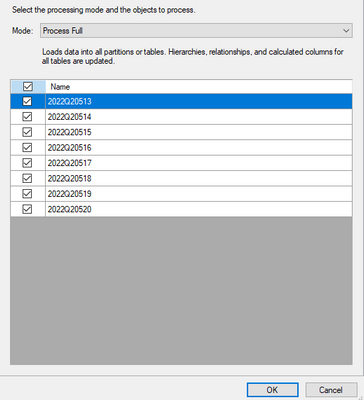

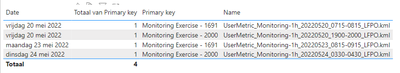

I don't have a primary key in my table because this is data imported from files in Azure Data Lake. Each file contains multiple rows but they only differ based on a row which contains coordinates. What I do is creating an index column in Power Query M to make each row unique but apparantly this is the problem. When I'm archiving some data, the index is being regenerated which causes some rows having the same index (primary key).

As you can see in my image (I broke the relationships to not have the error during my incremental refresh but you see that I have the same primary keys for 2 different dates.

Is this possible? How do I create a primary key without having this issue?

Kind regards

Kind regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is the file date meaningful? Can it be used as part of the PK ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @lbendlin ,

That's a good idea. A concatenation of category and index was not enough like in the image. I've used some other fields like the datetime and the incremental refresh worked during the day change! As I only keep data for 7 days some rows are removed and new ones are added again, the 'Table.AddIndexColumn' always creates new index values sequentially and there was the problem of having two times 'Monitoring Exercise - 1691'.

Thanks again!

Kind regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

"stating that there's a duplicate value in my table (the typical error that everybody has with incremental refresh)"

i would very much hope that this is the exception. Likely caused by incorrectly including both RangeStart and RangeEnd. One of them must be inclusive, the other must be exclusive.

Are you in CEST time zone? Power BI service runs on UTC.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.