- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Import huge amout of data from BigQuery

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Import huge amout of data from BigQuery

Hello,

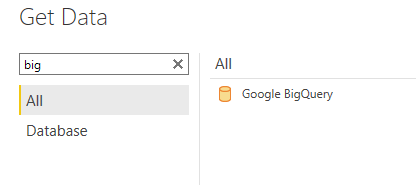

we strongly need to import about 8 GB of Data from BigQuery to our PowerBI Dataset twiche a day. To make this work we have to send an aditional Parameter "allowLargeResults" in our query or Google BigQuery cancels the request with a "Response too large to return" error.

Is there any way to solve this and import a huge amount of data from Google BigQuery without a gateway?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi! Did you solve this without a gateway? I've been trying like you to set the "allowLargeResuls" paramenter without success. Any hint would be great!

Regards!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hej @matuchote4 ,

sadly we can't set ODBC Parameters for connections so there is no solution for this. I made a microsoft idea right now, if you like you can upvote this: Microsoft Idea · Setting ODBC parameters (powerbi.com)

Best regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @dd8zc

Due to the nature of work, we can't make any reply outside the post. For your question, I would like to know why not try the following way, it can use DQ mode without limiting the size of the data. If you use odbc, then the import mode only allows pbix files within 1gb.

Best Regards

Janey Guo

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @v-janeyg-msft ,

our solution down allow us DQ (with a lot of users, DirectQuery is getting very expensive).

We don't have 1 gb PBIX limitations using other sources via ODBC which work fine.

Best regards,

dd8zc

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @dd8zc

If you don't want to use DQ, you can try to import part of the data each time, and finally integrate them together. If the data source is connected to support the use of parameters to filter the data, it can be filtered multiple times and then imported. If not, then the data of the data source needs to be divided into several parts and imported separately.

Best Regards

Janey Guo

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

unfortunatly BigQuery adapter dont support the use of parameters. If we could send the parameter "allowLargeResults" everything would be finde.

Do you have a documentation how to import several parts and imported separately into the same dataset without inkremental load?

Thanks

dd8zc

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hej @v-janeyg-msft ,

that sounds awesome. How can I import one table at a time? The dataset is stored in https://app.powerbi.com/, is there any possibility set options to "refresh" (I didn't found any)?

Also, how could this solve my issue with a big source? Even if I try to import one table at a time, one table will be still to hughe to import because I can't set the "allowLargeResults" parameter.

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @dd8zc

If importing one table at a time doesn't work, Data may indeed be too large. DQ mode is recommended.

What do you mean by 'The dataset is stored in https://app.powerbi.com/, '. If he dataset can be stored in PBI service, why can't it be imported? Do you try to connect in PBI desktop?

Best Regards

Janey Guo

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hej @v-janeyg-msft ,

DQ is not an option. We're expermimenting in exporting from BigQuery to Buckets / BlogStorage and import from these as a workaround.

If there is a board for feature requests: we would love so send ODBC Parameters to our sources. I'm sure we're not the only one.

Best regards

dd8zc

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @dd8zc

According to your description, If the data is large, it is recommended to use DQ mode to connect. Have you tried?

Reference:Use DirectQuery in Power BI Desktop - Power BI | Microsoft Docs

If it doesn’t solve your problem, please feel free to ask me.

Best Regards

Janey Guo

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

you can import chunks that fall below the BigQuery limit and then append the results in Power Query.

Or consider using incremental refresh.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hej @lbendlin ,

sadly we can't use incremental refresh. How can I import just chunks? Is there a parameter used by the BigQuery Connector?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @dd8zc !

Did you manage to upload the data in the end?

I faced the same problem, and so far nothing helps

Thanks in advance for your reply!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hej @analytics_wow ,

because we can't use incremental refresh we changed the data source and are loading some stuff from BigQuery and other stuff from a different source.

Best solution should be to load incremental from a growing BigQuery if this is possible on your side.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.