Error on PBI Service dataset refresh

Hi!

My model includes data sources from Databricks (cloud), Oracle (on-prem) and SharePoint (cloud).

For the Oracle data the gateway is set up and runs perfectly (in all my dataflows). All privacy options are set to "organizational". I use a deployment pipeline with three workspaces (dev, test, prod). I work with PBI Desktop April 2022, as I am aware there are issues with the May 2022 update and the Databricks connector.

In PBI Desktop I am able to refresh my model without any issues. I publish to the dev workspace and check the privacy settings and the gateways/credentials - the refresh runs without errors.

I move the dataset from the dev to the test workspace via my deployment pipeline. Again I made sure that the privacy settings and gateways/credentials are the same as in the dev workspace, but this time I get an error:

"A retriable error occurred while attempting to download a result file from the cloud store but the retry limit had been exceeded"

The table mentioned in the error message is a table that combines Oracle (on-prem) and Databricks (cloud) data.

Any idea what I am doing wrong? Why is it working in one workspace but not in the other, despite the same gateways, credentials and privacy setup? Thanks in advance!

Did you connect in DirectQuery mode or Import mode?

Hi @Anonymous,

I connect via import mode.

Hi @daniel_st,

Indeed, last Friday we figured out that an additional parameter has to be added in the source step. Please give it a try and let me know if it works for you, too.

The issue might be related to Databricks' new Cloud Fetch architecture in runtime 8.3 and above. With a cluster runtime of 7.3, the refresh on the service worked smoothly for the same dataset. The issue only occurs when merging on-prem data with cloud data in Power Query and using an on-prem enterprise gateway. Since 7.3 reaches end of support in September, staying on it is not an option; the fix below worked for clusters running 10.4, at least in our environment.

= Databricks.Catalogs("xyz.cloud.databricks.com", "sql/protocolv1/o/0/clusterxyz", [Catalog=null, Database=null, EnableExperimentalFlagsV1_1_0=null, EnableQueryResultDownload="0"])
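For readers who want to see where that expression sits, here is a minimal sketch of a complete query built around the same source step. The host name and HTTP path are the placeholders from the line above, and the step name `Options` is only an illustrative choice; the navigation steps that Power BI Desktop generates after `Source` are omitted and would stay as they are in your own query.

```powerquery
let
    // Options record copied from the source step above.
    // EnableQueryResultDownload = "0" is the setting reported in this thread
    // to make the refresh through the gateway succeed.
    Options = [
        Catalog = null,
        Database = null,
        EnableExperimentalFlagsV1_1_0 = null,
        EnableQueryResultDownload = "0"
    ],
    // Placeholder host and HTTP path; replace them with your cluster's values.
    Source = Databricks.Catalogs(
        "xyz.cloud.databricks.com",
        "sql/protocolv1/o/0/clusterxyz",
        Options
    )
in
    Source
```

Only the options record differs from what the connector generates by default, so in an existing query it should be enough to edit the `Source` step in the Advanced Editor and leave the later steps untouched.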

copy @GilbertQ

Worked for me, thank you.

Hi @Fromit87,

Thanks for your reply. I'm aware of the parameter EnableQueryResultDownload="0".

Microsoft and Databricks suggested setting this parameter in order to get it running. However, when you set this parameter you disable Cloud Fetch, and importing data becomes very slow.

I noticed that the issue doesn't occur when I go through the public internet (without any gateway in place, connecting directly from the PBI service to Databricks).

Let's hope that Microsoft updates the driver in the next release.

I am having the same issue. Adding that parameter works fine in Desktop; however, refreshing just ~25k records in the Power BI service takes about 2 minutes, which is not acceptable performance.

I have been following up with the MS team and the Databricks team on this and keep getting the same answer: add the parameter. For security reasons, I cannot connect without the gateway.

Are you able to confirm how slow the performance was in your case?

Hi @GilbertQ,

Indeed, it's weird. I set up a completely new PBI file with a new table that combines an Oracle and a Databricks source connection (I created a new connection, no copy/pasting).

Refresh works fine in PBI Desktop, but in the service I get the same error:

"A retriable error occurred while attempting to download a result file from the cloud store but the retry limit had been exceeded."

Are you using a Power BI gateway in between? If so, make sure you have installed the latest version. We had the very same issue and it was fixed with the November release. Hope that helps for you too 🙂

Thanks @daniel_st, lucky you. Unfortunately we have the latest gateway release running, but I still get the error when merging Databricks with other non-Databricks sources. It only works with the parameter EnableQueryResultDownload="0".

Did anyone find any solution for this?

Neither MS nor Databricks is helping enough on this, and they keep passing it back and forth to each other.

Same here, MS / Databricks are not helpful...

Does the latest announcement maybe change something in this regard?

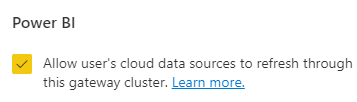

Yeah that is strange it could be a setup on your gateway, do you have the cloud and on-premise data sources configured in the gateway as shown below?

Hi @GilbertQ,

I checked with my IT department, and yes, the gateway settings have this option activated. My IT department has raised a ticket with MS. As soon as they find the root cause and a fix, I'll post it here.

Thank you for your time and assistance so far! Appreciated!

Fromit87

Hi @Fromit87

Can you confirm that your Dev workspace is pointing via the gateway to the same source as in Power BI Desktop?

Hi @GilbertQ,

Yes, sources are identical in the Gateway and PBI Desktop. This morning the refresh on the Dev workspace failed as well with the same error as mentioned in my original post.

I should also mention that the model refresh in the service worked until a week ago. The only thing I changed was moving all Databricks sources to the Databricks E2 environment (that entailed changing the server address and path address to the new Databricks cluster).

Hi @Fromit87

That does seem a little weird. If you create a new data source pointing to the new Databricks cluster, can you confirm whether it works?
