Enabling large models for a dataset of 97gb

Hi,

I have been trying to upload a 97.8 GB dataset to the Power BI service with a Premium account. I have enabled Premium Files, which means I should not have any limitation on dataset size other than the size of the Premium capacity.

I defined the incremental refresh policy and uploaded the dataset with one day's data, which is around 700k. However, when I refresh the dataset, I receive an error saying: "The refresh operation failed because it took more than 120 minutes to complete. Consider reducing the size of your dataset or breaking it up into smaller datasets".

Please note that this also coincided with the Azure services being down on 14th September.

I would really appreciate it if someone could guide me on the right way to do this. Is it even possible to upload a 97 GB dataset to the Power BI service?

Thanks!

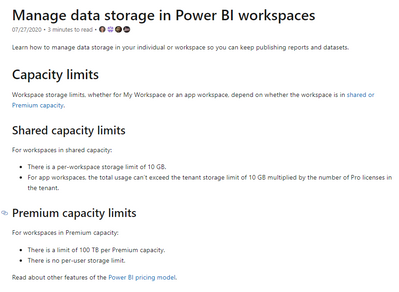

Hi @sunildatalytyx - There is a limit of 100TB if you are on the Premium license; see the Microsoft link below for details.

Also, have you looked into optimizing your data model to reduce its size? The link below has some tips for reducing model size.

https://www.youtube.com/watch?v=c-ZqToc85Yc

Please mark the post as a solution if my comment helped with solving your issue. Thanks!

Proud to be a Super User!

Sumanth,

Thank you for your response. Yes, I am aware of this, but since my dataset is 97.8 GB, I do not expect any optimization to change its size drastically.

Hi, @sunildatalytyx

As mentioned in this document, "Scheduled refresh for imported datasets timeout after two hours. This timeout is increased to five hours for datasets in Premium workspaces. If you encounter this limit, consider reducing the size or complexity of your dataset, or consider breaking the dataset into smaller pieces."

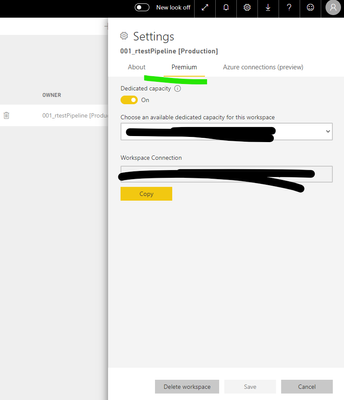

Can you first re-check whether dedicated capacity has been successfully allocated to this workspace?

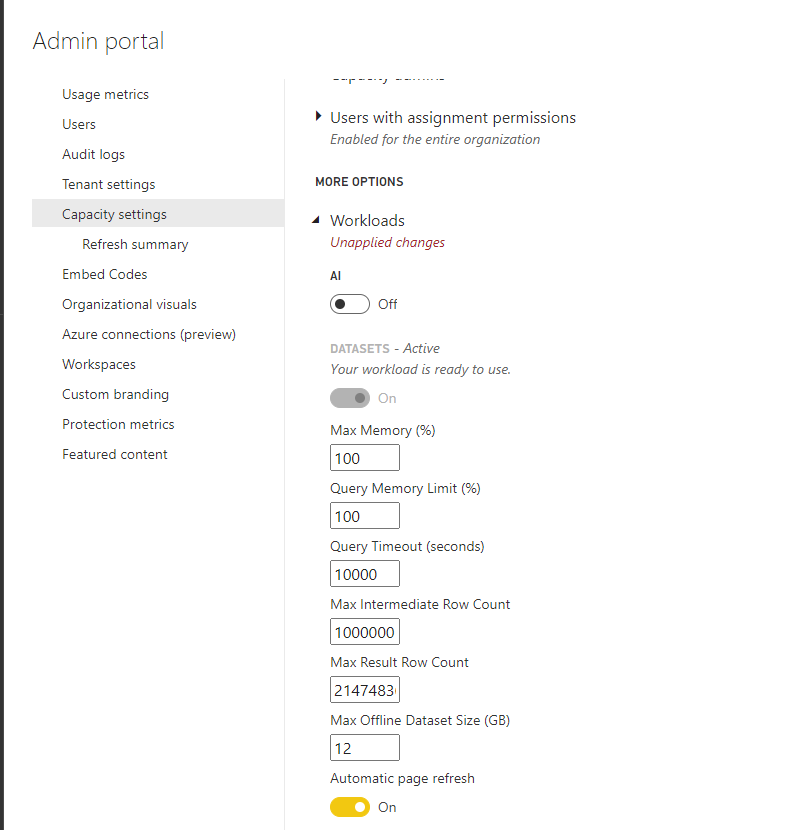

You can also configure the datasets workload. For example, you can try increasing the Max Query Timeout and Max Offline Dataset Size settings.

Best Regards,

Community Support Team _ Eason

Hi @v-easonf-msft,

Thank you for your response.

I am not sure the max query timeout will resolve the issue, as queries on imported datasets have a hard ceiling of 5 hours on Premium capacity. I was able to upload 6 months of data, which took around 2.5 hours, but it fails when I try to load 1 or 3 years of data. Can you please shed some light on how this setting would help in my situation?

And yes, the workspace is allocated to the dedicated capacity.
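The timings in this post can be turned into a rough estimate. The sketch below assumes refresh time grows linearly with the number of months loaded, which is only an approximation. The function name and the constant are hypothetical, chosen for illustration; the 2.5-hour and 5-hour figures come from this thread.

```python
# Rough, hypothetical estimate: assumes refresh time scales linearly with months loaded.
PREMIUM_TIMEOUT_HOURS = 5.0  # timeout for Premium workspaces quoted in this thread


def estimated_refresh_hours(months, observed_months=6, observed_hours=2.5):
    """Extrapolate refresh duration from one observed load (6 months in 2.5 hours)."""
    return observed_hours * months / observed_months


if __name__ == "__main__":
    for months in (6, 12, 36):
        hours = estimated_refresh_hours(months)
        verdict = "under" if hours < PREMIUM_TIMEOUT_HOURS else "at or over"
        print(f"{months} months: about {hours:.1f} h ({verdict} the 5 h limit)")
```

Under that assumption, a single refresh of one year already reaches the limit and three years would need roughly three times the allowed window, which is consistent with the failures described.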

With regard to size and complexity, I don't think I can do anything, as the client wants the entire table for their internal analysis; this is rolling 3-year data. As for breaking the dataset into smaller chunks, I don't think that is possible, considering the points below:

1. I need the complete 3 years of rolling data as one dataset, so if I break it into pieces, I am not sure how I can present a single entity to the end user.

2. How do I actually upload the 3 years of data 6 months at a time? Are you suggesting physically downloading the data into Power BI Desktop and then importing it into the Power BI service? If that's the way, the problem is that the data will be static rather than rolling.

3. How do I implement incremental refresh individually on each 6-month period, given that it takes the current UTC time and pulls data for the last days/months/years? I am not sure how this would work.
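To make the chunking in the points above concrete, here is a minimal sketch of how a rolling 3-year window could be cut into 6-month date ranges anchored on the current date. It only illustrates the date arithmetic; the helper names are hypothetical, and it does not call Power BI or reproduce how the service builds its own incremental refresh partitions.

```python
from datetime import date


def add_months(d, months):
    """Shift a first-of-month date by a (possibly negative) number of months."""
    index = d.year * 12 + (d.month - 1) + months
    return date(index // 12, index % 12 + 1, 1)


def rolling_windows(today, total_months=36, chunk_months=6):
    """Return half-open (start, end) ranges covering the rolling window up to this month."""
    end = add_months(date(today.year, today.month, 1), 1)  # first day of next month
    start = add_months(end, -total_months)
    windows = []
    while start < end:
        stop = min(add_months(start, chunk_months), end)
        windows.append((start, stop))
        start = stop
    return windows


if __name__ == "__main__":
    for start, stop in rolling_windows(date.today()):
        print(f"load rows where {start} <= date < {stop}")
```

Because the boundaries are recomputed from the current date, the six ranges move forward together as time passes, so the combined window stays rolling while each range is small enough to be loaded on its own.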

Are there any other ways for me to bulk upload this 100 GB of data to Premium Files and then use it through Power BI?

It would be really helpful if you could shed some light on how I can resolve this.

Thank you again for the response; I really appreciate it.

Cheers!
