- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Dataflows and large datasets

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dataflows and large datasets

I would like to know what exactly the recommendation is for using dataflows with large data sources.

Currently, when I make changes to a dataset that is connected to a database, I need to restrict the query in the desktop file to only include the last couple of months, by using the RangeStart and RangeEnd parameters.

Since the dataset covers the last 2 years it is not practical to load the entire history in the local desktop file, as it comprises over 160 million rows.

So, when the data is published, the incremental policy takes care of the historical data. This works fine, except for the fact that between the initial upload, and when the complete refresh in the service is complete, our users can only see the last two months’ data.

Therefore, I would like to use dataflows to try and deal with this issue. So far it’s pretty encouraging - load times seem to be a little bit quicker. And I can switch from a database connection to a dataflow connection seamlessly. But, if I want to extend the historical size of the dataflow - using the example above, from 2 months to 24 months - it seems like the change in the dataflow incremental load policy is not reflected in the published dataset in Service. To achieve this, it looks like I would need to reload the data in the desktop file, and publish this again.

What would make sense to me is if Power BI Desktop could use a snapshot or preview of the dataflow to create a dataset, without having to download the entire thing and then upload it back again.

Have I missed something important? Because if not, this seems to me to be incredibly inefficient…

I know that I can change the incremental policy temporarily, reload the dataflow, use the abbreviated resultant dataset in desktop, make changes, re-publish and then switch the incremental policy back, but still... it doesn't feel like this is the best solution.

Can anyone suggest any improvements I could make to this, or solutions?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

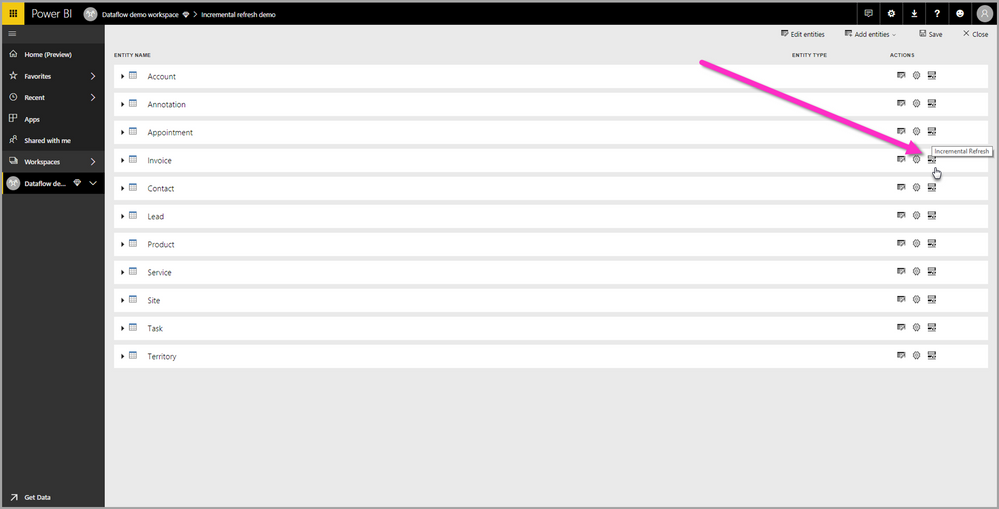

You can set incremental refresh for dataflow in Power BI Service via clicking incremental refresh icon and needn't to republish , see more detail: Using incremental refresh with Power BI dataflows .

You can also learn more: How to Use Dataflow to Make the Refresh of Power BI Solution FASTER!

Best Regards,

Amy

Community Support Team _ Amy

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @v-xicai but setting the incremental load in itself is not the issue. I have done this several times and I'm happy with it, and also with connecting dataflows to other sources etc.

The problem is figuring out the best way to work with existing datasets based on either source data (in databases) or from dataflows, that have quite a lot of history.

Please see my reply to Jeff for additional information.

The single question that I would like to be answered is:

"What is the optimal way to make changes to a large dataset, with signficant historical data, that has a minimal impact on my organisation's users?"

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Have you found a solution since then ? I face the same issue with multiple huge dataflows used in the dataset, which takes tremendeous amount of time to download, and then to upload back to PBI Service. It has not real value, since once the dataset is published back to PBI Service, it will refresh anyway 😕

Thanks !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I haven't used incremental refresh in datasets but have in dataflows. They operate separately--incrementally load data into your dataflow, and incrementally load data into your dataset.

For development and prototyping, I create a "sample" dataflow with 1,000 records from each entity in my source dataflow and use that in Power BI Desktop. When I'm ready, I switch to the full dataflow. Of course all the data still has to be downloaded to Power BI Desktop just to refresh and publish up to the cloud again. I agree this is inefficient and I hope this functionality is evolving within the product team.

I also separate my "ingest" dataflows from my "final" dataflows that are used in downstream dataflows/datasets. So you could insert this sample filter in the "final" dataflow, and then just remove it when you want the full entity loaded. This is nice because you can publish with the sample set, update the dataflow, and then refresh the dataset to pull in the full entity.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apologies for the delay, but thanks a lot for the detailed response, that’s good to know.

On reflection, I think I might be able to use dataflows in conjunction with the REST API in order to achieve what I’m looking for, if a dataset is based on one or more dataflows.

This would be my suggested workflow for changes to a given dataset, using the API as much as possible:

- Save 2 copies of the dataset, one suffixed _bkp (for backup) and one suffixed _dev (development)

- Change the incremental load policy to 1 month (or whatever time period provides a small enough example to work with)

- Download the _dev dataset

- Refresh the data from the flow, so that it only covers the limited time period

- Make all required changes

- Upload the updated _dev dataset

- Reset the incremental policy of the dataflow to normal

- Refresh dataflow

- If changes all appear correctly, copy _dev over the production dataset

- Delete _dev, and archive _bkp

If there are problems, then additional steps would of course be necessary to address them.

I would be happy to implement all this - however, given the fact that the new deployment pipeline is imminent, I’m not sure it would be worth the effort.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.