- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Dataflows, Azure and Azure SQL processing large da...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dataflows, Azure and Azure SQL processing large dataset

Hey all,

I'm reaching out here because I have a pretty unique situation that I am facing. To further explain this I'd like to mention what we are doing and what we are trying to accomplish. Skip to each section as needed, this is a rather lengthy explanation so I apologize in advance.

Process:

We have set up Log Analytics for about 90% of our workspaces (on Premium). We needed this data historically, which is why the current Microsoft solution does not work (I think they go back 30 days).

Our process was to load the Log Analytics into a Resource Group in Azure, from that Resource group we complete a process that loads all of the logs into Azure SQL. By the way, the data we have there is amazing, but its quite large. We have down to the DAX level computations and their CPU/Duration usage. We have data starting in January up until current, which equates to about 7 million rows. Ignoring the row-size, its the columns that creates a massive problem. Since we have an EventText column that includes the actual DAX query, some of those fields can be rather large. All in all, the dataset is about 30 GB in size.

Goal:

My goal is rather simple, I was tasked with finding a way to connect Activity Data (API) to Performance data (Log Analytics). Wasn't an easy task, but I was able to join the datasets in 5 minute increments, we are able to see who did what and when, and how much it cost our server (computationally).

I am trying to get a Dataflow to load all of the Azure SQL, but I keep getting hit with timeouts. Back to the 30GB in size dataset, I just need to get this loaded 1 single time so I can set up an incremental and not focus on the past and only load 1 day at a time. Unfortunately the Dataflow does not process the data fast enough to stay under 10 minutes.

For reference:

P2 SKU

Azure SQL 8 CPU server

The problem looks to be the throughput, where we are only processing roughly a MB/sec, which will never finish before 10 minutes. So my idea of using a Dataflow seems to be a waste of time, it would be nearly impossible to reload this entire dataset daily as it grows. It definitely needs to be incremental.

What we tried:

-Loaded the entire table into a CSV and stored on a Data Lake, again, 30GB and it just doesn't process fast enough - we also got errors in the data load (I checked the errors and they weren't true)

-Created a rolling 3 day SQL table that holds only 3 days worth of Performance data. We thought that we could load this table into a Dataflow, then the dataflow would keep the stored data and we could keep old data and continue to fetch new data and store. Unfortunately Dataflows dont look like they actually store the data on incremental. It just became a rolling 3 day dataset in Power BI.

Has anyone been down this path? Does anyone have any suggestions for what we can do? The ultimate goal is to get the entire table in a dataflow, keep it stored and just increment 1 day at a time. We dont care how long the first load takes, I can run that overnight... the following refreshes will only take a fraction of time (usually about 2-3 minutes). I would load the entire dataset into a dataflow but it cant process due to time restraints. Just receive timeout errors every time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What's the cardinality like on the dax query column? If people are just accesing power bi reports I would guess there is a large amount of repetition?

If so could you use azure data factory to process it into a dimensional model in sql before you load? Eg the dax query column becomes a dimension table.

I have very little experience with azure data factory but that approach would work in on premise SSIS.

An alternative would be to have your incremental dataflow point at a view in SQL. Intitially you could put a date filter in the view to limit to the first few weeks. Run the dataflow. Extend the filter in the view. Run again and build up gradually.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey Ben,

I like the idea with the view and gradual expansion. That is a possible solution (albeit a bit painful). I also suggested breaking the DAX column out to its own table and it would significantly shrink the data size, but the other side of that is we are duplicating data. Instead of 7 million rows, we'll have 14 million... just spanned over 2 tables.

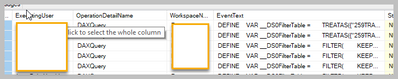

So the cardinality on the DAX column is 1-1 on the Performance level. Meaning each "transaction" has its own ID and what happened. There is an additional event type column, since a user doesnt necessarily need to use DAX to create a performance transaction. Here's an example below, I'm not including all the columns on this but you get the idea. There are other ones for CPU usage and Duration as well that are helpful.

The issue with cardinality comes when you JOIN to the Activity Log table (This is done through the Admin APIs, which gather specifically what a user is doing). The JOIN is tricky, but I was able to create a 5 min interval that defines a user doing "something" and join it to the performance data at that point with the same user in that same time frame doing "something". There is no direct JOIN between Activity Log and Performance Logs as far as I have seen.

Basically what this means is we are able to see what a user is doing within a 5 minute window and what they did, but it is possible for duplication... except at that level it doesn't hold a ton of importance because we still see what they did and how it had an effect on our performance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'll have a play with our log analytics tomorrow and give it some thought. As said I'm new to the azure id of life, how are you getting data from log analytics into your sql server?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That question is a bit outside of my realm, I'm working with a DBA as well. My guess is that he either runs it through Data Factory or some sort of direct feed from the storage on the Resource Group directly into SQL. I Know we have a few SPROCs running to keep the data clean and loading properly.

It can get pretty daunting because theres so much data (Depending on how active your users are).

It would be really cool if Microsoft actually did this and stored this in an efficient environment. I know they have the data but we just can't see it or access it other than the pre-loaded template reports they provide.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.