Dataflow to Dataset refresh memory usage

I am trying to nail down our memory usage. For background, we have Power BI A2 Premium embedded, used by our application, with all our data stored in an Azure DB and DW. We have to move our capacity to A3 just to refresh one dataset, our largest. I had a thought to split some of the queries out into dataflows and refresh the dataset from those dataflows. Logically, it seems this might not work, since resources have to be used first for the dataflow refresh and then for the dataset refresh, and a dataflow is just a data storage method. However, I thought it might cut down our memory usage because part of the dataset's "refresh" would read from an optimized source, the dataflow. Am I totally wrong about how the architecture of the service handles dataflow and dataset refreshes? To be clear, I'm not worried about storage size. I'm more concerned with the 5 GB memory limit of A2.

Hi @NetJer20

When refreshing a dataflow, the dataflow workload spawns a container for each entity in the dataflow. Each container can take memory up to the volume specified in the Container Size setting. The default for all SKUs is 700 MB. You might want to change this setting if:

- Dataflows take too long to refresh, or dataflow refresh fails on a timeout.

- Dataflow entities include computation steps, for example, a join.

It's recommended you use the Power BI Premium Capacity Metrics app to analyze Dataflow workload performance.

In some cases, increasing container size may not improve performance. For example, if the dataflow is getting data only from a source without performing significant calculations, changing container size probably won't help. Increasing container size might help if it will enable the Dataflow workload to allocate more memory for entity refresh operations. By having more memory allocated, it can reduce the time it takes to refresh heavily computed entities.

The Container Size value can't exceed the maximum memory for the Dataflows workload. For example, a P1 capacity has 25GB of memory. If the Dataflow workload Max Memory (%) is set to 20%, Container Size (MB) cannot exceed 5000. In all cases, the Container Size cannot exceed the Max Memory, even if you set a higher value.
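The arithmetic behind that cap can be sketched in a few lines of Python. This is only an illustration, not part of the service: the function names are hypothetical, 1 GB is treated as 1000 MB to match the P1 example above, and the 20% setting applied to the 5 GB A2 capacity is an assumed value.

```python
# Hypothetical helpers illustrating the Container Size cap described above.
# Assumption: 1 GB is treated as 1000 MB, matching the P1 example (25 GB at 20% -> 5000 MB).

DEFAULT_CONTAINER_SIZE_MB = 700  # default stated above for all SKUs


def max_container_size_mb(capacity_memory_gb: int, max_memory_pct: int) -> int:
    """Upper bound for Container Size (MB), given the Dataflows workload Max Memory (%)."""
    return capacity_memory_gb * 1000 * max_memory_pct // 100


def effective_container_size_mb(
    requested_mb: int, capacity_memory_gb: int, max_memory_pct: int
) -> int:
    """Container Size that can actually apply: the request, capped at the workload maximum."""
    return min(requested_mb, max_container_size_mb(capacity_memory_gb, max_memory_pct))


if __name__ == "__main__":
    # P1 example from the text: 25 GB capacity, Max Memory set to 20%.
    print(max_container_size_mb(25, 20))
    # A request above the cap is limited to the cap.
    print(effective_container_size_mb(8000, 25, 20))
    # A2 with 5 GB memory (from the question); the 20% setting is an assumed value.
    print(effective_container_size_mb(DEFAULT_CONTAINER_SIZE_MB, 5, 20))
```

On a small capacity such as A2, the cap leaves little headroom above the 700 MB default, so raising Container Size there may give limited benefit.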

If you'd like to refresh a dataset with a large model, you might refer to the official documentation, which notes that you can use incremental refresh to configure a dataset to grow beyond 10 GB:

https://docs.microsoft.com/en-us/power-bi/service-premium-large-models

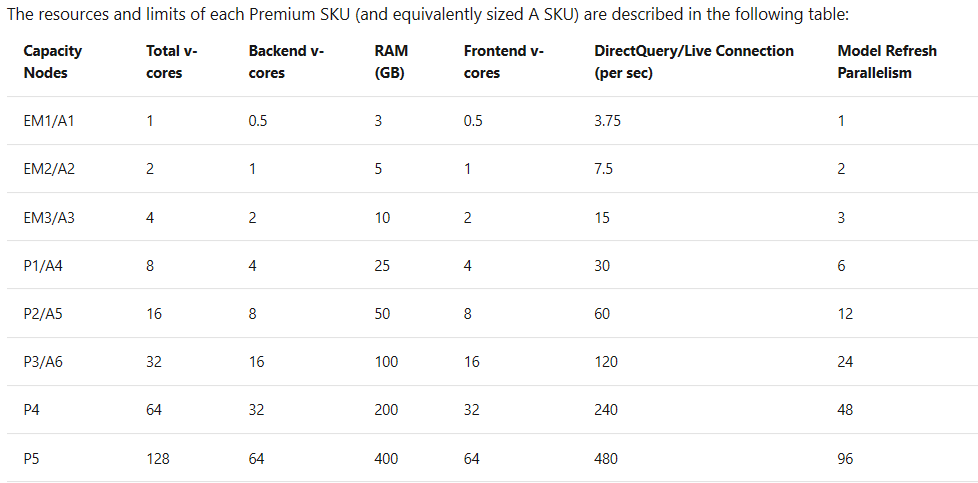

Also, if you'd like to split the dataset into several dataflows and run a number of model refreshes in parallel, please note the limitations described here:

https://docs.microsoft.com/en-us/power-bi/service-premium-what-is#capacity-nodes

If this post helps, please consider accepting it as the solution to help other members find it more quickly.
