- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Dataflow error for huge records - Evaluation r...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dataflow error for huge records - Evaluation resulted in a stack overflow

I have a table with lakhs of records. 'Time' is one of the fields. I have created a function fetchlatest to get the latest record based on 'time'. As you can see in the below code, the function basically groups the table based on unique key (GroupByIds) and then create column 'MaxDateTime'. Then filter out the records which has time = MaxDateTime.

I validated the function with a table having thousands of records and it worked fine. But when I did scale testing with lakhs of records, I am getting the error 'We encountered an error during evaluation. Errors- Evaluation resulted in a stack overflow and cannot continue'. When I manually tested each step, it happens at the step Table.Group.

let

FetchLatest = (Table , AllProperties, GroupByIds) =>

let

Properties = List.Difference(AllProperties, GroupByIds),

GroupRowsById = Table.Group( Table, GroupByIds, {{"MaxDateTime", each List.Max([time]), type datetime}, {"All rows", each _, type table}}),

ExpandGroupedRows = Table.ExpandTableColumn(GroupRowsById , "All rows", Properties, Properties),

FilterMaxTimeRow = Table.SelectRows(ExpandGroupedRows, each [time] = [MaxDateTime]),

RemoveExtraColumns = Table.RemoveColumns(FilterMaxTimeRow ,{"MaxDateTime"}),

Result =

if List.IsEmpty(GroupByIds) = true

then Table

else RemoveExtraColumns

in

Result

in

FetchLatest

I have shared pbix and excel in this link: pbix and excel table

Please note that I used Dataflows and the code was written in powerquery online.

To test the functionality in powerBI desktop, this is what I have done:

1. Fetch table from blobsource in dataflow without calling getlatest function.

2. Import the entire table in powerBI desktop by connecting to dataflow.

3. Then called getlatest funtion in powerBI desktop in m code and it worked!

When I tried in powerBI desktop for two tables (dataflow has around 10 tables), my local machine hit CPU and memory limit.

In dataflows, I am getting this stack overflow error and that too only for huge records and not for small table.

It is most likely due to high memory consumption. I also confirmed the premium capacity in powerbi exceeded 80% utilization.

How to optimize the M code to get latest records from the huge table?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi V55,

These kind of issue may caused by the datasize. Is the data source Azure Data Lake Storage Gen2? Please refer to consideration and limitation here:

Regards,

Jimmy Tao

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @v-yuta-msft,

Thanks for the response.

I am assuming all the dataflow data gets stored ADLS Gen2.

My datasource is blob, but I am actually storing it in a different dataflow.

Then I created another dataflow to fetch data from above dataflow, transform the data and then call get latest function.

I just want to highlight that I did not get the error when datasize is less. When I have lakhs of records, I am getting this error.

The error returned by dataflow can be seen here :Dataflow Error. (WCUS)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does the data file you shared represent the full data set or just a partial data set? Is it the same as the case which fails in Dataflows?

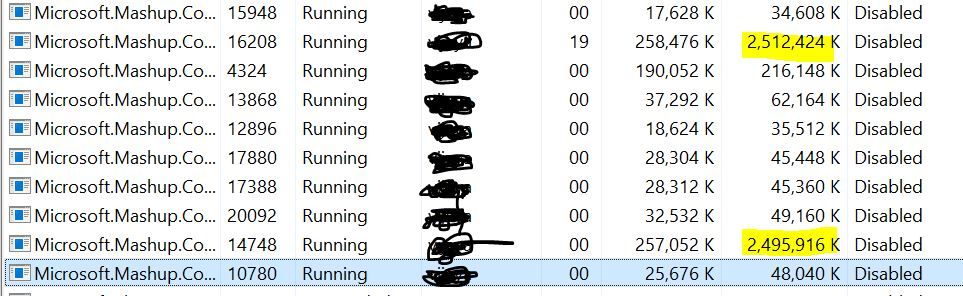

When you successfully load the data in Desktop, what's the maximum value reached for the commit size of the process Microsoft.Mashup.Container.NetFx45.exe? (You can see that in the Details tab of the Task Manager, but you'll probably need to right-click on the column header and select the "Commit size" column.)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tested with much larger records. This is what I noticed in my localmachine. The highlighted value is the commit size.

Since it is not executing in dataflow and giving stack overflow error, could it be related to dataflow capacity?

I am using powerBI premium shared capacity. But unfortunately, I dont know the dataflow capacity set.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Actually the issue seems to due to the heavy function (get latest function) on the table having lakhs of records.

In the above post, I posted the commit size when I loaded single table in powerbi desktop. Now this image shows the commit size when I tried to do the same operation for three tables in powerbi desktop. And my CPU and memory hit the peak.

I also confirmed the memory thrashing in premium exceeded 80%.

Wondering if there is a better way to optimize get latest function?

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.