- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Dataflow +Dataset = NEED more Clarity!

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dataflow +Dataset = NEED more Clarity!

Hi All,

I have successfully built an Enterprise Dataflow that loads all my tables in PBI service. Its incremental, scheduled and all is good! Now I use PowerBI Desktop to consume my Dataflow:

PS: I know I sound, irritated and angry, but this is after putting all hopes into this feature, waiting for a long time for it to be available!

SHOCKER #1: Why is PowerBI Desktop downloading EVERYTHING from Dataflow into my DATASET? Shouldn't it be a Thin-client like connection to the Dataflow? What was the purpose of having an on Cloud-ETL , if everything gets downloaded to my local desktop? I am talking above GBs of enterprise data here (which I could limit using top 100 using onpremise connection to build my Model)

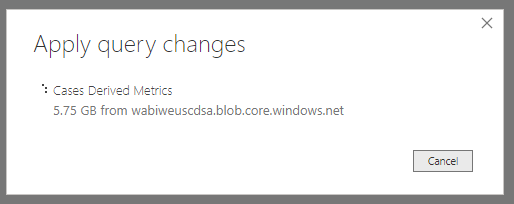

Here is one of my Dataflow getting "downloaded" to my PBI Desktop. It contain 53M rows.... and its been 10+ mins since the download is running!

EDIT: 84 GB data getting downloaded from my Dataflow on my Desktop! This makes NO SENSE!

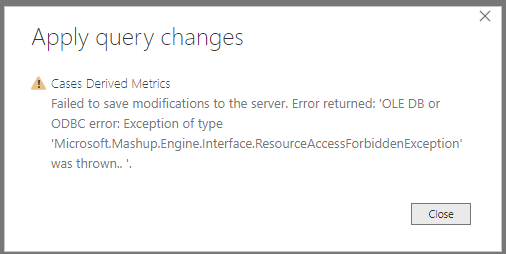

After about 30 mins, I get this error! What I am supposed to do now?

Anyways, I try again patiently wait for the Dataflow to complete its download on my desktop, then I build my Datamodel, join my tables, create Lovely reports and publish it to my Premium Workspacem then... boom..

SHOCKER #2: My new DATASET doesn't automatically refresh data from DATAFLOW either! I am told that I need to schedule the DATASET(which is supposed to be already linked to Dataflows) to be refreshed too! WHY? I again ask, whats then the purpose of preloading data on Dataflows? Why doesnt Dataset automcatially refreshes itself when Dataflow is refreshed?

Why are none of your articles talking about these two limitations? They all made it look like Datset will connect in a "Direct Query" or "Live connection" method to the Dataflows. Noboday says that you need to download the entire data and then refresh your datasets as well!

SHOCKER #3:

EDIT: MS has fixed this restriction on the recent PBI Services update! Thank you!

You need to be a Gateway Admin to access on Premises data sources? Why? We have close to 800 Power BI Pro developer. We cant make everyone an "Admin" to the Gateway. If a User has been granted access to a particular Gateway connection, he needs to be able to use it to build a Dataflow. This again defeats the purpose of Dataflow. Its very restricting! Now a Gateway admin needs to help developers build Dataflows becuase granting access to Enterprise Gateway (with 100s of connections) is too risky!

Other posts the confirm my fear

https://community.powerbi.com/t5/Service/Dataflow-vs-Dataset-refresh/m-p/572076#M57973

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

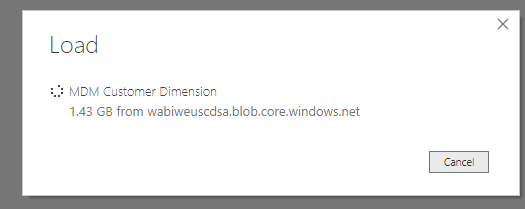

Here is another shining example of how the way Dataflow fetches data on Desktop forever and ever. Not an ideal way to start development of a report. Why cant it just give us a preview or sample of few 1000s?

MAKE ABSOLUTELY NO SENSE to download every data to the desktop!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would start in Power BI Service, create a derived Dataflow that filters to the data you need and then connect to that new Dataflow from Power BI Desktop.

You could also create a "Sample" Dataflow, with a few hundred records to enable report creation and then switch to the full Dataflow before publishing the report to service.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. But the problem is every-time you switch it starts downloading the data again right?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Correct, @Anonymous you'll eventually need to download all the data to Desktop to publish--but at least this eases report creation.

I'm pretty sure DirectQuery is coming to Enhanced Dataflows Compute Engine, so maybe there will be options to limit data during report creation too 🙂

EDIT: Actually, no--you don't have to download everything to Desktop if you don't remove the filter from the derived Dataflow until after publish. From @MatthewRoche 's blog

"Quick Tip: Developing with large dataflows

- Develop and test the dataflows in the Power BI service

- Add filters to the too-large dataflow entities’ queries[2] to reduce the number of records in the entities

- Refresh the dataflow

- Develop the model and reports in Power BI Desktop

- Publish the model and reports to the Power BI service

- Remove the filters from the filtered dataflow entities

- Refresh the dataflow

- Refresh the dataset"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@jeffshieldsdevThank you so much! This sounds promising! 🙂

Sad we have to beat around bushes to find such solution. Wish MS made it clear from day 1.

Also happy to hear DirectQuery is coming to Dataflows!

However, is the DirectQuery from the data loaded to a Flow?

Or DirectQuery from the source DB via Dataflow?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My understanding is the dataflows we create will have DirectQuery support in Power BI Desktop, via the enhanced compute engine. It was mentioned at MBAS in June, however I'm not seeing anything in the release notes now, so who knows 😞 https://docs.microsoft.com/en-us/power-platform-release-plan/2019wave2/index

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'd guess there might be an update announced at PASS Summit in November 5-8. You might also look at Power Platform Release Plan BI Features that is updated occasionally

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'd hope that in Add Data for the dataflow if you bring it in with the Edit option instead of Load, that you could filter by a date field and that would be used to limit the data?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the twitter link! This was a topic we dicussed in last week's PBI governance call of my company. Glad its being addressed! 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No response?? :'(

I have a Dataflow Entity that has about 54Million record.s

I am trying to create a report using this Dataflow and everytime, Power BI Desktop trys to download 10s of GB of data from the Dataflow. This does not make any sense!

If I multiple this with the other Entities that have similar volume, I will never be able to create a report on my Desktop!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AnonymousI saw your reply in our thread about dataflow timeouts.

I don't think you should need to load your entire dataflow. When I open PBI Desktop and click 'Get Data,' then pick 'Power BI Dataflows,' and go through the wizard to eventually select some specific dataflow that is deployed to the service, the next thing that happens is the Power Query Editor opens and creates a new query pointing to the selected dataflow as it's source.

You should be able to apply whatever filters and limiters you want at this point before loading the data to your model. You could curtail that 54 million rows by whatever degree you need.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@RBunting Thanks for the response!

My requriemnt is to show historical data/trends and I need to have all the data from this Dataflow. Of course, there will be filters for Year/Month/Week and Region to ensure not everything is loaded at once.

This is the reson I decided to go with a Dataflow to pre-load my data into Cloud.

Even if I do apply a filter, the data is still downloaded from Dataflow , its still going to be GBs worth of data everytime I need to fetch new data for my development. Do you think I am doing something wrong here?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ideally, if you have Power BI Premium, you could use Incremental Refresh, both in loading data to the dataflow and to incrementally load new data into the dataset. Otherwise, you have to load all of the the data all the time. Only way around without Premium would be to create a table with history data that is not refreshed, then another table with currrent data. In a DAX measure you could combine the values, such as: Sales = SalesHistory[Units] + SalesCurrent[Units}.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @parishdapbi

We do have Premium capacity and I am using Incremental Refresh on the Dataflow.

I liked the idea of having Incremental refresh on the Dataset, however I always thought Dataset will have a live connection to Dataflows. This is really disappointing.

And I hope MS does something to only enable a preview Data during development using Dataflow and not force use to download complete data onto desktop.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Direct Query connections to dataflow is 'under review' in the ideas forum. You should vote it up.

https://ideas.powerbi.com/forums/265200-power-bi-ideas/suggestions/35976663-dataflows-direct-query

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @RBunting for the link!

I read some of the comments on this idea. Glad to see I am not alone here 🙂

Hope MS takes this up soon

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AnonymousSo I've been following this post now for a couple weeks as I believe I'm in the same boat with our current PBI environment. For my understanding, are you, essentially, importing your dataflows into the PBI Desktop? If so, aren't you waiting a long time for things to load? I thought the whole point of Dataflows was to have a "connection" to the cloud where resource utilization would take place on the Azure Data Lake level (scratching my head here)?

I thought as a work around I would create a Dataflow dataset (wait a half day for everything to import 🙂 ). This way I have one central dataset the masses could leverage as well as me having one central ETL point.

Any additional clarification would be greatly appreciated!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Still no response!

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.