DataFlow and Dataset size

Hi

I started by creating a bunch of dataflows, then built datasets on top of them, and later paginated reports. Everything was working smoothly. Now that we have added more years of data, each dataset has grown to 400 MB+.

How can we find out the size of a dataflow? (Searching this forum suggests that it is not possible.)

How can we modify the code to control the dataset size during development? The datasets are becoming hard to debug and to extend with more tables. Are there any other suggestions for faster development?

TIA

---

400 MB seems small. What is your constraint?

To control the development dataset size, you can use a "fake" incremental refresh: use the RangeStart and RangeEnd parameters to limit how much data is loaded during development, and then, in the incremental refresh policy, specify that the storage range is the same as the refresh range.

Alternatively, use deployment pipelines to point each stage at a different (subset) data source.
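The RangeStart/RangeEnd approach relies on a filter where one bound is inclusive and the other exclusive, so a row sitting on a boundary never lands in two partitions. In Power Query that filter is a `Table.SelectRows` step on a date column; the Python sketch below only mimics the same half-open logic on in-memory rows to show how a narrow development window trims the data. The `order_date` column and the sample rows are hypothetical.

```python
from datetime import datetime


def filter_dev_window(rows, range_start, range_end, date_key="order_date"):
    """Keep rows whose date falls in [range_start, range_end).

    One bound inclusive, the other exclusive, mirroring the
    RangeStart/RangeEnd filter that incremental refresh expects.
    """
    return [r for r in rows if range_start <= r[date_key] < range_end]


# Hypothetical rows spanning several data years.
rows = [
    {"order_id": 1, "order_date": datetime(2019, 6, 1)},
    {"order_id": 2, "order_date": datetime(2021, 1, 1)},
    {"order_id": 3, "order_date": datetime(2021, 12, 31)},
    {"order_id": 4, "order_date": datetime(2022, 1, 1)},
]

# Development window: load calendar year 2021 only.
dev_rows = filter_dev_window(rows, datetime(2021, 1, 1), datetime(2022, 1, 1))
print([r["order_id"] for r in dev_rows])  # [2, 3]
```

Only the two bounds change between a small development load and a full load; the filter itself stays the same.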

---

My question has two parts:

a) I would like to know whether there is any way to find the size of a dataflow, and the size of each table/query inside it. I use deployment pipelines between environments, so I would like to compare the UAT and Prod environments by size and by code (.json file). FYI, UAT and Prod both point to the same data sources.
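Once per-table sizes are available for each environment (for example, totals taken from each environment's refresh-history export), a side-by-side comparison takes only a few lines. A minimal sketch; the table names and figures are hypothetical. Because both environments point to the same data sources, a noticeable difference for one table may be worth checking against that table's query definition.

```python
def compare_sizes(uat_kb, prod_kb):
    """Return {table: (uat_kb, prod_kb, prod_minus_uat)} for every table in either environment.

    A table missing from one environment gets None for that side and for the difference.
    """
    report = {}
    for table in sorted(set(uat_kb) | set(prod_kb)):
        u = uat_kb.get(table)
        p = prod_kb.get(table)
        diff = None if u is None or p is None else p - u
        report[table] = (u, p, diff)
    return report


# Hypothetical per-table totals (Bytes processed, KB) for each environment.
uat = {"Sales": 5120, "Region": 6}
prod = {"Sales": 5300, "Region": 6, "Budget": 900}

for table, (u, p, diff) in compare_sizes(uat, prod).items():
    print(f"{table}: UAT={u} Prod={p} diff={diff}")
```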

b) Let me clarify further: size is not an issue in the Power BI Service. I am aware that it can go up to 10 GB.

My issue is more about editing the dataset (.pbix file): it takes too much time, and working remotely over VPN makes it even slower. To make changes, I download the file, edit it, and then publish it again.

Thanks for replying.

---

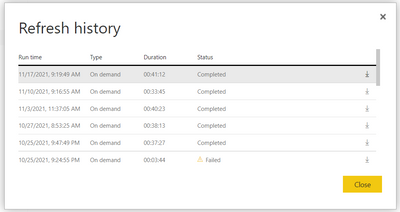

a) You can get that information from the dataflow refresh history. Click the small download arrow, then look inside the downloaded CSV file to see the partition sizes.
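A minimal sketch of reading that CSV and totalling Bytes processed (KB) per table. The `Bytes processed (KB)` column name is the one quoted later in this thread; the `Table name` column and the sample rows are assumptions, so adjust `table_col` to match your actual export.

```python
import csv
import io
from collections import defaultdict


def bytes_processed_by_table(csv_text, table_col="Table name"):
    """Total 'Bytes processed (KB)' per table from a refresh-history CSV export."""
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Strip thousands separators in case the export contains them.
        kb = row["Bytes processed (KB)"].replace(",", "").strip()
        if kb:  # skip partitions with no reported value
            totals[row[table_col]] += int(kb)
    return dict(totals)


# Hypothetical export: table names and numbers are made up for illustration.
sample = """Table name,Rows processed,Bytes processed (KB),Max commit (KB)
Sales,1000,2048,512
Sales,1500,3072,640
Region,180,6,128
"""

totals = bytes_processed_by_table(sample)
print(totals)  # {'Sales': 5120, 'Region': 6}
```

For a real export, read the downloaded file with `open(path, newline="", encoding="utf-8-sig")` and pass its contents to the function; summing the per-table totals gives a figure for the whole dataflow.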

b) My suggestion to use the RangeStart and RangeEnd parameters seems to fit your requirement. As you likely know, any structural change will trigger a full refresh anyway.

---

a) I looked at it before posting, but it does not give the storage size, unless Max commit is the storage size.

For example, here are the relevant columns:

| Rows processed | Bytes processed (KB) | Max commit (KB) |
| ---: | ---: | ---: |
| 4391838 | 1639203 | 156016 |
| 180 | 6 | 55860 |
| 4390648 | 1676142 | 122948 |
| 4379521 | 1676142 | 157216 |

b) Thanks for elaborating. I am aware of that; let me rethink this approach.

Meanwhile, I searched online and read somewhere that Microsoft is considering offering DirectQuery mode for Power BI dataflows consumed by datasets; until then, there are no other options.

Thanks

---

Remember that the values are shown in KB. Multiply Bytes processed by 1024 to get your partition sizes in bytes.
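Applying that conversion to the Bytes processed (KB) column from the table above, assuming, as the reply does, that 1 KB = 1024 bytes. The total is only meaningful if all four rows are partitions of the same dataflow, which the thread does not state.

```python
# Bytes processed (KB) values copied from the refresh-history table above.
bytes_processed_kb = [1639203, 6, 1676142, 1676142]

BYTES_PER_KB = 1024  # the conversion factor given in the reply


def kb_to_bytes(kb):
    """Convert a KB figure from the refresh history into bytes."""
    return kb * BYTES_PER_KB


partition_bytes = [kb_to_bytes(kb) for kb in bytes_processed_kb]
total_bytes = sum(partition_bytes)

print(partition_bytes[0])  # 1678543872
print(f"{total_bytes / BYTES_PER_KB**3:.2f} GiB")  # 4.76 GiB
```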

DirectQuery against dataflows is a travesty. All it does is put an Azure SQL database between your blob storage and your dataset.

---

a) So you are saying Max commit (KB) is the storage of partition size. Adding up the values from all rows will give the whole dataflow size.

I know this is not 100% accurate, but if it is true, it helps for me to do storage sizes for all dataflows as we have many dataflows.

An idea to show dataflow sizes has already been submitted: https://ideas.powerbi.com/ideas/idea/?ideaid=5b955cf8-38ac-4864-ac3a-4993cad1b2d6

Thank you

b) I reached out to my team; for now they want all data in all environments, as the project is young. 😞 I will look for other options.

Thank you

---

"So you are saying Max commit (KB) is the storage of partition size."

No. Bytes Processed is the uncompressed dataflow partition size.

" it helps for me to do storage allocation for all reports as we have many reports."

This has nothing to do with reports.

---

I am confused, as I started the thread to get storage sizes.

Sorry, I meant dataflows, and I have updated my previous reply:

" it helps for me to do storage sizes for all dataflows as we have many dataflows."

---

My opinion is that the storage size of the dataflow partition is represented by the "Bytes processed" column, not by the "Max commit" column.

---

I doubt it, sir, because we can process a lot of bytes while only the needed data is stored, in compressed format.

This article goes into detail: https://docs.microsoft.com/en-us/power-bi/transform-model/dataflows/dataflows-understand-optimize-re... ("... To cache the entity, Power BI writes it to storage and to SQL.")

It is not easy to determine.

For now, I will estimate sizes as "All partitions * Bytes Processed * 90%", since we don't have a straightforward approach yet.
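A sketch of that rule of thumb across several dataflows, reading the formula as the sum of Bytes processed over all partitions multiplied by 0.9. The 90% factor is the poster's own estimate rather than a documented ratio, and the dataflow names and values below are made up.

```python
def estimate_storage_kb(partitions_kb, factor=0.9):
    """Estimate one dataflow's storage as (sum of Bytes processed) * factor.

    partitions_kb: 'Bytes processed (KB)' for every partition in the dataflow.
    factor: the 90% rule of thumb from this thread, not a documented ratio.
    """
    return sum(partitions_kb) * factor


# Hypothetical dataflows with made-up per-partition Bytes processed (KB).
dataflows = {
    "DataflowA": [2048, 3072, 6],
    "DataflowB": [10240],
}

estimates = {name: estimate_storage_kb(kbs) for name, kbs in dataflows.items()}
for name, kb in estimates.items():
    print(f"{name}: ~{kb / 1024:.1f} MB")
```

Keeping the factor as a parameter makes it easy to revise the estimate later if a better ratio turns up.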

Appreciate your reply.
