Data Flows Schedule refresh not working consistently - Urgent help needed

Hello Community,

I want to raise this as a fresh discussion and seek help with managing dataflow scheduled refresh. I have created one dataflow that I know holds only 30 records. However, it takes more than 30 minutes to refresh. Am I doing something wrong in my process?

Somewhere I read that on Premium capacity it is suggested to run datasets/dataflows at different times. But I checked the schedules, and no more than 5 datasets are running currently. As things stand, I am not getting good use out of dataflows and am unable to convey the pros of using dataflows to clients.

I am on Premium capacity and am sure the environment doesn't have any memory issues either. Could someone tell me how to resolve this situation?

Thanks,

G VEnkatesh

Hi @Anonymous

You may try restarting the capacity to see if it gets better.

https://docs.microsoft.com/en-us/power-bi/service-admin-premium-restart

Regards,

Lin

If this post helps, please consider accepting it as the solution to help other members find it more quickly.

Hi Lin,

Sure. Thanks for the suggestion. We will certainly restart the Premium capacity as a measure to free up memory.

Thanks,

G venkatesh

Can you have a look at the Premium Capacity Metrics app and see what is happening at the time of refresh?

Also review this whitepaper to help you troubleshoot performance issues.

https://docs.microsoft.com/en-us/power-bi/service-premium-capacity-scenarios

Hi Gilbert,

Thanks a lot for sharing that whitepaper. This information is very useful. I have reached out to the Power BI admin in my org. I have gone through some of the statistics (CPU utilization and memory usage) that we need to look at. I see that only 10 GB of memory is allocated for dataflows. While my admin is yet to get back to me with the usage details, I would like to know if there is a way to increase this limit so that the dataflows run faster.

At this time, I am looking for just one thing: I want my dataflows to RUN FAST so that the data in my reports is not stale. We are not concerned about buying new hardware or cleaning up/flushing out memory for the dataflows to run fast.

Please assist!

Thanks,

G Venkatesh

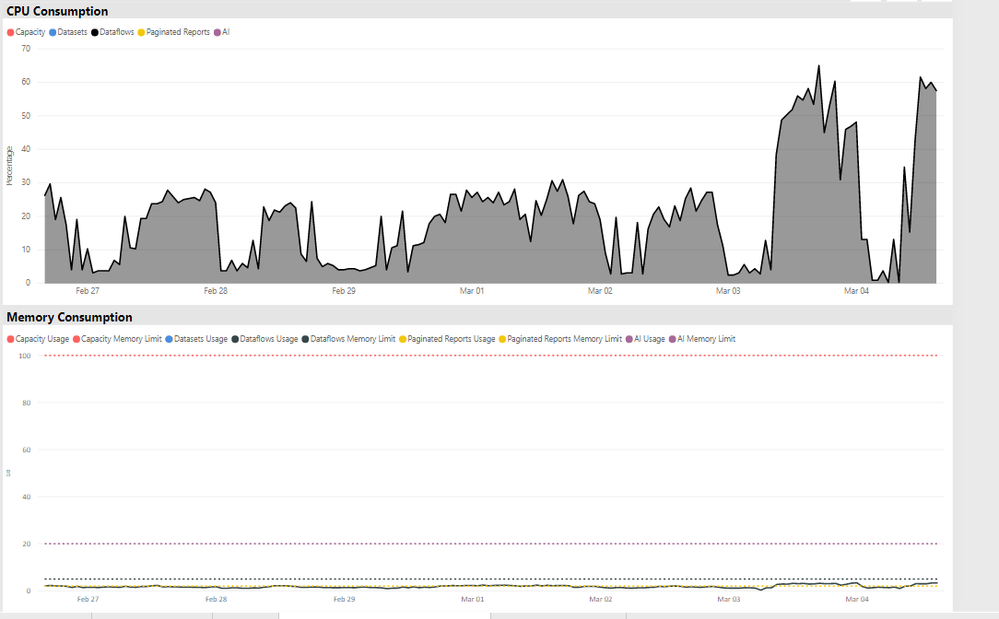

Hi Gilbert/Community,

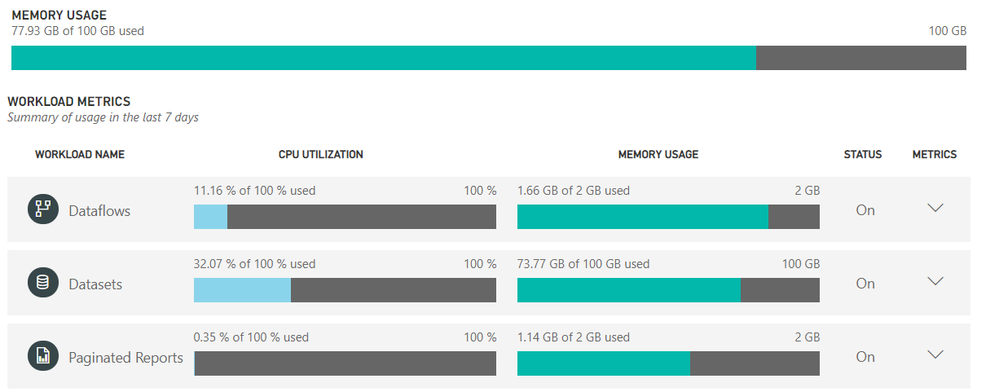

I am also attaching the health statistics (metrics) we currently have for our Premium capacity. There are metrics for datasets and paginated reports as well, but I am looking at dataflows only.

Based on this, what do you suggest to get the dataflows to refresh quickly? Also, to avoid seeing stale data in reports, how frequently can I refresh each dataflow?

Thanks,

G Venkatesh
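
The question about refresh frequency and stale data can be made concrete with a little arithmetic. The sketch below is only an illustration, not anything provided by Power BI: it assumes each refresh reads the source when it starts and publishes when it ends, and that runs start on a fixed interval. The function and field names are made up for this example, and the hourly interval in the usage lines is an assumption; the 30-minute duration is the one reported in the original post.

```python
from dataclasses import dataclass


@dataclass
class Staleness:
    worst_case_age_min: float  # oldest the visible data can get, in minutes
    schedule_feasible: bool    # False if a run cannot finish before the next one starts


def worst_case_staleness(interval_min: float, duration_min: float) -> Staleness:
    """Estimate data age under the stated assumptions (hypothetical helper)."""
    if interval_min <= 0 or duration_min < 0:
        raise ValueError("interval must be positive and duration non-negative")
    # Data read at t0 is visible from t0 + duration until the next run,
    # started at t0 + interval, publishes at t0 + interval + duration.
    return Staleness(
        worst_case_age_min=interval_min + duration_min,
        schedule_feasible=duration_min < interval_min,
    )


if __name__ == "__main__":
    # Assumed hourly schedule with the 30-minute refresh from the original post.
    print(worst_case_staleness(interval_min=60, duration_min=30))
```

Under these assumptions an hourly schedule with a 30-minute refresh can show data up to 90 minutes old, so shortening the refresh itself matters as much as scheduling it more often.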

Based on your image above it appears that only 2GB of memory has been allocated to dataflows. This could certainly be why it is either taking very long to run or not refreshing.

Ask the Admin if it can be increased to 10GB and go from there. Dataflows do require memory for the compute.

Also ask the admin whether the Enhanced Compute Engine under dataflows has been enabled, which should allow the data to get into your PBIX files faster.

Hi Gilbert,

Thanks for your suggestions. I have discussed this with the admin team and they are planning to increase the dataflows memory to 10 GB.

Could you please show us how to enable the Enhanced Compute Engine under dataflows? We tried looking for this option, but didn't find it in the Admin portal.

Kindly assist!

Thanks,

G venkatesh

Hi @Anonymous

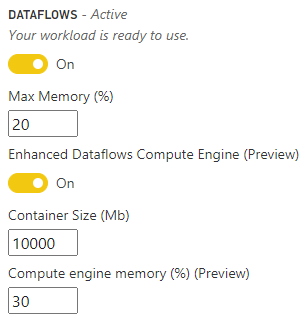

You can find it under Capacity Settings and then under Workloads.

Thanks, mate (champ). This helps me a lot. I will take these suggestions forward with the admin.

G Venkatesh

Hey Gilbert,

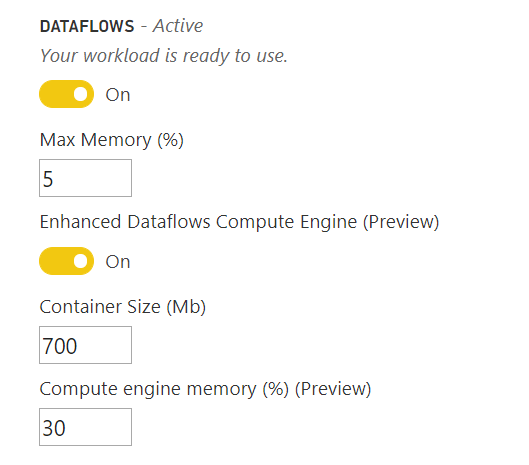

Hope you are doing well. After all the discussions we had last week, I asked my admin to increase the dataflows memory and enable the Enhanced Compute Engine.

They tried their best, increased the size to 5 GB, and also enabled the Enhanced Compute Engine option.

However, I still see that my dataflows take hours to complete a refresh. I created a small report in Power BI Desktop with SQL as one data source and the dataflow as another, to compare the data.

The dataflow records are not being updated properly.

Please suggest what we should do in this case.

I am thinking the potential bottleneck is your gateway.

Can you confirm that it is as fast as it can be?

In your comparison the "WO*" values are different.

Hey Gilbert & R1k91,

Looks like I have some good news after increasing the memory limit to 10 GB for dataflows and the container size to 1000 MB.

- The dataflows are running faster than before (taking 5 mins today vs. 1 hour yesterday). However, I am running them outside business hours, when more resources might be available. I would like to trigger them again in a couple of hours and see how they perform.

Meanwhile, I am thinking about the enterprise gateway and the doubts you raised about it.

Where do I need to check whether my gateway is working fine? Also, when can we say that the gateway is a potential bottleneck? I would like to know the use cases so I can answer your questions.

Please assist.
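
One way to check whether the speed-up holds during the working day is to split refresh runs by start time and compare average durations. This is a minimal sketch, assuming the start and end times of each run have been copied by hand from the dataflow's refresh history as ISO timestamps in local time. The function name, the 9-to-17 business window, and the sample timestamps are all hypothetical; only the 5-minute and 1-hour durations come from the post above. Weekends are not treated specially.

```python
from datetime import datetime
from statistics import mean


def mean_duration_by_period(runs, business_start=9, business_end=17):
    """Average refresh duration in minutes, split by when each run started.

    runs: iterable of (start_iso, end_iso) strings in local time.
    """
    buckets = {"business": [], "off_hours": []}
    for start_iso, end_iso in runs:
        start = datetime.fromisoformat(start_iso)
        end = datetime.fromisoformat(end_iso)
        minutes = (end - start).total_seconds() / 60
        in_hours = business_start <= start.hour < business_end
        buckets["business" if in_hours else "off_hours"].append(minutes)
    # None marks a period with no recorded runs.
    return {name: (mean(vals) if vals else None) for name, vals in buckets.items()}


if __name__ == "__main__":
    # Placeholder timestamps; replace with values from the real refresh history.
    sample_runs = [
        ("2024-01-01T02:00:00", "2024-01-01T02:05:00"),
        ("2024-01-01T10:00:00", "2024-01-01T11:00:00"),
    ]
    print(mean_duration_by_period(sample_runs))
```

A large gap between the two averages would point toward contention on the capacity or gateway during business hours rather than a problem with the dataflow itself.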

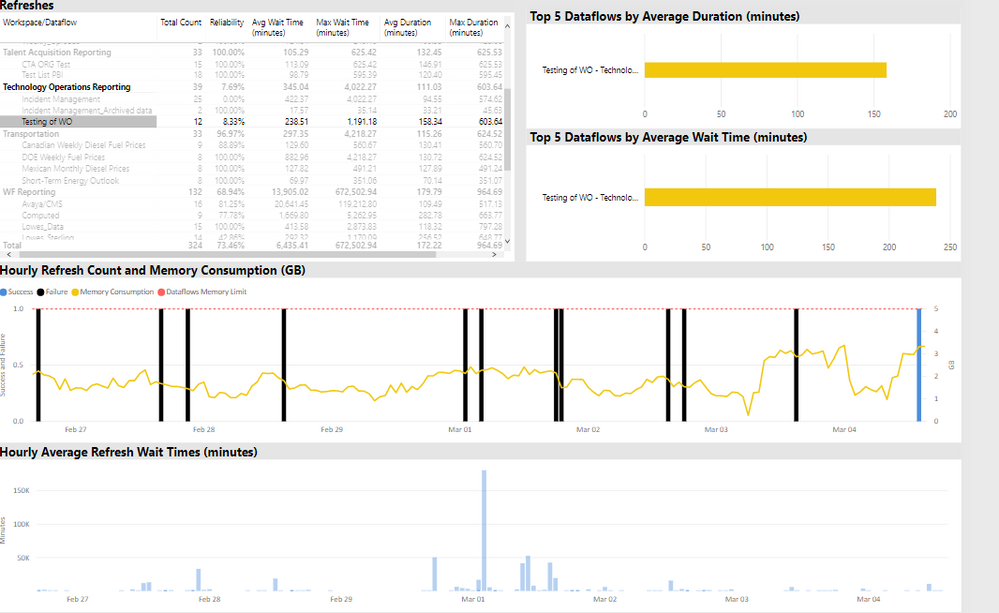

Hey Gilbert,

I have also attached a few more screenshots of the metrics we have in our capacity as of now. Please see if everything looks good.

I'm not sure Enhanced Compute Engine could solve your issue in this way.

If you read the FAQ section of the documentation you'll find that in some cases the refresh will be slower, because more memory is needed during load with ECE enabled.

https://docs.microsoft.com/it-it/power-bi/service-dataflows-enhanced-compute-engine

You also have to consider that when loading data with ECE enabled, behind the scenes it loads the data into both Azure Data Lake Storage and a hidden Azure SQL Database instance, so that future transformations can be pushed down and run quicker using query folding.

Hi R1K91,

Thanks for replying. I actually followed a suggestion from one of the members in the community. But looking at your post now, I think dataflows are not helping me fetch data quicker, as the refreshes aren't working as expected. Before the refresh completes, the data in my report has already gotten old.

Are my statements above true with regard to dataflows, or am I doing something wrong with those capacity settings?

Please suggest!

Thanks

G VEnkatesh

Well, it isn't an easy issue. You said your dataflow should fetch just 30 rows, but I have some questions:

- What is the source? On-prem SQL Server? A cloud source?

- Are you using a gateway?

- How many transformations are you doing on the data?

Have you tried moving the same workload to a PBIX to see how long the transformation takes?

Hi R1k91,

Thanks for replying. I have answered your questions below. Please take a look and provide your support.

- What is the source? On-prem SQL Server? A cloud source?

Answer: The source is Power Query (M query), and the data in fact comes from SQL Server.

- Are you using a gateway?

Answer: I am using an enterprise gateway.

- How many transformations are you doing on the data?

Answer: I do not have any transformations. It's plain data coming from a single table.

Have you tried moving the same workload to a PBIX to see how long the transformation takes?

Answer: Yes, I tried. It took just 30 seconds to bring in the data.

So... no transformations at all and one single table with 30 records.

Have you already tried publishing the PBIX with the same workload and configuring it to refresh the dataset through the same gateway the dataflows are running on?

This could help you understand whether the gateway is the bottleneck.
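
The comparison suggested above can be written down as a rough rule of thumb. The sketch below is an illustration only: the function name, the returned labels, and the `slow_factor` threshold of 10 are assumptions made for this example, not an official diagnostic. It takes three timings for the same query: a refresh in Power BI Desktop, a published dataset refreshed through the gateway, and the dataflow refreshed through that same gateway.

```python
def classify_bottleneck(desktop_s: float,
                        dataset_via_gateway_s: float,
                        dataflow_s: float,
                        slow_factor: float = 10.0) -> str:
    """Guess where the time goes by comparing three refresh timings in seconds."""
    if min(desktop_s, dataset_via_gateway_s, dataflow_s) <= 0:
        raise ValueError("all timings must be positive")
    # A dataset that is already much slower through the gateway than in
    # Desktop points at the gateway or the network path to the source.
    if dataset_via_gateway_s > slow_factor * desktop_s:
        return "gateway or source path"
    # Same gateway, same query, but only the dataflow is slow: look at the
    # dataflow workload settings or the capacity instead.
    if dataflow_s > slow_factor * dataset_via_gateway_s:
        return "dataflow workload or capacity"
    return "no clear bottleneck"


if __name__ == "__main__":
    # 30 s (Desktop) and 30 min (dataflow) are from this thread;
    # the 45 s dataset timing is a made-up placeholder.
    print(classify_bottleneck(30, 45, 30 * 60))
```

If the published dataset refreshes quickly through the gateway while the dataflow does not, the gateway is an unlikely culprit and attention shifts back to the dataflow workload and capacity settings.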

Yes, buddy. I tried that and didn't find any issues. We have almost 150 reports that run on this gateway every day, and their datasets are even scheduled to refresh every hour. We haven't come across any major issues.
