
Creating CSV files in SharePoint from Power BI Dataflows

We have a Power BI dataflow with 64 tables (Power Query queries) that serves as the single source of truth for much of our reporting. The tables start as SQL queries against an on-premises database, but multiple transformations have since been added (including merges, aggregations and append queries). Several teams use the dataflow in their Power BI datasets and reports, but there has also been a need to provide CSV extracts to teams outside Power BI who use other reporting tools. We struggled with this problem for a while but have now found a solution using Power Automate.

We found that Power BI stores each dataflow table in its internal storage as CSV files. We discovered this by running a Fiddler trace while Power BI Desktop connected to a dataflow table. We considered switching the dataflow's storage to ADLS Gen2, but our security team turned this down because it would mean exposing an ADLS Gen2 storage account to "All networks" and granting the dataflow owner the "Owner" role.

We therefore decided to mimic the calls Power BI Desktop makes to the dataflow, using Power Automate, and to write the output to SharePoint. Here are the details:

1. We used the "HTTP with Azure AD" connector, configured as shown below, and signed in with an account that has access to the Power BI dataflow.

2. The resource path for the "HTTP with Azure AD" connector is shown below, with our dataflow ID masked out.

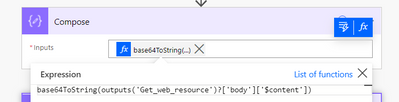

3. This call returns an "application/octet-stream" response, which had to be decoded as follows:

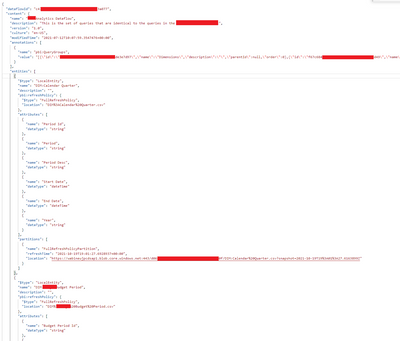
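The exact expression is only visible in the screenshot. As a hedged sketch: Power Automate typically represents a binary response body as an object with "$content-type" and "$content" (Base64) properties, and the built-in base64ToString() expression decodes the "$content" value. The Python below mirrors that decoding outside Power Automate; the envelope shape and the UTF-8 encoding of the decoded text are assumptions, not something confirmed in this thread, and decode_octet_stream is a hypothetical name.

```python
import base64
import json


def decode_octet_stream(envelope: dict) -> str:
    """Decode a Power Automate-style binary body.

    Assumed shape: {"$content-type": "application/octet-stream",
                    "$content": "<base64 text>"}
    """
    content_type = envelope.get("$content-type", "")
    if content_type.lower() != "application/octet-stream":
        raise ValueError(f"unexpected content type: {content_type!r}")
    raw = base64.b64decode(envelope["$content"])
    # Assumed UTF-8; "utf-8-sig" also strips a leading byte-order mark if present
    return raw.decode("utf-8-sig")


# Usage: the decoded text is the JSON document described in step 4
sample = {
    "$content-type": "application/octet-stream",
    "$content": base64.b64encode(b'{"entities": []}').decode("ascii"),
}
model = json.loads(decode_octet_stream(sample))
print(model)
```

Checking the content type first makes a failed call (for example an HTML error page) fail loudly instead of producing garbage JSON.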

4. The decoded string is JSON, as shown below. The value we are interested in is the location of the latest snapshot, which is found in the location field of each partition in the Partitions array under each entity.

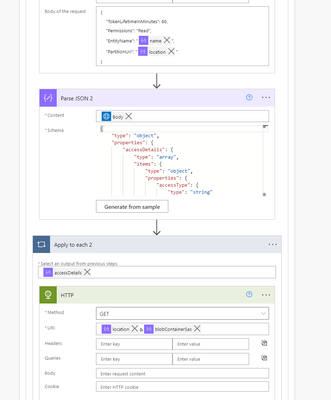

5. To extract the location value, we used the following actions:

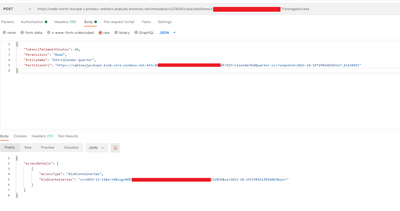
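The same lookup can be sketched in Python. This assumes the decoded JSON follows the CDM model.json layout (an entities array whose items carry a partitions array with location and refreshTime), and it treats the partition with the newest refreshTime as the "latest snapshot"; both are assumptions, and latest_partition_locations is a hypothetical name.

```python
import json
from datetime import datetime


def _refresh_time(partition: dict) -> datetime:
    # Assumes ISO 8601 timestamps; a trailing "Z" is rewritten for older Pythons
    return datetime.fromisoformat(partition["refreshTime"].replace("Z", "+00:00"))


def latest_partition_locations(model_json: str) -> dict:
    """Map each entity name to the location of its most recently refreshed partition."""
    model = json.loads(model_json)
    locations = {}
    for entity in model.get("entities", []):
        partitions = entity.get("partitions", [])
        if not partitions:
            continue  # nothing to download for this entity
        latest = max(partitions, key=_refresh_time)
        locations[entity["name"]] = latest["location"]
    return locations
```

Returning a name-to-location map gives one download per table, which matches looping over the 64 tables in the flow.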

6. The last step in the screenshot above is a call to the https://wabi-north-europe-j-primary-redirect.analysis.windows.net/metadata/v201606/cdsa/dataflows/{D... URL with the following payload:

This call retrieves the SAS key that must be combined with the location URL to download the CSV files from the Power BI service's internal storage.

7. The steps below extract the SAS key from the JSON output and then make a plain HTTP call to retrieve the CSV file:

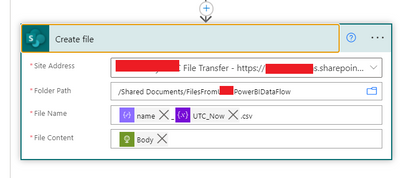
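The field in the step 6 response that holds the SAS key isn't visible in the post, so this hypothetical helper simply takes the token as a string and appends it to the partition location as a query string. It assumes the token is an already URL-encoded query string (with or without a leading "?"), which is the usual form of an Azure Storage SAS token; the example URLs are made up.

```python
from urllib.parse import urlsplit, urlunsplit


def with_sas_token(location: str, sas_token: str) -> str:
    """Append a SAS query string to a storage URL.

    The token is assumed to be already URL-encoded, e.g. "sv=...&sig=...".
    """
    token = sas_token.lstrip("?")
    parts = urlsplit(location)
    # Keep any query the location already carries and add the token after it
    query = f"{parts.query}&{token}" if parts.query else token
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))


print(with_sas_token("https://acct.blob.core.windows.net/c/Sales.csv", "?sv=2020-08-04&sig=abc%2B"))
```

The token is appended verbatim rather than parsed and re-encoded, so the signature's existing percent-encoding is left untouched. A plain GET on the resulting URL is what the HTTP action in this step performs.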

8. The final step writes the output of the HTTP request to SharePoint.
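If the downloaded snapshot has no header row (in the CDM model.json format a partition's fileFormatSettings can declare "columnHeaders": false, and the column names live in the entity's attributes array), consumers outside Power BI may need one. A sketch under that assumption, using the hypothetical helper add_header_row, that prepends the column names before the file is written to SharePoint:

```python
import csv
import io
import json


def add_header_row(model_json: str, entity_name: str, csv_text: str) -> str:
    """Prepend the entity's column names (from model.json) to a headerless CSV.

    Assumes the CDM model.json layout: entities[].attributes[].name.
    """
    model = json.loads(model_json)
    entity = next(
        (e for e in model.get("entities", []) if e.get("name") == entity_name),
        None,
    )
    if entity is None:
        raise ValueError(f"entity {entity_name!r} not found in model.json")
    columns = [attr["name"] for attr in entity.get("attributes", [])]

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(columns)
    # Re-emit each data row; values are kept, but quoting becomes "minimal"
    for row in csv.reader(io.StringIO(csv_text)):
        writer.writerow(row)
    return out.getvalue()
```

Parsing and re-writing the rows (rather than concatenating strings) keeps quoted values that contain commas or line breaks intact.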


Hi @prathyoo,

Please help me retrieve the values for "Base Resource URL" (step 1) and "Url of the request" (step 5). I am not sure how to get them.


The Base Resource URL is standard; you can use the one shown in the screenshot.


Hi @prathyoo,

Thank you for responding!

I am new to this. As I understand it, the base URL you mentioned is for the service in Europe. I am in the US, so if I am not mistaken, my URL will be slightly different.

Also, where can I find the "resource path URL" (step 2) and the "Url of the request" (step 5)?

It would be very helpful to know where to look for these URLs. 🙂


Hi,

I got the URL information by accessing the dataflow from Power BI Desktop while using Fiddler to capture the traffic between Power BI Desktop and the service.


This was the single most useful solution presented in this forum. Thanks a ton for sharing with us.


Hi @prathyoo,

Thank you for sharing. For much the same reasons you mention, I'm trying to import some data as well.

However, when trying to retrieve the SAS key, I'm receiving the following error:


@prathyoo: Thank you for all the details. We have a similar requirement: extracting Power BI dataflow tables to CSV. I have two questions:

1. Can we automate this to run and generate the CSV files? If so, how?

2. When we generate a CSV file, is there any way to avoid overwriting the previous data and keep a history in the CSV files?

Thank you in advance.

Regards,

Jyoti


Hi @Anonymous,

1. Yes. Our Power Automate flow is triggered as soon as the dataflow finishes refreshing, and the dataflow itself is scheduled to refresh daily. That is how we automated our solution.

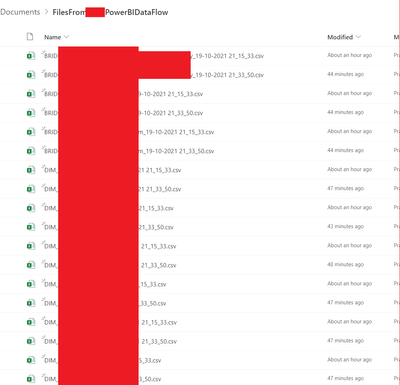

2. In our case, the generated CSV file names include a timestamp, which keeps them unique and prevents overwriting. You could also use the timestamp to create folders in SharePoint, which would also meet your need.
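A minimal sketch of that naming scheme in Python; the helper name timestamped_csv_path and the yyyyMMdd_HHmmss pattern are our own illustrative choices, not the poster's exact format. Inside the flow itself, an expression such as formatDateTime(utcNow(), 'yyyyMMdd_HHmmss') produces the same kind of stamp.

```python
from datetime import datetime, timezone
from typing import Optional


def timestamped_csv_path(table: str, when: Optional[datetime] = None,
                         per_day_folder: bool = False) -> str:
    """Build a non-overwriting file name such as Sales_20240416_093005.csv."""
    when = when or datetime.now(timezone.utc)
    file_name = f"{table}_{when:%Y%m%d_%H%M%S}.csv"
    if per_day_folder:
        # Variant mentioned in the reply: one SharePoint folder per day
        return f"{when:%Y-%m-%d}/{file_name}"
    return file_name


print(timestamped_csv_path("Sales", datetime(2024, 4, 16, 9, 30, 5, tzinfo=timezone.utc)))
```

The stamp has one-second resolution, which is ample for a daily refresh; if a flow could run twice within the same second, a finer stamp or a run ID would be needed for uniqueness.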

Regards,

Prathyoo


Huge kudos for going through all these investigations (and for answering the frequent question "Can dataflows be accessed directly?").

Wouldn't it have been easier to write an SSIS package that includes the transforms? It would also be closer to real time than the dataflow.
