- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Alternative to gateway, or getting data on a e...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Alternative to gateway, or getting data on a external (non oneDrive) file

Hello,

we have data stored in a network disk and normally these data will be available soon after in the cloud. Since the update intervall of the cloud is only at maximum one time per hour because of the cost we have also the possiblities to have one functional account company computer running close to 24/7. Because of security reasons gateway are restricted and won't work.

OneDrive was also an alternative. All the data within the onedrive, sharepoint (office ecosystem) can be used as datasource for powerbi and powerapps as well.

Unfortunately those functional computers have no personal onedrive, since they only have basic company usage in mind and no full office365 license.

The other alternative is that one personal account computer is doing the job with a vba- script to copy data from network disk to the personal onedrive account. But those computers are not made to run 24/7, especially not when you paying the bill yourself.

Are there any alternative to our dilemma?

Thank you very much in advance.

Best.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Best of luck moving it forward. If it comes to it I'm fairly certain Power BI report server is included in your premium capacity if I'm reading this correctly:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Wondering if this is any use to you. (Have no experience using such a setup):

https://docs.microsoft.com/en-us/data-integration/vnet/overview

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Assuming you're storing the data as flat csv files on your local drive?

Have a look at creating an azure data lake gen 2 storage account: https://docs.microsoft.com/en-us/azure/databricks/data/data-sources/azure/adls-gen2/azure-datalake-g...

You can run AzCopy on a schedule using task scheduler to copy your files up to it every hour.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@bcdobbs thanks for the response. He already have this kind of implementation. The problem is we want update into powerbi every 15 minutes soon. And thats what is apparently not welcomed to use with the other system.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok I'd missed that requirement. What sort of data volumes are you looking at?

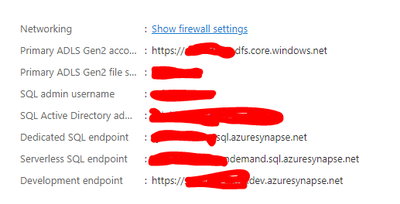

If you can get it into an azure datalake every 15 minutes you can then run power bi in direct query mode against a Synapse Analytics Serverless SQL end point. (It reads direct against the datalake files).

Failing that is there a specific security concern regarding Power BI gateway. You can make sure if only has credentials that can read a specific folder and nothing else if you set it up.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The data itself is really not big. Maybe a few Megabyte max, because its very actual data without many rows of historic data. The problem with azure datalake is that it needs to be fetch the data first. Direct Query wouldn't help for the "life data" since the bottleneck will always be azure datalake. I think most datalake sources are fetching data hourly max.

Thats the reason why gateway would have been the most straight forward approach which is closer to "live data".

I did setup a sql server on my computer and switch to direct query. I used bulk insert csv files into the sql server. Works great!

Unfortunately this method only works with powerbi desktop, if I upload it on PowerBi Service I again....need the gateway to work.

Its unfortunate that there is no Desktop Report Visual Mode, without uploading to service. That way I would only need a 24/7 computer with a display to show a report.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Really think Servless SQL Pools Azure Synapse Analytics removes the bottle neck. Data literally sits as is in data lake but you query it through a SQL endpoint.

To get you started:

Create an instance and link it to your data lake. Doesn't cost anything at rest.

It creates a serverless SQL endppoint automatically which you can connect to from Power BI in both import and DirectQuery mode.

Once created

1) Open Synapse Studio.

2) Click Data

3) Click Linked

4) Navigate your linked Azure Data Lake Storage Gen2

5) Right click folder or specifc file and use the Select Top 100 to have it auto generate the query. It'll give something like:

SELECT

TOP 100 *

FROM

OPENROWSET(

BULK 'https://LAKENAME.dfs.core.windows.net/lakehouse/base/DIRECTORYNAME/**',

FORMAT = 'PARQUET'

) AS [result]

You could use that direct in Power BI or better create a symmantic view over it:

CREATE OR ALTER VIEW SchemaName.ViewName

AS

SELECT *

FROM

OPENROWSET (

BULK 'https://LAKENAME.dfs.core.windows.net/lakehouse/base/DIRECTORYNAME/**',

FORMAT = 'PARQUET' )

WITH (

[ID] [int],

[Field1] [nvarchar](200),

[Field2] [datetime2](7),

) AS ROWS

You can tidy it up by defining a datasource in advance etc.

When you connect to it in Power BI it looks just like a normal SQL table. You'd never realise you're querying the lake direct.

Things to note:

Serverless SQL is charged at $5 per TB processed which sound fine from your data volumes.

Use FILEPATH in the view to allow dynamic partition pruning when power bi queries. (Means a query from power bi doesn't have to read everything so costs you less and is faster.) See "Partitioned views" in:

Create and use views in serverless SQL pool - Azure Synapse Analytics | Microsoft Docs

Mastering DP-500 Exam: Querying Partitioned Sources in Azure Storage – Serverless SQL

Also worth reading:

Best practices for serverless SQL pool - Azure Synapse Analytics | Microsoft Docs

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, premium capacity of the workspace and pro user account. Eager to know your idea!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've never used it but within your premium capacity admin portal there should be a key for "Power BI Report Server":

What is Microsoft Power BI Premium? - Power BI | Microsoft Docs

Let's you host your power BI reports on premise.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Unfortunately I cannot even open the admin center. I guess every company has different security guidelines and we aren't all set as admins roles. I think having premium doesn't mean you automaticallly have report server, you just have the option to to buy that package on top. If my last alternative isn't going to work out I will ask my admin for that option. I think at the it comes down always to two things: cost and/or security reasons.

@bcdobbs thanks so much for your input!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Best of luck moving it forward. If it comes to it I'm fairly certain Power BI report server is included in your premium capacity if I'm reading this correctly:

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.