- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- WORKAROUND: How to use scheduled refresh of a web ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

WORKAROUND: How to use scheduled refresh of a web page without the need for a gateway.

Hi all,

Like many others (here, here and here), I have been struggling with the inability of Power BI service to perform a scheduled refresh on a public web source without using a personal gateway.

According to the MS documentation, this is still not possible. (Don't ask me why)

The problem with this is that the M function Web.Page(), which is needed to work with HTML input, requires a gateway to work.

However, I have been playing around a bit lately, and believe that I have found a workaround that is relatively flexible. I'd like to share it here and get some feedback. Maybe some of you have some suggestions on how to improve the code to make it more broadly applicable.

In this example, I am trying to load the table from the following page into Power Query: https://www.w3schools.com/html/html_tables.asp.

The code

let

URL = "https://www.w3schools.com/html/html_tables.asp",

Start = "<table class=""ws-table-all"" id=""customers"">",

End = "</table>",

Source = Table.FromColumns({Lines.FromBinary(Web.Contents(URL))}),

ToList = Table.Column(Source, "Column1"),

RowCount = Table.RowCount(Source),

TableStart = List.PositionOf(ToList, Start),

TableEnd = List.PositionOf(ToList, End),

RemoveTopRows = Table.Skip(Source,TableStart),

RemoveBottomRows = Table.RemoveLastN(RemoveTopRows,RowCount-TableEnd-1),

List = Table.Column(RemoveBottomRows, "Column1"),

ChangeStart = List.ReplaceValue(List, Start, "<data>", Replacer.ReplaceText),

ChangeEnd = List.ReplaceValue(ChangeStart, End, "</data>", Replacer.ReplaceText),

XML = Xml.Tables(Lines.ToText(ChangeEnd)),

Table = XML{0}[Table],

#"Added Custom1" = Table.AddColumn(Table, "data", each if [th] <> null then [th] else [td]),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom1",{"th", "td"}),

#"Added Custom" = Table.AddColumn(#"Removed Columns", "Custom", each Table.Transpose([data])),

#"Removed Columns1" = Table.RemoveColumns(#"Added Custom",{"data"}),

#"Expanded Custom" = Table.ExpandTableColumn(#"Removed Columns1", "Custom", {"Column1", "Column2", "Column3"}, {"Column1", "Column2", "Column3"}),

#"Promoted Headers" = Table.PromoteHeaders(#"Expanded Custom", [PromoteAllScalars=true]),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"Company", type text}, {"Contact", type text}, {"Country", type text}})

in

#"Changed Type"

How it works

The key here is to circumvent the use of the Web.Page() function, so here are the steps:

- Specify the URL to crawl.

- The second step is to look at the HTML source code of the webpage you want to scrape, and find the lines in the code that contain the start tag and the end tag of the table you want to import. (the lines containing <table> and </table>). NB: It's important to put the entire line here, so if there are more tags than just the table tags, include them as well.

- Then the data from the page is loaded. The combination of Lines.FromBinary() and Web.Contents() gives you a list of the raw HTML code from the web page.

- Convert the list into a table to locate the position of the table start and table end, and remove the lines above and below the table.

- I changed the start tag and end tag to a cleaner <data> and </data> because there are often other attributes (such as CSS class), that have an effect on subsequent steps.

- Since HTML has a similar structure as XML, we can now use the Xml.Tables() function to transform the raw data to a table. NB: This is where it can go wrong. HTML pages may contain code that does not comply with the more strict XML guidelines. If you get an error, it will tell you on what line it goes wrong. If that happens, go back to the list and filter out the lines that give the error.

- Once you have converted the raw data back to a table, you can go ahead and transform the data back into the form that you wish. In this case, I have transformed it back to resemble the original table from the webpage.

I have tested this strategy with Power BI service, incremental refresh and scheduled refresh and it works like a charm.

If you have any suggestions on how to improve this code, please let me know.

Cheers,

Kirvis

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Kirvis,

I'm trying to apply your method to an HTML table I have. I'm getting an error:

Expression.Error: We expected a CountOrCondition value.

Details:

[Table]

This is apparently related to The RemoveTopRows step, am I missing something? Is there something here that needs to change?

The only things I've changed are URL and Start.

URL is pretty straight forward but here's the Start change

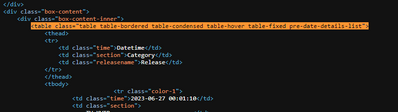

Start = "<table class=""table table-bordered table-condensed table-hover table-fixed pre-date-details-list"">",

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @kirvis ,

that looks like a very nice solution. Unfortunately I don't have the time currently to work on it.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Kirvis,

This is a great workaround and works very well. Well done!

What if the URL has multiple tables in and is updated say weekly with new tables added?

Curious to see how would that work out as there are many cases going around these days.

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For using online data and on premises data like Azure sql server, Share point online and SSAS etc,.

For scheduling refresh web page, it's a good work around.

Please check the below link for the same.

I hope this may help you.

Regards,

Manikumar

If this helps, Appreciate your KUDOS!

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @manikumar34 ,

Thanks for your response.

I am aware of the blog posts that Chris wrote, but if I'm not mistaken, he addresses a different issue. He does not use the function Web.Page() in his script. He does not have to because the response of the URL he uses is JSON, which Power Query handles very well.

The workaround I mention in my post is specifically about scraping data from web pages. As you can see in the MS documentation about sources I mention in the post, you need a gateway to be able to use scheduled refresh for that.

My goal for this workaround was to explicitly circumvent the need for setting up a gateway.

Hope that explains.

Kirvis

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.