
Sum based on date range

Hello, I am having trouble calculating a sum based on the following case in Power Query M.

I want to sum the "Value" column over the rows where both the Start_CW and END_CW dates fall within the range from Valid_from to Valid_to.

Thanks in advance,

Best regards

| Index | CW | Start_CW | END_CW | Valid_from | Valid_to | Value |
|---|---|---|---|---|---|---|
| 1 | 50 | 12/6/2021 | 12/12/2021 | 12/14/2021 | 12/25/2021 | 15 |
| 1 | 51 | 12/13/2021 | 12/19/2021 | 12/14/2021 | 12/25/2021 | 20 |
| 1 | 52 | 12/20/2021 | 12/26/2021 | 12/14/2021 | 12/25/2021 | 25 |
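To see which sample rows satisfy the condition, it can help to evaluate it as a per-row flag first. The sketch below rebuilds the three rows above with `#table` and adds a hypothetical `InRange` column. It assumes that "in between" is inclusive at both ends and that the dates are US-format (month/day/year).

```powerquery
let
    // The three sample rows from the question, typed directly as dates and numbers
    Source = #table(
        type table [Index = Int64.Type, CW = Int64.Type, Start_CW = date, END_CW = date,
                    Valid_from = date, Valid_to = date, Value = Int64.Type],
        {
            {1, 50, #date(2021, 12, 6),  #date(2021, 12, 12), #date(2021, 12, 14), #date(2021, 12, 25), 15},
            {1, 51, #date(2021, 12, 13), #date(2021, 12, 19), #date(2021, 12, 14), #date(2021, 12, 25), 20},
            {1, 52, #date(2021, 12, 20), #date(2021, 12, 26), #date(2021, 12, 14), #date(2021, 12, 25), 25}
        }
    ),
    // Assumption: the week must lie entirely inside the validity window, endpoints included
    WithFlag = Table.AddColumn(
        Source,
        "InRange",
        each [Start_CW] >= [Valid_from] and [END_CW] <= [Valid_to],
        type logical
    )
in
    WithFlag
```

On these three rows every `InRange` value is `false`. Weeks 50 and 51 start before 12/14/2021, and week 52 ends after 12/25/2021.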


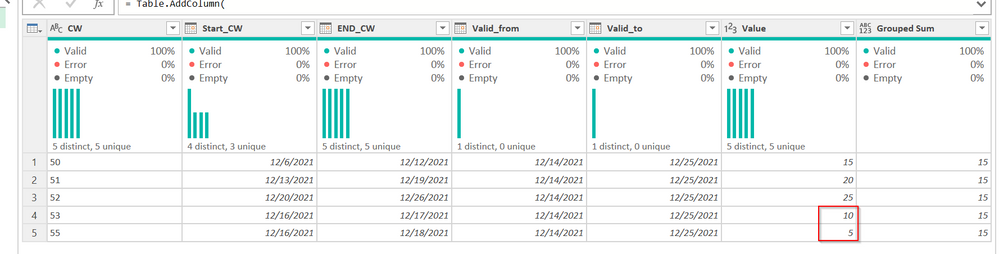

None of your start/end dates are between the valid from/to dates, so nothing will be returned.

I added some rows where the condition holds, but the query then returns the same total for every row.

```powerquery
let
    Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTI1ABKGRvpm+kYGRoYQNhAhcUyQOEamcI6pUqwO1AiYSmNkbZaEzTAyQJhhBJU0QFZpRoQZSO4whqpE0WZOhF8g7gA5wdQUmxkWhM0AOiMWAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Index = _t, CW = _t, Start_CW = _t, END_CW = _t, Valid_from = _t, Valid_to = _t, Value = _t]),
    #"Changed Type" = Table.TransformColumnTypes(Source,{{"Valid_from", type date}, {"Valid_to", type date}, {"Value", Int64.Type}, {"Start_CW", type date}, {"END_CW", type date}}),
    GroupedSum =
        Table.AddColumn(
            #"Changed Type",
            "Grouped Sum",
            each
                List.Sum(
                    Table.SelectRows(
                        #"Changed Type",
                        each [Start_CW] >= [Valid_from] and [END_CW] <= [Valid_to]
                    )[Value]
                )
        )
in
    GroupedSum
```

But I am sure that is not what you want.

Can you provide some valid data, as well as an example of the expected output?
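A likely reason the query above gives the same total on every row is that the inner `each` rebinds `_`. Inside it, `[Start_CW]`, `[Valid_from]` and the other field references point at the candidate row being filtered, not at the row the column is being computed for. The filter is therefore identical for every outer row. If the intended logic is "for each row, sum Value over the weeks that fall inside *this* row's Valid_from/Valid_to window", one way to express it is to name the outer row explicitly. This interpretation is an assumption, since no expected output was posted, and the parameter name `current` is purely illustrative.

```powerquery
let
    // Same three sample rows as in the question
    Source = #table(
        type table [Index = Int64.Type, CW = Int64.Type, Start_CW = date, END_CW = date,
                    Valid_from = date, Valid_to = date, Value = Int64.Type],
        {
            {1, 50, #date(2021, 12, 6),  #date(2021, 12, 12), #date(2021, 12, 14), #date(2021, 12, 25), 15},
            {1, 51, #date(2021, 12, 13), #date(2021, 12, 19), #date(2021, 12, 14), #date(2021, 12, 25), 20},
            {1, 52, #date(2021, 12, 20), #date(2021, 12, 26), #date(2021, 12, 14), #date(2021, 12, 25), 25}
        }
    ),
    GroupedSum = Table.AddColumn(
        Source,
        "Grouped Sum",
        // "current" is the row being computed; inside the inner each, [..] is the candidate row
        (current) => List.Sum(
            Table.SelectRows(
                Source,
                each [Start_CW] >= current[Valid_from] and [END_CW] <= current[Valid_to]
            )[Value]
        ),
        type nullable number
    )
in
    GroupedSum
```

With only the three sample rows, nothing matches any window, so every `Grouped Sum` is `null`. `List.Sum` returns `null` when the list has no non-null values. This version still scans the whole table once per row, so the performance caveat below applies to it as well.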

By the way, this will NOT perform well on large datasets. Power Query isn't set up to scan tables like this. A few hundred rows will be no problem. A few thousand will be noticeable. Over 10K-15K records it will be painful. Over 100K it won't finish. This is best done in DAX.
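When the condition only compares each row's own dates, as it effectively does in the query above, no nested `Table.SelectRows` is needed. The table is read once rather than once per row. The sketch below keeps `Value` only where the row is in range, then totals it with `Table.Group`. Grouping by `Index` is an assumption based on the word "group" in the question, and `MatchedValue` and `Matched Sum` are hypothetical column names.

```powerquery
let
    // Same three sample rows as in the question
    Source = #table(
        type table [Index = Int64.Type, CW = Int64.Type, Start_CW = date, END_CW = date,
                    Valid_from = date, Valid_to = date, Value = Int64.Type],
        {
            {1, 50, #date(2021, 12, 6),  #date(2021, 12, 12), #date(2021, 12, 14), #date(2021, 12, 25), 15},
            {1, 51, #date(2021, 12, 13), #date(2021, 12, 19), #date(2021, 12, 14), #date(2021, 12, 25), 20},
            {1, 52, #date(2021, 12, 20), #date(2021, 12, 26), #date(2021, 12, 14), #date(2021, 12, 25), 25}
        }
    ),
    // Keep Value when the row's week lies inside its own validity window, otherwise null
    Matched = Table.AddColumn(
        Source,
        "MatchedValue",
        each if [Start_CW] >= [Valid_from] and [END_CW] <= [Valid_to] then [Value] else null,
        type nullable number
    ),
    // List.Sum skips nulls and returns null if a group has no matching rows
    Grouped = Table.Group(
        Matched,
        {"Index"},
        {{"Matched Sum", each List.Sum([MatchedValue]), type nullable number}}
    )
in
    Grouped
```

Whether this fits depends on the expected output. If each row's total must draw on *other* rows' weeks, the per-row lookup (or a DAX measure) is still required.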

How to get good help fast. Help us help you.

How To Ask A Technical Question If you Really Want An Answer

How to Get Your Question Answered Quickly - Give us a good and concise explanation

How to provide sample data in the Power BI Forum - Provide data in a table format per the link, or share an Excel/CSV file via OneDrive, Dropbox, etc. Provide expected output using a screenshot of Excel or other image. Do not provide a screenshot of the source data. I cannot paste an image into Power BI tables.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting
