- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Slow Power Query & refresh performance w/ mult...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Slow Power Query & refresh performance w/ multiple merges/lookups possible cause

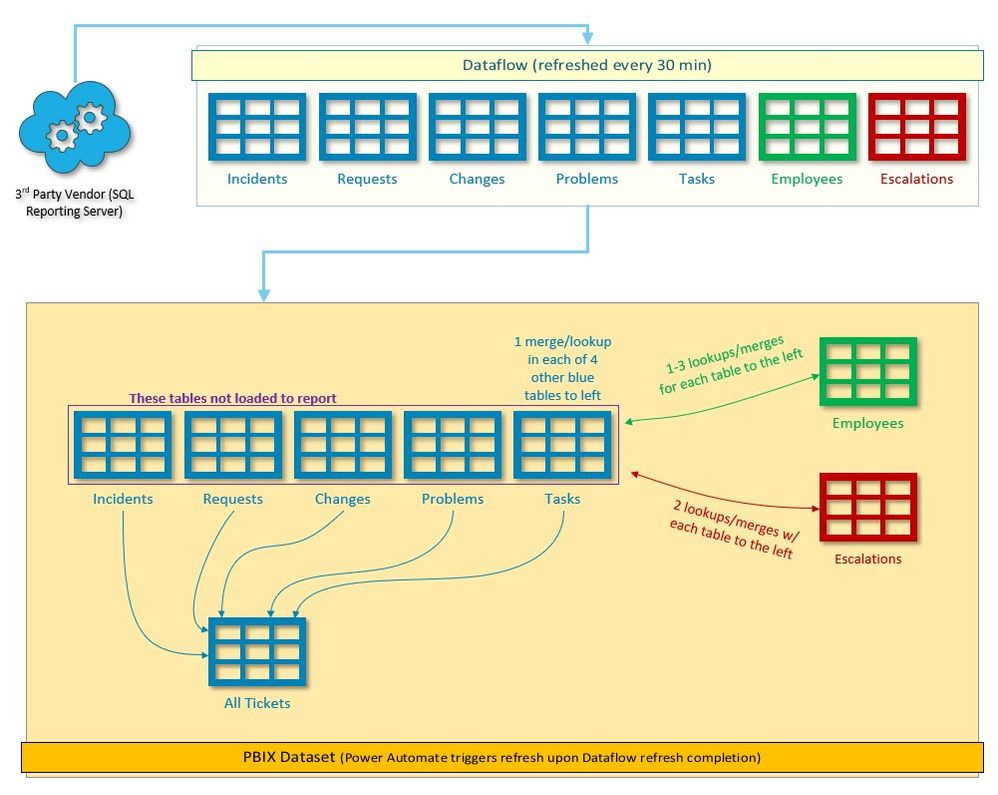

I'm working with Help Desk ticket data from a 3rd-party vendor's reporting server, and a Dataflow handles the initial pull of needed tables and some light transformations. Using Incremental Refresh, that takes 3-5 mins to complete and has scheduled refreshes every 30 mins.

PBIX file takes it from there and performs several table-to-table lookups to pull in Employee table name data (find names instead of user ID numbers) and key event DateTime values as each ticket ages (Escalation table), before one final merge of all ticket types into one reporting table that's loaded to the report. As a result, these 20 total merges have led to a dramatic slowdown of both refresh times and just working in these tables in Power Query... some attempts took hours. This diagram should give an overview:

I tried disabling background refresh to make working in PQ more friendly, but it didn't help much. I also tried using Table.Buffer after researching several posts, but I'm not sure I used it correctly (syntax was good, just wrong placement in query maybe?), as it didn't seem to speed anything up.

My question is: What can I do to speed things up? I thought by using Table.Buffer on tables I merged to for back-to-back steps would help ( STEP 1: Merge T1 -> Table.Buffer(T2)... STEP 2: Merge T1 -> T2 again. except on diff column ), but as I said, it didn't seem to help anything. I realize each merge costs valuable resources, and have only a few small ideas to reduce the total number of merges. Does anyone see where I can streamline this? The refresh length is so long now it sometimes fails to complete before the next half-hour Dataflow's refresh completes, resulting in either a skipped refresh, or near constant refreshing action.

Thanks in advance for all the gurus who respond.

Below is an example query from my Tasks table, which has the highest number of merge steps.

Source = PowerBI.Dataflows(null),

#"1562f35b-c92a-4b71-a782-27007ddc1a3a" = Source{[workspaceId="1562f35b-c92a-4b71-a782-27007ddc1a3a"]}[Data],

#"abd4997c-cf28-4628-939e-01bae91db5fb" = #"1562f35b-c92a-4b71-a782-27007ddc1a3a"{[dataflowId="abd4997c-cf28-4628-939e-01bae91db5fb"]}[Data],

Task1 = #"abd4997c-cf28-4628-939e-01bae91db5fb"{[entity="Task"]}[Data],

#"Removed Other Columns" = Table.SelectColumns(Task1,{"RecId", "Ticket Type", "AssignmentID", "Ticket Number", "Parent Ticket Number", "Created On", "Created On - short", "CreatedBy", "Resolved On", "Resolved On - short", "ResolvedByUID", "Completed On", "Completed On - short", "Status", "Subject", "Description", "Owner UID", "Owner Team", "ParentObjectDisplayID", "Address", "AssignedBy", "Assigned On", "EstimatedEffort", "LastModBy", "LastModDateTime", "ParentLink_RecID", "ParentLink_Category", "TargetDateTime", "Priority", "AcknowledgedBy", "AcknowledgedDateTime", "ActualEffort", "IsFinalState", "TeamManager", "Cost", "ResolutionEscLink_RecID", "ResolutionEscLink_Category", "ResponseEscLink_RecID", "ResponseEscLink_Category", "ActualCost", "EstimatedCost", "PlannedStartDate", "PlannedEndDate", "ActualStartDate", "ActualEndDate", "TaskCategory", "WorkflowInstanceId", "ReadOnly", "CostPerMinute", "Service", "IsInFinalState", "FinalTaskforIncident", "CreateKnowledgeFromTask", "Location", "PrimaryAssetLink_Category", "URL", "Data as of:", "PrimaryAssetLink_RecID"}),

#"Merged Escalation - Resolution" = Table.NestedJoin(#"Removed Other Columns", {"ResolutionEscLink_RecID"}, Frs_data_escalation_watch, {"RecId"}, "Frs_data_escalation_watch", JoinKind.LeftOuter),

#"Expanded Frs_data_escalation_watch" = Table.ExpandTableColumn(#"Merged Escalation - Resolution", "Frs_data_escalation_watch", {"L1DateTime", "L2DateTime", "L3DateTime", "BreachDateTime", "L1DateTime-UTC", "L2DateTime-UTC", "L3DateTime-UTC", "BreachDateTime-UTC", "L1Passed", "L2Passed", "L3Passed", "BreachPassed"}, {"L1DateTime", "L2DateTime", "L3DateTime", "BreachDateTime", "L1DateTime-UTC", "L2DateTime-UTC", "L3DateTime-UTC", "BreachDateTime-UTC", "L1Passed", "L2Passed", "L3Passed", "BreachPassed"}),

#"Merged Escalation - Response" = Table.NestedJoin(#"Expanded Frs_data_escalation_watch", {"ResponseEscLink_RecID"}, Frs_data_escalation_watch, {"RecId"}, "Frs_data_escalation_watch", JoinKind.LeftOuter),

#"Expanded Frs_data_escalation_watch1" = Table.ExpandTableColumn(#"Merged Escalation - Response", "Frs_data_escalation_watch", {"BreachDateTime", "BreachDateTime-UTC", "BreachPassed"}, {"Response BreachDateTime", "Response BreachDateTime-UTC", "Response BreachPassed"}),

#"Merged w/ Employee" = Table.NestedJoin(#"Expanded Frs_data_escalation_watch1", {"ResolvedByUID"}, #"Employee", {"LoginID"}, "Employee", JoinKind.LeftOuter),

#"Expanded Employee" = Table.ExpandTableColumn(#"Merged w/ Employee", "Employee", {"DisplayName"}, {"ResolvedBy"}),

#"Merged w/ Incident" = Table.NestedJoin(#"Expanded Employee", {"ParentLink_RecID"}, Incident, {"RecId"}, "Incident", JoinKind.LeftOuter),

#"Expanded Incident" = Table.ExpandTableColumn(#"Merged w/ Incident", "Incident", {"Customer Name", "CustomerLocation", "OrganizationUnitID", "Priority"}, {"Customer Name-Inc", "CustomerLocation-Inc", "OrganizationUnitID-Inc", "Priority-Inc"}),

#"Merged w/ ServiceReq" = Table.NestedJoin(#"Expanded Incident", {"ParentLink_RecID"}, ServiceReq, {"RecId"}, "ServiceReq", JoinKind.LeftOuter),

#"Expanded ServiceReq" = Table.ExpandTableColumn(#"Merged w/ ServiceReq", "ServiceReq", {"Priority", "Urgency", "OrganizationUnitID", "Customer Name", "CustomerLocation"}, {"Priority-Req", "Urgency-Req", "OrganizationUnitID-Req", "Customer Name-Req", "CustomerLocation-Req"}),

#"Merged w/ Change" = Table.NestedJoin(#"Expanded ServiceReq", {"ParentLink_RecID"}, Change, {"RecId"}, "Change", JoinKind.LeftOuter),

#"Expanded Change" = Table.ExpandTableColumn(#"Merged w/ Change", "Change", {"Customer Name", "OrganizationUnitID", "Priority"}, {"Customer Name-Chg", "OrganizationUnitID-Chg", "Priority-Chg"}),

#"Merged w/ Employee1" = Table.NestedJoin(#"Expanded Change", {"Owner UID"}, #"Employee", {"LoginID"}, "Employee", JoinKind.LeftOuter),

#"Replaced Value" = Table.ReplaceValue(#"Merged w/ Employee1","",null,Replacer.ReplaceValue,{"Priority-Chg"}),

#"Expanded Employee1" = Table.ExpandTableColumn(#"Replaced Value", "Employee", {"DisplayName"}, {"Owner"}),

#"Renamed Columns" = Table.RenameColumns(#"Expanded Employee1",{{"TargetDateTime", "Due On"}, {"Completed On", "Closed On"}, {"Completed On - short", "Closed On - short"}, {"Priority", "Priority-OLD"}}),

#"Merged Queries" = Table.NestedJoin(#"Renamed Columns", {"ParentLink_RecID"}, Problem, {"RecId"}, "Problem", JoinKind.LeftOuter),

#"Expanded Problem" = Table.ExpandTableColumn(#"Merged Queries", "Problem", {"Customer Name", "OrganizationUnitID", "Priority"}, {"Customer Name-Prb", "OrganizationUnitID-Prb", "Priority-Prb"}),

#"Merged Req+Inc Priority" = Table.AddColumn(#"Expanded Problem", "Priority", each Text.Combine({[#"Priority-Req"], [#"Priority-Inc"], [#"Priority-Prb"], [#"Priority-Chg"]}, ""), type text),

#"Merged Org Unit columns" = Table.AddColumn(#"Merged Req+Inc Priority", "Org Unit", each Text.Combine({[#"OrganizationUnitID-Inc"], [#"OrganizationUnitID-Req"], [#"OrganizationUnitID-Chg"], [#"OrganizationUnitID-Prb"]}, ""), type text),

#"Merged Customer columns" = Table.AddColumn(#"Merged Org Unit columns", "Customer Name", each Text.Combine({[#"Customer Name-Prb"], [#"Customer Name-Chg"], [#"Customer Name-Req"], [#"Customer Name-Inc"]}, ""), type text),

#"Merged CustomerLoc columns" = Table.AddColumn(#"Merged Customer columns", "CustomerLocation", each Text.Combine({[#"CustomerLocation-Req"], [#"CustomerLocation-Inc"]}, ""), type text),

#"Removed Columns" = Table.RemoveColumns(#"Merged CustomerLoc columns",{"Priority-Inc", "OrganizationUnitID-Inc", "Customer Name-Inc", "Priority-Req", "OrganizationUnitID-Req", "Customer Name-Req", "Customer Name-Chg", "OrganizationUnitID-Chg", "Priority-Chg", "Customer Name-Prb", "OrganizationUnitID-Prb", "Priority-Prb", "CustomerLocation-Inc", "CustomerLocation-Req"}),

#"Replaced Value1" = Table.ReplaceValue(#"Removed Columns","",null,Replacer.ReplaceValue,{"Priority"})

in

#"Replaced Value1"

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

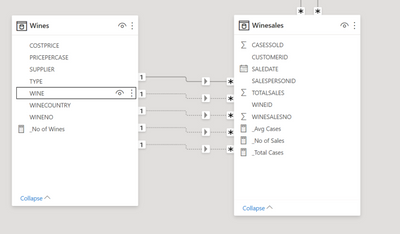

Whilst you can have only one active relationship in a table, you can have any number of inactive relationships (dotted lines) between tables. I'd create the inactive relationships in the model then use the DAX USERELATIONSHIP on measures to swap between them.

Stuart

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm going to take a different approach. You need those merges to build a proper model as I understand it. I would not bring those tables in separately then create filter relationships.

However, Power Query will bog down a server with multiple merges. PQ will try to create the SQL and it doesn't always do it as effeciently as a SQL coder can do in SSMS. Yes, Table.Buffer can help, but if the data is large, then it can hurt more than it helps.

My recommendation is create a view on the server where the merges take place there, then bring that view into PQ. You can try to bring them all in separately and use LOOKUPVALUE and other measures, to model your data in DAX, but your overall performance in the report will be worse than if the merges had been done at the source, and your DAX will sure as heck be more complex to write and maintain.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the different approach, I do appreciate that! Normally, my first step would be to try to do as much as possible by getting the source data modeled as much as possible before loading it. Unfortunately in my case, the source SQL server belongs to a 3rd party vendor, so we don't have the freedom to create views or even connect directly with SSMS due to their security policies. Dataflows ended up being our best connection option, and while it's possible to input a SQL query, that workflow prompts you for the query before you can even see the 1,095 data tables, making developing such queries quite a bit of trial and error - something there's not a lot of free time for.

Looks like my best solution is the USERELATIONSHIP option - at least until we can develop queries that push the work back on that robust SQL engine.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@MTracy - one more thing you can try. Intentionally break folding after the point of which incremental refresh is happening, if possible.

You can arbitrarly add a column that uses Table.TextBetweenDelimiters() or something that will not fold. I've done this where Table.Buffer doesn't help, which is anything over 100,000 records or something.

I've used this on several models where I needed 5-6 joins, and after the 4th or 5th join, it bogged the server down. So after the 3rd join/merge, for example, do something that breaks folding, then continue with the merges, then remove the arbitrary column I created. Power Query is still very efficient at merges even if the mashup engine is doing it vs sending it back to the server.

I hope the best for your project. Sometimes it is about trying 10 different things to figure out which one is the least of a problem. 😁

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If all of these merges are just for lookups, then you will have so much joy once you remove all those merges, make proper relationships and USERELATIONSHIP DAX measures. This is the exact data situation that the tabular data model was designed to handle extremely efficiently. These merges are creating so much more data, and unnecessarily wasting your resources. Just load those lookups tables to the data model, make the relationship ships, create the measures, be amazed at the results!

--Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you... it looks like the prevailing advice is to follow what you've also suggested. Time to roll up the sleeves and dive into retooling this data model!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Forgive what may be a stupid question, but why are you doing all those merges in Power Query ? I'd normally bring those tables into Power BI and then make relationships between them in the Data Model. Power Query will allways be slow merging data. From a cursory view all those tables can be related in the data model, which will result in a much better, faster model. Why do you need to merge in Power Query ?

Stuart

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for pointing that method out, and I do try to do that when possible. However, in some cases I have more than two columns that need data lookups from the other table... for example, a help ticket has an employee number for Opened By, Owner, Resolved By, and Closed By columns. So four different colunms with employee numbers, and one table to look them up in. With a max of one active relationship and one inactive relationship, I can't make it work to connect those four different colunms to the Employee's key column.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Whilst you can have only one active relationship in a table, you can have any number of inactive relationships (dotted lines) between tables. I'd create the inactive relationships in the model then use the DAX USERELATIONSHIP on measures to swap between them.

Stuart

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For some reason, I thought the max was two relationships, so that's definitely good to learn - thank you! My report already has over 25 measures in it, so I wonder if adding two-dozen more puts the report at risk for slow down on the user's end? Granted, since I'm mainly performing lookups and not calculations/aggregations, it may be wiser for me to take advantage of the 'RELATED' DAX function to draw in the needed column values instead of a measure. My main reason for pushing much of the work to PQ was to keep the report side from becoming too DAX-heavy and affecting user experience.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Measures are only run when they are placed on the canvas, so you can have as many measures as you like but if you only put a few of them on visualisations it won't affect performance. I've certainly seen Power BI reports with hundreds of measures and it doesn't affect load speed or performance. DAX is an efficient language if it's backed by a good Data Model, the key is to use the Star Schema to do the work, then DAX measures can leverage the filter context and only needs to run on a subset of records. Of course it's possible to write inefficient DAX with too much use of iterators which bypass the filter context and churn through all data records, but if you get the Data Model right that shouldn't be necessary.

Stuart

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Again, thanks Stuart. I'd thought perhaps the measures were "pre-calculated" as the model loads, so knowing there's no resources "cost" until they're put into visuals helps me feel more comfortable with creating more of them. Despite my egregious use of merges, the remainder of my model does resemble a star schema, and I definitely try to write efficient DAX (though I wish it were easier to recognize an improvement to the syntax). I really appreciate your guidance on this!

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.