- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Reset count of ID once they've all been seen, ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Reset count of ID once they've all been seen, count how many days it took then reset until next time

Hi,

I have 1500 unique IDs, everyday many are seen and some multiple times a day.

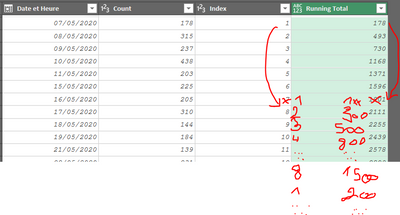

I would like to do a cumulative count and know how many days does it takes to see them all 1500, once it reaches that amount, the cumulative sum needs to reset for another count until the next time the goal is met.

I've done a running total after a grouped count by date, but my issue aside from the needed reset is that the duplicates needs to be removed based on the time period needed to attain the goal. If i'm grouping my IDs by date then i'm losing the ability to sort out duplicates...

So i'm guessing I need to first find how much time is needed, then remove the duplicates from a custom column spanning over its own run tower the goal, and then, calculate the running...

Here's an ugly paint mockup of what i'd like my final result to look like (I realize I need my date format to be date.time otherwise i'll never get a correct goal and it'll be tricky)

I've found this solution here from Lind25 but the syntax is wrong, maybe from a previous PQ version:

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

AddedIndex = Table.AddIndexColumn(Source, "Index", 1, 1),

#"Added Custom" = Table.AddColumn(AddedIndex, "Running total reset", each List.Accumulate(List.FirstN(AddedIndex[Difference],[Index]),0,(state,current)=>if state+current > 60 then 0 else state+current))

in

#"Added Custom"

Any takers ? I'm completely lost on that one...

Thanks

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've simulated a scenario with only 10 IDs using some of the data you provided, see if I'm on the right track? If I'm right, I'll think of the code to implement the end result.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I did a test performing the core operation in Python and it seems to finish in less than 20 secs for the data you posted. See it in the attached file. You'll have to update the path to the excel file you shared. Here is the M code for the main query:

Note it should be cleaned up a bit, as it is doing a lot of stuff that might not be necessary

let

Source = Excel.Workbook(File.Contents("d:\Downloads\Sample PQ Help.xlsx"), null, true),

Maintenance_Itinérante___CSV_Table = Source{[Item="Maintenance_Itinérante___CSV",Kind="Table"]}[Data],

#"Changed Type" = Table.TransformColumnTypes(Maintenance_Itinérante___CSV_Table,{{"Date&Time Seen", type datetime}, {"ID", Int64.Type}}),

#"Removed Duplicates" = Table.Distinct(#"Changed Type", {"Date&Time Seen", "ID"}),

#"Sorted Rows" = Table.Sort(#"Removed Duplicates",{{"Date&Time Seen", Order.Ascending}}),

#"Added Custom" = Table.AddColumn(#"Sorted Rows", "Date", each Date.From([#"Date&Time Seen"])),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"Date&Time Seen"}),

#"Reordered Columns" = Table.ReorderColumns(#"Removed Columns",{"Date", "ID"}),

#"Removed Duplicates1" = Table.Distinct(#"Reordered Columns", {"Date", "ID"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Removed Duplicates1",{{"Date", Int64.Type}}),

#"Run Python script" = Python.Execute("# 'dataset' holds the input data for this script#(lf)groupeddataset = dataset.groupby(['Date'])['ID'].apply(lambda x: list(x)).to_frame().reset_index()#(lf)#test.groupby(['Pos'])['Pos2'].apply(lambda x: list(x)).to_frame().reset_index()#(lf)a = list(groupeddataset['ID']) #(lf)acc=list(initial['ID']); res=[]#(lf)for i in range(len(a)):#(lf) acc=set(acc)-set(a[i])#(lf) #acc=set(acc)-set([a[i]])#(lf) if acc == set(): #(lf) acc=initial#(lf) res=res+[i]#(lf)#(lf)output=pandas.DataFrame(res,columns=['Positions'])",[dataset=#"Changed Type1", initial=Table.SelectRows(All_IDsT,each [ID]<> 15133)]),

groupeddataset = #"Run Python script"{[Name="groupeddataset"]}[Value],

groupeddataset2 = Table.TransformColumnTypes(groupeddataset,{{"Date", Int64.Type}}),

#"Changed Type3" = Table.TransformColumnTypes(groupeddataset2,{{"Date", type date}}),

#"Sorted Rows1" = Table.Sort(#"Changed Type3",{{"Date", Order.Ascending}}),

CompletionPositionsT = #"Run Python script"{[Name="output"]}[Value],

CompletionPositionsT2 = Table.TransformColumnTypes(CompletionPositionsT,{{"Positions", Int64.Type}}),

result = List.Select(groupeddataset2[Date], each List.Contains(CompletionPositionsT2[Positions],_ - List.Min(groupeddataset2[Date]))),

#"Converted to Table" = Table.FromList(result, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Changed Type2" = Table.TransformColumnTypes(#"Converted to Table",{{"Column1", type date}}),

#"Renamed Columns" = Table.RenameColumns(#"Changed Type2",{{"Column1", "Completion dates"}})

in

#"Renamed Columns"

The main step is #"Run Python script", with the following Python code:

groupeddataset = dataset.groupby(['Date'])['ID'].apply(lambda x: list(x)).to_frame().reset_index()

a = list(groupeddataset['ID'])

acc=list(initial['ID']); res=[]

for i in range(len(a)):

acc=set(acc)-set(a[i])

if acc == set():

acc=initial

res=res+[i]

output=pandas.DataFrame(res,columns=['Positions'])

It groups the IDs by Dates (day level) and then operates on that to extract a list of the positions where each section with all IDs seen ends. Note I have filtered out ID 15133 from the list of IDs so that there is at least one section that has all IDs

Please mark the question solved when done and consider giving kudos if posts are helpful.

Contact me privately for support with any larger-scale BI needs, tutoring, etc.

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

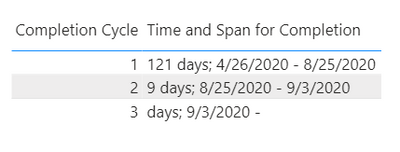

As much a I love M, I will put another plug in for the DAX approach for this one. I don't know if your IDs have category columns associated with them and you will want to also have slicers (which would require a DAX approach). Attached is a pbix with your example data. It uses the below DAX expression to generate the shown table (I also added a small table with the "Cycle" values of 1,2,3). You could do them separate but it is calculation intensive (so since I already had the virtual table in the measure, I generated both outputs and concatenated them together).

Time and Span for Completion =

VAR thiscycle =

SELECTEDVALUE ( Cycle[Completion Cycle] )

VAR ids =

ALLSELECTED ( Seen[ID] )

VAR idcount =

COUNTROWS ( ids )

VAR summarylastcycle =

ADDCOLUMNS (

VALUES ( Seen[Date&Time Seen] ),

"IDsSoFar",

VAR thistime = Seen[Date&Time Seen]

RETURN

COUNTROWS (

FILTER (

ids,

CALCULATE (

COUNT ( Seen[ID] ),

Seen[Date&Time Seen] <= thistime

) >= thiscycle - 1

)

)

)

VAR completiontimelastcycle =

IF (

thiscycle = 1,

MIN ( Seen[Date&Time Seen] ),

MINX (

FILTER (

summarylastcycle,

[IDsSoFar] >= idcount

),

Seen[Date&Time Seen]

)

)

VAR summarythiscycle =

ADDCOLUMNS (

FILTER (

VALUES ( Seen[Date&Time Seen] ),

Seen[Date&Time Seen] >= completiontimelastcycle

),

"IDsSoFar",

VAR thistime = Seen[Date&Time Seen]

RETURN

COUNTROWS (

FILTER (

ids,

CALCULATE (

COUNT ( Seen[ID] ),

Seen[Date&Time Seen] <= thistime

) >= thiscycle

)

)

)

VAR completiontimethiscycle =

MINX (

FILTER (

summarythiscycle,

[IDsSoFar] >= idcount

),

Seen[Date&Time Seen]

)

VAR span =

DATEDIFF (

completiontimelastcycle,

completiontimethiscycle,

DAY

)

VAR range = completiontimelastcycle & " - " & completiontimethiscycle

RETURN

span & " days" & "; " & range

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

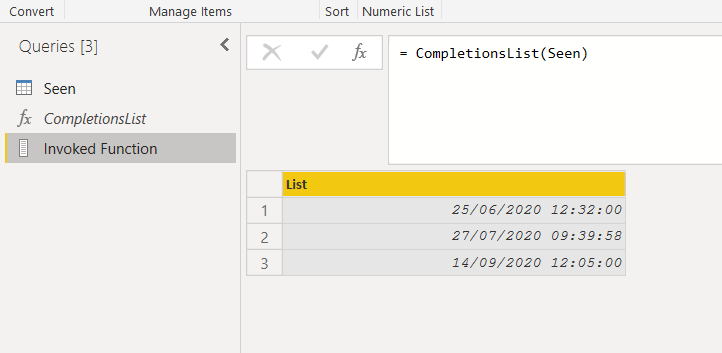

the code that implements the algorithm I described in the previous message

let

completion = (tab)=>

let

grpID=Table.Group(tab, {"ID"}, {"grp", each _}),

nids=Table.RowCount(grpID),

currLastDate=List.Min(List.Last(grpID[grp])[Date]),

rest= Table.SelectRows(tab, each _[Date] > currLastDate ),

result= if Table.RowCount(Table.Distinct(rest,"ID")) < nids then {currLastDate} else {currLastDate} & @ completion(rest)

in

result

in

completion

This function receives as input a table with Date and ID columns and provides a list of dates where the saturation cycle of all the distinct ids of the table is completed.

It seems very fast, respect previou solution based on list.generate and list.difference and so on ...

to obtain a more pleasant output, I grafted a copy of the vector of ids in the original table in three different random points of the [ID] column 😊

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

It depends on the specific needs of @Kiwizqt , the logic is adjustable

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ziying35

I totally agree with you. The logic depends on the specifications.

I don't know if @Kiwizqt thought of a situation like the one you showed.

If it is important for him to distinguish situations, he can choose between red (mine) and blue (yours).

I just point out that the solution you intended may leave some transactions out of each completion block In this case the row n.90) , which I don't know is important or irrelevant.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

Earlier I posted a solution using the List.Generate function, and I've simulated every possible scenario I can think of in the solution, so you'll see where I'm going with this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ziying35

you are right. Your solution leaves no unassigned records for the various blocks.

I apologize for the oversight.

I applied your fix to the recursive function, but then misread the resulting table.

however, the difference in the result remains, which in some cases could be important.

At this point it must be @Kiwizqt who decides what is preferable for its purposes.

I try to give an extreme example, to clarify the difference between the two approaches.

Correct me if I'm wrong.

If on the day a block is completed, the last block ID is present several times, you get the last one, while I get the first one.

A consequence of this different choice is that the next block, in my case, starts from the same day and already contains an occurrence of this ID; in the case of the choice made by ZiYing, the next block does not start with the same ID and therefore the completion of the block also depends on the subsequent occurrence of this ID.

Obviously there is no right or wrong solution they are just different.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

Yes, you have understood my line of thinking perfectly. There is strictly no right or wrong solution for either of us, and the final choice will be @Kiwizqt's actual needs

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

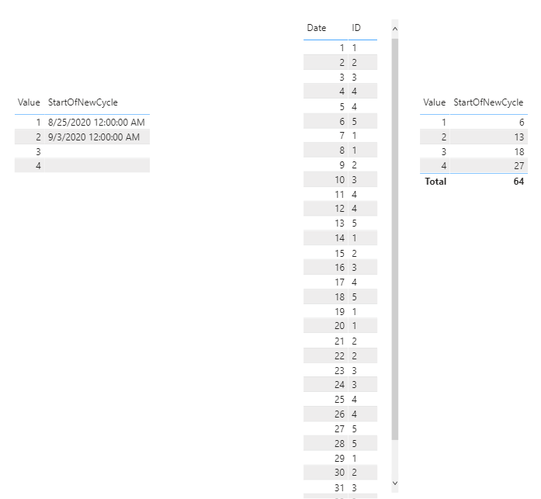

FYI that I thought of a way to do this with DAX and no recursion. See the attached pbix. There were only two cycles in the example data, so I made a mock table too with integer date values (table on right). I made a DAX calculated table with the start date of each cycle, and it would be easy to get the desired measures from it. It would also be possible to put this expression in a variable in a measure and calculate it dynamically (not sure how performant it will be at large scale, and doing it as a table was easier to troubleshoot). Here is the DAX expression that appears to work. Note the upper limit of the GENERATESERIES function should be changed to the max potential # of cycles).

CycleStarts2 =

VAR counts =

ADDCOLUMNS (

CROSSJOIN (

VALUES ( Seen2[Date] ),

VALUES ( Seen2[ID] )

),

"count",

VAR thisDate = Seen2[Date]

RETURN

CALCULATE (

COUNT ( Seen2[ID] ),

ALL ( Seen2[Date] ),

Seen2[Date] <= thisDate

) + 0

)

VAR mincounts =

ADDCOLUMNS (

SUMMARIZE (

counts,

Seen2[Date]

),

"mincount",

VAR thisDate = Seen2[Date]

RETURN

MINX (

FILTER (

counts,

Seen2[Date] = thisDate

),

[count]

)

)

VAR cycles2 =

ADDCOLUMNS (

GENERATESERIES (

1,

4,

1

),

"StartOfNewCycle",

MINX (

FILTER (

mincounts,

[mincount] = [Value]

),

Seen2[Date]

)

)

RETURN

cycles2

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This seems to yield the same erroneous result for the example I provided earlier, or am I mistaken? It is a very smart and elegant implementation but the logic for the core computation is essentially the same as before, thus carrying the same flaws. The code still looks, for cycle N, for the earliest date on which all IDs have been seen N times since the beginning. That does work for N=1 but not for N>1.

Best

| Date | ID | |

| 1 | 100 | |

| 2 | 100 | |

| 3 | 100 | |

| 4 | 200 | |

| 5 | 200 | |

| 6 | 300 | <--1st period |

| 7 | 300 | <--2nd period |

| 8 | 200 | |

| 9 | 300 | <--3rd period |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It did work with the set I created but it did break down again when I threw other patterns at it. I had an extra virtual column in there that was more complex but closer to the solution I think. When I had both, the simpler one ("mincount") also worked (which I had created as part of the 2nd one), so I got rid of the more complex one. Below is a variation on it. I think this is close, but the last piece of logic is stumping me. It works by looking ahead and finding the next datetime where the running total count of each ID is different (i.e., at least +1) by using ISBLANK on the COUNTROWS of INTERSECT.

This has gotten pretty academic at this point, but here is the table expression if anyone is still following this one. It calculates against the Seen2 table I had in previous pbix with numeric "datetime" values. It shows when the next full cycle occurs from that point (date), but doesn't get it relative to the start (again, not recursive which may be the fundamental limitation).

CycleStarts4 =

VAR counts =

ADDCOLUMNS (

CROSSJOIN (

VALUES ( Seen2[Date] ),

VALUES ( Seen2[ID] )

),

"count",

VAR thisDate = Seen2[Date]

RETURN

CALCULATE (

COUNT ( Seen2[ID] ),

ALL ( Seen2[Date] ),

Seen2[Date] <= thisDate

) + 0

)

VAR withlast =

ADDCOLUMNS (

ADDCOLUMNS (

VALUES ( Seen2[Date] ),

"mincount",

VAR thisdate3 = Seen2[Date]

RETURN

MINX (

FILTER (

counts,

Seen2[Date] = thisdate3

),

[count]

)

),

"nextcyclestart",

VAR thisdate2 = Seen2[Date]

VAR thismin = [mincount]

VAR thisvalues =

SUMMARIZE (

FILTER (

counts,

Seen2[Date] = thisdate2

),

Seen2[ID],

[count]

)

VAR othervalues =

FILTER (

FILTER (

counts,

Seen2[Date] > thisdate2

),

ISBLANK (

VAR thisdate3 = Seen2[Date]

VAR prevvalues =

SUMMARIZE (

FILTER (

counts,

Seen2[Date] = thisdate3

),

Seen2[ID],

[count]

)

RETURN

COUNTROWS (

INTERSECT (

thisvalues,

prevvalues

)

)

)

)

RETURN

MINX (

othervalues,

Seen2[Date]

)

)

RETURN

withlast

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the code that implements the algorithm I described in the previous message

let

completion = (tab)=>

let

grpID=Table.Group(tab, {"ID"}, {"grp", each _}),

nids=Table.RowCount(grpID),

currLastDate=List.Min(List.Last(grpID[grp])[Date]),

rest= Table.SelectRows(tab, each _[Date] > currLastDate ),

result= if Table.RowCount(Table.Distinct(rest,"ID")) < nids then {currLastDate} else {currLastDate} & @ completion(rest)

in

result

in

completion

This function receives as input a table with Date and ID columns and provides a list of dates where the saturation cycle of all the distinct ids of the table is completed.

It seems very fast, respect previou solution based on list.generate and list.difference and so on ...

to obtain a more pleasant output, I grafted a copy of the vector of ids in the original table in three different random points of the [ID] column 😊

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

let

completion = (tab)=>

let

grpID=Table.Group(tab, {"ID"}, {"grp", each _}),

nids=Table.RowCount(grpID),

currFirstDate=Table.First(List.First(grpID[grp]),"Date"),

currLastDate=Table.First(List.Last(grpID[grp]),"Date"),

rest= Table.SelectRows(tab, each _[Date] > currLastDate[Date] ),

result= if Table.RowCount(Table.Distinct(rest,"ID")) < nids then {[Start=currFirstDate, End=currLastDate]} else {[Start=currFirstDate, End=currLastDate]} & @ completion(rest)

in

result

in

completion

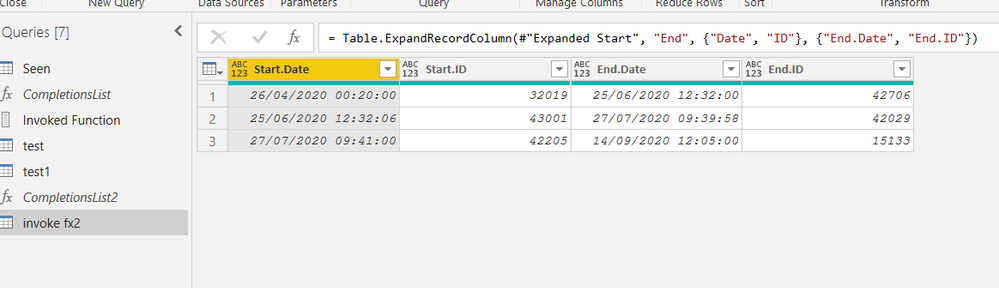

invoking above function

let

Source = Table.FromRecords(CompletionsList2(Seen)),

#"Expanded Start" = Table.ExpandRecordColumn(Source, "Start", {"Date", "ID"}, {"Start.Date", "Start.ID"}),

#"Expanded End" = Table.ExpandRecordColumn(#"Expanded Start", "End", {"Date", "ID"}, {"End.Date", "End.ID"})

in

#"Expanded End"

and get:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

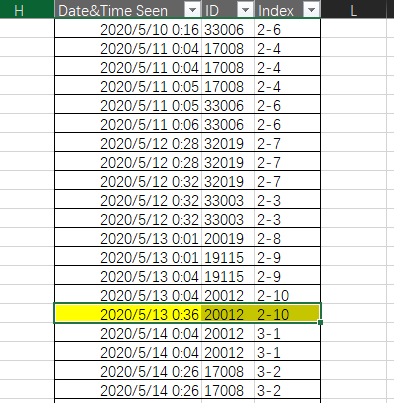

Hi, @Anonymous

If the last ID happens to occur consecutively at the end of the loop, your code has a problem! For example, in the following case, which I've marked yellow.

The recursive code needs to be changed, Here's how I modified it.

currLastDate=let tb = List.Last(grpID[grp])

in Table.Last(Table.FirstN(tb, each Date.From([#"Date&Time Seen"]) = Date.From(tb[#"Date&Time Seen"]{0})), "Date"),

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

About the changing the recursive code to implement the different logic suggested by @ziying35 , I would like to propose a different way of obtaining the same result.

In practice, this is done: take the last element of the first group of list of groups by day of the last group of list of groups by ID.

In M code, this becomes

let

completion = (tab) =>

let

grpID = Table.Group(tab, {"ID"}, {"grp", each _}),

nids = Table.RowCount(grpID),

currFirstDate = Table.First(List.First(grpID[grp]), "Date"),

currLastDate = Table.Last(

List.First(

Table.Group(

List.Last(grpID[grp]),

{"Date"},

{"idByday", each _},

GroupKind.Local,

(x, y) => Date.Day(x[Date]) - Date.Day(y[Date])

)[idByday]

),

"Date"

),

rest = Table.SelectRows(tab, each _[Date] > currLastDate[Date]),

result =

if Table.RowCount(Table.Distinct(rest, "ID")) < nids then

{[Start = currFirstDate, End = currLastDate]}

else

{[Start = currFirstDate, End = currLastDate]} & @completion(rest)

in

result

in

completion

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @Anonymous

This recursive solution of yours is awesome, can you upload the file of this solution? lt's been great to study with you guys.

Thanks in advance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ziying35

here the files (data from Kiwzqt and my pibx) :

per scaricarli, fai click sul seguente link e segui le istruzioni.

As you can verify the table Seen used contains 3 times the ID 15133 which is not rpesent in the original table.

Those are the three positions where I grafted the entire vector of ids (Total ID) to get at least three full "completion" and be able to test the function to see if, as well as pretty, it really worked ...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous , Thanks for sharing that.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

Genius!! Fantastic solution.

If I am not mistaken, the "completion "function implicitly assumes that the table it receives initially:

1. is sorted by (ascending) date

2. has exactly all the IDs we are interested in (not more, not fewer)

This however can easily be checked and the table can be prepared before invoking the function. In the case that the number of IDs in the table is fewer than those of interest, we can directly say there are no complete periods.

Please mark the question solved when done and consider giving kudos if posts are helpful.

Contact me privately for support with any larger-scale BI needs, tutoring, etc.

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

too generous.

Yes, as you observe, the function does what is required if the input table is sorted in ascending order on the given column.

I thought about this and wanted to put a sort statement at the beginning of the function, but given the nature of the data arriving, the chronological order should be guaranteed.

For IDs, the logic of the function is based on the fact that all (distinct) IDs in the ID column make up the universe of IDs.

The effectiveness of the algorithm in quickly solving the problem is based on the fact that, in a certain sense, instead of multiplying the size of the table (100k records) by the size of the IDs (1.5k), then making 1.5M comparisons , divides the 100k records working only on the groups, which are at most 1.5k. (so the improvement is about 1000 times!?!?!)

Among other things, on these groups only the library functions (Table.First, List.Last, etc.) are used, which are very efficient as they are written in a language suitable by people who do these things for their profession.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Kiwizqt

Unfortunately I am not able to directly evaluate the code in the case of @AlB because my version of PBI is not updated to the point of being able to open the file (which I keep until my IT department updates the sw); in @mahoneypat 's case because I don't know anything about DAX at all.

In any case, based on some parts of what is reported in their messages, I have made the following reflections which I think it is useful to evaluate.

From the AIB post it is clear (I had detected it myself by doing some checks directly on the excel file containing the Seen and Total ID tables) that in the Seen table there are not all the IDs of the Total ID.

AIB, removing the lines relating to ID 15133, as I understand, found only one "complete" period.

While Pat found two: one that ends on 25/08 and one that ends on 3/9.

In my post I have attached a file where two full periods are detected one as Pat (and probably as AIB, but I can't evaluate its code) and one ending 9/19.

My conjecture, to be evaluated and possibly refuted, is that the second period of pat is incorrect, as it does not include the IDs that my script finds different between 3/9 and 19/9.

But mine is not correct either: in fact the first period has 1373 different IDs (in the Total ID list provided are 1374); the second period has 1368 different IDs (and Pat's second period has even fewer, I suppose).

What happened: having seen in the excel file that the ids between the Seen table and Total ID were not matching, I thought of making myself independent from the Total ID list and obtaining the list by grouping By of the Seen.

What happens is that after the first group there are not all the complete ids (as AIB seems to have detected) so my group By on the rest of the records resulted in a smaller group of IDs (1368). This is a problem that can be overcome with a simple check or by using the ids list of the first broup by.

I have only sketched the idea of the solution, having seen that you are able to write the code yourself, but if you want, I can complete the solution by providing as output the table of the complete periods and the dates in which they open and close.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's getting late here, so I'll start tomorrow with a solution in case only all valid IDs exist.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If the interesting solution that @Anonymous suggests is slow when the tables are large like in your case, perhaps the core operation can be done in a Python script invoked from power query. It would probably be quite faster.

Also, does the data you have provided yield the expected result you mentioned before as seen below? It doesn't look like it. Can you provide the full data that should yield the result in the pic below?

Please mark the question solved when done and consider giving kudos if posts are helpful.

Contact me privately for support with any larger-scale BI needs, tutoring, etc.

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AlBApologies I didn't properly answer your last paragraph, those are not factual datas, I only wrote so to provide a desired structural outcome. Days to achieve & Time span values are not related to the datas I submitted before.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.