- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Removing nonbreaking space and carriage return fro...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Removing nonbreaking space and carriage return from pipe delimited text file

Hello!

I have a pipe-delimited text file that has non-breaking spaces followed by carriage returns within some of the address fields. When I try to import this via Power Query, it reads those line breaks as new records. I'm trying to find a way to replace those combo "space and CR" with any other character possible to fix the error so that Power Query can see the full row correctly. I can't seem to find a working model in Transform>Replace thus far despite trying several combos (non-breaking space + CR, space via keyboard + CR, replacing CR altogether, etc.). Is there a way to accomplish this such that I can correctly read each record into Power Query?

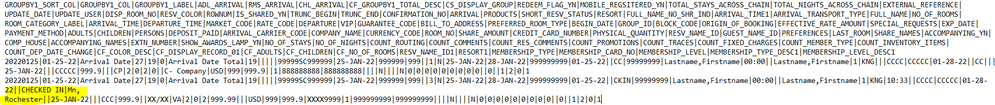

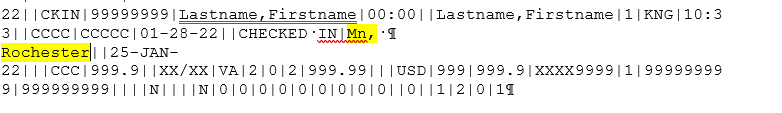

In the images enclosed, you can see the file structure from text reader (problem region highlighted), as well as the formatting characters sitting between the text when read in Word (same region), PII data has been anonymized in both, but I'm hoping the structure remains clear. The header row has no problems, and the first data record has no problems, but the address block in the 2nd record has the non-breaking space followed by carriage return that causes PQ to interpret this as the start of a new record. The rest of that 2nd record is fine, but is interpreted as the 3rd record of the data set.

Any help in a method that might work to remove/replace these problem combination of characters would be most appreciated!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think something like this will work (though you may need to adjust the exact new line and space characters):

let

Source = Lines.FromBinary(File.Contents("C:\Users\aolson\Downloads\TestCSV.csv")),

ReplaceText = Text.Replace(Lines.ToText(Source)," #(cr)#(lf)", " "),

TextToList = List.RemoveItems(Text.Split(ReplaceText, "#(cr)#(lf)"), {""}),

ToTable = Table.FromList(TextToList, Splitter.SplitTextByDelimiter("|"))

in

ToTable- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think something like this will work (though you may need to adjust the exact new line and space characters):

let

Source = Lines.FromBinary(File.Contents("C:\Users\aolson\Downloads\TestCSV.csv")),

ReplaceText = Text.Replace(Lines.ToText(Source)," #(cr)#(lf)", " "),

TextToList = List.RemoveItems(Text.Split(ReplaceText, "#(cr)#(lf)"), {""}),

ToTable = Table.FromList(TextToList, Splitter.SplitTextByDelimiter("|"))

in

ToTable- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Holy moly, it worked perfectly. I was trying a long loop to read in line-by-line and brute force a find and replace, but this did it in one step even with 49K rows, thanks so much for your help!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ronrsnfld I wish I had a choice in the matter, but the exports come from a legacy SaaS platform that has apparently never heard of quoted text or escape characters. The CRs are easy enough to get rid of manually (open text reader, do a RegEx-type search for carriage returns and replace them with spaces, hyphens, etc. and save changes), but there are hundreds of files to process in each dump, kind of defeats the purpose of running batch conversions in the first place.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And here is PQ code that can handle those included carriage returns. It works on your limited one row sample.

Read the code comments and explore the applied steps to better understand the algorithm.

If this data will be going to an Excel worksheet, I suggest doing the Text.ReplaceRange using linefeed rather than carriage return as that seems to be standard in Excel multiline cells (eg replace "#(cr)" with "#(lf)" in the Text.ReplaceRange function)

let

//Read in file as a Text File

Source = Table.FromColumns({Lines.FromBinary(File.Contents("full_pathname"), null, null, 1252)}),

//create grouping column

// add Index column

// add custom column the = Index value if line does NOT end with nbsp (else null)

// fill up

#"Added Index" = Table.AddIndexColumn(Source, "Index", 0, 1, Int64.Type),

#"Added Custom" = Table.AddColumn(#"Added Index", "grouper", each if Text.EndsWith([Column1],"#(00A0)") then null else [Index]),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"Index"}),

#"Filled Up" = Table.FillUp(#"Removed Columns",{"grouper"}),

//Group by the "grouper" column

//Concatentate the rows after replacing any terminal nbsp with cr

#"Grouped Rows" = Table.Group(#"Filled Up", {"grouper"}, {

{"textStrings", (t)=>

Text.Combine(

List.Transform(t[Column1],

each if Text.EndsWith(_,"#(00A0)")

then Text.ReplaceRange(_,Text.Length(_),1,"#(cr)")

else _),

"")}

}),

//Remove the grouper column

#"Removed Columns1" = Table.RemoveColumns(#"Grouped Rows",{"grouper"}),

//Split by the Pipe

#"Split Column by Delimiter" = Table.SplitColumn(#"Removed Columns1", "textStrings",

Splitter.SplitTextByDelimiter("|", QuoteStyle.None))

in

#"Split Column by Delimiter"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You might need a different tool to do this optimally. But in PQ you could certainly detect the <nbsp><cr> combinations and replace them with something (or use that to combine with the next line, depending on the rest of your code, and your data). If you want, upload a sample text file that has the problem, and I'll take a look.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The convention, for a csv file, is that if certain characters are included within a section, that section should be surrounded by text qualification characters -- usually a double quote. Those certain characters include the comma and the carriage return. Your csv file does not have that, so one solution would be to have the csv file better formed.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.