- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Remove the duplicate values without consolidating ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Remove the duplicate values without consolidating values

Hello,

Please help to create custom table by removing duplicate values, But it should not consolidate in column. Below is the table i have

| sessionid | startdatetime | enddatetime | KeyValue | Total_Sec |

| 4ef0-b6b2-b48f455cb558 | 23-10-2019 16.26 | 23-10-2019 16.26 | ROI4387 | 6 |

| 41f3-846a-3f78e208ff2f | 23-10-2019 16.31 | 23-10-2019 16.33 | ROI4111 | 88 |

| 41f3-846a-3f78e208ff2f | 23-10-2019 16.31 | 23-10-2019 16.33 | ROI4464 | 88 |

| 41f3-846a-3f78e208ff2f | 23-10-2019 16.31 | 23-10-2019 16.33 | ROI1169 | 88 |

| 424c-a789-575a668aecc8 | 23-10-2019 16.36 | 23-10-2019 16.36 | ROI1413 | 5 |

| 4307-8cc7-4ae053b1dd92 | 23-10-2019 16.41 | 23-10-2019 16.42 | ROI4210 | 18 |

| 4307-8cc7-4ae053b1dd92 | 23-10-2019 16.41 | 23-10-2019 16.42 | ROI4134 | 18 |

| 421f-a4d9-aa77376b67df | 23-10-2019 16.51 | 23-10-2019 16.51 | ROI4335 | 6 |

| 49ec-a047-ade8351fdd53 | 23-10-2019 17.01 | 23-10-2019 17.01 | ROI1222 | 5 |

| 47a1-9597-222bafe78f25 | 23-10-2019 17.07 | 24-10-2019 16.41 | ROI4449 | 84853 |

| 47a1-9597-222bafe78f25 | 23-10-2019 17.07 | 24-10-2019 16.41 | ROI4270 | 84853 |

| 4171-b340-8dcdda926b9b | 01-11-2019 7.00 | 01-11-2019 7.01 | ROI4404 | 94 |

| 48ba-b338-7247f6c8a12a | 04-11-2019 9.53 | 04-11-2019 9.56 | ROI4480 | 160 |

My requirement is have a custom column with below data with max limit of value 50000, by using unique sessionid.

| sessionid | startdatetime | enddatetime | KeyValue | Total_Sec | Custom colume |

| 4ef0-b6b2-b48f455cb558 | 23-10-2019 16.26 | 23-10-2019 16.26 | ROI4387 | 6 | 6 |

| 41f3-846a-3f78e208ff2f | 23-10-2019 16.31 | 23-10-2019 16.33 | ROI4111 | 88 | 88 |

| 41f3-846a-3f78e208ff2f | 23-10-2019 16.31 | 23-10-2019 16.33 | ROI4464 | 88 | |

| 41f3-846a-3f78e208ff2f | 23-10-2019 16.31 | 23-10-2019 16.33 | ROI1169 | 88 | |

| 424c-a789-575a668aecc8 | 23-10-2019 16.36 | 23-10-2019 16.36 | ROI1413 | 5 | 5 |

| 4307-8cc7-4ae053b1dd92 | 23-10-2019 16.41 | 23-10-2019 16.42 | ROI4210 | 18 | 18 |

| 4307-8cc7-4ae053b1dd92 | 23-10-2019 16.41 | 23-10-2019 16.42 | ROI4134 | 18 | |

| 421f-a4d9-aa77376b67df | 23-10-2019 16.51 | 23-10-2019 16.51 | ROI4335 | 6 | 6 |

| 49ec-a047-ade8351fdd53 | 23-10-2019 17.01 | 23-10-2019 17.01 | ROI1222 | 5 | 5 |

| 47a1-9597-222bafe78f25 | 23-10-2019 17.07 | 24-10-2019 16.41 | ROI4449 | 84853 | 50000 |

| 47a1-9597-222bafe78f25 | 23-10-2019 17.07 | 24-10-2019 16.41 | ROI4270 | 84853 | |

| 4171-b340-8dcdda926b9b | 01-11-2019 7.00 | 01-11-2019 7.01 | ROI4404 | 94 | 94 |

| 48ba-b338-7247f6c8a12a | 04-11-2019 9.53 | 04-11-2019 9.56 | ROI4480 | 160 | 160 |

Anyhelp is really appreciated.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

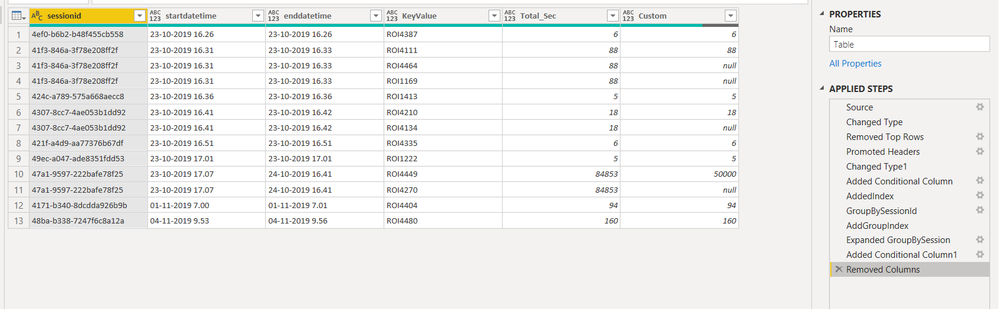

You can achieve the desired results by adding a couple of steps in query editor. Try these:

1. Add Conditional Column to replace total_sec values > 50000 with 50000 else total_sec value.

2. Add an Index Column.

3. Group By SessionId, choose Operation = All Rows. You will get a table for each session id.

4. Transform each row to add another index to all rows in the table (generated in step above). This will generate a groupIndex i.e. new indices per session id.

5. Expand this new column now.

6. Based on this new group index column, if its not equal to 1, replace values with null.

Hope that helps.

Here are the steps from advanced editor.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rZPNilsxDIVfpWQ9CpYsW/IjlC4KbekmhCL/QWA6A83tom9f35t0JjPuMnAX8rk+H/KxfDjsdg833/HhsDu38/n0/HSqQzgv9muptrTl9LONdXuqN6tP7c93e/y9lt+eF3v88bWVDcGtO8gxE2TWziGUHIKObeQBHZDD9AHjnuL/pS+fP7JXGVW84LB7UI4Gvos2cto79cnrcZb8FYe4/lS9F48j35OHGNMNj7iAiSYIEixGtVbKHJ+f4/PX+JBxJYcLzjsBLUWArbngM9aaaPLy3B7T9biEblSo9+Kh5xseYQfjmsBMxEvMUeocX5h5m7RNiw+v05LaSM+xgNWmPmCvNfh3Xtm797irtKZHRK/piSGkkASGmq030U5h9q7jSjwFsA0Lb5fLOtq4E5LEvUWiIGTPDrSW8UYTxZzy2OIQEC/+QXST8tKjW28k8YWm2QbNKwix9FjUkGz18os37bdQ3yr/Xi/rNi/R7Y7Hvw==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Column1 = _t, Column2 = _t, Column3 = _t, Column4 = _t, Column5 = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Column1", type text}, {"Column2", type text}, {"Column3", type text}, {"Column4", type text}, {"Column5", type text}}),

#"Removed Top Rows" = Table.Skip(#"Changed Type",1),

#"Promoted Headers" = Table.PromoteHeaders(#"Removed Top Rows", [PromoteAllScalars=true]),

#"Changed Type1" = Table.TransformColumnTypes(#"Promoted Headers",{{"sessionid", type text}, {"startdatetime", type text}, {"enddatetime", type text}, {"KeyValue", type text}, {"Total_Sec", Int64.Type}}),

#"Added Conditional Column" = Table.AddColumn(#"Changed Type1", "custom_column", each if [Total_Sec] > 50000 then 50000 else [Total_Sec]),

AddedIndex = Table.AddIndexColumn(#"Added Conditional Column", "Index", 1, 1),

GroupBySessionId = Table.Group(AddedIndex, {"sessionid"}, {{"GroupBySession", each _, type table [sessionid=text, startdatetime=text, enddatetime=text, KeyValue=text, Total_Sec=number, custom_column=number, Index=number]}}),

AddGroupIndex = Table.TransformColumns(GroupBySessionId, {{"GroupBySession", each Table.AddIndexColumn(Table.Sort(_,"Index"), "GroupIndex",1), type table}}),

#"Expanded GroupBySession" = Table.ExpandTableColumn(AddGroupIndex, "GroupBySession", {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "custom_column", "Index", "GroupIndex"}, {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "custom_column", "Index", "GroupIndex"}),

#"Added Conditional Column1" = Table.AddColumn(#"Expanded GroupBySession", "Custom", each if [GroupIndex] = 1 then [custom_column] else null),

#"Removed Columns" = Table.RemoveColumns(#"Added Conditional Column1",{"custom_column", "Index", "GroupIndex"})

in

#"Removed Columns"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @chandu080 ,

You also could simplify code like below

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rZM7bgQhEESvYk3sXvUPujmCI0tOVxvwGe5/BDOz4/3hcDNUUE9NUZzPi64docTCUNS7hlBLCL58LixACIyUPiieOP4v/Xx/qbiNVVwunwNHXcA1ZpBuvjJ679wnr9AsyYEj2jbd38XTqO/kEcX0wGOtkM0TBAs5Rs9rrXN8MscnR3yktJHDFSdo4LUaaF4xSKHWEk9encdTPq7LhGNF/i4eiT7wmDpkbQlyNhOLJVqb4wszb5f2tki4tyWtIz1Ug9xWl0C9tSAvXjvhK+6QtvSY+Z6eZYIUksFQS+6reecwe7e6sk4B7GXR/XHVxxhvQrLhM5KMoIgieKut5cSxpDKOIAHR1T+IOCm3GXF7kaRXmpc8aOJgrNZj9UycN6/evOm0h/qs/P1e9b0vEZfL5Rc=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [sessionid = _t, startdatetime = _t, enddatetime = _t, KeyValue = _t, Total_Sec = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"sessionid", type text}, {"startdatetime", type text}, {"enddatetime", type text}, {"KeyValue", type text}, {"Total_Sec", Int64.Type}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 0, 1),

#"Grouped Rows" = Table.Group(#"Added Index", {"sessionid"}, {{"all", each _, type table [sessionid=text, startdatetime=text, enddatetime=text, KeyValue=text, Total_Sec=number, Index=number]}}),

#"Added Custom" = Table.AddColumn(#"Grouped Rows", "Custom", each Table.AddIndexColumn([all], "group id",1,1)),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"all"}),

#"Expanded Custom" = Table.ExpandTableColumn(#"Removed Columns", "Custom", {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "group id"}, {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "group id"}),

Custom1 = Table.ReplaceValue( #"Expanded Custom", each [group id], each if [group id]<>1 then null else if [Total_Sec]>5000 then 5000 else [Total_Sec], Replacer.ReplaceValue, {"group id"})

in

Custom1Best Regards,

Zoe Zhi

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I got the simple solution.

SingleTime = if('DE'[Index]=CALCULATE(min('DE'[Index]), ALLEXCEPT('DE', 'DE'[sessionid])), min(500000,IF(ISBLANK('DE'[Total_Work_Sec]),'DE'[Total_Sec],'DE'[Total_Work_Sec])), BLANK())

It worked. grouping tables will not work for dynamic data.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I got the simple solution.

SingleTime = if('DE'[Index]=CALCULATE(min('DE'[Index]), ALLEXCEPT('DE', 'DE'[sessionid])), min(500000,IF(ISBLANK('DE'[Total_Work_Sec]),'DE'[Total_Sec],'DE'[Total_Work_Sec])), BLANK())

It worked. grouping tables will not work for dynamic data.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @chandu080 ,

You also could simplify code like below

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rZM7bgQhEESvYk3sXvUPujmCI0tOVxvwGe5/BDOz4/3hcDNUUE9NUZzPi64docTCUNS7hlBLCL58LixACIyUPiieOP4v/Xx/qbiNVVwunwNHXcA1ZpBuvjJ679wnr9AsyYEj2jbd38XTqO/kEcX0wGOtkM0TBAs5Rs9rrXN8MscnR3yktJHDFSdo4LUaaF4xSKHWEk9encdTPq7LhGNF/i4eiT7wmDpkbQlyNhOLJVqb4wszb5f2tki4tyWtIz1Ug9xWl0C9tSAvXjvhK+6QtvSY+Z6eZYIUksFQS+6reecwe7e6sk4B7GXR/XHVxxhvQrLhM5KMoIgieKut5cSxpDKOIAHR1T+IOCm3GXF7kaRXmpc8aOJgrNZj9UycN6/evOm0h/qs/P1e9b0vEZfL5Rc=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [sessionid = _t, startdatetime = _t, enddatetime = _t, KeyValue = _t, Total_Sec = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"sessionid", type text}, {"startdatetime", type text}, {"enddatetime", type text}, {"KeyValue", type text}, {"Total_Sec", Int64.Type}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 0, 1),

#"Grouped Rows" = Table.Group(#"Added Index", {"sessionid"}, {{"all", each _, type table [sessionid=text, startdatetime=text, enddatetime=text, KeyValue=text, Total_Sec=number, Index=number]}}),

#"Added Custom" = Table.AddColumn(#"Grouped Rows", "Custom", each Table.AddIndexColumn([all], "group id",1,1)),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"all"}),

#"Expanded Custom" = Table.ExpandTableColumn(#"Removed Columns", "Custom", {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "group id"}, {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "group id"}),

Custom1 = Table.ReplaceValue( #"Expanded Custom", each [group id], each if [group id]<>1 then null else if [Total_Sec]>5000 then 5000 else [Total_Sec], Replacer.ReplaceValue, {"group id"})

in

Custom1Best Regards,

Zoe Zhi

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i was able to do the row wise condiction trick in excel (=IF(P2="","",IF(COUNTIF(P1,P2)=1,"",P2)), But i need coustom colume value based on unique sessionid.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You can achieve the desired results by adding a couple of steps in query editor. Try these:

1. Add Conditional Column to replace total_sec values > 50000 with 50000 else total_sec value.

2. Add an Index Column.

3. Group By SessionId, choose Operation = All Rows. You will get a table for each session id.

4. Transform each row to add another index to all rows in the table (generated in step above). This will generate a groupIndex i.e. new indices per session id.

5. Expand this new column now.

6. Based on this new group index column, if its not equal to 1, replace values with null.

Hope that helps.

Here are the steps from advanced editor.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rZPNilsxDIVfpWQ9CpYsW/IjlC4KbekmhCL/QWA6A83tom9f35t0JjPuMnAX8rk+H/KxfDjsdg833/HhsDu38/n0/HSqQzgv9muptrTl9LONdXuqN6tP7c93e/y9lt+eF3v88bWVDcGtO8gxE2TWziGUHIKObeQBHZDD9AHjnuL/pS+fP7JXGVW84LB7UI4Gvos2cto79cnrcZb8FYe4/lS9F48j35OHGNMNj7iAiSYIEixGtVbKHJ+f4/PX+JBxJYcLzjsBLUWArbngM9aaaPLy3B7T9biEblSo9+Kh5xseYQfjmsBMxEvMUeocX5h5m7RNiw+v05LaSM+xgNWmPmCvNfh3Xtm797irtKZHRK/piSGkkASGmq030U5h9q7jSjwFsA0Lb5fLOtq4E5LEvUWiIGTPDrSW8UYTxZzy2OIQEC/+QXST8tKjW28k8YWm2QbNKwix9FjUkGz18os37bdQ3yr/Xi/rNi/R7Y7Hvw==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Column1 = _t, Column2 = _t, Column3 = _t, Column4 = _t, Column5 = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Column1", type text}, {"Column2", type text}, {"Column3", type text}, {"Column4", type text}, {"Column5", type text}}),

#"Removed Top Rows" = Table.Skip(#"Changed Type",1),

#"Promoted Headers" = Table.PromoteHeaders(#"Removed Top Rows", [PromoteAllScalars=true]),

#"Changed Type1" = Table.TransformColumnTypes(#"Promoted Headers",{{"sessionid", type text}, {"startdatetime", type text}, {"enddatetime", type text}, {"KeyValue", type text}, {"Total_Sec", Int64.Type}}),

#"Added Conditional Column" = Table.AddColumn(#"Changed Type1", "custom_column", each if [Total_Sec] > 50000 then 50000 else [Total_Sec]),

AddedIndex = Table.AddIndexColumn(#"Added Conditional Column", "Index", 1, 1),

GroupBySessionId = Table.Group(AddedIndex, {"sessionid"}, {{"GroupBySession", each _, type table [sessionid=text, startdatetime=text, enddatetime=text, KeyValue=text, Total_Sec=number, custom_column=number, Index=number]}}),

AddGroupIndex = Table.TransformColumns(GroupBySessionId, {{"GroupBySession", each Table.AddIndexColumn(Table.Sort(_,"Index"), "GroupIndex",1), type table}}),

#"Expanded GroupBySession" = Table.ExpandTableColumn(AddGroupIndex, "GroupBySession", {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "custom_column", "Index", "GroupIndex"}, {"startdatetime", "enddatetime", "KeyValue", "Total_Sec", "custom_column", "Index", "GroupIndex"}),

#"Added Conditional Column1" = Table.AddColumn(#"Expanded GroupBySession", "Custom", each if [GroupIndex] = 1 then [custom_column] else null),

#"Removed Columns" = Table.RemoveColumns(#"Added Conditional Column1",{"custom_column", "Index", "GroupIndex"})

in

#"Removed Columns"

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.