- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Loop / recursive / DAX / PowerQuery / Same Table l...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Loop / recursive / DAX / PowerQuery / Same Table loop

Hi, I am new to Power BI and I need help with creating a loop using DAX or Power Query.

Problem Statement:

I have only 1 table (which shows unidirectional links between workitems) with 4 columns.

| SourceWorkItemId | ParentWorkItemId | SourceWorkItemType | ParentWorkItemType | CapID (OUTPUT) |

| 11 | 22 | Story1 | Feature1 | 66 |

| 22 | 33 | Feature1 | Epic1 | 66 |

| 44 | 55 | Theme1 | MBI1 | 0 |

| 33 | 44 | Epic1 | Theme1 | 0 |

| 55 | 66 | MBI1 | Cap1 | 0 |

| 111 | 222 | Story2 | Cap1 | 222 |

| 1111 | 2222 | Story3 | Cap2 | 2222 |

| 1 | 66 | Story4 | Cap1 | 66 |

| 77 | 2222 | Epic2 | Cap2 | 0 |

The WorkItemType hierarchy is not fixed. It can be Story -> Feature -> Cap or it can be Story -> Cap or anything else.

The maximum depth (in this order) is: Story -> Feature -> Epic -> Theme -> MBI -> Cap.

I need to show CapID against Stories and Features that are directly or indirectly linked to that Cap by adding 1 new column in the existing table.

I tried to do this using a python script which works fine in python but it times-out in Power BI and gives 1800000ms error, probably because of the table size.

Code Example with comments:

Cap = [] // created a blank list

for indices,row in df.iterrows(): // started a for loop

if row['SourceWorkItemType'] in ['Story','Feature']: // if SourceType is Story/Feature

if row['ParentWorkItemType'] == 'Cap': // if current ParentType is Cap

Cap.append(row['ParentWorkItemId']) // append current CapID to list Cap

else: // if ParentType is not Cap

for indices,row_1 in df.iterrows(): // nested for loop

if row['ParentWorkItemId'] == row_1['SourceWorkItemId']: // if ParentID is found in another row's SourceID

if row_1['ParentWorkItemType'] != 'Cap': // if ParentType is not Cap

row['ParentWorkItemId'] = row_1['ParentWorkItemId'] // then search new ParentID in another row's sourceID

else: // else if current Parent is Cap

Cap.append(row_1['ParentWorkItemId']) // add the current ParentID to Cap list

else: // if SourceType is not Story/Feature or if CapID is not found

Cap.append(0) // append 0 to the Cap list

print(Cap) // add Cap list as the 5th column

Request you to help me with this. Thanks in advance! 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @vyask

let

Source = Table.FromRecords(Json.Document(Binary.Decompress(Binary.FromText("ndJNC4JAEAbg/zJnL7t+gceiwIMQKHSIDmIDRZiyrAeJ/nutSYTuu6DX/ZjnnWFOT8qbTlV8bNQ91VynF0qE8OhQKn7o/1Mpvcnbom+ZEsp1o3pB0z/j7Z5L3SkW9PJslqk6t3wfWL9qQNu1twpRQWCjwhBQxZVrDGWbFDkm/NwxutX5RgbMGMIOmehzKIoANEQGzrZskSLQOjj3Qa6TEOW0fIclkbVodAMTrGgpjhc2ZLbBNbtPP+c3",BinaryEncoding.Base64),Compression.Deflate))),

chtype = Table.TransformColumnTypes(Source,{{"SourceWorkItemId", type text}, {"ParentWorkItemId", type text}}),

rec = Record.FromList(List.Zip({chtype[ParentWorkItemId],chtype[SourceWorkItemType]}),chtype[SourceWorkItemId]),

fx =(rec, fd, k)=>

let val = Record.Field(rec, fd),

out = if not List.Contains({"Story", "Feature"}, k, (x,y)=>Text.StartsWith(y,x)) then 0

else let sw = Record.HasFields(rec,val{0})

in if sw then @fx(rec, val{0}, k) else val{0}

in out,

tbl = Table.AddColumn(chtype,"CapID", each fx(rec, [SourceWorkItemId], [SourceWorkItemType]))

in

tblTry this recursive code of mine. I learned it from the Chinese bigwig

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @mahoneypat @ziying35 @Anonymous , thanks a lot for your replies...

All these queries work when the data size is small.

However, when I run these on the actual data, Power BI keeps on evaluating for hours.

Is there a way using DAX please, as it seems faster to me?

@mahoneypat , there are zeros because, I only need to show the CapIDs (which your query is determining correctly) against SourceWorkItemType Stories and Features.

@ziying35 , just a note that your function stops at the last parent, but I also need to put a check that the last parent is Cap, otherwise it should give zero or null.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

what is the size of your real table?

how many columns and rows there are?

I just adaptde an old script, but may be a specific one for your date could be more performant

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous : It has the same 4 columns with around 4,50,000 rows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@vyask If the data exceeds 400,000 lines, my code may not have the processing power. Can you remove sensitive information from your real form and upload it to onedrive?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

here the

let

Source = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRU0lEyMgISwSX5RZUgnltqYklpUSqIaWamFKsTDZE3NkaVcy3ITEZSY2ICZJuaAomQjNRcsApfJ08QZQCWB2sHK4JphKuDKADrBRoG1+ecWICQNYQ6FO5SI4QKkChUDZQLV2UMUWUEEwYrg9kDVmGCMAfqE3NzhCEgtxohzAC6JRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [sid = _t, pid = _t, sit = _t, pit = _t, #"CapID (OUTPUT)" = _t]),

#"Changed Type" = Table.TransformColumnTypes(

Source,

{

{"sid", Int64.Type},

{"pid", Int64.Type},

{"sit", type text},

{"pit", type text},

{"CapID (OUTPUT)", Int64.Type}

}

),

#"Inserted Merged Column" = Table.AddColumn(

#"Changed Type",

"Merged.1",

each Text.Combine({Text.From([sid], "it-IT"), [sit]}, ":"),

type text

),

#"Inserted Merged Column1" = Table.AddColumn(

#"Inserted Merged Column",

"Merged",

each Text.Combine({Text.From([pid], "it-IT"), [pit]}, ":"),

type text

)

in

Table.RenameColumns(#"Inserted Merged Column1", {{"Merged", "col2"}, {"Merged.1", "col1"}}),

#"Removed Other Columns" = Table.SelectColumns(Source,{"col1", "col2"}),

#"Added Index" = Table.AddIndexColumn(#"Removed Other Columns", "Index", 0, 1),

cols=Table.ToColumns(#"Added Index"),

#"Added Custom" = Table.AddColumn(#"Added Index", "linked", each List.Last(List.RemoveNulls(let cr=List.Skip(cols{0},_[Index]) in if List.Contains({"Story", "Feature"}, _[col1], (x,y)=>Text.Contains(y,x)) then List.Accumulate({0..5},{_[col1]},(s,c)=>s&{try cols{1}{let pos=List.PositionOf(cr,s{c})+_[Index] in if pos <_[Index] then -1 else pos}otherwise null}) else {"0"})))

in

#"Added Custom"

the version that uses an index column that confines the search to the rest of the table, as you proceed.

This obviously works if the linked groups are not intertwined, in the sense that the components are in the following order

Story -> Feature -> Epic -> Theme -> MBI -> Cap.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am a decent M-er, but not as good as @Anonymous , so I switched to the DAX route. Here is an approach that gets your desired results.

1. Make a Calculated DAX Table that has all Parent Values in the in the Source columns, so we can use the PATH function. Your original table is called Caps.

CapsForPath =

VAR currenttable =

SELECTCOLUMNS (

Caps,

"Child", Caps[SourceWorkItemId],

"Parent", Caps[ParentWorkItemId]

)

VAR nochild =

ADDCOLUMNS (

EXCEPT ( VALUES ( Caps[ParentWorkItemId] ), VALUES ( Caps[SourceWorkItemId] ) ),

"Parent", Caps[ParentWorkItemId]

)

VAR unioned =

DISTINCT (

UNION (

currenttable,

SELECTCOLUMNS ( nochild, "Child", Caps[ParentWorkItemId], "Parent", [Parent] )

)

)

RETURN

unioned

2. Add these two Calculated Columns to that new table

If this works for you, please mark it as the solution. Kudos are appreciated too. Please let me know if not.

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this solution is based on the assumption that the linked groups are all contiguous.

Therefore the search is confined in a window of 6 elements, instead of on the whole table. This reduces the time by approximately (number of lines) / 6 times. For example, for a 600k row table, the time is about 1/10000 shorter.

I did the test on a randomly generated 1M row table, getting the answer within 10 '.

sorgente

let

zoo=(items)=>

let

itemsL=List.RemoveLastN(items,1),

itemsR=List.RemoveFirstN(items,1)

in Table.FromColumns({itemsL,itemsR}),

items={"S","F","E","T","M","C"} ,

seq=List.Transform({1..300000}, (r)=> List.Transform(List.Sort(List.Distinct( List.Transform(List.Random(7,r), each Number.RoundDown(_*6)))), each items{_}&Text.From(r))),

seq1= Table.Combine(List.Transform(seq, each zoo(_)))

in

seq1

let

Source = Table.RenameColumns(sorgente, {{"Column1", "col1"}, {"Column2", "col2"}}),

#"Added Index" = Table.AddIndexColumn(Source, "Idx", 1, 1),

cols=Table.ToColumns(Source),

cols0=List.Buffer(cols{0}),

cols1=List.Buffer(cols{1}),

#"Added Custom" = Table.AddColumn(#"Added Index", "linked", each if List.Contains({"S", "F"}, _[col1], (x,y)=>Text.Contains(y,x)) then let wind={cols0{_[Idx]},cols0{_[Idx]+1},cols0{_[Idx]+2},cols0{_[Idx]+3},cols0{_[Idx]+4},cols0{_[Idx]+5}} in List.Last(List.RemoveNulls(List.Accumulate({0..5},{_[col1]},(s,c)=>s&{try cols1{List.PositionOf(wind,s{c})}otherwise null}))) else "0")

in

#"Added Custom"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

here a code which follow the same logic of my penultimate message.

I used the "trick" of the list.buffer function to store the two columns on which repeated searches are performed.

This seems to have an excellent effect on execution times.

A few minutes for a table of about 500k rows

############Edited############

Used list.buffer on indexed version, that seems faster.

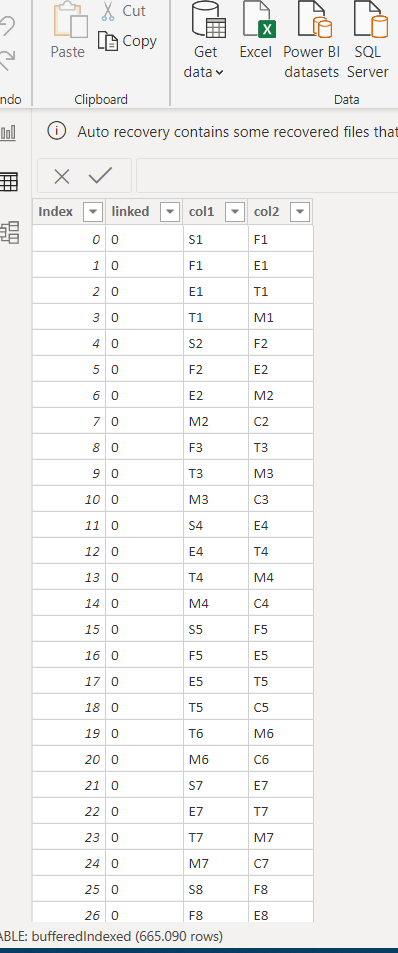

Follow a randomly generated table; a funcion that is udes to generate that table and the code to perform the required tarnsformations on the table rows (665k).

sorgente

let

items={"S","F","E","T","M","C"} ,

seq=List.Transform({1..200000}, (r)=> List.Transform(List.Sort(List.Distinct( List.Transform(List.Random(7,r), each Number.RoundDown(_*6)))), each items{_}&Text.From(r))),

seq1= Table.Combine(List.Transform(seq, each Table.Transpose(Table.FromColumns(zoo(_)))))

in

seq1

zoo

let

zipOddEven=(items)=>

let

itemsL=List.RemoveLastN(items,1),

itemsR=List.RemoveFirstN(items,1)

in List.Zip({itemsL,itemsR})

in

zipOddEven

let

Source= Table.RenameColumns(sorgente, {{"Column1", "col1"}, {"Column2", "col2"}}),

#"Removed Other Columns" = Table.SelectColumns(Source,{"col1", "col2"}),

#"Added Index" = Table.AddIndexColumn(#"Removed Other Columns", "Index", 0, 1),

cols=Table.ToColumns(#"Added Index"),

cols0=List.Buffer(cols{0}),

cols1=List.Buffer(cols{1}),

#"Added Custom" = Table.AddColumn(#"Added Index", "linked", each List.Last(List.RemoveNulls(let cr=List.Buffer(List.Skip(cols0,_[Index])) in if List.Contains({"Story", "Feature"}, _[col1], (x,y)=>Text.Contains(y,x)) then List.Accumulate({0..5},{_[col1]},(s,c)=>s&{try cols1{let pos=List.PositionOf(cr,s{c})+_[Index] in if pos <_[Index] then -1 else pos}otherwise null}) else {"0"})))

in

#"Added Custom"

PS

I would like to thank @mahoneypat for your kind words to me, but I would like to specify that I do not use power queries for work and therefore I have almost never tried the scripts that I produce on real tables.

So I have no efficient code experience, moreover my experience with power query is quite recent (due to the lock down covid-19), therefore my indications on processing times could be very approximate.

Unlike @mahoneypat , I can't even try different routes as I know absolutely nothing about DAX and therefore I have to try to do everything with M 😀

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

there are 450000 rows or 4500000 rows?

give a try using this scheme.

It is possible also make some improvements using an auxiliary index column.

make "incremental" attempts, starting from a table 1/10 of the maximum size; then depending on the time test 2/10, 1/2, etc.

let

Source = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRU0lEyMgISwSX5RZUgnltqYklpUSqIaWamFKsTDZE3NkaVcy3ITEZSY2ICZJuaAomQjNRcsApfJ08QZQCWB2sHK4JphKuDKADrBRoG1+ecWICQNYQ6FO5SI4QKkChUDZQLV2UMUWUEEwYrg9kDVmGCMAfqE3NzhCEgtxohzAC6JRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [sid = _t, pid = _t, sit = _t, pit = _t, #"CapID (OUTPUT)" = _t]),

#"Changed Type" = Table.TransformColumnTypes(

Source,

{

{"sid", Int64.Type},

{"pid", Int64.Type},

{"sit", type text},

{"pit", type text},

{"CapID (OUTPUT)", Int64.Type}

}

),

#"Inserted Merged Column" = Table.AddColumn(

#"Changed Type",

"Merged.1",

each Text.Combine({Text.From([sid], "it-IT"), [sit]}, ":"),

type text

),

#"Inserted Merged Column1" = Table.AddColumn(

#"Inserted Merged Column",

"Merged",

each Text.Combine({Text.From([pid], "it-IT"), [pit]}, ":"),

type text

)

in

Table.RenameColumns(#"Inserted Merged Column1", {{"Merged", "col2"}, {"Merged.1", "col1"}}),

#"Removed Other Columns" = Table.SelectColumns(Source,{"col1", "col2"}),

cols=Table.ToColumns(#"Removed Other Columns"),

#"Added Custom" = Table.AddColumn(#"Removed Other Columns", "linked", each if List.Contains({"Story", "Feature"}, _[col1], (x,y)=>Text.Contains(y,x)) then List.Last(List.RemoveNulls(List.Accumulate({0..5},{_[col1]},(s,c)=>s&{try cols{1}{List.PositionOf(cols{0},s{c})}otherwise null}))) else "0")

in

#"Added Custom"

In general, as time increases more than linearly with the size of the table, if it is possible to partition the table into some smaller subtables and run the script on the list of subtables, it will take much less time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is a good application for the PATH funciton in DAX. However, since some of the parent IDs are not also listed in SourceIDs, it will error. Fortunately, there is an M path equivalent that does not error. Below is example M code on how to do it. I don't understand the logic why some of your rows are 0. If you can explain that, I may be able to adapt this to do that. To see how it works, just create a blank query, go to Advanced Editor, and replace the text there with the M code below.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRU0lEyMgISwSX5RZUgnltqYklpUSqIaWamFKsTDZE3NkaVcy3ITEZSY2ICZJuaAomQjNRcsApfJ08QZQCWB2sHK4JphKuDKADrBRoG1+ecWICQNYQ6FO5SI4QKkChUDZQLV2UMUWUEEwYrg9kDVmGCMAfqE3NzhCEgtxohzAC6JRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [SourceWorkItemId = _t, ParentWorkItemId = _t, SourceWorkItemType = _t, ParentWorkItemType = _t, #"CapID (OUTPUT)" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SourceWorkItemId", Int64.Type}, {"ParentWorkItemId", Int64.Type}, {"SourceWorkItemType", type text}, {"ParentWorkItemType", type text}, {"CapID (OUTPUT)", Int64.Type}}),

RemovedColumns = Table.RemoveColumns(#"Changed Type",{"CapID (OUTPUT)"}),

#"Added Custom" = Table.AddColumn(RemovedColumns, "Final Cap", each let //Define your columns here

mytable=RemovedColumns,p="ParentWorkItemId",c="SourceWorkItemId"

in

let mylist={Record.Field(_,c)} & List.Generate(()=>[x=0,y=Record.Field(_,p),w=1],each [w] > 0,each [z=[y], x=Table.Column(Table.SelectRows(mytable,each Record.Field(_,c)=z),p),y=x{0},w=List.Count(x)

],

each [y])

in

Text.Combine(List.Reverse(List.RemoveItems(

List.Transform(mylist,each Text.From(_)),{null,""})),"|")),

#"Changed Type1" = Table.TransformColumnTypes(#"Added Custom",{{"Final Cap", type text}}),

#"Extracted Text Before Delimiter" = Table.TransformColumns(#"Changed Type1", {{"Final Cap", each Text.BeforeDelimiter(_, "|"), type text}})

in

#"Extracted Text Before Delimiter"

If this works for you, please mark it as the solution. Kudos are appreciated too. Please let me know if not.

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Seems the same logic of a previuos case.

you just have to change the names of the two columns of your table in this way

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRU0lEyMgISwSX5RZUgnltqYklpUSqIaWamFKsTDZE3NkaVcy3ITEZSY2ICZJuaAomQjNRcsApfJ08QZQCWB2sHK4JphKuDKADrBRoG1+ecWICQNYQ6FO5SI4QKkChUDZQLV2UMUWUEEwYrg9kDVmGCMAfqE3NzhCEgtxohzAC6JRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [sid = _t, pid = _t, sit = _t, pit = _t, #"CapID (OUTPUT)" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"sid", Int64.Type}, {"pid", Int64.Type}, {"sit", type text}, {"pit", type text}, {"CapID (OUTPUT)", Int64.Type}}),

#"Inserted Merged Column" = Table.AddColumn(#"Changed Type", "Merged", each Text.Combine({Text.From([pid], "it-IT"), [pit]}, ":"), type text),

#"Inserted Merged Column1" = Table.AddColumn(#"Inserted Merged Column", "Merged.1", each Text.Combine({Text.From([sid], "it-IT"), [sit]}, ":"), type text),

#"Renamed Columns" = Table.RenameColumns(#"Inserted Merged Column1",{{"Merged.1", "col1"}, {"Merged", "col2"}}),

#"Removed Other Columns" = Table.SelectColumns(#"Renamed Columns",{"col2", "col1"})

in

#"Removed Other Columns"

then use this code, which returns the complete chain of links

let

Source = Table,

add = (tab, n) =>

let

ac = Table.AddColumn(

tab,

"col" & Text.From(n),

each List.Distinct(

Table.SelectRows(tab, (r) => r[col1] = Record.Field(_, "col" & Text.From(n - 1)))[col2]

)

),

tabn = Table.ExpandListColumn(ac, "col" & Text.From(n))

in

tabn,

last = (tab, coln) => not List.IsEmpty(

List.Intersect({Table.Column(tab, "col" & Text.From(coln - 1)), tab[col1]})

),

steps = List.Generate(

() => [y = Source, n = 3, x = true],

each [x],

each [y = add([y], [n]), n = [n] + 1, x = last([y], [n])],

each [y]

)

in

Table.SelectRows(List.Last(steps), each not List.Contains(Source[col2], [col1]))

antother way is to adapt the input atble in this way.

#########edited###############

let

Source = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRU0lEyMgISwSX5RZUgnltqYklpUSqIaWamFKsTDZE3NkaVcy3ITEZSY2ICZJuaAomQjNRcsApfJ08QZQCWB2sHK4JphKuDKADrBRoG1+ecWICQNYQ6FO5SI4QKkChUDZQLV2UMUWUEEwYrg9kDVmGCMAfqE3NzhCEgtxohzAC6JRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [sid = _t, pid = _t, sit = _t, pit = _t, #"CapID (OUTPUT)" = _t]),

#"Changed Type" = Table.TransformColumnTypes(

Source,

{

{"sid", Int64.Type},

{"pid", Int64.Type},

{"sit", type text},

{"pit", type text},

{"CapID (OUTPUT)", Int64.Type}

}

),

#"Inserted Merged Column" = Table.AddColumn(

#"Changed Type",

"Merged.1",

each Text.Combine({Text.From([sid], "it-IT"), [sit]}, ":"),

type text

),

#"Inserted Merged Column1" = Table.AddColumn(

#"Inserted Merged Column",

"Merged",

each Text.Combine({Text.From([pid], "it-IT"), [pit]}, ":"),

type text

)

in

Table.RenameColumns(#"Inserted Merged Column1", {{"Merged", "col2"}, {"Merged.1", "col1"}}),

add = (tab, n) =>

let

ac = Table.AddColumn(

tab,

"col" & Text.From(n),

each List.Distinct(

Table.SelectRows(tab, (r) => r[col1] = Record.Field(_, "col" & Text.From(n - 1)))[col2]

)

),

tabn = Table.ExpandListColumn(ac, "col" & Text.From(n))

in

tabn,

last = (tab, coln) => not List.IsEmpty(

List.Intersect({Table.Column(tab, "col" & Text.From(coln - 1)), tab[col1]})

),

steps = List.Generate(

() => [y = Source, n = 3, x = true],

each [x],

each [y = add([y], [n]), n = [n] + 1, x = last([y], [n])],

each [y]

),

#"Inserted Merged Column" = Table.AddColumn(

List.Last(steps),

"Merged",

each

if List.ContainsAny({[col1]}, {"Story", "Feature"}, Text.Contains)

then Text.Combine({[col1], [col2], [col3], [col4], [col5], [col6]}, ",")

else [col1] & ",0",

type text

),

#"Added Custom" = Table.AddColumn(

#"Inserted Merged Column",

"cap",

each Text.AfterDelimiter([Merged], ",", {0, RelativePosition.FromEnd})

)

in

#"Added Custom"

in any case, whatever your logic (probably different from this in some respects), you can use the list.generate function schema to simulate what you do with Python

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.