- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: How to hide/remove duplicates based on conditi...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to hide/remove duplicates based on condition

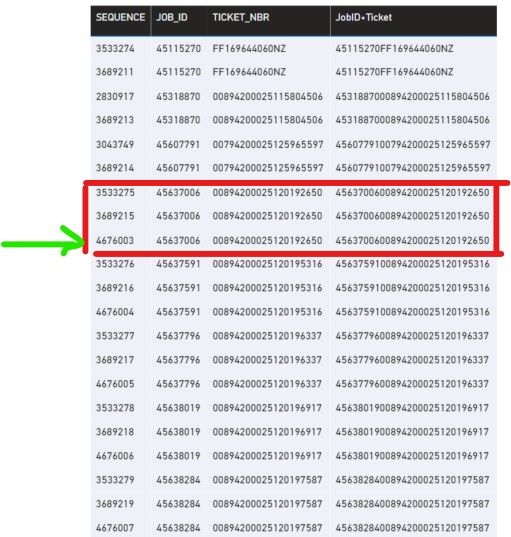

Hi, how do I hide/remove duplicate rows based on the following condition:

- if [JobID+Ticket] column contains duplicate, keep row with the highest [Sequence] number and remove the other duplicate rows. Screenshot example below - keep Sequence "4676003" and delete the lower sequences above it

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm guessing that it eliminated duplicates for each file individually but there were duplicates across files. I guess you do have to de-duplicate after combining the files then.

In this case, you use the same code (with any step references adjusted, e.g. #"Merge Columns" --> #"Inserted Merged Column") but put it at the end of the FreightForward v2 query rather than the Transform Files (2) function.

If you're going to use a database eventually, you might want to try approach #4 from my blog post.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As per my understanding the funcion "remove duplicates" works from top to bottom, so all you need to do is:

- to append the new data to the old one (you will have now duplicates in your key column

- sort them by the column you desire (for example if you need the most recent, sort it descending by update date, or If you need the highest sequence number, sort them in descending number). The important is that the record you want to keep is on a higher row than the one you want to remove

- now right click on the key column and remove duplicates

Sometimes the easiest solution is the best 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I recently wrote a blog post on this:

Select Distinct Rows Ordered by Another Column -- Power Query Edition

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AlexisOlson, thanks for sharing your post. Its a beast! Which method would you recommend for my case? My datasource is importing spreadsheets from sharepoint with each file containing an average of ~300k rows, current table rows at 3M+ rows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

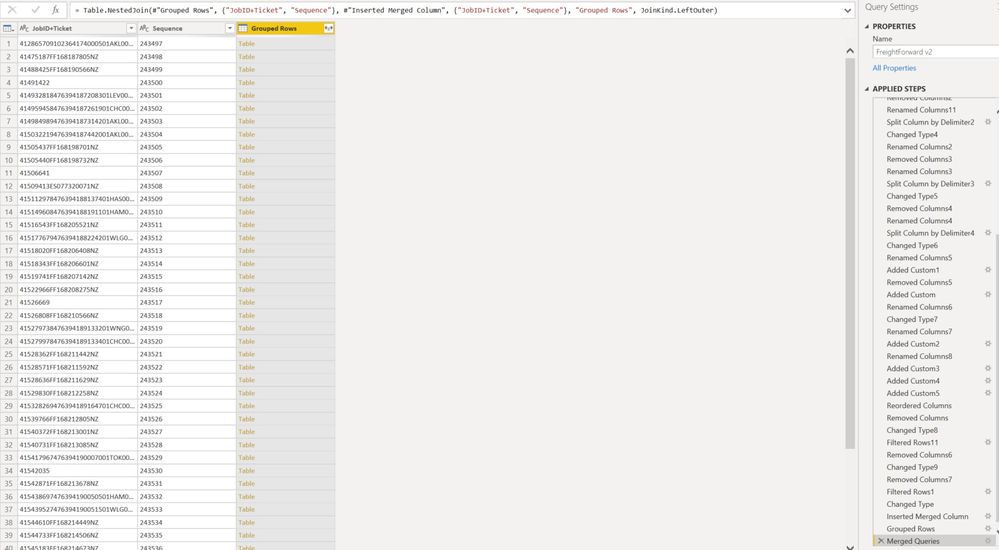

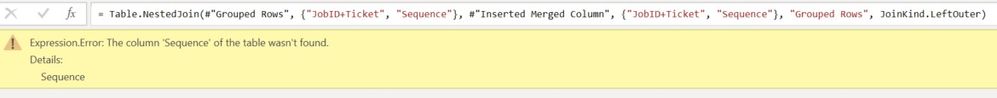

I tried option 4 from your blog post but I'm getting an error "after merging with the step before the grouping". @BA_Pete , @mahoneypat any suggestions?

Appreciate any help please.

Link to file if you need

https://drive.google.com/drive/folders/1dmmbhdbzySZBr8oTxj9CWsmztwFQ3f8A?usp=sharing

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't think query folding is relevant here, so I'd probably go with approach #2 or #3.

I tested #3 (the Table.Max(_, "Col") version) on a subset of your data loaded into an Excel file and it seems to work OK.

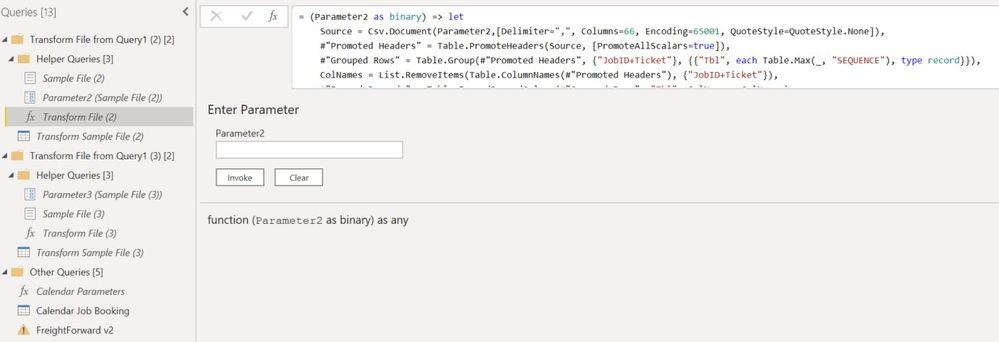

You could also try including the logic in your Transform File function like this (not tested):

let

Source = (Parameter2 as binary) => let

Source = Csv.Document(Parameter2,[Delimiter=",", Columns=66, Encoding=65001, QuoteStyle=QuoteStyle.None]),

#"Promoted Headers" = Table.PromoteHeaders(Source, [PromoteAllScalars=true]),

#"Grouped Rows" = Table.Group(#"Promoted Headers", {"JobID+Ticket"}, {{"Tbl", each Table.Max(_, "SEQUENCE"), type record}}),

ColNames = List.RemoveItems(Table.ColumnNames(#"Promoted Headers"), {"JobID+Ticket"}),

#"Expand Records" = Table.ExpandRecordColumn(#"Grouped Rows", "Tbl", ColNames, ColNames)

in

#"Expand Records"

in

Source

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlexisOlson , I inserted your logic in the 'Transform File' function with no issue, but then when I clicked on the actual table, the following error appears...

Fyi the [JobID+Ticket] column is a manual merge I created in the past.

Details: JobID+Ticket"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

OK. Then you can either do the manual merge in the transform before referencing it or else do all of the logic in the big query rather than the function component.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlexisOlson , I tried option 3 by doing all of the logic in the big query but its still processing the first step "Group by" for over 30min now and still going...

Can you please provide screenshots of the code and the exact steps using the function component method so we can save time?

I tried manually adding merge step in function component but kept getting same error i.e. "[JobID+Ticket] column not found"...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You've got a big dataset from a non-database source. I wouldn't expect to be very fast regardless of how good the approach is since you have to do it all locally in memory rather than being able to push it back to a server that's good at that kind of thing. Hopefully, it at least gives you progress details when loading.

If you're getting [JobID+Ticket] not found, that means you didn't create that column before referencing it or are referencing the wrong step. I think you could insert that merge into the Transform File (2) function like this:

let

Source = (Parameter2 as binary) => let

Source = Csv.Document(Parameter2,[Delimiter=",", Columns=66, Encoding=65001, QuoteStyle=QuoteStyle.None]),

#"Promoted Headers" = Table.PromoteHeaders(Source, [PromoteAllScalars=true]),

#"Merge Columns" = Table.AddColumn(#"Promoted Headers", "JobID+Ticket", each Text.Combine({[JOB_ID], [TICKET_NBR]}, ""), type text),

#"Grouped Rows" = Table.Group(#"Merge Columns", {"JobID+Ticket"}, {{"Tbl", each Table.Max(_, "SEQUENCE"), type record}}),

ColNames = List.RemoveItems(Table.ColumnNames(#"Merge Columns"), {"JobID+Ticket"}),

#"Expand Records" = Table.ExpandRecordColumn(#"Grouped Rows", "Tbl", ColNames, ColNames)

in

#"Expand Records"

in

SourceI can't easily test this on my end since I don't have valid credentials to connect to your SharePoint site so I don't get any previews in the query editor of your file.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlexisOlson , that makes sense and thanks for clarifying. The datasource will be transferred to a database in the near future.

Ok so I tried your latest code and it worked without issue however, when doing a test on the front end, the duplicates were still present. Did I miss something in the process?

Fyi I've added a new folder in the Google drive folder link I shared with you that contains a couple of spreadsheets of the raw data if you wanted to replicate it from your end

Sorry for taking up your time, appreciate your patience.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm guessing that it eliminated duplicates for each file individually but there were duplicates across files. I guess you do have to de-duplicate after combining the files then.

In this case, you use the same code (with any step references adjusted, e.g. #"Merge Columns" --> #"Inserted Merged Column") but put it at the end of the FreightForward v2 query rather than the Transform Files (2) function.

If you're going to use a database eventually, you might want to try approach #4 from my blog post.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok might try your suggestion in the interim as I've just been informed by colleague that they will proceed with migrating the datasource to a database in the coming days which will mitigate the duplicate issues from my end. Thanks again for your help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

It looks like @AlexisOlson is already helping you with this. You're in excellent hands. 👍

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.