- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Data overflow error when the "List.Accumulate" fun...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Data overflow error when the "List.Accumulate" function was used to process data over 2000 rows

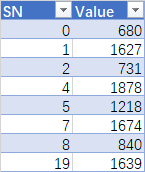

My Source Table:

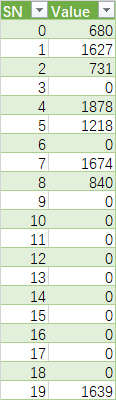

Desired Table:

Based on the Source Table, insert the missing "SN" with a value of 0, My M code as below:

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

rows = List.Buffer(Table.ToRows(Source)),

acc = List.Accumulate(

rows,

{"", {}},

(s,c)=>if s{0}="" then {c{0}, {c}}

else if s{0}+1=c{0} then {c{0},s{1}&{c}}

else {c{0}, s{1}&List.Transform({s{0}+1..c{0}-1}, each {_,0})&{c}}

),

result = Table.FromRows(acc{1}, Table.ColumnNames(Source))

in

result

When my data volume is less than 2000 lines, I use the List.Accumulate function to handle, the code is running properly, the data volume is more than 2000 lines, there will be a stack overflow error, Using just the List.Accumulate solution, is there a way to get code to work properly with more than 2,000 lines of data.

Thank in advance

the link to my excel file: Insert Missing SN

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Curt Hagenlocher's argument seems interesting to me.

<<...

But broadly speaking, M is a lazy language and some of the things you compute actually result in promises instead of immediate values. If the combination function passed to List.Accumulate results in a promise, then the result of accumulating over your list is a promise on top of a promise on top of... etc. At some point, when the actual value is calculated, it's done recursively -- the chain of promises is too long and the stack flows over.

>>

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @ziying35

try this approach. Should be faster

let

Source = #table

(

{"SN","Value"},

{

{"0","1"}, {"1","2"}, {"2","3"}, {"3","4"}, {"5","5"}, {"10","6"}, {"18","7"}, {"20","8"}, {"25","9"}, {"10000","10"}

}

),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SN", Int64.Type}}),

GetListSN = List.Numbers(List.Min(#"Changed Type"[SN]), List.Max(#"Changed Type"[SN])-List.Min(#"Changed Type"[SN])),

#"Converted to Table" = Table.FromList(GetListSN, Splitter.SplitByNothing(),1, null, ExtraValues.Error),

#"Changed Type1" = Table.TransformColumnTypes(#"Converted to Table",{{"Column1", Int64.Type}}),

#"Renamed Columns" = Table.RenameColumns(#"Changed Type1",{{"Column1", "SN"}}),

Join = Table.NestedJoin(#"Renamed Columns","SN",#"Changed Type","SN","Real SN"),

#"Expanded Real SN" = Table.ExpandTableColumn(Join, "Real SN", {"Value"}, {"Value"}),

#"Replaced Value" = Table.ReplaceValue(#"Expanded Real SN",null,"0",Replacer.ReplaceValue,{"Value"})

in

#"Replaced Value"

Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @Jimmy801

Thank you for your solution, but my problem is not this, the problem of inserting missing data, I can write several solutions myself. But my problem today is mainly to figure out how to solve the List.Accumulate function when there is a stack overflow of data.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

it is not clear to me where and why the problem of overflow with the list.accumulate function is needed (apart from the one in which an infinite loop is created).

But for the original problem, a faster solution than that using list.accumulate might be to turn the initial table into a record ( i called sorgente) where the SN column are the fields and the Value column are the values.

after with the following expression get the final table

S = Table.FromRows(List.Transform(List.Transform({1..200000},each Text.From(_)),each {_,Record.FieldOrDefault(sorgente,_,"0")}),{"SN","Value"})

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

it seems the problem you ran into is already known

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

I think it is the problem at the bottom of the program. When the amount of data is less than 2000, the speed is still very fast. When the amount of data is more than 2000 rows, the speed will slow down and even make mistakes, I read the post linked above, but Microsoft hasn't fixed the problem. Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I made a little experiment with those two basic expressions:

sum = List.Accumulate(Sorgn,0,(s,c)=> s+c),

combine = List.Accumulate(Sorgn,{},(s,c)=> List.Combine({s,{c}}))

the first one it can even calculate millions of terms, instead of the second overflowing after about 64000/64500 elements

this

List.Accumulate(Sorgn,[a={0}],(s,c)=> s&[a=s[a]&{c}])and this

List.Accumulate(Sorgn,[a=0],(s,c)=> s&[a=s[a]+c])goes in overflow just after 7000 elements.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Iterating over a record with the List.Accumulate function usually doesn't go wrong, but it does when it comes to combining lists or tables etc.

In our PQ group, we usually only dare to use it to deal with some data under 2000 lines

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

with a Sorgn={1..1000000}, for example

comrec = List.Accumulate(Sorgn,[a=0],(s,c)=> s&[a=c]),

comrec=List.Accumulate(Sorgn,[acc=0,cur=0],(s,c)=> s&[acc=[acc]+c,cur=c]),

comrec=List.Accumulate(Sorgn,[acc=0,cur=0],(s,c)=> s&[acc=s[acc]+c,cur=c])

the first expression gives the result immediately;

the second quickly gives as a result a record with the field acc = error(*) and the field cur = 100000;

the third expression overflows

(*) the correct form is the third expression.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why is everyone making this so complicated:

Will this work for you

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

SNRange = {0 .. List.Max(Source[SN])},

AsTable = Table.FromColumns({SNRange}, {"SN"}),

Join = Table.NestedJoin(AsTable, "SN", Source, "SN", "Value", JoinKind.LeftOuter),

#"Expanded Value" = Table.ExpandTableColumn(Join, "Value", {"Value"}, {"Value"}),

#"Replaced Value" = Table.ReplaceValue(#"Expanded Value",null,0,Replacer.ReplaceValue,{"Value"}),

#"Sorted Rows" = Table.Sort(#"Replaced Value",{{"SN", Order.Ascending}})

in

#"Sorted Rows"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've written code solutions like that before, but my problem isn't that

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I couldn't find a solution, so I decided to close the topic

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Curt Hagenlocher's argument seems interesting to me.

<<...

But broadly speaking, M is a lazy language and some of the things you compute actually result in promises instead of immediate values. If the combination function passed to List.Accumulate results in a promise, then the result of accumulating over your list is a promise on top of a promise on top of... etc. At some point, when the actual value is calculated, it's done recursively -- the chain of promises is too long and the stack flows over.

>>

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, what problem are you trying to solve then? You can iterate though a list and refer to previous values without using recursion. Just use List.Generate instead

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just want to know that the amount of data being processed is not that large, and that it's easy to get stack overflows by using the List.Accumulate function to handle them.

I can also write a code solution using the List.Genarate function, which can handle this kind of data in a second or two

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't think the problem is in the size of the input table.

You should check if you have some null value in the SN column.

Seems to me that you initial if construct a sort of recursive loop that never ends.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

This is just one of my cases, and there are many others that have this problem. I see that you often use List.Accumulate function to help others provide solutions, you can try to increase the data volume of those cases, I am sure that the same problem will occur

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi @ziying35 ,

In fact, I have never experienced such a situation and I cannot absolutely exclude for all the solutions I have proposed using constructs that create loops something like this may happen.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

in your you could try this

S = Table.Buffer(Table.RemoveMatchingRows(Source, {[SN=null]},"SN")),

acc = List.Accumulate(

{1..Table.RowCount(S)-1},

{Record.FieldValues(S{0})},

(s,c)=>if S{c}[SN]=S{c-1}[SN]+1 then s&{Record.FieldValues(S{c})}

else s&List.Transform({S{c-1}[SN]+1..S{c}[SN]-1}, each {_,0})&{Record.FieldValues(S{c})}

),

result=Table.FromRows(acc,{"SN","Value"})

in

result

or this

S = Table.Buffer(Table.RemoveMatchingRows(Source, {[SN=null]},"SN")),

acc = List.Accumulate(

{1..Table.RowCount(S)-1},

{S{0}},

(s,c)=>if S{c}[SN]=S{c-1}[SN]+1 then s&{S{c}}

else s&List.Transform({S{c-1}[SN]+1..S{c}[SN]-1}, each [SN=_,Value=0])&{S{c}}

),

result=Table.FromRecords(acc)

in

result

but, I'm sure, you don't need any help on this aspect.

I proposed a solution only to partecipate at the discussion.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

The file I uploaded to cloud disk is some data I simulated. The data volume is more than 190,000 lines, with no null value, I asked a few friends to use a higher configuration of the computer in my file to test your code, although it will not make mistakes, but there is no way to load out the data

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.