- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Custom Function to filter a table (1 Query) mu...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Custom Function to filter a table (1 Query) multiple times

Hi all,

I hope everyone is doing well. I have a quick favour to ask to the community members.

I have a table (Lets call it table A) (something like below)

| ID | Date |

| 1 | 5/07/2020 |

| 2 | 3/09/2020 |

| 3 | 2/11/2020 |

| 4 | 1/01/2021 |

| 5 | 1/04/2021 |

| 6 | 30/06/2021 |

| 7 | 28/09/2021 |

| 8 | 27/12/2021 |

| 9 | 26/01/2022 |

I need to filter Table A based on date range of 1/7/2020 to 1/04/2021 as an example. I need to create 30 different tables from Table A as I need to create multiple layers of same data for my purpose. All 30 tables that I need to create have the same step that is Date is >= 1/7/2020 and date is < 1/04/2021. This step is repeating 30 different times and making the file very slow. I need help to get the same results by using custom function so that all 30 queries flow through same step which will hopefully make my file less slower. I have simplified the example above as in reality the other queries are pretty complex, but solving my problem with custom function will help me solve other problems in a much more efficient way.

I understand that there are other ways like filtering Table A First and referencing the query 30 times later, but for my model, it would be really helpful and I would be really grateful if anyone can help me solve the problem by the use of Custom Function(creating a parameter).

Thanks to all community member who have read the above question.

Kind regards,

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

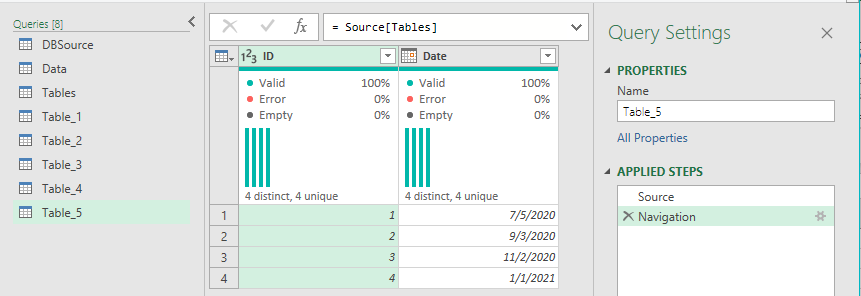

You can do something like this.

First, load your table from the database, and then add your main filter that you want to flow through all queries. Note that I needed to transform the date columns with the locale; you may not need that step:

let

Source = DBSource,

#"Changed Type with Locale" = Table.TransformColumnTypes(Source, {{"Date", type date}}, "en-GB"),

#"Changed Type" = Table.TransformColumnTypes(#"Changed Type with Locale",{{"ID", Int64.Type}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each [Date] >= #date(2020, 1, 7) and [Date] <= #date(2021, 1, 4))

in

#"Filtered Rows"

Now you have this table (I named the query "Data"):

ID Date

| 1 | 7/5/2020 |

| 2 | 9/3/2020 |

| 3 | 11/2/2020 |

| 4 | 1/1/2021 |

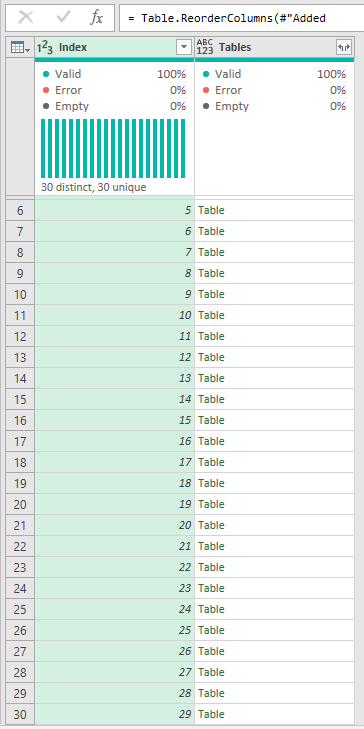

Now you can add another query that will first buffer the filtered table, then repeat the table 30 times, and then split the table into 30 nested tables. Then add an index to the table so you have a way to delineate each table. This Query is names Tables:

let

Source = Data,

BufferTable = Table.Buffer(Source),

RepeatTable = Table.Repeat(BufferTable, 30),

SplitTable = Table.Split(RepeatTable, List.Max(RepeatTable[ID])),

#"Converted to Table" = Table.FromList(SplitTable, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns" = Table.RenameColumns(#"Converted to Table",{{"Column1", "Tables"}}),

#"Added Index" = Table.AddIndexColumn(#"Renamed Columns", "Index", 0, 1, Int64.Type),

#"Reordered Columns" = Table.ReorderColumns(#"Added Index",{"Index", "Tables"})

in

#"Reordered Columns"

Now you have 30 tables, each filtered already via the data table. By buffering the table first, we ensure that that table is not reevaluated for the duration of THIS query--which means that you'll need to make a decision at this point. If you are comfortable working with nested tables, then you can make all of your transforms right in this query, and continue to enjoy the benefit of the single buffered table. If you'd rather work with each table on its own, you can either add new blank queries with the source being:

=Tables{4}//Just the name of the query (Tables) and the row number--Tables{number}

Which is the 5th table in the Tables Query.

You can also just right click on a table in the list and choose "Add as new query."

Again, the buffered table only exists through the duration of the Tables query. Even so, it's probably still going to be a lot faster than 30 separate queries--but that's for you to decide!

---Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You can do something like this.

First, load your table from the database, and then add your main filter that you want to flow through all queries. Note that I needed to transform the date columns with the locale; you may not need that step:

let

Source = DBSource,

#"Changed Type with Locale" = Table.TransformColumnTypes(Source, {{"Date", type date}}, "en-GB"),

#"Changed Type" = Table.TransformColumnTypes(#"Changed Type with Locale",{{"ID", Int64.Type}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each [Date] >= #date(2020, 1, 7) and [Date] <= #date(2021, 1, 4))

in

#"Filtered Rows"

Now you have this table (I named the query "Data"):

ID Date

| 1 | 7/5/2020 |

| 2 | 9/3/2020 |

| 3 | 11/2/2020 |

| 4 | 1/1/2021 |

Now you can add another query that will first buffer the filtered table, then repeat the table 30 times, and then split the table into 30 nested tables. Then add an index to the table so you have a way to delineate each table. This Query is names Tables:

let

Source = Data,

BufferTable = Table.Buffer(Source),

RepeatTable = Table.Repeat(BufferTable, 30),

SplitTable = Table.Split(RepeatTable, List.Max(RepeatTable[ID])),

#"Converted to Table" = Table.FromList(SplitTable, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns" = Table.RenameColumns(#"Converted to Table",{{"Column1", "Tables"}}),

#"Added Index" = Table.AddIndexColumn(#"Renamed Columns", "Index", 0, 1, Int64.Type),

#"Reordered Columns" = Table.ReorderColumns(#"Added Index",{"Index", "Tables"})

in

#"Reordered Columns"

Now you have 30 tables, each filtered already via the data table. By buffering the table first, we ensure that that table is not reevaluated for the duration of THIS query--which means that you'll need to make a decision at this point. If you are comfortable working with nested tables, then you can make all of your transforms right in this query, and continue to enjoy the benefit of the single buffered table. If you'd rather work with each table on its own, you can either add new blank queries with the source being:

=Tables{4}//Just the name of the query (Tables) and the row number--Tables{number}

Which is the 5th table in the Tables Query.

You can also just right click on a table in the list and choose "Add as new query."

Again, the buffered table only exists through the duration of the Tables query. Even so, it's probably still going to be a lot faster than 30 separate queries--but that's for you to decide!

---Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @watkinnc

Sorry for getting back on this one late. I really really appreciate this solution. It meets exactly what I needed.

You're the best.

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why thank you!

--Nate

I’m usually answering from my phone, which means the results are visualized only in my mind. You’ll need to use my answer to know that it works—but it will work!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @watkinnc,

This sounds about exactly what I need. I really appreciate you providing me this solution. I'll test this approach and will let you know by Saturday 12/02.

You totally understood what I needed this for, just using your head which is very very impressive.

After I pass on the date filters, I needed to create those 30 tables for 6 different regions. (5 tables per region). These tables would then be used to interact with user input to perform what ifs, (5 scenarios per region). One refresh after user inputs and we get 5 scenarios for each region, which is then visualized in Power BI. Meaning I would very likely work with each tables on its own after this point as the user input reference for each tables will be different.

Can't wait to try it.

Thank you very much.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @shekhar_shres ,

I detect an XY Problem here.

What are you actually trying to achieve with your report that you think you need to create 30 different tables from the source table?

It sounds to me like you just need to bring your source table into the model and do the rest with DAX.

And, FWIW, I'm not sure a custom function is going to give you any performance gains on referencing a filtered source query 30 times anyway. Power Query will (almost) always make multiple calls to the source, at least one for each query in your list. You can't (generally) shortcut the way that Power Query fundamentally works with a function.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete,

This one is actually in Excel Power Query, and there are some inputs from user side that interacts with 30 table all in a very unique way. If it is unachievable using custom functions then I'll just have to leave it as it is. I also have option to extract all the 30 tables in its own way straight from SQL, but because their are multiple other automated reports that uses the server power already, I decided to have 1 table come from SQL server and all the other transformation in Power Query so that the load remains in user's computer.

I really appreciate your response and going through my question above.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @shekhar_shres ,

Ok. Very difficult to give you the best option for you without fully understanding your exact scenario, but here's my thoughts on what you've provided:

You can still build a data model in Excel and create DAX measures:

I'm assuming you are on Office 365 and/or have the latest version of Excel but, even if you don't, you can get Power Pivot as an ADD-IN. I think this is what you'll need to do if you're worried about making multiple calls to your SQL server.

Using Power Query to generate these 30 tables, no matter how you do it, is in most circumstances going to result in ~30 calls to the server. That how PQ works. If you want to pass the load to the end user, you will need to only bring the source table through from Power Query, then use DAX to create the 30 tables (which could be very resource-intensive on the end user machine) and/or use DAX to create measures against the source table (far less resource-intensive).

As I said before, I can't really imagine a scenario requiring the spawning of 30 new tables from a single source table which couldn't be largely negated using DAX, but without further detail I can't help here.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete,

Thank you for your response again, the reason I needed 30 tables was very complicated to elaborate. All I needed was a way to have those 30 tables in a most efficient way so that I could subsequently transform them individually. I think I may have found the answer from @watkinnc .

Anyways, I really do appreciate your effort to try and help me out. I'll update you of the outcome as well.

Thanks again

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.