- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Counting blocks of consecutive numbers -is it ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Counting blocks of consecutive numbers -is it possible?

Hi,

I don't mind whether this can be done in M or DAX, as long as it is done without other languages like Python (which are not enabled for me). Is it possible at all to get a list of blocks of consecutive numbers (please see the example diagram below)? I have tried for 2 days straight and I'm not getting anywhere. I am trying to get the table like the one on the right (below) from the table on the left.

Thanks

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry @lazarus1907 ,

my bad. Forgot that this technique always compares against the first element in the group and not against the previous.

I'd recommend this approach then instead:

let

// Replace this Source step by a reference to your table

Source = Table.FromRows(

Json.Document(

Binary.Decompress(

Binary.FromText(

"JcqxEQAgCAPAXVJTEBQdhmP/NTxD98VXgTDQHW2FkCkvOeT9zTkpzznynCsnuh8=",

BinaryEncoding.Base64

),

Compression.Deflate

)

),

let

_t = ((type nullable text) meta [Serialized.Text = true])

in

type table [Ref = _t, Code = _t]

),

#"Changed Type" = Table.TransformColumnTypes(Source, {{"Ref", Int64.Type}, {"Code", Int64.Type}}),

ToList = List.Buffer(Table.ToRecords(#"Changed Type")),

AllBucketID = List.Generate(

() => Record.AddField(Record.AddField(ToList{0}, "CountAll", 0), "CountGroup", 0),

each [CountAll] <= List.Count(ToList),

each [

CountAll = [CountAll] + 1,

CountGroup =

if [Code] = ToList{[CountAll]}[Code] - 1 then

[CountGroup]

else

[CountGroup] + 1,

Code = ToList{[CountAll]}[Code],

Ref = ToList{[CountAll]}[Ref]

]

),

SkipInitialElement = List.Skip(AllBucketID),

#"Converted to Table" = Table.FromList(

SkipInitialElement,

Splitter.SplitByNothing(),

null,

null,

ExtraValues.Error

),

#"Expanded Column1" = Table.ExpandRecordColumn(

#"Converted to Table",

"Column1",

{"Ref", "Code", "CountGroup"},

{"Ref", "Code", "CountGroup"}

),

#"Grouped Rows" = Table.Group(

#"Expanded Column1",

{"CountGroup"},

{

{"Ref", each List.Max([Ref]), type number},

{"Code", each List.Max([Code]), type number},

{"Count", each Table.RowCount(_), Int64.Type}

},

GroupKind.Local

),

#"Removed Columns" = Table.RemoveColumns(#"Grouped Rows", {"CountGroup"})

in

#"Removed Columns"

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

using an auxiliary index column, the V element of the table.group function can dig the spider out of the hole.

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("Lc3LDcAwCATRXjjn4AV/a7HcfxtJBt/eSGjZ22SPSWHn2ea44sANV9xxwwN3PPHAC8/P7rmzcO6oEDkkEbkkJ3JKQeSW/udR71kj7lkn7tn/P1rJmIQyFuF2zgs=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Colonna1 = _t, Colonna2 = _t]),

#"Modificato tipo" = Table.TransformColumnTypes(Origine,{{"Colonna2", Int64.Type}}),

aci = Table.AddIndexColumn(#"Modificato tipo", "Indice", 0, 1, Int64.Type),

#"Modificato tipo1" = Table.TransformColumnTypes(aci,{{"Colonna2", Int64.Type}}),

#"Raggruppate righe" = Table.Group(#"Modificato tipo1", {"Colonna2", "Indice"}, {{"last", each Table.Last(_)},{"Conteggio", each Table.RowCount(_), Int64.Type}}, GroupKind.Local,(x,y)=>-x[Colonna2]+y[Colonna2]-(y[Indice]-x[Indice])),

#"Tabella ref espansa" = Table.ExpandRecordColumn(#"Raggruppate righe", "last", {"Colonna1", "Colonna2"}, {"ref", "code"}),

#"Rimosse colonne" = Table.RemoveColumns(#"Tabella ref espansa",{"Colonna2", "Indice"})

in

#"Rimosse colonne"

PS

questa soluzione presuppone che la tabella sia ordina in senso crescente nella colonna Code.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @lazarus1907 ,

I got a simpler solution, but there is also limitations, depends on the complex of your data. You can try the formula to add a new column, this column will help you to identify the same group, then you can group this new column and count the row number. I have added several rows addtionally to test the formula and it seems ok.

Table.AddColumn(

#"Changed Type",

"Custom.3",

each Number.ToText(

List.Max(Table.SelectRows(#"Changed Type", (x) => x[Code] - [Code] = x[Ref] - [Ref])[Code])

)

& Number.ToText(

List.Max(Table.SelectRows(#"Changed Type", (x) => x[Code] - [Code] = x[Ref] - [Ref])[Ref])

)

)

RefCodeCustom.3

| 1 | 113 | 1175 |

| 2 | 114 | 1175 |

| 3 | 115 | 1175 |

| 4 | 116 | 1175 |

| 5 | 117 | 1175 |

| 6 | 204 | 2068 |

| 7 | 205 | 2068 |

| 8 | 206 | 2068 |

| 9 | 558 | 56213 |

| 10 | 559 | 56213 |

| 11 | 560 | 56213 |

| 12 | 561 | 56213 |

| 13 | 562 | 56213 |

| 14 | 601 | 60215 |

| 15 | 602 | 60215 |

| 16 | 115 | 11516 |

| 17 | 114 | 11417 |

| 18 | 113 | 11520 |

| 19 | 113 | 11319 |

| 20 | 115 | 11520 |

| 21 | 117 | 11721 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @lazarus1907

You can also try my coding solution, which can handle about 12,000 lines of data.

// output

let

Source = Table.FromRecords(Json.Document(Binary.Decompress(Binary.FromText("Tc+xDYAwDAXRXVJTJA42hJYNaBEdoWUAxO5QEB+d9WSd9NcrLPUIU+rCfO71PVK+uw8F7B0zqI49aI4KDo7WUCLNAaQ5gjRLQ9XRMUW0oL5JLaKCJjSjgvosi79fReXeHg==", BinaryEncoding.Base64),Compression.Deflate))),

rows = Table.ToRows(Source)&{{}},

acc = List.Accumulate(

rows,

{{}, {}, 0},

(s,c)=>if s{0}={} then {c&{1}, s{1}, 1}

else if s{0}{1}+1=c{1}? then {c&{s{2}+1}, s{1}, s{2}+1}

else {c&{1}, s{1}&{s{0}}, 1}

){1},

result = Table.FromRows(acc, {"Ref", "Code", "Count"})

in

result- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @lazarus1907

You could also try this approach:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("Lc3BDQAhCETRXjh7AFxQazH238bKjLf3wyTsLSZNzLqctsXhD+5wwB+ccMADzmtX7gfM/YS5X9cREzZFLEZ9jlSGI4zREc6o56nvEoh3qfejLucH", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Ref = _t, Code = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source, {{"Ref", Int64.Type}, {"Code", Int64.Type}}),

// Get codes and find the last consecutive one, counting consecutives in the process:

#"Codes and Counts List" = List.Accumulate(List.Sort(#"Changed Type"[Code]), {},

(s, c) =>

if ((c - List.Last(s){0}?)??1) <= 1

then List.RemoveLastN(s, 1) & {{c, ((List.Last(s){1}?)??0) + 1}}

else s & {{c, 1}}),

#"Codes and Counts to Table" = Table.FromList(#"Codes and Counts List", (l) => {l{0}, l{1}}, {"Code", "Count"}),

// Keep only the last of the consecutive codes

#"Keep Last Codes" = Table.Join(#"Changed Type", {"Code"}, #"Codes and Counts to Table", {"Code"}, JoinKind.Inner)

in

#"Keep Last Codes"

Best,

Spyros

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

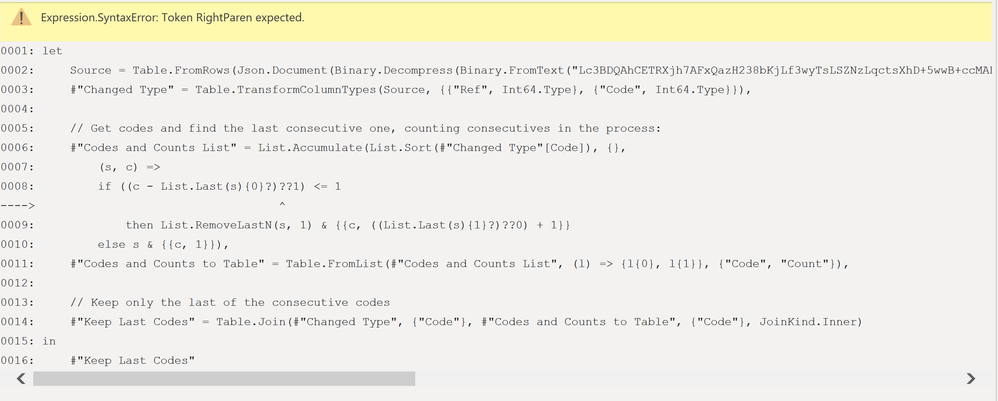

Interesting code! However, I get the error "Token RightParen expected". Could you fix it for me?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The version we have to use at work is from October 2019, and I'm definitely getting an error (please see below). Is that double question mark a new feature? I'm not familiar with it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@lazarus1907 thought so, no worries.

?? is a null coalescing operator: a shorthand for "if x = null then y else x"

Try this one:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("Lc3BDQAhCETRXjh7AFxQazH238bKjLf3wyTsLSZNzLqctsXhD+5wwB+ccMADzmtX7gfM/YS5X9cREzZFLEZ9jlSGI4zREc6o56nvEoh3qfejLucH", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Ref = _t, Code = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source, {{"Ref", Int64.Type}, {"Code", Int64.Type}}),

// NullCheck = helper function for dealing with nulls

NullCheck = (check as any, return as any) as any => if check = null then return else check,

// Get codes and find the last consecutive one, counting consecutives in the process:

#"Codes and Counts List" = List.Accumulate(List.Sort(#"Changed Type"[Code]), {},

(s, c) =>

if NullCheck((c - List.Last(s){0}?), 1) <= 1

then List.RemoveLastN(s, 1) & {{c, NullCheck((List.Last(s){1}?), 0) + 1}}

else s & {{c, 1}}),

#"Codes and Counts to Table" = Table.FromList(#"Codes and Counts List", (l) => {l{0}, l{1}}, {"Code", "Count"}),

// Keep only the last of the consecutive codes

#"Keep Last Codes" = Table.Join(#"Changed Type", {"Code"}, #"Codes and Counts to Table", {"Code"}, JoinKind.Inner)

in

#"Keep Last Codes"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

using an auxiliary index column, the V element of the table.group function can dig the spider out of the hole.

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("Lc3LDcAwCATRXjjn4AV/a7HcfxtJBt/eSGjZ22SPSWHn2ea44sANV9xxwwN3PPHAC8/P7rmzcO6oEDkkEbkkJ3JKQeSW/udR71kj7lkn7tn/P1rJmIQyFuF2zgs=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Colonna1 = _t, Colonna2 = _t]),

#"Modificato tipo" = Table.TransformColumnTypes(Origine,{{"Colonna2", Int64.Type}}),

aci = Table.AddIndexColumn(#"Modificato tipo", "Indice", 0, 1, Int64.Type),

#"Modificato tipo1" = Table.TransformColumnTypes(aci,{{"Colonna2", Int64.Type}}),

#"Raggruppate righe" = Table.Group(#"Modificato tipo1", {"Colonna2", "Indice"}, {{"last", each Table.Last(_)},{"Conteggio", each Table.RowCount(_), Int64.Type}}, GroupKind.Local,(x,y)=>-x[Colonna2]+y[Colonna2]-(y[Indice]-x[Indice])),

#"Tabella ref espansa" = Table.ExpandRecordColumn(#"Raggruppate righe", "last", {"Colonna1", "Colonna2"}, {"ref", "code"}),

#"Rimosse colonne" = Table.RemoveColumns(#"Tabella ref espansa",{"Colonna2", "Indice"})

in

#"Rimosse colonne"

PS

questa soluzione presuppone che la tabella sia ordina in senso crescente nella colonna Code.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

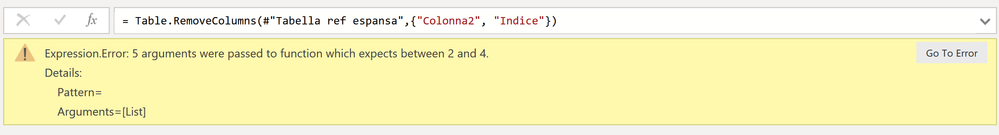

@Anonymous

Thanks for your code. For some reason, I'm getting an error. Could it be because my version is from October 2019?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

you could also try using Table.FuzzyGroup on your Code column 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

a solution (?) using only the mouse, without any "esoteric" function

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("Lc3LDcAwCATRXjjn4AV/a7HcfxtJBt/eSGjZ22SPSWHn2ea44sANV9xxwwN3PPHAC8/P7rmzcO6oEDkkEbkkJ3JKQeSW/udR71kj7lkn7tn/P1rJmIQyFuF2zgs=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Colonna1 = _t, Colonna2 = _t]),

#"Modificato tipo" = Table.TransformColumnTypes(Origine,{{"Colonna2", Int64.Type}, {"Colonna1", Int64.Type}}),

aci = Table.AddIndexColumn(#"Modificato tipo", "Indice", 1, 1, Int64.Type),

#"Aggiunta colonna personalizzata" = Table.AddColumn(aci, "diff", each [Colonna2]-[Indice]),

#"Raggruppate righe" = Table.Group(#"Aggiunta colonna personalizzata", {"diff"}, {{"Conteggio", each Table.RowCount(_), Int64.Type}, {"code", each List.Max([Colonna2]), type nullable number}, {"ref", each List.Max([Colonna1]), type nullable text}})

in

#"Raggruppate righe"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AnonymousI just had to remove some "Int64.type" which my version doesn't like, and both of your attemps work like pure magic! Amazing!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @lazarus1907 ,

have you tried the third method I suggested?

I'm curious to know if it is useful for you.

Certainly it depends on the specific content of the column of consecutive ids to be grouped, but perhaps with the appropriate calibrations it could be a third way ...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oh, I tried both and I found them incredibly useful. Now I am doing things I didn't know I could do before, and yes, I sorted out my original problem at work. Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

"both" means two, but I give three suggestions.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AnonymousIf you are referring to the "fuzzy" option, I'm planning to check it at some point, but I'm fairly happy with any of the other two for the moment.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @lazarus1907 ,

there is a magical 5th parameter in the Table.Group function that allows for just this kind of logic:

let

// Replace this Source step by a reference to your table

Source = Table.FromRows(

Json.Document(

Binary.Decompress(

Binary.FromText(

"i45WMlTSUTI0MFCK1YlWMgKzDcFsYzDbGMw2AbNNlGJjAQ==",

BinaryEncoding.Base64

),

Compression.Deflate

)

),

let

_t = ((type nullable text) meta [Serialized.Text = true])

in

type table [Ref = _t, Code = _t]

),

#"Changed Type" = Table.TransformColumnTypes(Source, {{"Ref", Int64.Type}, {"Code", Int64.Type}}),

#"Grouped Rows" = Table.Group(

#"Changed Type",

{"Code"},

{{"Ref", each List.Max([Ref]), type nullable number}, {"Count", each List.Count(_)}},

// Don't skip the following parameter, as the logic would break otherwise:

GroupKind.Local,

// This is where the magic happens:

// The difference between the current Code value and the previous will be calculated and and a new group will be created if it is not equal to 1 (non consecutive)

(x, y) => Number.From(y[Code] - x[Code] <> 1)

)

in

#"Grouped Rows"

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Brilliant answer, Imke, thanks a lot! Something is not quite right with your code, because I never get groups larget than 2, but I was unaware of these options, so maybe I can find the solution myself and learn in the process. I'll check your site carefully -it's not the first time I find useful stuff there!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry @lazarus1907 ,

my bad. Forgot that this technique always compares against the first element in the group and not against the previous.

I'd recommend this approach then instead:

let

// Replace this Source step by a reference to your table

Source = Table.FromRows(

Json.Document(

Binary.Decompress(

Binary.FromText(

"JcqxEQAgCAPAXVJTEBQdhmP/NTxD98VXgTDQHW2FkCkvOeT9zTkpzznynCsnuh8=",

BinaryEncoding.Base64

),

Compression.Deflate

)

),

let

_t = ((type nullable text) meta [Serialized.Text = true])

in

type table [Ref = _t, Code = _t]

),

#"Changed Type" = Table.TransformColumnTypes(Source, {{"Ref", Int64.Type}, {"Code", Int64.Type}}),

ToList = List.Buffer(Table.ToRecords(#"Changed Type")),

AllBucketID = List.Generate(

() => Record.AddField(Record.AddField(ToList{0}, "CountAll", 0), "CountGroup", 0),

each [CountAll] <= List.Count(ToList),

each [

CountAll = [CountAll] + 1,

CountGroup =

if [Code] = ToList{[CountAll]}[Code] - 1 then

[CountGroup]

else

[CountGroup] + 1,

Code = ToList{[CountAll]}[Code],

Ref = ToList{[CountAll]}[Ref]

]

),

SkipInitialElement = List.Skip(AllBucketID),

#"Converted to Table" = Table.FromList(

SkipInitialElement,

Splitter.SplitByNothing(),

null,

null,

ExtraValues.Error

),

#"Expanded Column1" = Table.ExpandRecordColumn(

#"Converted to Table",

"Column1",

{"Ref", "Code", "CountGroup"},

{"Ref", "Code", "CountGroup"}

),

#"Grouped Rows" = Table.Group(

#"Expanded Column1",

{"CountGroup"},

{

{"Ref", each List.Max([Ref]), type number},

{"Code", each List.Max([Code]), type number},

{"Count", each Table.RowCount(_), Int64.Type}

},

GroupKind.Local

),

#"Removed Columns" = Table.RemoveColumns(#"Grouped Rows", {"CountGroup"})

in

#"Removed Columns"

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.