- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Column values change when loading into model

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Column values change when loading into model

Hi all,

See attached file for support.

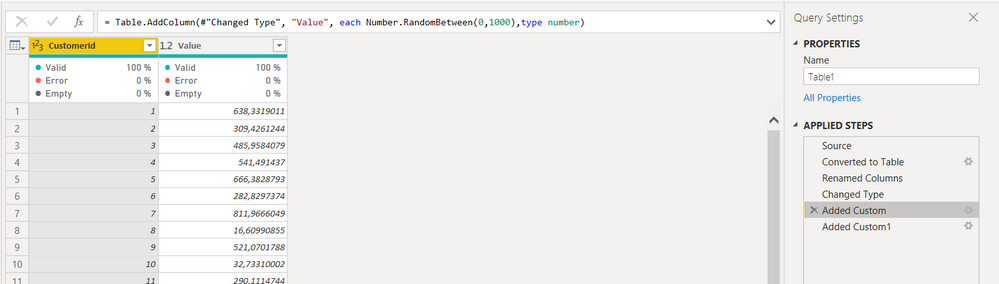

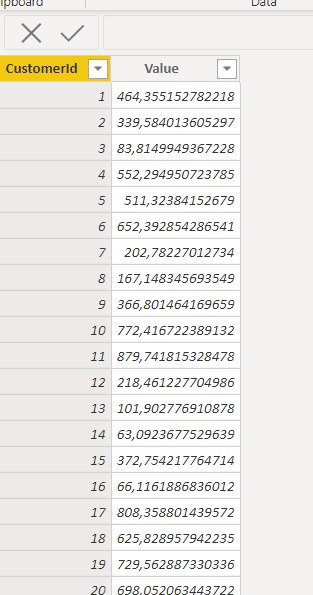

We build a simple table in the query editor (M code below). Note we are creating a custom column "Value" with randomly generated numbers. In the query editor, the final result shows a different value for each row:

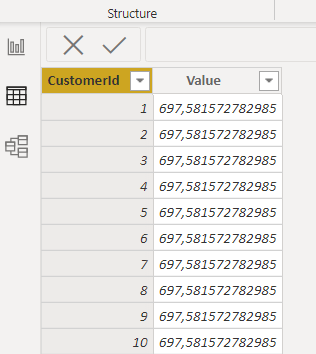

However, after loading the table into the model, column Value shows a unique value:

Any idea what is going on?

Many thanks

let

Source = List.Numbers(1, NumCustomers),

#"Converted to Table" = Table.FromList(Source, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns" = Table.RenameColumns(#"Converted to Table",{{"Column1", "CustomerId"}}),

#"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns",{{"CustomerId", Int64.Type}}),

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each Number.RandomBetween(0,1000), type number)

in

#"Added Custom"

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlB

I'll try to explain a little bit.

No matter the queries we write, in the end we have the PQ Engine to do the work for us. In order to make this engine fast enough, there have been written a whole lot of optimisations. So, it will do some steps together, it will rid of some all the way because they are deemed not needed, etc. That's why it's a functional language: it starts from the result and works its way up using only parts that it is actually going to need. And this is probably why the "DataMashup" has the Mashup in its name.

Some of these optimisations give birth to "bugs" like the one you came accross.

Using:

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each Number.RandomBetween(0,1000), type number),

The PQe will "understand" that the row values are not needed: We ask for a number, we do not make use of any row value, and then return it. So, when mashing this thing up, it will actually compute Number.RandomBetween(0,1000) once and then add it in every row.

It is a bit smarter than that of course, we cannot "trick" it to use the row values if they're not needed:

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each Number.RandomBetween(0,1000) + [CustomerId] - [CustomerId], type number),

will also never use the rows' values.

To actually see this happening in PQ Editor one can use a Transformer function:

Table.TransformColumns(#"Added Custom",{{"Value", each Number.RandomBetween(0,1000), type number}})

with which every row should be filled by the same number.

OK, now we need to actually force it to calculate this thing for every row. And this is why Index or Buffer are commonly used:

i) By indexing after the random number generation, the PQe actually has to go through every row, look at its values, save their position and values and then go on.

ii) By buffering, it loads the whole thing into memory, so it actually computes the random numbers once again for every row, but also keeping all that.

There's another solution not really talked about, which is for me the fastest one I know of: Replacing. Table.ReplaceValues actually reads every row and discards it, being a bit faster than indexing. These two steps should work as expected:

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each null, type number),

#"Replaced Value" = Table.ReplaceValue(#"Added Custom",null,each Number.RandomBetween(0, 1000),Replacer.ReplaceValue,{"Value"})

Last, you can avoid all that by using List.Random, which is actually made to compute different random numbers. However, afterwards you'll need to zip it with your data and expand etc etc, or add an index and transform the index to the corresponding list's random value. All that taking some valueable calculation time. But take those two steps:

#"Zip with List Random" = List.Zip({#"Changed Type"[CustomerId], List.Transform(List.Random(List.Count(#"Changed Type"[CustomerId])), each Value.Multiply(_, 1000))}),

#"List to Table" = Table.FromList(#"Zip with List Random", (x) => x, {"CustomerId", "Value"})

in

#"List to Table"

Hope it makes some sense now.

Best,

Spyros

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlB

I'm glad you found this helpful. I've read a lot about how the mechanics work, but this documentation is found mostly on forums, by reading responses by people actually involved in the engine's developement (I am not one of them 🙂 ). There's a good series by Ben Gribaudo about M, and it does meddle a little bit with these topics (lazy evaluation) but not so much.

OK, now to explaining this last bit. The query preview actually computes some things and has its own buffer, which is the one helping us to see if what we're doing actually works. When we're previewing the results, they are buffered in the DataMashup so we can see and work with them, we can see how each step is computed by clicking on them etc. (btw this is why a good optimisation when working with large data models is disabling background preview).

In a way, the preview is a bit less lazy (less optimisations in our case) than the actual query plan, in regards to trying to put the steps in order, letting you look at these steps etc. So, Number.RandomBetween(0,1000) gets evaluated and shown.

However, when the queries are applied, the queries are not looked at individually but get reevaluated all together, compressed and put into the Tabular model's Storage Engine (VertiPaq). The preview's results are not used. These optimisations are a lot better - we see that sometimes while working with very complicated queries taking about the same time for 1000 rows (preview) and for the actual evaluation. One such optimisation is assuming idempotence for every function: given the same arguments the function will return the same result. With this, Number.RandomBetween(0,1000) is evaluated once and the result is subsequentl added in every row.

Of course, with static data we see no difference, but with random data we do.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Smauro

First, of all, thanks very much for taking the time to put together such a fantastic, detailed and extensive explanation. I really appreciate it.

I understand the whole idea but still something bugs me. In the example I provided, the engine seems to calculate already one different random value for each row, since it is showing them. It is only when loading into the model that it seems to calculate again and, that time, with one same value for all rows. This doesn't seem to make much sense. Why is it, apparently, calculating the values twice? I mean, following what you explained, I would expect it to, in the last PQ step, optimize by just calculating one random value and showing that only random value in all rows and then loading that same unique value into the model. Showing different values first and then loading only one seems incoherent.

What do you think?

By the way, are you or have you been involved with the development of the PQ engine?

Many thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlB

I'm glad you found this helpful. I've read a lot about how the mechanics work, but this documentation is found mostly on forums, by reading responses by people actually involved in the engine's developement (I am not one of them 🙂 ). There's a good series by Ben Gribaudo about M, and it does meddle a little bit with these topics (lazy evaluation) but not so much.

OK, now to explaining this last bit. The query preview actually computes some things and has its own buffer, which is the one helping us to see if what we're doing actually works. When we're previewing the results, they are buffered in the DataMashup so we can see and work with them, we can see how each step is computed by clicking on them etc. (btw this is why a good optimisation when working with large data models is disabling background preview).

In a way, the preview is a bit less lazy (less optimisations in our case) than the actual query plan, in regards to trying to put the steps in order, letting you look at these steps etc. So, Number.RandomBetween(0,1000) gets evaluated and shown.

However, when the queries are applied, the queries are not looked at individually but get reevaluated all together, compressed and put into the Tabular model's Storage Engine (VertiPaq). The preview's results are not used. These optimisations are a lot better - we see that sometimes while working with very complicated queries taking about the same time for 1000 rows (preview) and for the actual evaluation. One such optimisation is assuming idempotence for every function: given the same arguments the function will return the same result. With this, Number.RandomBetween(0,1000) is evaluated once and the result is subsequentl added in every row.

Of course, with static data we see no difference, but with random data we do.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlB

I'll try to explain a little bit.

No matter the queries we write, in the end we have the PQ Engine to do the work for us. In order to make this engine fast enough, there have been written a whole lot of optimisations. So, it will do some steps together, it will rid of some all the way because they are deemed not needed, etc. That's why it's a functional language: it starts from the result and works its way up using only parts that it is actually going to need. And this is probably why the "DataMashup" has the Mashup in its name.

Some of these optimisations give birth to "bugs" like the one you came accross.

Using:

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each Number.RandomBetween(0,1000), type number),

The PQe will "understand" that the row values are not needed: We ask for a number, we do not make use of any row value, and then return it. So, when mashing this thing up, it will actually compute Number.RandomBetween(0,1000) once and then add it in every row.

It is a bit smarter than that of course, we cannot "trick" it to use the row values if they're not needed:

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each Number.RandomBetween(0,1000) + [CustomerId] - [CustomerId], type number),

will also never use the rows' values.

To actually see this happening in PQ Editor one can use a Transformer function:

Table.TransformColumns(#"Added Custom",{{"Value", each Number.RandomBetween(0,1000), type number}})

with which every row should be filled by the same number.

OK, now we need to actually force it to calculate this thing for every row. And this is why Index or Buffer are commonly used:

i) By indexing after the random number generation, the PQe actually has to go through every row, look at its values, save their position and values and then go on.

ii) By buffering, it loads the whole thing into memory, so it actually computes the random numbers once again for every row, but also keeping all that.

There's another solution not really talked about, which is for me the fastest one I know of: Replacing. Table.ReplaceValues actually reads every row and discards it, being a bit faster than indexing. These two steps should work as expected:

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each null, type number),

#"Replaced Value" = Table.ReplaceValue(#"Added Custom",null,each Number.RandomBetween(0, 1000),Replacer.ReplaceValue,{"Value"})

Last, you can avoid all that by using List.Random, which is actually made to compute different random numbers. However, afterwards you'll need to zip it with your data and expand etc etc, or add an index and transform the index to the corresponding list's random value. All that taking some valueable calculation time. But take those two steps:

#"Zip with List Random" = List.Zip({#"Changed Type"[CustomerId], List.Transform(List.Random(List.Count(#"Changed Type"[CustomerId])), each Value.Multiply(_, 1000))}),

#"List to Table" = Table.FromList(#"Zip with List Random", (x) => x, {"CustomerId", "Value"})

in

#"List to Table"

Hope it makes some sense now.

Best,

Spyros

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Many thanks for the link. Very interesting

Any idea why there's a need to include an index column. Why does it work differently with or without the index, since we are not doing anything with it?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @AlB

To create multiple columns containing random numbers, add an index column each time before using the Number.Random(). You can delete the columns later.

You can also wrap the step before the Number.Random() step with Table.Buffer() to generate different number on each row. For example, if you added a custom column in the step previous to adding random number column, your step would look like Table.Buffer(Table.AddColumn(...)). In this case you will not need to add an index column.

For further information, I'd like to suggest you refer to the following article.

Random Number Generation in Power BI

Best Regards

Allan

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. Yes, I'd seen that with the extra steps. I'd like to know the reason for that behaviour though. It seeems quite strange (and inconsistent) that the values it shows on the column depend on whether you have more steps afterwards, or what kind of steps you have afterwards.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlB ,

It appears that adding the index column after adding the custom column is what forces PQ to evaluate each row separately from that point onwards (including the load in PBI).

Without that Index column you would get the same behaviour in PQ editor if you continued on working there. See below:

Adding any extra step:

Regards,

Kudos are another nice way to acknowledge those who tried to help you.

J. Payeras

Mallorca, Spain

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlB ,

It seems to need the creation of an Index Column to work.

let

Source = List.Numbers(1,NumCustomers),

#"Converted to Table" = Table.FromList(Source, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns" = Table.RenameColumns(#"Converted to Table",{{"Column1", "CustomerId"}}),

#"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns",{{"CustomerId", Int64.Type}}),

#"Added Custom" = Table.AddColumn(#"Changed Type", "Value", each Number.RandomBetween(0,1000), type number),

#"Added Index" = Table.AddIndexColumn(#"Added Custom", "Index", 0, 1, Int64.Type),

#"Removed Columns" = Table.RemoveColumns(#"Added Index",{"Index"})

in

#"Removed Columns"

Regards,

Kudos are another nice way to acknowledge those who tried to help you.

J. Payeras

Mallorca, Spain

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.